Comparing meta-analyses and preregistered multiple-laboratory replication projects.

Nature Human Behaviour (IF 21.4), Pub Date: 2019-12-23, DOI: 10.1038/s41562-019-0787-z

Amanda Kvarven, Eirik Strømland, Magnus Johannesson

Nature Human Behaviour ( IF 21.4 ) Pub Date : 2019-12-23 , DOI: 10.1038/s41562-019-0787-z Amanda Kvarven 1 , Eirik Strømland 1 , Magnus Johannesson 2

Affiliation

|

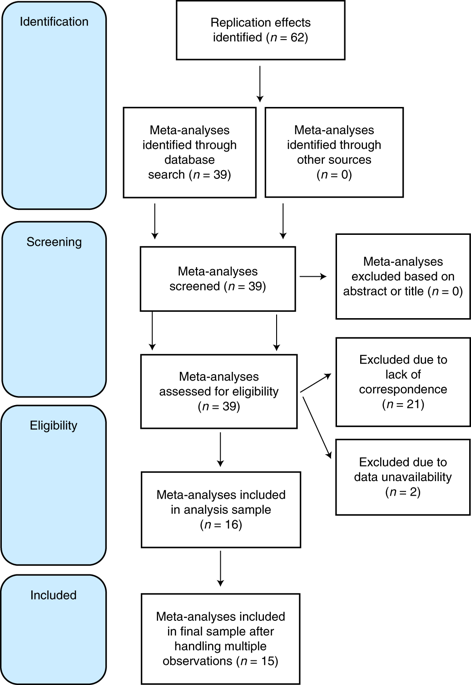

Many researchers rely on meta-analysis to summarize research evidence. However, there is a concern that publication bias and selective reporting may lead to biased meta-analytic effect sizes. We compare the results of meta-analyses to large-scale preregistered replications in psychology carried out at multiple laboratories. The multiple-laboratory replications provide precisely estimated effect sizes that do not suffer from publication bias or selective reporting. We searched the literature and identified 15 meta-analyses on the same topics as multiple-laboratory replications. We find that meta-analytic effect sizes are significantly different from replication effect sizes for 12 out of the 15 meta-replication pairs. These differences are systematic and, on average, meta-analytic effect sizes are almost three times as large as replication effect sizes. We also implement three methods of correcting meta-analysis for bias, but these methods do not substantively improve the meta-analytic results.

Updated: 2019-12-23
