Testing the face validity and inter-rater agreement of a simple approach to drug-drug interaction evidence assessment.

Journal of Biomedical Informatics (IF 4.0), Pub Date: 2019-12-12, DOI: 10.1016/j.jbi.2019.103355

Amy J Grizzle, Lisa E Hines, Daniel C Malone, Olga Kravchenko, Harry Hochheiser, Richard D Boyce

Journal of Biomedical informatics ( IF 4.0 ) Pub Date : 2019-12-12 , DOI: 10.1016/j.jbi.2019.103355 Amy J Grizzle 1 , Lisa E Hines 2 , Daniel C Malone 3 , Olga Kravchenko 4 , Harry Hochheiser 5 , Richard D Boyce 4

Affiliation

|

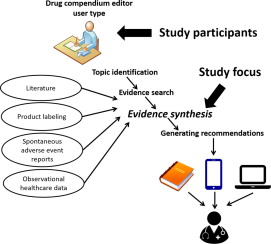

Low concordance between drug-drug interaction (DDI) knowledge bases is a well-documented concern. One potential cause of this inconsistency is variability among drug experts in how they assess evidence about potential DDIs. In this study, we examined the face validity and inter-rater reliability of a novel DDI evidence evaluation instrument designed to be simple and easy to use.

METHODS

A convenience sample of participants with professional experience evaluating DDI evidence was recruited. Participants independently evaluated pre-selected evidence items for 5 drug pairs using the new instrument. For each drug pair, participants labeled each evidence item as sufficient or insufficient to establish the existence of a DDI based on the evidence categories provided by the instrument. Participants also decided whether the overall body of evidence supported a DDI involving the drug pair. Agreement was computed at both the evidence item and drug pair levels. A cut-off of ≥ 70% was chosen as the threshold for percent agreement, while a coefficient > 0.6 was used as the cut-off for chance-corrected agreement. Open-ended comments were collected and coded to identify themes related to the participants' experience using the novel approach.
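The percent-agreement check described above can be illustrated with a minimal sketch. The abstract does not say how percent agreement was defined across multiple raters, so this sketch assumes the share of raters choosing the most common label for an item. The function names and the example ratings are hypothetical and are not taken from the study.

```python
from collections import Counter

# The ">= 70%" cut-off stated in the abstract.
AGREEMENT_THRESHOLD = 0.70


def modal_agreement(labels):
    """Share of raters who chose the most common label for one item.

    Assumption: this is one plausible definition of percent agreement;
    the abstract does not specify the one the authors used.
    """
    counts = Counter(labels)
    return max(counts.values()) / len(labels)


def items_below_threshold(ratings, threshold=AGREEMENT_THRESHOLD):
    """Return the ids of items whose modal agreement is below the threshold."""
    return [item for item, labels in ratings.items()
            if modal_agreement(labels) < threshold]


# Hypothetical ratings from 14 raters ("S" = sufficient, "I" = insufficient).
example_ratings = {
    "item_a": ["S"] * 12 + ["I"] * 2,  # 12 of 14 raters agree
    "item_b": ["S"] * 8 + ["I"] * 6,   # 8 of 14 raters agree
}

low_agreement_items = items_below_threshold(example_ratings)
```

Under this assumed definition, `item_b` falls below the 70% threshold and `item_a` does not.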

RESULTS

The face validity of the new instrument was established by two rounds of evaluation involving a total of 6 experts. Fifteen experts agreed to participate in the reliability assessment, and 14 completed the study. Participant agreement on the sufficiency of 22 of the 34 evidence items (65%) did not exceed the a priori agreement threshold. Similarly, agreement on the sufficiency of evidence for 3 of the 5 drug pairs (60%) was poor. Chance-corrected agreement at the drug pair level further confirmed the poor inter-rater reliability of the instrument (Gwet's AC1 = 0.24, Conger's Kappa = 0.24). Participant comments suggested several possible reasons for the disagreements, including unaddressed subjectivity in assessing an evidence item's type and study design, an infeasible separation of evidence evaluation from the consideration of clinical relevance, and potential issues related to the evaluation of DDI case reports.
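For readers unfamiliar with the chance-corrected statistic reported above, the following is a minimal sketch of Gwet's AC1 for multiple raters, using the standard published formula. It is not the authors' analysis code, and the input counts are hypothetical. It assumes every subject was rated by at least two raters and that there are at least two categories.

```python
def gwet_ac1(counts):
    """Gwet's AC1 for multiple raters.

    counts[i][k] is the number of raters who assigned subject i to
    category k. Assumes each subject has at least 2 ratings and that
    there are at least 2 categories.
    """
    n_subjects = len(counts)
    n_categories = len(counts[0])

    observed = 0.0                      # running sum for observed agreement
    prevalence = [0.0] * n_categories   # running sums for category shares

    for row in counts:
        raters = sum(row)
        # Proportion of agreeing rater pairs for this subject.
        observed += sum(c * (c - 1) for c in row) / (raters * (raters - 1))
        for k, c in enumerate(row):
            prevalence[k] += c / raters

    observed /= n_subjects
    prevalence = [p / n_subjects for p in prevalence]

    # Chance agreement as defined for AC1.
    chance = sum(p * (1 - p) for p in prevalence) / (n_categories - 1)
    return (observed - chance) / (1 - chance)


# Hypothetical counts for two subjects and 14 raters,
# columns = (sufficient, insufficient).
perfect = gwet_ac1([[14, 0], [0, 14]])   # all raters agree on each subject
split = gwet_ac1([[7, 7], [7, 7]])       # raters evenly divided
```

With these hypothetical inputs, complete agreement yields a coefficient of 1, while an even split yields a value slightly below 0. The abstract's cut-off of > 0.6 would be applied to the returned coefficient.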

CONCLUSIONS

Even though the key findings were negative, the study's results shed light on how experts approach DDI evidence assessment, including the importance of situating evidence assessment within the context of clinical relevance. Analysis of participant comments within the context of the negative findings identified several promising future research directions, including: novel computer-based support for evidence assessment; formal evaluation of a more comprehensive evidence assessment approach that requires consideration of specific, explicitly stated clinical consequences; and more formal investigation of DDI case report assessment instruments.

Updated: 2019-12-13
