Nature Electronics (IF 33.7), Pub Date: 2019-11-18, DOI: 10.1038/s41928-019-0321-3

Kai Ni, Xunzhao Yin, Ann Franchesca Laguna, Siddharth Joshi, Stefan Dünkel, Martin Trentzsch, Johannes Müller, Sven Beyer, Michael Niemier, Xiaobo Sharon Hu, Suman Datta

|

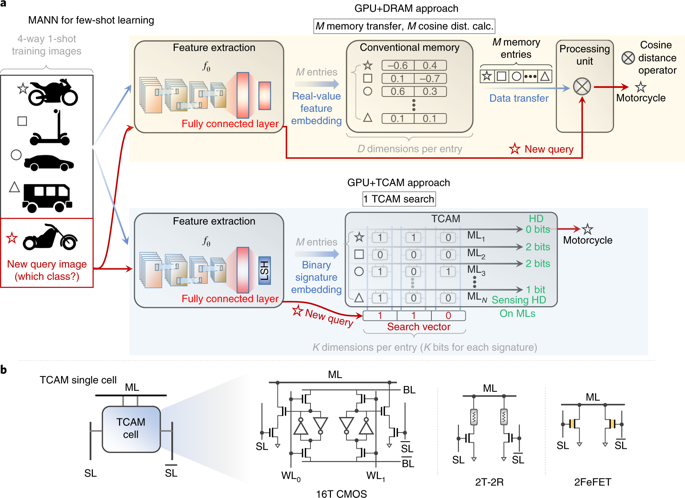

Deep neural networks are efficient at learning from large sets of labelled data, but struggle to adapt to previously unseen data. In pursuit of generalized artificial intelligence, one approach is to augment neural networks with an attentional memory so that they can draw on already learnt knowledge patterns and adapt to new but similar tasks. In current implementations of such memory augmented neural networks (MANNs), the content of a network’s memory is typically transferred from the memory to the compute unit (a central processing unit or graphics processing unit) to calculate similarity or distance norms. The processing unit hardware incurs substantial energy and latency penalties associated with transferring the data from the memory and updating the data at random memory addresses. Here, we show that ternary content-addressable memories (TCAMs) can be used as attentional memories, in which the distance between a query vector and each stored entry is computed within the memory itself, thus avoiding data transfer. Our compact and energy-efficient TCAM cell is based on two ferroelectric field-effect transistors. We evaluate the performance of our ferroelectric TCAM array prototype for one- and few-shot learning applications. When compared with a MANN where cosine distance calculations are performed on a graphics processing unit, the ferroelectric TCAM approach provides a 60-fold reduction in energy and 2,700-fold reduction in latency for a single memory search operation.
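The in-memory search described in the abstract can be illustrated with a small software model. This is a minimal sketch, not the authors' implementation. It assumes the distance is a Hamming distance over binary query bits, which the abstract does not state, and it represents a "don't care" cell as `None`. The names `hamming_distance` and `tcam_search`, and the stored patterns and labels, are hypothetical. A hardware TCAM compares every row in parallel inside the array, whereas this model loops over rows one at a time.

```python
from typing import List, Optional, Sequence, Tuple

# A stored cell holds 0, 1, or None. None models the ternary "don't care" state
# (an assumption made for this sketch).
Ternary = Optional[int]


def hamming_distance(query: Sequence[int], entry: Sequence[Ternary]) -> int:
    """Count mismatching bit positions; a don't-care cell matches any query bit."""
    if len(query) != len(entry):
        raise ValueError("query and entry must have the same length")
    return sum(1 for q, e in zip(query, entry) if e is not None and q != e)


def tcam_search(
    query: Sequence[int], memory: List[Sequence[Ternary]]
) -> Tuple[int, int]:
    """Return (row index, distance) of the stored entry closest to the query.

    Ties are resolved in favour of the lowest row index.
    """
    if not memory:
        raise ValueError("memory must contain at least one entry")
    distances = [hamming_distance(query, row) for row in memory]
    best = min(range(len(memory)), key=distances.__getitem__)
    return best, distances[best]


# Hypothetical memory: one stored signature per class seen so far.
memory = [
    [1, 0, 1, 1, None, 0],
    [0, 0, 1, None, 1, 1],
    [1, 1, 0, 0, 0, None],
]
labels = ["class A", "class B", "class C"]

query = [1, 0, 1, 0, 1, 0]
index, distance = tcam_search(query, memory)
print(labels[index], distance)
```

In a one-shot setting, the label attached to the nearest stored row would serve as the prediction for the query, so only the query travels to the memory and only the match result travels back.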

Title: Ferroelectric ternary content-addressable memory for one-shot learning

