Journal of Biomedical Informatics (IF 4.5), Pub Date: 2024-01-30, DOI: 10.1016/j.jbi.2024.104600
Jacqueline Michelle Metsch, Anna Saranti, Alessa Angerschmid, Bastian Pfeifer, Vanessa Klemt, Andreas Holzinger, Anne-Christin Hauschild

Background:

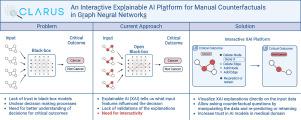

Lack of trust in artificial intelligence (AI) models in medicine remains the key obstacle to the use of AI in clinical decision support systems (CDSS). Although AI models already perform excellently in systems medicine, their black-box nature means that patient-specific decisions are incomprehensible to the physician. Explainable AI (XAI) algorithms aim to “explain” to a human domain expert which input features influenced a specific recommendation. However, in the clinical domain, these explanations must lead to some degree of causal understanding by a clinician.

Results:

We developed the CLARUS platform to promote human understanding of graph neural network (GNN) predictions. CLARUS enables the visualisation of patient-specific networks, as well as relevance values for genes and interactions computed by XAI methods such as GNNExplainer. This enables domain experts to gain deeper insights into the network and, more importantly, to interactively alter the patient-specific network based on the acquired understanding and then initiate re-prediction or retraining. This interactivity allows us to ask manual counterfactual questions and analyse their effects on the GNN prediction.
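
As a rough illustration of the alter-and-re-predict loop, the sketch below uses a toy, hypothetical one-layer message-passing model with fixed, made-up weights (`W_SELF`, `W_NEIGH`, `BIAS`) and illustrative gene values; it is not CLARUS code or its trained GNN. The per-interaction relevance is computed by simple occlusion (delete one edge, measure the drop in predicted probability), a deliberately simpler swapped-in technique: GNNExplainer instead learns a soft mask over edges by optimisation.

```python
import math

# Hypothetical patient-specific network: gene -> expression value (illustrative numbers).
# Undirected interactions are frozensets, so ("A", "B") and ("B", "A") are the same edge.
features = {"TP53": 1.2, "MDM2": -0.4, "EGFR": 0.8, "KRAS": 0.1}
edges = {
    frozenset(("TP53", "MDM2")),
    frozenset(("EGFR", "KRAS")),
    frozenset(("TP53", "EGFR")),
}

# Hypothetical fixed parameters standing in for an already trained GNN.
W_SELF, W_NEIGH, BIAS = 0.9, 0.5, -0.2


def neighbours(node, edge_set):
    """Neighbours of `node` in an undirected edge set (no self-loops assumed)."""
    return [next(iter(e - {node})) for e in edge_set if node in e]


def predict(feats, edge_set):
    """Mean-neighbour message passing, ReLU, mean pooling, sigmoid readout."""
    hidden = []
    for node, x in feats.items():
        nbrs = neighbours(node, edge_set)
        agg = sum(feats[n] for n in nbrs) / len(nbrs) if nbrs else 0.0
        hidden.append(max(0.0, W_SELF * x + W_NEIGH * agg))
    pooled = sum(hidden) / len(hidden)
    return 1.0 / (1.0 + math.exp(-(pooled + BIAS)))


def counterfactual(feats, edge_set, drop_edge):
    """Manual counterfactual: delete one interaction and re-predict.
    Returns (probability before, probability after)."""
    return predict(feats, edge_set), predict(feats, edge_set - {drop_edge})


def occlusion_relevance(feats, edge_set):
    """Relevance of each interaction = drop in predicted probability when it is removed.
    Simple occlusion, NOT GNNExplainer."""
    base = predict(feats, edge_set)
    return {e: base - predict(feats, edge_set - {e}) for e in edge_set}


before, after = counterfactual(features, edges, frozenset(("TP53", "EGFR")))
print(f"P(outcome): {before:.3f} -> {after:.3f} after removing TP53-EGFR")
for edge, score in occlusion_relevance(features, edges).items():
    print("-".join(sorted(edge)), round(score, 3))
```

Deleting a gene would work the same way: remove it from `features` together with every edge that touches it, then call `predict` again.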

Conclusion:

We present CLARUS, the first interactive XAI platform prototype that allows not only the evaluation of specific human counterfactual questions, based on user-defined alterations of patient networks, together with a re-prediction of the clinical outcome, but also a retraining of the entire GNN after the underlying graph structures have been changed. The platform is currently hosted by the GWDG at https://rshiny.gwdg.de/apps/clarus/.
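
Retraining differs from re-prediction in that the model parameters are refitted after the graph structure changes. The following is a minimal sketch under strong simplifying assumptions: a hypothetical toy cohort of four patients sharing one interaction network, and a linear (no activation) message-passing layer followed by mean pooling and a logistic readout, fitted by plain full-batch gradient descent. All names and numbers (`GENES`, `BASE_EDGES`, `RAW`, `train`) are illustrative and do not come from CLARUS.

```python
import math

GENES = ("TP53", "MDM2", "EGFR", "KRAS")  # hypothetical gene panel
BASE_EDGES = {
    frozenset(("TP53", "MDM2")),
    frozenset(("EGFR", "KRAS")),
    frozenset(("TP53", "EGFR")),
}

# Hypothetical toy cohort: (expression per gene in GENES order, outcome label).
RAW = [
    ((1.2, -0.4, 0.8, 0.1), 1),
    ((0.9, 0.2, 1.1, 0.5), 1),
    ((-0.7, 0.3, -0.5, -0.2), 0),
    ((-1.0, -0.6, 0.1, -0.4), 0),
]


def neighbours(node, edge_set):
    """Neighbours of `node` in an undirected edge set (no self-loops assumed)."""
    return [next(iter(e - {node})) for e in edge_set if node in e]


def graph_features(feats, edge_set):
    """Linear message passing + mean pooling -> (mean self value, mean neighbour value)."""
    aggs = []
    for node in feats:
        nbrs = neighbours(node, edge_set)
        aggs.append(sum(feats[n] for n in nbrs) / len(nbrs) if nbrs else 0.0)
    n = len(feats)
    return sum(feats.values()) / n, sum(aggs) / n


def prob(params, feats, edge_set):
    """Predicted outcome probability for one patient graph."""
    w_self, w_neigh, bias = params
    m_self, m_neigh = graph_features(feats, edge_set)
    return 1.0 / (1.0 + math.exp(-(w_self * m_self + w_neigh * m_neigh + bias)))


def train(cohort, epochs=500, lr=0.5):
    """Full-batch gradient descent on the mean log-loss, starting from zero weights."""
    params = [0.0, 0.0, 0.0]
    data = [(graph_features(f, e), y) for f, e, y in cohort]
    for _ in range(epochs):
        grad = [0.0, 0.0, 0.0]
        for (m_self, m_neigh), y in data:
            z = params[0] * m_self + params[1] * m_neigh + params[2]
            err = 1.0 / (1.0 + math.exp(-z)) - y  # d(log-loss)/dz
            grad[0] += err * m_self
            grad[1] += err * m_neigh
            grad[2] += err
        params = [p - lr * g / len(data) for p, g in zip(params, grad)]
    return tuple(params)


cohort = [(dict(zip(GENES, x)), BASE_EDGES, y) for x, y in RAW]
params_before = train(cohort)

# Change the underlying graph structure for every patient, then retrain from scratch.
edited = [(f, e - {frozenset(("EGFR", "KRAS"))}, y) for f, e, y in cohort]
params_after = train(edited)
print("before:", [round(p, 3) for p in params_before])
print("after: ", [round(p, 3) for p in params_after])
```

Retraining from zero weights, rather than fine-tuning, keeps the comparison simple: any difference between `params_before` and `params_after` is then due only to the edited graph structure.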

Title: CLARUS: An interactive explainable AI platform for manual counterfactuals in graph neural networks

京公网安备 11010802027423号