Journal of Advanced Research (IF 11.4), Pub Date: 2022-09-07, DOI: 10.1016/j.jare.2022.08.021

Chiagoziem C Ukwuoma 1, Zhiguang Qin 1, Md Belal Bin Heyat 2, Faijan Akhtar 3, Olusola Bamisile 4, Abdullah Y Muaad 5, Daniel Addo 1, Mugahed A Al-Antari 6

Introduction

Pneumonia is a microbial infection that causes chronic inflammation of human lung cells. Chest X-ray imaging is the most widely used screening approach for detecting pneumonia in its early stages. Because chest X-ray images are often blurry and poorly illuminated, a strong feature extraction approach is required to achieve good identification performance.

Objectives

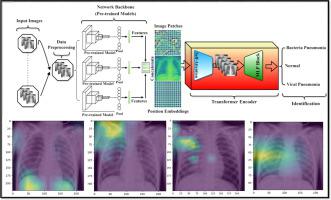

This work proposes a new hybrid explainable deep learning framework for accurate pneumonia identification from chest X-ray images.

Methods

The proposed hybrid workflow fuses ensemble convolutional networks with a Transformer Encoder. The ensemble learning backbone extracts strong features from the raw input X-ray images in two scenarios: ensemble A (DenseNet201, VGG16, and GoogleNet) and ensemble B (DenseNet201, InceptionResNetV2, and Xception). The Transformer Encoder, built on the self-attention mechanism with a multilayer perceptron (MLP), performs the disease identification. Visual explainable saliency maps are derived to highlight the regions of the input X-ray images that matter most to the prediction. All proposed models are trained end to end in every scenario for both binary and multi-class classification.
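As a rough illustration of the self-attention step, the sketch below applies scaled dot-product self-attention to three small feature vectors, one per backbone. It is a toy under stated assumptions, not the authors' implementation: the abstract does not say how backbone features are turned into tokens, so treating each backbone output as one token, using identity query/key/value projections, and the 4-dimensional feature values are all hypothetical choices made here for readability.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with identity Q/K/V projections.

    tokens: list of equal-length feature vectors (assumed: one per backbone).
    Returns the attended tokens and the attention weight matrix.
    """
    dim = len(tokens[0])
    scale = math.sqrt(dim)
    weights = []
    for query in tokens:
        # Similarity of this token to every token, scaled by sqrt(dim).
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in tokens]
        weights.append(softmax(scores))
    # Each output token is a weighted average of all input tokens.
    attended = [
        [sum(w * value[j] for w, value in zip(row, tokens)) for j in range(dim)]
        for row in weights
    ]
    return attended, weights


# Hypothetical features standing in for the three ensemble A backbones.
backbone_features = [
    [0.9, 0.1, 0.0, 0.3],  # stand-in for DenseNet201 features
    [0.8, 0.2, 0.1, 0.4],  # stand-in for VGG16 features
    [0.1, 0.9, 0.7, 0.0],  # stand-in for GoogleNet features
]
attended, weights = self_attention(backbone_features)
```

In a real Transformer Encoder the queries, keys, and values come from learned linear projections, several attention heads run in parallel, and the attended tokens pass through an MLP with residual connections; the sketch keeps only the attention arithmetic.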

Results

The proposed hybrid deep learning model achieved 99.21% for both overall accuracy and F1-score on the binary classification task, and 98.19% accuracy and 97.29% F1-score on the multi-class classification task. In the binary ensemble scenario, ensemble A recorded 97.22% accuracy and 97.14% F1-score, while ensemble B achieved 96.44% for both accuracy and F1-score. In the multi-class ensemble scenario, ensemble A recorded 97.2% accuracy and 95.8% F1-score, while ensemble B recorded 96.4% accuracy and 94.9% F1-score.
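For readers unfamiliar with the two reported metrics, the following minimal sketch shows how accuracy and F1-score are commonly computed. The confusion counts and labels are invented for illustration and are not the paper's data, and the use of macro averaging for the multi-class F1-score is an assumption, since the abstract does not state which averaging was used.

```python
def binary_metrics(tp, fp, fn, tn):
    """Return (accuracy, F1) from binary confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    denom = 2 * tp + fp + fn
    # F1 is the harmonic mean of precision and recall: 2TP / (2TP + FP + FN).
    f1 = 2 * tp / denom if denom else 0.0
    return accuracy, f1


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores (macro averaging, assumed)."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Hypothetical binary counts (pneumonia as the positive class).
accuracy, f1 = binary_metrics(tp=90, fp=5, fn=10, tn=95)

# Hypothetical multi-class labels; the class names are placeholders.
y_true = ["normal", "bacterial", "viral", "viral", "normal", "bacterial"]
y_pred = ["normal", "bacterial", "viral", "bacterial", "normal", "bacterial"]
multi_f1 = macro_f1(y_true, y_pred)
```

Accuracy and F1-score coincide only when errors are balanced in a particular way, which is why the two figures can match in one scenario and diverge in another.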

Conclusion

The proposed hybrid deep learning framework provides promising explainable identification performance compared with individual models, ensemble models, and even the latest AI models in the literature. The code is available here: https://github.com/chiagoziemchima/Pneumonia_Identificaton.

Title: A hybrid explainable ensemble transformer encoder for pneumonia identification from chest X-ray images

