Medical Image Analysis (IF 10.7), Pub Date: 2022-07-30, DOI: 10.1016/j.media.2022.102559
Xiyue Wang 1, Sen Yang 2, Jun Zhang 2, Minghui Wang 1, Jing Zhang 3, Wei Yang 2, Junzhou Huang 2, Xiao Han 2


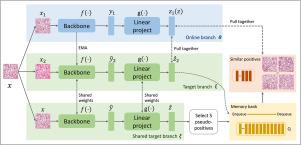

A large-scale, well-annotated dataset is a key factor in the success of deep learning for medical image analysis. However, assembling annotations at this scale is very challenging, especially for histopathological images with their unique characteristics (e.g., gigapixel image size, multiple cancer types, and wide staining variations). Self-supervised learning (SSL) is a promising way to alleviate this issue: it relies only on unlabeled data to learn informative representations that generalize well to various downstream tasks, even with limited annotations. In this work, we propose a novel SSL strategy called semantically-relevant contrastive learning (SRCL), which compares relevance between instances to mine more positive pairs. Whereas traditional contrastive learning aligns only two views of the same instance, SRCL aligns multiple positive instances that share similar visual concepts, which increases the diversity of positives and yields more informative representations. We employ a hybrid model (CTransPath) as the backbone, which integrates a convolutional neural network (CNN) and a multi-scale Swin Transformer architecture. Pretrained on a massive collection of unlabeled histopathological images, CTransPath serves as a collaborative local–global feature extractor that learns universal feature representations better suited to tasks in the histopathology image domain. The effectiveness of our SRCL-pretrained CTransPath is investigated on five types of downstream tasks (patch retrieval, patch classification, weakly-supervised whole-slide image classification, mitosis detection, and colorectal adenocarcinoma gland segmentation), covering nine public datasets. The results show that our SRCL-based visual representations not only achieve state-of-the-art performance on each dataset, but are also more robust and transferable than other SSL methods and ImageNet pretraining (both supervised and self-supervised methods).
Our code and pretrained model are available at https://github.com/Xiyue-Wang/TransPath.
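
The idea of aligning a query with several positives, rather than with a single augmented view, can be illustrated with a small sketch. This is not the authors' implementation. It assumes that extra positives are mined by taking the most cosine-similar entries from a bank of other embeddings, and that the per-positive InfoNCE terms are averaged; the abstract specifies neither choice, and the function name, `num_extra_positives`, and `temperature` are hypothetical.

```python
import math


def l2_normalize(vector):
    """Scale a non-zero vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def multi_positive_contrastive_loss(query, key, bank,
                                    num_extra_positives=2, temperature=0.07):
    """Illustrative multi-positive InfoNCE loss for one query embedding.

    query: embedding of one augmented view of a patch
    key:   embedding of a second view of the same patch (the usual positive)
    bank:  embeddings of other patches; the entries most similar to the query
           are treated as extra positives (an assumption of this sketch),
           and the remaining entries act as negatives
    """
    q = l2_normalize(query)
    k = l2_normalize(key)
    sims = [dot(q, l2_normalize(entry)) for entry in bank]

    # Mine extra positives: indices of the bank entries closest to the query.
    ranked = sorted(range(len(sims)), key=lambda i: sims[i], reverse=True)
    extra_positive_ids = set(ranked[:num_extra_positives])

    pos_logits = [dot(q, k) / temperature]
    pos_logits += [sims[i] / temperature for i in ranked[:num_extra_positives]]
    neg_logits = [sims[i] / temperature
                  for i in range(len(sims)) if i not in extra_positive_ids]

    # Numerically stable log-sum-exp over all logits (the softmax denominator).
    all_logits = pos_logits + neg_logits
    max_logit = max(all_logits)
    log_denominator = max_logit + math.log(
        sum(math.exp(logit - max_logit) for logit in all_logits))

    # Average the InfoNCE term -log softmax(positive) over every positive.
    return sum(log_denominator - logit for logit in pos_logits) / len(pos_logits)


if __name__ == "__main__":
    toy_bank = [[0.9, 0.1], [0.0, 1.0], [-1.0, 0.0]]
    print(multi_positive_contrastive_loss([1.0, 0.0], [1.0, 0.0], toy_bank))
```

Setting `num_extra_positives=0` reduces the sketch to the standard two-view contrastive loss, which makes the effect of the mined positives easy to compare on toy inputs.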

Title (translated from the Chinese): Transformer-based unsupervised contrastive learning for histopathological image classification
