Permutation Invariant Graph-to-Sequence Model for Template-Free Retrosynthesis and Reaction Prediction

Journal of Chemical Information and Modeling (IF 5.6), Pub Date: 2022-07-26, DOI: 10.1021/acs.jcim.2c00321, Authors: Zhengkai Tu, Connor W Coley

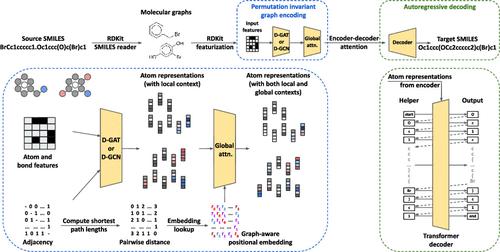

Synthesis planning and reaction outcome prediction are two fundamental problems in computer-aided organic chemistry, and a variety of data-driven approaches have emerged to address them. Natural language approaches that model each problem as SMILES-to-SMILES translation offer a simple end-to-end formulation, reduce the need for data preprocessing, and allow the use of well-optimized machine translation architectures. However, SMILES representations do not capture information about molecular structure efficiently, as evidenced by the success of SMILES augmentation in boosting empirical performance. Here, we describe Graph2SMILES, a novel model that combines the text-generation power of Transformer models with the permutation invariance of molecular graph encoders, which mitigates the need for input data augmentation. In our encoder, a directed message passing neural network (D-MPNN) captures local chemical environments, and a global attention encoder, enhanced by graph-aware positional embedding, models long-range and intermolecular interactions. As an end-to-end architecture, Graph2SMILES can be used as a drop-in replacement for the Transformer in any task involving molecule(s)-to-molecule(s) transformations, and we show empirically that it improves performance on existing benchmarks for both retrosynthesis and reaction outcome prediction.
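The two encoder ideas in the abstract, directed message passing over bonds and permutation invariance, can be illustrated with a toy example. The following is a minimal pure-Python sketch assuming a D-MPNN-style update: each bond becomes two directed edges, the message into edge u→v sums the states of edges entering u except the reverse edge v→u, and atom states are read out from their incoming edges. The weights, feature sizes, ReLU activation, the omission of bond features, and the helper names (`dmpnn_atom_states`, `relabel`, `sum_pool`) are hypothetical illustrations, not the Graph2SMILES implementation. The script checks that renumbering the atoms only reorders the atom states, so a sum-pooled graph vector is unchanged.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vec = List[float]

# Hypothetical toy weights: atom features and hidden states both have size 2.
W_I = [[0.5, -0.2], [0.1, 0.3]]                       # initial directed-edge state
W_H = [[0.4, 0.0], [0.2, 0.5]]                        # message update
W_O = [[0.3, 0.1, 0.2, -0.1], [0.0, 0.4, 0.1, 0.2]]   # atom readout from [x_v ; m_v]


def relu(v: Sequence[float]) -> Vec:
    return [max(0.0, x) for x in v]


def add(a: Sequence[float], b: Sequence[float]) -> Vec:
    return [x + y for x, y in zip(a, b)]


def matvec(w: Sequence[Sequence[float]], v: Sequence[float]) -> Vec:
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def dmpnn_atom_states(atom_feats: List[Vec], bonds: List[Tuple[int, int]],
                      depth: int = 3) -> List[Vec]:
    """Return one hidden vector per atom after `depth` rounds of directed message passing."""
    # Every undirected bond (u, v) becomes two directed edges: u->v and v->u.
    edges = list(bonds) + [(v, u) for u, v in bonds]
    incoming: Dict[int, List[Tuple[int, int]]] = {i: [] for i in range(len(atom_feats))}
    for u, v in edges:
        incoming[v].append((u, v))
    # Initial edge state depends on the source atom only (bond features omitted for brevity).
    h0 = {(u, v): relu(matvec(W_I, atom_feats[u])) for u, v in edges}
    h = dict(h0)
    for _ in range(depth):
        new_h = {}
        for u, v in edges:
            # Message into u->v sums the edges k->u, skipping the reverse edge v->u.
            m = [0.0, 0.0]
            for k, _target in incoming[u]:
                if k != v:
                    m = add(m, h[(k, u)])
            new_h[(u, v)] = relu(add(h0[(u, v)], matvec(W_H, m)))
        h = new_h
    # Atom readout: sum incoming edge states and combine with the atom's own features.
    states = []
    for v, x in enumerate(atom_feats):
        m_v = [0.0, 0.0]
        for edge in incoming[v]:
            m_v = add(m_v, h[edge])
        states.append(relu(matvec(W_O, list(x) + m_v)))
    return states


def relabel(atom_feats: List[Vec], bonds: List[Tuple[int, int]], perm: List[int]):
    """Renumber atoms so that new index i holds old atom perm[i] (hypothetical helper)."""
    new_index = {old: new for new, old in enumerate(perm)}
    feats = [atom_feats[old] for old in perm]
    new_bonds = [(new_index[u], new_index[v]) for u, v in bonds]
    return feats, new_bonds


def sum_pool(states: List[Vec]) -> Vec:
    return [sum(col) for col in zip(*states)]


# Toy 4-atom skeleton: atom 1 is bonded to atoms 0, 2 and 3; features are one-hot element types.
feats = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
bonds = [(0, 1), (1, 2), (1, 3)]
perm = [2, 0, 3, 1]

states = dmpnn_atom_states(feats, bonds)
feats_p, bonds_p = relabel(feats, bonds, perm)
states_p = dmpnn_atom_states(feats_p, bonds_p)

# Equivariance: renumbering the atoms only reorders their states.
equivariant = all(
    math.isclose(a, b, abs_tol=1e-12)
    for new, old in enumerate(perm)
    for a, b in zip(states_p[new], states[old])
)
# Invariance: a sum-pooled graph vector does not depend on atom numbering.
invariant = all(
    math.isclose(a, b, abs_tol=1e-12)
    for a, b in zip(sum_pool(states_p), sum_pool(states))
)
print(equivariant, invariant)
```

Self-attention without position-dependent inputs is itself permutation equivariant, so an attention encoder stacked on such atom states preserves this property as long as its positional signal is computed from the graph (for instance, from pairwise bond distances, used here only as an illustration) rather than from the atom order of a particular SMILES string. In a design like this, enumerating alternative SMILES orderings as augmentation adds no new information to the encoder input.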


Updated: 2022-07-26
