Medical Image Analysis (IF 10.7), Pub Date: 2022-06-11, DOI: 10.1016/j.media.2022.102514
Sureerat Reaungamornrat 1, Hasan Sari 2, Ciprian Catana 2, Ali Kamen 1


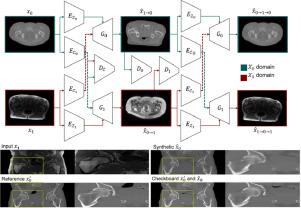

A growing number of methods for estimating attenuation-coefficient maps from magnetic resonance (MR) images have recently been proposed, driven by increasing interest in MR-guided radiotherapy and the introduction of hybrid positron emission tomography (PET)/MR systems. We propose a deep-network ensemble that incorporates stochastic-binary-anatomical encoders and imaging-modality variational autoencoders to disentangle image-latent spaces into a space of modality-invariant anatomical features and spaces of modality attributes. The ensemble integrates modality-modulated decoders to normalize features and image intensities based on imaging modality. Besides promoting disentanglement, the architecture fosters uncooperative learning, which offers the ability to maintain anatomical structure in cross-modality reconstruction. A modality-invariant structural consistency constraint further enforces faithful embedding of anatomy. To improve training stability and the fidelity of synthesized modalities, the ensemble is trained in a relativistic generative adversarial framework incorporating multiscale discriminators. Analyses of priors and network architectures, as well as performance validation, were performed on computed tomography (CT) and MR pelvis datasets. Compared to state-of-the-art approaches, the proposed method demonstrated robustness against intensity inhomogeneity, improved tissue-class differentiation, and produced synthetic CT in Hounsfield units with intensities that are consistent and smooth across slices. Over 324 images, it achieved a median normalized mutual information of 1.28, normalized cross correlation of 0.97, and gradient cross correlation of 0.59.
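The abstract reports three image-similarity metrics without giving their formulas. The NumPy sketch below shows one common way to compute them, and the definitions are assumptions rather than the authors' implementation: normalized mutual information is taken as (H(A) + H(B)) / H(A, B), which ranges from 1 to 2 and is at least compatible with the reported value of 1.28, and gradient cross correlation is taken as the mean normalized cross correlation of the per-axis image gradients. The function names and the `bins` parameter are hypothetical.

```python
import numpy as np


def normalized_cross_correlation(a, b):
    """Zero-mean normalized cross correlation of two same-shaped arrays, in [-1, 1]."""
    a = np.asarray(a, dtype=np.float64).ravel()
    b = np.asarray(b, dtype=np.float64).ravel()
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    if denom == 0.0:
        return float("nan")  # undefined when either image is constant
    return float((a * b).sum() / denom)


def _entropy(p):
    """Shannon entropy (natural log) of a probability vector, ignoring zero bins."""
    p = p[p > 0]
    return float(-(p * np.log(p)).sum())


def normalized_mutual_information(a, b, bins=64):
    """Assumed definition: (H(A) + H(B)) / H(A, B), estimated from a joint histogram."""
    a = np.asarray(a, dtype=np.float64).ravel()
    b = np.asarray(b, dtype=np.float64).ravel()
    hist, _, _ = np.histogram2d(a, b, bins=bins)
    pxy = hist / hist.sum()
    h_a = _entropy(pxy.sum(axis=1))   # marginal entropy of image A
    h_b = _entropy(pxy.sum(axis=0))   # marginal entropy of image B
    h_ab = _entropy(pxy.ravel())      # joint entropy
    if h_ab == 0.0:
        return float("nan")  # undefined when both images are constant
    return (h_a + h_b) / h_ab


def gradient_cross_correlation(a, b):
    """Assumed definition: mean NCC of the finite-difference gradients along each axis."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    grads_a = np.gradient(a)
    grads_b = np.gradient(b)
    if a.ndim == 1:  # np.gradient returns a bare array for 1-D input
        grads_a, grads_b = [grads_a], [grads_b]
    scores = [normalized_cross_correlation(ga, gb) for ga, gb in zip(grads_a, grads_b)]
    return float(np.mean(scores))
```

With these definitions, comparing an image against itself gives a normalized cross correlation of 1 and a normalized mutual information of 2, while an affine intensity change such as `2 * img + 3` leaves the normalized cross correlation at 1. In a synthetic-CT evaluation, the two inputs would be the synthesized and reference CT slices, and the per-image scores would then be summarized by their median.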

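Title (translated from the Chinese): Multimodal image synthesis based on disentangled representations of anatomical and modality-specific features, learned using an uncooperative relativistic GAN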

