Image and Vision Computing (IF 4.2), Pub Date: 2021-05-06, DOI: 10.1016/j.imavis.2021.104190
Yiming Lin, Jie Shen, Yujiang Wang, Maja Pantic

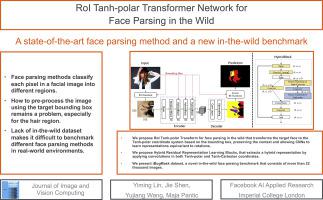

RoI Tanh-polar Transformer Network for Face Parsing in the Wild

Face parsing aims to predict pixel-wise labels for the facial components of a target face in an image. Existing approaches usually crop the target face from the input image using a bounding box computed during pre-processing, and can therefore parse only the inner facial Regions of Interest (RoIs). Peripheral regions such as hair are ignored, and nearby faces that fall partially inside the bounding box can distract the model. Moreover, these methods are trained and evaluated only on near-frontal portrait images, so their performance in in-the-wild cases remains unexplored. To address these issues, this paper makes three contributions. First, we introduce the iBugMask dataset for face parsing in the wild, which consists of 21,866 training images and 1,000 testing images. The training images are obtained by augmenting an existing dataset with large face poses. The testing images are manually annotated with 11 facial regions and exhibit large variations in size, pose, expression and background. Second, we propose the RoI Tanh-polar transform, which, guided by the target bounding box, warps the whole image into a Tanh-polar representation with a fixed ratio between the face area and the context. The new representation retains all the information in the original image and allows for rotation equivariance in convolutional neural networks (CNNs). Third, we propose a hybrid residual representation learning block, coined HybridBlock, that contains convolutional layers in both the Tanh-polar space and the Tanh-Cartesian space, allowing for receptive fields of different shapes in CNNs. Through extensive experiments, we show that the proposed method improves the state of the art for face parsing in the wild and does not require facial landmarks for alignment.
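The abstract says the RoI Tanh-polar transform keeps a fixed ratio between the face area and the context while retaining the whole image. The coordinate-level sketch below shows one way both properties can arise. It assumes (our assumption, not the paper's stated formulation) that the bounding box defines an axis-aligned ellipse with semi-axes equal to half the box width and height, that θ is the image-space angle about the box center, and that the radial coordinate is ρ = tanh(r), where r is the ellipse-normalized distance from the center. The helper names `roi_ellipse`, `tanh_polar_to_image` and `image_to_tanh_polar` are hypothetical.

```python
import math


# Assumption (not from the paper): the box (x0, y0, x1, y1) defines an
# axis-aligned ellipse centered on the box with semi-axes a = w/2 and b = h/2.
def roi_ellipse(box):
    x0, y0, x1, y1 = box
    return (x0 + x1) / 2, (y0 + y1) / 2, (x1 - x0) / 2, (y1 - y0) / 2


def tanh_polar_to_image(rho, theta, box):
    """Map a Tanh-polar point (0 <= rho < 1, angle theta) to image coordinates."""
    cx, cy, a, b = roi_ellipse(box)
    r = math.atanh(rho)                      # ellipse-normalized radius; r == 1 on the ellipse
    dx, dy = math.cos(theta), math.sin(theta)
    dist = r / math.hypot(dx / a, dy / b)    # r times the ellipse's polar radius at theta
    return cx + dist * dx, cy + dist * dy


def image_to_tanh_polar(x, y, box):
    """Inverse mapping: every finite image point lands at some rho < 1."""
    cx, cy, a, b = roi_ellipse(box)
    dx, dy = x - cx, y - cy
    r = math.hypot(dx / a, dy / b)           # equals 1.0 exactly on the ellipse boundary
    return math.tanh(r), math.atan2(dy, dx)


box = (100, 80, 200, 220)                    # hypothetical face box, 100 x 140 pixels
face_fraction = math.tanh(1.0)               # share of the radial axis covered by the ellipse
print(f"face covers rho in [0, {face_fraction:.4f}]")
print(tanh_polar_to_image(face_fraction, 0.0, box))   # approximately (200.0, 150.0)
print(image_to_tanh_polar(0, 0, box))                 # image corner: rho just below 1
```

Under these assumptions the ellipse boundary always maps to ρ = tanh(1) ≈ 0.76 regardless of the box size, so the face occupies a fixed fraction of the radial axis, while image content arbitrarily far from the face is compressed into the band ρ < 1 instead of being cropped away.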
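The rotation-equivariance claim can be checked numerically. The NumPy sketch below builds a Tanh-polar sampling grid (rows index ρ, columns index θ) and shows that rotating an image about the box center turns into a cyclic shift of columns in the warped map. It relies on the same assumed ellipse-and-tanh formulation as a hypothetical illustration, uses a square box so that the RoI is a circle (with a non-square box the ellipse does not rotate with the face, so the shift would only be approximate), and samples a smooth, hypothetical `scene` function instead of a pixel array so that no interpolation error blurs the comparison. `warp_nearest` shows the same grid applied to an ordinary array.

```python
import numpy as np


def tanh_polar_grid(box, out_h, out_w):
    """Image-space sampling positions for an (out_h, out_w) Tanh-polar map.

    Rows index the radius rho = (i + 0.5) / out_h, strictly inside [0, 1);
    columns index the angle theta = 2 * pi * j / out_w.
    """
    x0, y0, x1, y1 = box
    cx, cy = (x0 + x1) / 2, (y0 + y1) / 2
    a, b = (x1 - x0) / 2, (y1 - y0) / 2       # assumed ellipse semi-axes
    rho = (np.arange(out_h) + 0.5) / out_h
    theta = 2 * np.pi * np.arange(out_w) / out_w
    r = np.arctanh(rho)[:, None]              # (out_h, 1) ellipse-normalized radius
    dx, dy = np.cos(theta)[None, :], np.sin(theta)[None, :]
    dist = r / np.hypot(dx / a, dy / b)       # (out_h, out_w) distance in pixels
    return cx + dist * dx, cy + dist * dy


def warp_nearest(image, box, out_h, out_w):
    """Warp an (H, W[, C]) array with nearest-neighbor sampling; border pixels repeat."""
    xs, ys = tanh_polar_grid(box, out_h, out_w)
    h, w = image.shape[:2]
    xi = np.clip(np.rint(xs).astype(int), 0, w - 1)
    yi = np.clip(np.rint(ys).astype(int), 0, h - 1)
    return image[yi, xi]


def rotated(fn, cx, cy, angle):
    """Continuous image fn rotated by `angle` radians about (cx, cy)."""
    c, s = np.cos(angle), np.sin(angle)

    def g(x, y):
        dx, dy = x - cx, y - cy
        return fn(cx + c * dx + s * dy, cy - s * dx + c * dy)   # undo the rotation

    return g


def scene(x, y):
    """Hypothetical smooth test image, defined everywhere."""
    return np.sin(0.05 * x) * np.cos(0.03 * y) + 0.01 * x


box = (100.0, 100.0, 200.0, 200.0)   # square box, so the RoI ellipse is a circle
H, W, k = 64, 72, 9                  # rotate by k * 360 / W = 45 degrees
xs, ys = tanh_polar_grid(box, H, W)
original = scene(xs, ys)
turned = rotated(scene, 150.0, 150.0, 2 * np.pi * k / W)(xs, ys)
print(np.allclose(turned, np.roll(original, k, axis=1)))   # True
```

Because a rotation about the center becomes a shift along the angular axis, a convolution that is circularly padded along that axis commutes with it; this is one way a CNN can exploit the property, not necessarily the paper's exact design.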
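The HybridBlock mixes convolutions in the Tanh-polar and Tanh-Cartesian spaces, which requires moving feature maps between the two grids. The sketch below covers only that resampling step, under assumed conventions: Tanh-Cartesian coordinates are u = tanh(dx/a) and v = tanh(dy/b), using the same ellipse semi-axes a and b as the polar map; θ is the image-space angle; and lookups are bilinear with wrap-around along θ. The function names and grid conventions are hypothetical, and the paper's block may resample differently.

```python
import numpy as np


def cartesian_to_polar_indices(out_h, out_w, polar_h, polar_w, a, b):
    """Fractional (row, col) into a Tanh-polar map for every Tanh-Cartesian pixel.

    Assumed conventions (hypothetical, for illustration):
      Tanh-Cartesian: u = tanh(dx / a), v = tanh(dy / b), pixel centers inside (-1, 1);
      Tanh-polar:     row i <-> rho = (i + 0.5) / polar_h, col j <-> theta = 2*pi*j / polar_w.
    a, b are the RoI ellipse semi-axes in pixels; only their ratio affects theta.
    """
    u = (np.arange(out_w) + 0.5) / out_w * 2 - 1
    v = (np.arange(out_h) + 0.5) / out_h * 2 - 1
    uu, vv = np.meshgrid(u, v)                               # (out_h, out_w)
    xn, yn = np.arctanh(uu), np.arctanh(vv)                  # ellipse-normalized offsets
    rho = np.tanh(np.hypot(xn, yn))
    theta = np.mod(np.arctan2(b * yn, a * xn), 2 * np.pi)    # image-space angle
    rows = rho * polar_h - 0.5
    cols = theta * polar_w / (2 * np.pi)
    return rows, cols


def sample_polar(polar, rows, cols):
    """Bilinear lookup in an (H, W) Tanh-polar map, wrapping around the angle axis."""
    h, w = polar.shape
    rows = np.clip(rows, 0, h - 1)
    r0 = np.floor(rows).astype(int)
    r1 = np.minimum(r0 + 1, h - 1)
    fr = rows - r0
    c0f = np.floor(cols)
    fc = cols - c0f
    c0 = c0f.astype(int) % w
    c1 = (c0 + 1) % w
    top = polar[r0, c0] * (1 - fc) + polar[r0, c1] * fc
    bottom = polar[r1, c0] * (1 - fc) + polar[r1, c1] * fc
    return top * (1 - fr) + bottom * fr


# Usage: move a hypothetical 64 x 90 Tanh-polar feature map onto a 48 x 48 Tanh-Cartesian grid.
polar_h, polar_w = 64, 90
theta_axis = 2 * np.pi * np.arange(polar_w) / polar_w
polar = np.tile(np.cos(theta_axis), (polar_h, 1))   # feature that depends only on the angle
rows, cols = cartesian_to_polar_indices(48, 48, polar_h, polar_w, a=50.0, b=70.0)
cart = sample_polar(polar, rows, cols)
print(cart.shape)   # (48, 48)
```

Since both spaces share the same ellipse normalization, ρ depends only on hypot(atanh u, atanh v), which keeps the conversion cheap. A 3 x 3 kernel on the polar grid covers an annular sector of the image, while the same kernel after this resampling covers a roughly rectangular neighborhood; that is one plausible reading of the abstract's "receptive fields of different shapes".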

京公网安备 11010802027423号