Physics Letters A (IF 2.3), Pub Date: 2021-05-01, DOI: 10.1016/j.physleta.2021.127387

Xiaojie Liu, Lingling Duan, Fabing Duan, François Chapeau-Blondeau, Derek Abbott


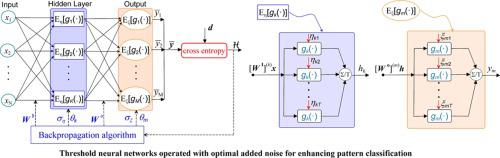

Hard-threshold nonlinearities are of significant interest for neural-network information processing because of their simplicity and low-cost implementation. However, they are not differentiable, which prevents direct use of gradient-based learning. Here, hard-threshold nonlinearities assisted by added noise are pooled into a large-scale summing array to approximate a neuron with a noise-smoothed activation function. This restores differentiability, and with it gradient-based learning, for such neurons, which are then assembled into a feed-forward neural network. The added noise components used to smooth the hard-threshold responses have adjustable parameters that are adaptively optimized during the learning process. The converged optimal noise levels are non-zero, which establishes a beneficial role for added noise in the operation of the threshold neural network. In the retrieval phase, the threshold neural network operating with non-zero optimal added noise is tested on data classification and on handwritten digit recognition, where it matches the state-of-the-art performance of existing backpropagation-trained analog neural networks while requiring only simpler two-state binary neurons.
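The smoothing idea in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not state the noise distribution, so zero-mean Gaussian noise is assumed here, and the names `threshold_array`, `smoothed_activation`, `theta`, `sigma`, and `n_units` are hypothetical. Under that assumption, the mean output of many noisy 0/1 threshold units approaches the Gaussian cumulative distribution function evaluated at `(x - theta) / sigma`, which is differentiable in both the input and the noise level.

```python
import math

import numpy as np


def threshold_array(x, theta=0.0, sigma=1.0, n_units=10_000, rng=None):
    """Mean output of n_units hard-threshold units, each with its own noise sample.

    Assumption: the added noise is i.i.d. zero-mean Gaussian with std sigma.
    """
    rng = np.random.default_rng(0) if rng is None else rng
    x = np.asarray(x, dtype=float)
    # One independent noise sample per unit and per input value.
    noise = rng.normal(0.0, sigma, size=(n_units,) + x.shape)
    # Each unit outputs 1 if its noisy input exceeds the threshold, else 0.
    return (x + noise > theta).mean(axis=0)


def smoothed_activation(x, theta=0.0, sigma=1.0):
    """Large-array limit under the Gaussian assumption: the normal CDF."""
    x = np.asarray(x, dtype=float)
    z = (x - theta) / (sigma * math.sqrt(2.0))
    return 0.5 * (1.0 + np.vectorize(math.erf)(z))


if __name__ == "__main__":
    xs = np.linspace(-3.0, 3.0, 13)
    empirical = threshold_array(xs, n_units=20_000)
    exact = smoothed_activation(xs)
    print("max |empirical - exact| =", np.max(np.abs(empirical - exact)))
```

In this sketch `sigma` acts as the adjustable noise parameter. As `sigma` shrinks toward zero the smooth curve collapses back to a hard step and its gradient vanishes almost everywhere, which is consistent with the abstract's report that learning converges to non-zero noise levels.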

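Title (translated from the Chinese heading):

Enhancing threshold neural networks via suprathreshold stochastic resonance for pattern classification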

