Computers & Graphics (IF 2.5), Pub Date: 2021-05-01, DOI: 10.1016/j.cag.2021.04.030
Heng Zhang, Yuanyuan Pu, Rencan Nie, Dan Xu, Zhengpeng Zhao, Wenhua Qian

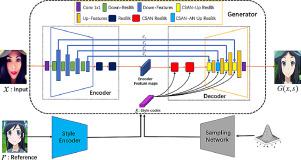

We propose a novel unsupervised image translation model, trained in an end-to-end manner, that incorporates Content-Style Adaptive Normalization (CSAN) and Attentive Normalization (AN). First, we apply attentive normalization to the style transfer task for the first time. AN improves on and complements conventional instance normalization: it guides the model to focus on the key regions of an image during translation while ignoring secondary regions. Second, our proposed CSAN function absorbs information not only from the style codes but also from the content codes. Compared with Adaptive Instance Normalization (AdaIN), CSAN better retains the content information of input images. In addition, CSAN helps the attention mechanism flexibly control how much the texture and shape of input images change. Finally, a series of comparative experiments, with qualitative and quantitative evaluations on challenging datasets, shows that the proposed model outperforms state-of-the-art (SOTA) methods in the visual quality, diversity, semantic integrity, and style reflection of the generated images.
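
The abstract contrasts CSAN with AdaIN but gives no equations for CSAN or AN. As a point of reference, the minimal sketch below implements standard AdaIN, AdaIN(x, y) = σ(y)·(x − μ(x))/σ(x) + μ(y), on plain Python lists (each channel is a flattened list of spatial values). It also includes two hypothetical helpers, `content_style_norm` and `attentive_blend`, whose names, the interpolation weight `alpha`, and the per-position `mask` are assumptions for illustration only. They are not the authors' CSAN or AN; they merely illustrate two ideas from the abstract: keeping some content statistics in the affine parameters, and spatially controlling how much of the input is changed.

```python
import math
from typing import List, Tuple

Channel = List[float]       # one feature channel, spatial positions flattened
FeatureMap = List[Channel]  # C channels


def mean_std(ch: Channel, eps: float = 1e-5) -> Tuple[float, float]:
    """Per-channel mean and standard deviation over spatial positions."""
    mu = sum(ch) / len(ch)
    var = sum((v - mu) ** 2 for v in ch) / len(ch)
    return mu, math.sqrt(var + eps)


def instance_norm(x: FeatureMap) -> FeatureMap:
    """Instance normalization: zero mean, (approximately) unit variance per channel."""
    out = []
    for ch in x:
        mu, sigma = mean_std(ch)
        out.append([(v - mu) / sigma for v in ch])
    return out


def adain(content: FeatureMap, style: FeatureMap) -> FeatureMap:
    """AdaIN: normalize content, then re-scale with the style's per-channel statistics."""
    out = []
    for c_ch, s_ch in zip(content, style):
        mu_c, sig_c = mean_std(c_ch)
        mu_s, sig_s = mean_std(s_ch)
        out.append([sig_s * (v - mu_c) / sig_c + mu_s for v in c_ch])
    return out


def content_style_norm(content: FeatureMap, style: FeatureMap,
                       alpha: float) -> FeatureMap:
    """HYPOTHETICAL content-aware variant (not the paper's CSAN).

    The affine parameters interpolate between content and style statistics:
    alpha = 1 reduces to AdaIN; alpha = 0 returns the content unchanged
    (up to floating-point rounding).
    """
    out = []
    for c_ch, s_ch in zip(content, style):
        mu_c, sig_c = mean_std(c_ch)
        mu_s, sig_s = mean_std(s_ch)
        mu = alpha * mu_s + (1 - alpha) * mu_c
        sig = alpha * sig_s + (1 - alpha) * sig_c
        out.append([sig * (v - mu_c) / sig_c + mu for v in c_ch])
    return out


def attentive_blend(translated: FeatureMap, content: FeatureMap,
                    mask: List[float]) -> FeatureMap:
    """HYPOTHETICAL spatial attention blend (not the paper's AN).

    mask[i] in [0, 1] weights position i: 1 keeps the translated feature
    (a "key" region), 0 keeps the original content (a "secondary" region).
    """
    return [[m * t + (1 - m) * c for t, c, m in zip(t_ch, c_ch, mask)]
            for t_ch, c_ch in zip(translated, content)]
```

For example, with `content = [[1.0, 2.0, 3.0, 4.0]]` and `style = [[10.0, 20.0, 30.0, 40.0]]`, `adain(content, style)` returns values close to the style channel, while `attentive_blend(adain(content, style), content, [1.0, 1.0, 0.0, 0.0])` keeps the translated values in the first two positions and the original content in the last two. In a real model these statistics would come from learned style and content codes and the mask from a learned attention branch.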

Multimodal image synthesis combining content-style adaptive normalization and attentive normalization

京公网安备 11010802027423号