Energy and Buildings (IF 6.7), Pub Date: 2021-02-20, DOI: 10.1016/j.enbuild.2021.110833

Zhanhong Jiang, Michael J. Risbeck, Vish Ramamurti, Sugumar Murugesan, Jaume Amores, Chenlu Zhang, Young M. Lee, Kirk H. Drees

|

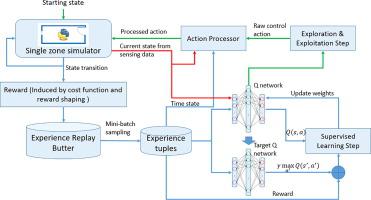

Energy efficiency remains a significant topic in the control of building heating, ventilation, and air-conditioning (HVAC) systems, and a diverse set of control strategies has been developed to optimize performance, including recently emerging deep reinforcement learning (DRL) techniques. While most existing works have focused on minimizing energy consumption, the generalization to energy cost minimization under time-varying electricity price profiles and demand charges has rarely been studied. Under these utility structures, significant cost savings can be achieved by pre-cooling buildings in the early morning when electricity is cheaper, thereby reducing expensive afternoon consumption and lowering peak demand. However, correctly identifying these savings requires planning horizons of one day or more. To tackle this problem, we develop a Deep Q-Network (DQN) with an action processor, defining the environment as a Partially Observable Markov Decision Process (POMDP) with a reward function consisting of energy cost (time-of-use and peak demand charges) and a discomfort penalty, which extends the reward functions used in most existing DRL works in this area. Moreover, we develop a reward shaping technique to overcome the reward sparsity caused by the demand charge. Through a single-zone building simulation platform, we demonstrate that the customized DQN outperforms the baseline rule-based policy, saving close to 6% of total cost with demand charges and close to 8% without demand charges.
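The abstract does not give the reward formula, so the following is only a minimal sketch of how a per-step reward combining time-of-use energy cost, a discomfort penalty, and a demand-charge term might look. All names (`Tariff`, `step_reward`, `comfort_weight`, the comfort band `t_min`/`t_max`) are hypothetical. The demand-charge term here charges each increase of the running peak as it happens, which is one common way to densify an otherwise end-of-period signal; it is an assumption for illustration and not necessarily the reward shaping technique the authors developed.

```python
from dataclasses import dataclass


@dataclass
class Tariff:
    """Hypothetical tariff and penalty parameters (not taken from the paper)."""
    demand_rate: float     # $ per kW of billing-period peak demand
    comfort_weight: float  # $ per degree-hour outside the comfort band


def step_reward(power_kw, price_per_kwh, zone_temp, t_min, t_max,
                running_peak_kw, dt_hours, tariff):
    """Return (reward, new_running_peak) for one control step.

    Assumed shaping: rather than charging the peak once at the end of the
    billing period, charge each increase of the running peak immediately.
    """
    # Time-of-use energy cost for this step.
    energy_cost = price_per_kwh * power_kw * dt_hours

    # Discomfort: degrees outside [t_min, t_max], weighted by step length.
    violation = max(t_min - zone_temp, zone_temp - t_max, 0.0)
    discomfort = tariff.comfort_weight * violation * dt_hours

    # Demand charge increment: only pay when a new peak is set.
    new_peak = max(running_peak_kw, power_kw)
    demand_increment = tariff.demand_rate * (new_peak - running_peak_kw)

    reward = -(energy_cost + discomfort + demand_increment)
    return reward, new_peak
```

Because the per-step increments telescope, their sum over a billing period equals the demand rate times the final peak, so the total cost is unchanged while the agent receives the demand-charge signal at the step where the peak is raised.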

Title (translated from the Chinese):

Building HVAC control with reinforcement learning to reduce energy cost and demand charges

