Computers & Electrical Engineering ( IF 4.0 ) Pub Date : 2020-12-30 , DOI: 10.1016/j.compeleceng.2020.106943 Dan Oneață , Horia Cucu

|

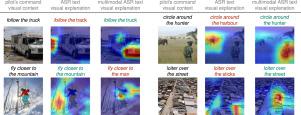

Unmanned aerial vehicles (UAVs) are becoming widespread with applications ranging from film-making and journalism to rescue operations and surveillance. Research communities (speech processing, computer vision, control) are starting to explore the limits of UAVs, but their efforts remain somewhat isolated. In this paper we unify multiple modalities (speech, vision, language) into a speech interface for UAV control. Our goal is to perform unconstrained speech recognition while leveraging the visual context. To this end, we introduce a multimodal evaluation dataset, consisting of spoken commands and associated images, which represent the visual context of what the UAV “sees” when the pilot utters the command. We provide baseline results and address two main research directions. First, we investigate the robustness of the system by (i) training it with a partial list of commands, and (ii) corrupting the recordings with outdoor noise. We perform a controlled set of experiments by varying the size of the training data and the signal-to-noise ratio. Second, we look at how to incorporate visual information into our model. We show that we can incorporate visual cues in the pipeline through the language model, which we implemented using a recurrent neural network. Moreover, by using gradient activation maps the system can provide visual feedback to the pilot regarding the UAV’s understanding of the command. Our conclusions are that multimodal speech recognition can be successfully used in this scenario and that visual information helps especially when the noise level is high. The dataset and our code are available at http://kite.speed.pub.ro.

中文翻译:

无人机的多模式语音识别

无人驾驶飞机(UAV)的应用范围非常广泛,从电影制作和新闻业到救援行动和监视。研究社区(语音处理,计算机视觉,控制)开始探索无人机的局限性,但是他们的努力仍然有些孤立。在本文中,我们将多种模式(语音,视觉,语言)统一到用于UAV控制的语音界面中。我们的目标是在利用视觉环境的同时执行无限制的语音识别。为此,我们引入了一个多模态评估数据集,该数据集由口头命令和相关图像组成,它们表示飞行员发出命令时无人机“看到”的视觉内容。我们提供基准结果并解决两个主要的研究方向。首先,我们通过(i)使用部分命令列表对其进行训练,以及(ii)由于室外噪音而破坏了录音。我们通过改变训练数据的大小和信噪比来执行一组受控的实验。其次,我们研究如何将视觉信息整合到我们的模型中。我们展示了我们可以通过语言模型将视觉提示整合到管道中,该语言模型是使用递归神经网络实现的。此外,通过使用梯度激活图,系统可以向飞行员提供有关无人机对命令的理解的视觉反馈。我们的结论是,多模式语音识别可以在这种情况下成功使用,并且视觉信息尤其在噪声水平较高时会有所帮助。数据集和我们的代码可在http://kite.speed.pub.ro获得。

京公网安备 11010802027423号

京公网安备 11010802027423号