A Double‐Deep Q‐Network‐Based Energy Management Strategy for Hybrid Electric Vehicles under Variable Driving Cycles

Energy Technology (IF 3.6), Pub Date: 2020-12-22, DOI: 10.1002/ente.202000770. Jiaqi Zhang 1, 2, Xiaohong Jiao 1, 2, Chao Yang 3

Energy Technology ( IF 3.6 ) Pub Date : 2020-12-22 , DOI: 10.1002/ente.202000770 Jiaqi Zhang 1, 2 , Xiaohong Jiao 1, 2 , Chao Yang 3

Affiliation

|

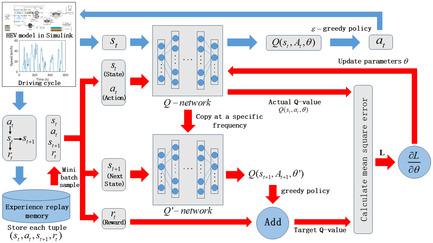

As a core part of hybrid electric vehicles (HEVs), the energy management strategy (EMS) directly affects fuel‐saving performance by regulating the energy flow between the engine and the battery. Most current EMS studies focus on buses or commuter private cars, whose driving cycles are relatively fixed. However, there is also strong demand for an EMS that adapts to variable driving cycles. The rise of machine learning, especially deep learning and reinforcement learning, provides a new opportunity for the design of EMSs for HEVs. Motivated by this, a double‐deep Q‐network (DDQN)‐based EMS for HEVs under variable driving cycles is proposed. The distance traveled in the driving cycle is introduced as a state in the DDQN‐based EMS. The “curse of dimensionality” caused by choosing too many states during training is addressed through the good generalization of the deep neural network. To counter overestimation during model training, two different neural networks are designed, one for action selection and one for target value calculation. Simulation comparisons with a Q‐learning‐based EMS and a rule‐based EMS verify the effectiveness of the proposed DDQN‐based EMS in improving fuel economy and its adaptability to variable driving cycles.
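The split between action selection and target value calculation can be illustrated with the standard Double DQN target rule, y = r + γ · Q_target(s′, argmax_a Q_online(s′, a)). The following is a minimal sketch of that general rule, not the authors' implementation: the function names, the array layout (one row per transition, one column per action), and the discount factor are all assumptions made for illustration, and the Q-values are passed in as plain arrays rather than produced by trained networks.

```python
import numpy as np


def ddqn_targets(q_online_next, q_target_next, rewards, dones, gamma=0.99):
    """Double DQN targets (hypothetical helper, standard rule).

    q_online_next: Q-values of the next states from the online network,
                   shape (batch, n_actions). Used only to SELECT the action.
    q_target_next: Q-values of the next states from the target network,
                   same shape. Used only to EVALUATE the selected action.
    """
    q_online_next = np.asarray(q_online_next, dtype=float)
    q_target_next = np.asarray(q_target_next, dtype=float)
    rewards = np.asarray(rewards, dtype=float)
    dones = np.asarray(dones, dtype=float)

    # Action selection: greedy action according to the online network.
    best_actions = np.argmax(q_online_next, axis=1)
    # Target value calculation: the target network scores that action.
    rows = np.arange(q_target_next.shape[0])
    evaluated = q_target_next[rows, best_actions]
    # Terminal transitions (dones == 1) do not bootstrap.
    return rewards + gamma * (1.0 - dones) * evaluated


def dqn_targets(q_target_next, rewards, dones, gamma=0.99):
    """Plain DQN targets for comparison: one network both selects and evaluates."""
    q_target_next = np.asarray(q_target_next, dtype=float)
    rewards = np.asarray(rewards, dtype=float)
    dones = np.asarray(dones, dtype=float)
    return rewards + gamma * (1.0 - dones) * q_target_next.max(axis=1)


if __name__ == "__main__":
    # Made-up numbers for two transitions with two actions each.
    q_on = [[1.0, 2.0], [0.5, 0.1]]
    q_tg = [[3.0, 0.5], [1.0, 4.0]]
    r = [1.0, 0.0]
    done = [0, 1]
    print("DDQN targets:", ddqn_targets(q_on, q_tg, r, done, gamma=0.9))
    print("DQN targets: ", dqn_targets(q_tg, r, done, gamma=0.9))
```

In the made-up first transition, the plain DQN target takes the maximum target-network value, while the Double DQN target uses the target network's value for the action the online network prefers, which is lower here. That decoupling is what reduces the upward bias of the max operator.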

Updated: 2021-02-05