Categorizing human vocal signals depends on an integrated auditory‐frontal cortical network

Human Brain Mapping (IF 3.5), Pub Date: 2020-12-08, DOI: 10.1002/hbm.25309

Claudia Roswandowitz, Huw Swanborough, Sascha Frühholz

Human Brain Mapping ( IF 3.5 ) Pub Date : 2020-12-08 , DOI: 10.1002/hbm.25309 Claudia Roswandowitz 1, 2 , Huw Swanborough 1, 2 , Sascha Frühholz 1, 2, 3

Affiliation

|

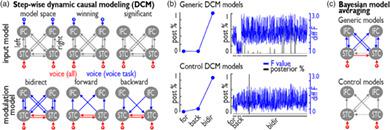

Voice signals are relevant for auditory communication and are thought to be processed in dedicated auditory cortex (AC) regions. While recent reports have highlighted an additional role of the inferior frontal cortex (IFC), a detailed description of the integrated functioning of the AC–IFC network and its task relevance for voice processing is lacking. Using neuroimaging, we tested sound categorization while human participants focused either on the higher‐order vocal‐sound dimension (voice task) or on the feature‐based intensity dimension (loudness task) while listening to the same sound material. We found differential involvement of the AC and IFC depending on the task performed and on whether the voice dimension was task relevant. First, when comparing neural vocal‐sound processing in our task‐based design with previously reported passive listening designs, we observed highly similar cortical activations in the AC and IFC. Second, during task‐based vocal‐sound processing, we observed voice‐sensitive responses in the AC and IFC, whereas intensity processing was restricted to distinct AC regions. Third, the IFC flexibly adapted to the task relevance of the vocal sounds, being active only when the voice dimension was task relevant. Fourth and finally, connectivity modeling revealed that vocal signals, independent of their task relevance, provided significant input to bilateral AC. However, only when attention was on the voice dimension did we find significant modulations of auditory‐frontal connections. Our findings suggest that an integrated auditory‐frontal network is essential for behaviorally relevant vocal‐sound processing. The IFC seems to be an important hub of the extended voice network when representing higher‐order vocal objects and guiding goal‐directed behavior.


Updated: 2020-12-08
