Journal of Biomedical Informatics (IF 4.0), Pub Date: 2020-10-22, DOI: 10.1016/j.jbi.2020.103607
Hao Liu, Yehoshua Perl, James Geller

|

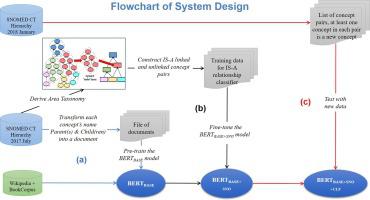

The comprehensive modeling and hierarchical positioning of a new concept in an ontology relies heavily on its set of proper subsumption relationships (IS-As) to other concepts. Identifying a concept’s IS-A relationships is a laborious task that requires curators to have both domain knowledge and terminology skills. In this work, we propose a method, based on the language representation model BERT, to automatically predict the presence of IS-A relationships between a new concept and pre-existing concepts. The method converts the neighborhood network of a concept into “sentences” and harnesses BERT’s Next Sentence Prediction (NSP) capability, which predicts whether two sentences are adjacent. To improve the method’s performance, we refined the training data using an ontology summarization technique. We trained our model on the two largest hierarchies of the July 2017 release of SNOMED CT and applied it to predicting the parents of new concepts added in the January 2018 release. The method achieved an average F1 score of 0.88, and the ontology summarization technique improved the average recall slightly, from 0.94 to 0.96.
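The idea of turning a concept's neighborhood into "sentences" for a sentence-pair classifier can be illustrated with a minimal sketch. The abstract does not specify the exact serialization or the negative-sampling scheme, so everything here is an assumption made for illustration: the toy hierarchy `IS_A`, the helper names (`neighborhood_sentence`, `build_pairs`), the sentence template, and the choice of one random non-ancestor as the negative example for each concept.

```python
import random

# Toy IS-A hierarchy (child -> parents). Hypothetical data, not taken from SNOMED CT.
IS_A = {
    "Bacterial pneumonia": ["Pneumonia"],
    "Viral pneumonia": ["Pneumonia"],
    "Pneumonia": ["Lung disease"],
    "Asthma": ["Lung disease"],
    "Lung disease": ["Disease"],
    "Disease": [],
}


def children_of(concept):
    """Return the direct children of a concept, sorted for determinism."""
    return sorted(c for c, parents in IS_A.items() if concept in parents)


def ancestors_of(concept):
    """Return all direct and indirect parents of a concept."""
    seen = set()
    stack = list(IS_A[concept])
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(IS_A[node])
    return seen


def neighborhood_sentence(concept, exclude=None):
    """Serialize a concept's parents and children into one text 'sentence'.

    `exclude` hides the concept being placed, so the answer is not leaked.
    The template is an assumption, not the paper's format.
    """
    parents = IS_A[concept]
    kids = [k for k in children_of(concept) if k != exclude]
    text = concept
    if parents:
        text += " is a " + ", ".join(parents)
    if kids:
        text += " ; subsumes " + ", ".join(kids)
    return text


def build_pairs(seed=0):
    """Build (sentence A, sentence B, is_a) training triples.

    is_a = 1 when B describes a true parent of A, else 0.
    """
    rng = random.Random(seed)
    pairs = []
    for child, parents in IS_A.items():
        if not parents:
            continue  # the root has no IS-A link to learn from
        for parent in parents:
            pairs.append((child, neighborhood_sentence(parent, exclude=child), 1))
        # Assumed negative sampling: one random concept that is not an ancestor.
        non_parents = sorted(set(IS_A) - {child} - ancestors_of(child))
        negative = rng.choice(non_parents)
        pairs.append((child, neighborhood_sentence(negative, exclude=child), 0))
    return pairs


if __name__ == "__main__":
    for sent_a, sent_b, is_a in build_pairs():
        print(is_a, "|", sent_a, "|", sent_b)
```

Triples of this shape could then be tokenized as sentence A and sentence B and used to fine-tune an NSP-style classifier, for example the `BertForNextSentencePrediction` class in the Hugging Face `transformers` library. Note that this class uses label 0 for "B follows A", so the `is_a` flag would need to be inverted before training.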

Article title: Concept placement using BERT trained by transforming and summarizing biomedical ontology structure
