
Improving the accessibility and transferability of machine learning algorithms for identification of animals in camera trap images: MLWIC2

Ecology and Evolution (IF 2.3), Pub Date: 2020-09-16, DOI: 10.1002/ece3.6692

Michael A Tabak (1, 2), Mohammad S Norouzzadeh (3), David W Wolfson (4), Erica J Newton (5), Raoul K Boughton (6), Jacob S Ivan (7), Eric A Odell (7), Eric S Newkirk (7), Reesa Y Conrey (7), Jennifer Stenglein (8), Fabiola Iannarilli (9), John Erb (10), Ryan K Brook (11), Amy J Davis (12), Jesse Lewis (13), Daniel P Walsh (14), James C Beasley (15), Kurt C VerCauteren (16), Jeff Clune (17), Ryan S Miller (18)



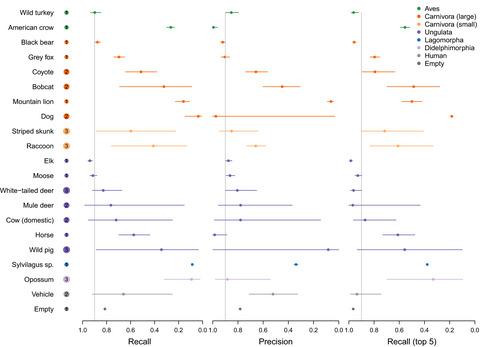

Motion‐activated wildlife cameras (or “camera traps”) are frequently used to remotely and noninvasively observe animals. The vast number of images collected from camera trap projects has prompted some biologists to employ machine learning algorithms to automatically recognize species in these images, or at least filter out images that do not contain animals. These approaches are often limited by model transferability, as a model trained to recognize species from one location might not work as well for the same species in different locations. Furthermore, these methods often require advanced computational skills, making them inaccessible to many biologists. We used 3 million camera trap images from 18 studies in 10 states across the United States of America to train two deep neural networks, one that recognizes 58 species, the “species model,” and one that determines if an image is empty or if it contains an animal, the “empty‐animal model.” Our species model and empty‐animal model had accuracies of 96.8% and 97.3%, respectively. Furthermore, the models performed well on some out‐of‐sample datasets, as the species model had 91% accuracy on species from Canada (accuracy range 36%–91% across all out‐of‐sample datasets) and the empty‐animal model achieved an accuracy of 91%–94% on out‐of‐sample datasets from different continents. Our software addresses some of the limitations of using machine learning to classify images from camera traps. By including many species from several locations, our species model is potentially applicable to many camera trap studies in North America. We also found that our empty‐animal model can facilitate removal of images without animals globally.
We provide the trained models in an R package (MLWIC2: Machine Learning for Wildlife Image Classification in R), which contains Shiny Applications that allow scientists with minimal programming experience to use trained models and train new models in six neural network architectures with varying depths.
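The abstract reports accuracy figures and describes using the empty‐animal model to remove images without animals. The following is a minimal Python sketch of that downstream step, not MLWIC2's own code: it assumes the model's output has already been exported as one predicted label and confidence per image, and that "accuracy" means the fraction of images whose predicted label matches the true label. The `Prediction` record, the `"empty"`/`"animal"` label strings, the helper names, and the 0.95 threshold are all hypothetical and are not part of the MLWIC2 package.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    filename: str
    label: str          # hypothetical label names: "empty" or "animal"
    confidence: float   # model probability for `label`, in [0, 1]


def split_empty(predictions, threshold=0.95):
    """Split images into (to_remove, to_review).

    Only images predicted "empty" with confidence >= threshold are flagged
    for removal; everything else is kept for human review.
    """
    to_remove, to_review = [], []
    for p in predictions:
        if p.label == "empty" and p.confidence >= threshold:
            to_remove.append(p.filename)
        else:
            to_review.append(p.filename)
    return to_remove, to_review


def accuracy(predicted, true):
    """Fraction of images whose predicted label equals the true label."""
    if not true or len(predicted) != len(true):
        raise ValueError("label lists must be non-empty and the same length")
    correct = sum(p == t for p, t in zip(predicted, true))
    return correct / len(true)


if __name__ == "__main__":
    preds = [
        Prediction("img1.jpg", "empty", 0.99),
        Prediction("img2.jpg", "animal", 0.90),
        Prediction("img3.jpg", "empty", 0.60),
    ]
    remove, review = split_empty(preds)
    print(remove)  # ['img1.jpg']
    print(review)  # ['img2.jpg', 'img3.jpg']
```

A conservative threshold trades some missed empty images for fewer animal images being discarded by mistake; a project would need to choose it against its own labeled validation images.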


Updated: 2020-10-12


Beijing Public Security Network Filing No. 11010802027423
