Interpretability of machine learning‐based prediction models in healthcare

WIREs Data Mining and Knowledge Discovery (IF 6.4), Pub Date: 2020-06-29, DOI: 10.1002/widm.1379. Authors: Gregor Stiglic (1, 2), Primoz Kocbek (1), Nino Fijacko (1), Marinka Zitnik (3), Katrien Verbert (4), Leona Cilar (1)

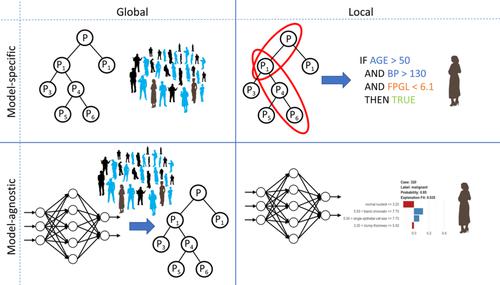

There is a need to ensure that machine learning (ML) models are interpretable. Higher interpretability means that end‐users can more easily understand and explain a model's future predictions. Further, interpretable ML models allow healthcare experts to make reasonable, data‐driven, personalized decisions that can ultimately lead to a higher quality of service in healthcare. Generally, interpretability approaches can be classified into two groups: the first focuses on personalized interpretation (local interpretability), while the second summarizes prediction models at the population level (global interpretability). Alternatively, interpretability methods can be grouped into model‐specific techniques, which are designed to interpret predictions generated by a specific model, such as a neural network, and model‐agnostic approaches, which provide easy‐to‐understand explanations of predictions made by any ML model. Here, we give an overview of interpretability approaches for structured data and provide examples of practical interpretability of ML in different areas of healthcare, including predicting health‐related outcomes, optimizing treatments, and improving the efficiency of screening for specific conditions. Finally, we outline future directions for interpretable ML and highlight the importance of developing algorithmic solutions that can enable ML‐driven decision making in high‐stakes healthcare problems.
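
The abstract distinguishes local from global interpretability and notes that model‐agnostic methods can explain any ML model. As a hedged illustration (not the authors' method), the minimal Python sketch below treats a model as an opaque function and applies two generic model‐agnostic techniques: permutation importance as a global explanation over a whole cohort, and single‐feature substitution against a reference value (the cohort mean) as a local explanation for one patient. The risk model `toy_risk_model`, its coefficients, the three unnamed features, and the synthetic cohort are hypothetical assumptions made only for this example.

```python
import random
from typing import Callable, List, Sequence

# A "model" here is any function mapping one feature vector to a risk score,
# so the explanation code never looks inside it (model-agnostic).
Model = Callable[[Sequence[float]], float]


def toy_risk_model(x: Sequence[float]) -> float:
    """Hypothetical black-box risk score: uses features 0 and 1, ignores feature 2."""
    return 0.8 * x[0] + 0.2 * x[1]


def mean_squared_error(model: Model, X: List[List[float]], y: List[float]) -> float:
    return sum((model(row) - target) ** 2 for row, target in zip(X, y)) / len(y)


def permutation_importance(model: Model, X: List[List[float]], y: List[float],
                           n_repeats: int = 5, seed: int = 0) -> List[float]:
    """Global explanation: average increase in error when one feature column is
    shuffled, which breaks that feature's link to the outcome across the cohort."""
    rng = random.Random(seed)
    baseline = mean_squared_error(model, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            increases.append(mean_squared_error(model, X_perm, y) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


def local_contributions(model: Model, x: List[float], reference: List[float]) -> List[float]:
    """Local explanation for one patient: change in the prediction when each feature
    is replaced, one at a time, by a reference value (here the cohort mean)."""
    prediction = model(x)
    contributions = []
    for j in range(len(x)):
        x_ref = list(x)
        x_ref[j] = reference[j]
        contributions.append(prediction - model(x_ref))
    return contributions


# Hypothetical synthetic cohort: 200 "patients" with three features in [0, 1).
rng = random.Random(42)
X = [[rng.random() for _ in range(3)] for _ in range(200)]
y = [toy_risk_model(row) for row in X]
means = [sum(col) / len(col) for col in zip(*X)]

global_importance = permutation_importance(toy_risk_model, X, y)
patient_explanation = local_contributions(toy_risk_model, X[0], means)
print("global:", global_importance)
print("local (patient 0):", patient_explanation)
```

Because the toy model ignores feature 2, both explanations assign it zero weight. For a linear additive model like this one, each local contribution equals the coefficient times the patient's deviation from the cohort mean; for non‐linear models, substituting one feature at a time ignores feature interactions, which is one reason more elaborate local methods exist.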

Updated: 2020-06-29