Computer-Aided Design (IF 3.0), Pub Date: 2020-06-24, DOI: 10.1016/j.cad.2020.102906, Ramin Bostanabad


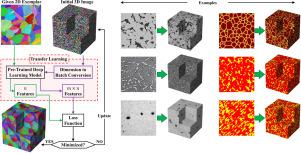

Computational analysis, modeling, and prediction of many phenomena in materials require a three-dimensional (3D) microstructure sample that embodies the salient features of the material system under study. Since acquiring 3D microstructural images is expensive and time-consuming, an alternative is to extrapolate a 2D image (the exemplar) into a virtual 3D sample and then use that 3D image in analysis and design. In this paper, we introduce an efficient and novel approach based on transfer learning to accomplish this extrapolation-based reconstruction for a wide range of microstructures, including alloys, porous media, and polycrystalline materials. We cast the reconstruction task as an optimization problem in which a random 3D image is iteratively refined until its microstructural features match those of the exemplar. VGG19, a pre-trained deep convolutional neural network, forms the backbone of this optimization: it is used to extract the microstructural features and to construct the objective function. By augmenting the architecture of VGG19 with a permutation operator, we enable it to take 3D images as inputs and generate a collection of 2D features that approximate an underlying 3D feature map. We demonstrate our approach with nine examples covering various microstructure samples and image types (grayscale, binary, and RGB). As measured by independent statistical metrics, the reconstructed 3D samples closely match the statistics of the corresponding 2D exemplars.
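The idea of feeding a 3D image to a 2D network can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the abstract does not specify how the permutation operator is realized, so the sketch assumes it amounts to re-ordering the volume's axes so that slices perpendicular to each direction become batches of 2D images. The names `slices_along_axes`, `toy_features`, and `toy_objective` are hypothetical, and `toy_features` (per-slice mean and standard deviation) is only a stand-in for the VGG19 feature maps used in the paper.

```python
import numpy as np


def slices_along_axes(volume):
    """Return three batches of 2D slices, one batch per axis of a 3D volume.

    Assumption: the permutation operator is modeled here as an axis
    re-ordering. Moving axis k to the front makes batch[i] the i-th slice
    perpendicular to axis k.
    """
    if volume.ndim != 3:
        raise ValueError("expected a 3D array")
    return [np.moveaxis(volume, axis, 0) for axis in range(3)]


def toy_features(images):
    """Hypothetical stand-in for CNN features: mean and std of each 2D image."""
    flat = images.reshape(images.shape[0], -1)
    return np.stack([flat.mean(axis=1), flat.std(axis=1)], axis=1)


def toy_objective(volume, exemplar):
    """Mismatch between slice features of the volume and exemplar features."""
    target = toy_features(exemplar[np.newaxis])[0]
    loss = 0.0
    for batch in slices_along_axes(volume):
        loss += np.mean((toy_features(batch) - target) ** 2)
    return float(loss)


# Usage: score a random starting volume against a random 2D exemplar.
rng = np.random.default_rng(0)
exemplar = rng.random((16, 16))
volume = rng.random((16, 16, 16))
print(toy_objective(volume, exemplar))
```

In a reconstruction loop, an optimizer would repeatedly update the voxels of `volume` to reduce such an objective. With a real pre-trained network, the gradients would come from automatic differentiation rather than from this toy statistic.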

Title (translated from the Chinese): Reconstruction of 3D Microstructures from 2D Images via Transfer Learning
