Computers in Biology and Medicine (IF 7.0), Pub Date: 2020-06-17, DOI: 10.1016/j.compbiomed.2020.103865
Graziani M, Andrearczyk V, Marchand-Maillet S, Müller H

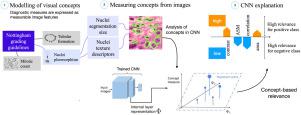

Explainability in deep learning is often pursued through gradient-based approaches that attribute the network output to perturbations of the input pixels. However, in some applications the relevance of individual pixels is difficult to relate to meaningful image features, e.g. diagnostic measures in medical imaging. The framework described in this paper shifts the attribution focus from pixel values to user-defined concepts. By checking whether certain diagnostic measures are present in the learned representations, experts can explain and trust the network output. Being post-hoc, our method does not alter the network training and can be easily plugged into state-of-the-art convolutional networks. This paper presents the main components of the framework for attribution to concepts and introduces a spatial pooling operation on top of the feature maps to obtain a solid interpretability analysis. Furthermore, regularized regression is analyzed as a remedy for regression overfitting in high-dimensional latent spaces. The versatility of the proposed approach is shown by experiments on two medical applications, histopathology and retinopathy, and on one non-medical task, handwritten digit classification. The obtained explanations are in line with clinicians' guidelines and complementary to widely used visualization tools such as saliency maps.
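The two technical ingredients named in the abstract, spatial pooling of feature maps and regularized regression of a concept measure, can be illustrated with a minimal sketch. Everything here is an assumption made for illustration and is not taken from the paper: the feature maps are synthetic random arrays rather than CNN activations, global average pooling stands in for the spatial pooling operation, closed-form ridge regression stands in for the regularized regression, and all function and variable names are hypothetical.

```python
import numpy as np


def global_average_pool(feature_maps):
    """Collapse (N, H, W, C) feature maps to (N, C) by averaging over space."""
    return feature_maps.mean(axis=(1, 2))


def fit_ridge(X, y, alpha=1.0):
    """Closed-form ridge regression; returns (weights, intercept)."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    # Solve (Xc^T Xc + alpha * I) w = Xc^T yc; the intercept is not penalized.
    w = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(X.shape[1]), Xc.T @ yc)
    return w, y_mean - x_mean @ w


def r_squared(y_true, y_pred):
    """Coefficient of determination of the predictions."""
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return 1.0 - ss_res / ss_tot


# Synthetic stand-in for the activations of one convolutional layer (assumption).
rng = np.random.default_rng(0)
n_images, height, width, channels = 200, 4, 4, 64
feature_maps = rng.normal(size=(n_images, height, width, channels))

# Pooling reduces each image to one vector of length `channels`, so the
# regression has 64 inputs instead of 4 * 4 * 64 = 1024.
pooled = global_average_pool(feature_maps)

# Hypothetical concept measure that is linearly encoded in the pooled features.
true_direction = rng.normal(size=channels)
concept = pooled @ true_direction + 0.01 * rng.normal(size=n_images)

# Fit on one part of the data and check generalization on the rest.
train, test = slice(0, 150), slice(150, None)
weights, intercept = fit_ridge(pooled[train], concept[train], alpha=1e-2)
concept_direction = weights / np.linalg.norm(weights)
test_r2 = r_squared(concept[test], pooled[test] @ weights + intercept)
print(f"held-out R^2: {test_r2:.3f}")
```

In this toy setting a high held-out R^2 indicates that the concept measure can be linearly recovered from the pooled representation, which is the kind of check the abstract describes. With real activations the choice of layer, pooling, and regularization strength would all need to be validated.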

Concept attribution: Explaining CNN decisions to physicians.
