Pattern Recognition Letters (IF 3.9), Pub Date: 2020-05-13, DOI: 10.1016/j.patrec.2020.05.017, Masayuki Tanaka

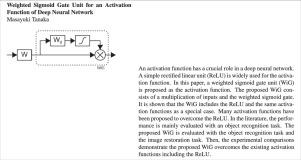

An activation function plays a crucial role in a deep neural network. The simple rectified linear unit (ReLU) is widely used as the activation function. In this paper, a weighted sigmoid gate unit (WiG) is proposed as an activation function. The proposed WiG multiplies its inputs by a weighted sigmoid gate. It is shown that the WiG includes the ReLU and similar activation functions as special cases. Many activation functions have been proposed to outperform the ReLU, but in the literature their performance has mainly been evaluated on object recognition tasks. The proposed WiG is evaluated on both an object recognition task and an image restoration task. Experimental comparisons demonstrate that the proposed WiG outperforms existing activation functions, including the ReLU.
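To make the gating idea concrete, here is a minimal NumPy sketch. It assumes the gate is the logistic sigmoid of an affine map of the input, so that y = x ⊙ σ(Wx + b) for a fully connected layer; the paper's exact parameterization (for example, convolutional gate weights in a CNN) may differ, and the names `wig`, `W`, `b` and the scale `a` are illustrative, not taken from the author's code. The sketch also checks two special cases consistent with the abstract's claim: with W = I and b = 0 the unit reduces to x·σ(x) (SiLU, i.e. Swish with β = 1), and with W = a·I for a large scale a the gate approaches a step function, so the output approaches ReLU.

```python
import numpy as np


def sigmoid(z):
    # Numerically stable logistic sigmoid: exp(-|z|) lies in (0, 1], so it never overflows.
    e = np.exp(-np.abs(z))
    return np.where(z >= 0, 1.0 / (1.0 + e), e / (1.0 + e))


def wig(x, W, b):
    """Hypothetical weighted sigmoid gate: y = x * sigmoid(x @ W.T + b), element-wise.

    x: input of shape (n,) or (batch, n); W: gate weights (n, n); b: gate bias (n,).
    For a 1-D x, x @ W.T equals W @ x.
    """
    return x * sigmoid(x @ W.T + b)


n = 4
x = np.array([-2.0, -0.5, 0.5, 2.0])
b = np.zeros(n)

# Special case 1: W = I gives x * sigmoid(x), i.e. SiLU / Swish with beta = 1.
silu = wig(x, np.eye(n), b)

# Special case 2: W = a * I with a large a makes sigmoid(a * x) close to a step
# function, so x * sigmoid(a * x) is close to max(x, 0), i.e. ReLU.
a = 50.0
relu_approx = wig(x, a * np.eye(n), b)
relu = np.maximum(x, 0.0)

print("SiLU:", silu)
print("max |WiG(a*I) - ReLU|:", np.max(np.abs(relu_approx - relu)))
```

With a general (learned) W, each gate value depends on all input components rather than only on the element it multiplies, which is what distinguishes this form from a purely element-wise activation.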

Title: Weighted sigmoid gate unit for an activation function of deep neural networks
