Detection and Synthesis of Full‐Body Environment Interactions for Virtual Humans

Computer Graphics Forum (IF 2.7), Pub Date: 2019-07-12, DOI: 10.1111/cgf.13802. A. Juarez‐Perez, M. Kallmann

Computer Graphics Forum ( IF 2.7 ) Pub Date : 2019-07-12 , DOI: 10.1111/cgf.13802 A. Juarez‐Perez 1, 2 , M. Kallmann 1

Affiliation

|

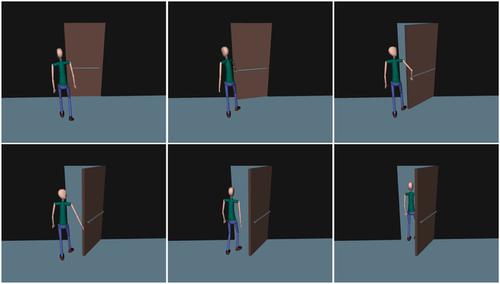

We present a new methodology for enabling virtual humans to autonomously detect and perform complex full‐body interactions with their environments. Given a parameterized walking controller and a set of motion‐captured example interactions, our method detects when interactions can occur and coordinates the detected upper‐body interaction with the walking controller in order to achieve full‐body mobile interactions in similar situations. Our approach is based on learning spatial coordination features from the example motions and on associating body‐environment proximity information with the body configurations of each performed action. Body configurations become the input to a regression system, which in turn generates new interactions for different situations in similar environments. The regression model is capable of selecting, encoding and replicating key spatial strategies with respect to body coordination and management of environment constraints, as well as determining the correct moment in time and space for starting an interaction. As a result, we obtain an interactive controller able to detect and synthesize coordinated full‐body motions for a variety of complex interactions requiring body mobility. Our results achieve complex interactions, such as opening doors and drawing on a wide whiteboard. The presented approach introduces the concept of learning interaction coordination models that can be applied on top of any given walking controller. The resulting method is simple and flexible, handles the detection of possible interactions, and is suitable for real‐time applications.
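The abstract does not specify the regression model or the feature set, so the following is only a minimal sketch of the general idea, not the authors' method. It assumes a Gaussian kernel-weighted average (Nadaraya-Watson regression) over example pairs of proximity features and body configurations, plus a simple distance threshold for deciding whether an interaction can start. All names, features, values, and thresholds (`EXAMPLES`, `regress_configuration`, `can_start_interaction`, `bandwidth`, `max_distance`) are hypothetical.

```python
import math

# Hypothetical example data: each entry pairs a proximity feature vector
# (distance to the object in meters, relative angle in radians) with a
# body configuration (shoulder angle, elbow angle in radians).
# These values are illustrative only; they do not come from the paper.
EXAMPLES = [
    ((0.4, 0.0), (0.5, 1.0)),
    ((0.6, 0.0), (0.7, 0.6)),
]


def gaussian_weight(query, feature, bandwidth):
    """Gaussian kernel weight between a query and an example feature."""
    d2 = sum((q - f) ** 2 for q, f in zip(query, feature))
    return math.exp(-d2 / (2.0 * bandwidth ** 2))


def regress_configuration(query, examples, bandwidth=0.25):
    """Blend example configurations with kernel weights (an assumed model)."""
    weights = [gaussian_weight(query, feat, bandwidth) for feat, _ in examples]
    total = sum(weights)
    if total == 0.0:
        raise ValueError("query is too far from every example")
    dim = len(examples[0][1])
    return [
        sum(w * cfg[i] for w, (_, cfg) in zip(weights, examples)) / total
        for i in range(dim)
    ]


def can_start_interaction(query, examples, max_distance=0.3):
    """Assumed detection rule: the query lies near some example feature."""
    return any(math.dist(query, feat) <= max_distance for feat, _ in examples)


if __name__ == "__main__":
    query = (0.5, 0.0)
    if can_start_interaction(query, EXAMPLES):
        print(regress_configuration(query, EXAMPLES))
```

In this sketch, the predicted configuration would drive the upper body while a separate walking controller positions the character. How the two are actually coordinated in the paper is not described in the abstract.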


Updated: 2019-07-12

