

O‐MedAL: Online active deep learning for medical image analysis

WIREs Data Mining and Knowledge Discovery (IF 6.4), Pub Date: 2020-01-27, DOI: 10.1002/widm.1353

Asim Smailagic 1, Pedro Costa 2, Alex Gaudio 1, Kartik Khandelwal 1, Mostafa Mirshekari 3, Jonathon Fagert 3, Devesh Walawalkar 1, Susu Xu 3, Adrian Galdran 2, Pei Zhang 1, Aurélio Campilho 2, 4, Hae Young Noh 3

WIREs Data Mining and Knowledge Discovery ( IF 6.4 ) Pub Date : 2020-01-27 , DOI: 10.1002/widm.1353 Asim Smailagic 1 , Pedro Costa 2 , Alex Gaudio 1 , Kartik Khandelwal 1 , Mostafa Mirshekari 3 , Jonathon Fagert 3 , Devesh Walawalkar 1 , Susu Xu 3 , Adrian Galdran 2 , Pei Zhang 1 , Aurélio Campilho 2, 4 , Hae Young Noh 3

Affiliation

|

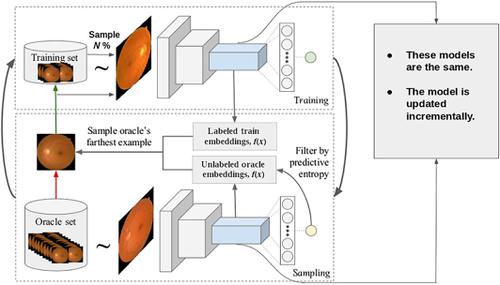

Active learning (AL) methods create an optimized labeled training set from unlabeled data. We introduce a novel online active deep learning method for medical image analysis. We extend our MedAL AL framework to present new results in this paper. A novel sampling method queries the unlabeled examples that maximize the average distance to all training set examples. Our online method enhances performance of its underlying baseline deep network. These novelties contribute to significant performance improvements, including improving the model's underlying deep network accuracy by 6.30%, using only 25% of the labeled dataset to achieve baseline accuracy, reducing backpropagated images during training by as much as 67%, and demonstrating robustness to class imbalance in binary and multiclass tasks.
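The sampling rule described in the abstract (query the unlabeled examples that maximize the average distance to all training set examples) can be illustrated with a minimal sketch. This is not the authors' implementation: the abstract does not state the distance metric or the feature space, so the Euclidean distance, the use of precomputed feature vectors, and the names `select_queries`, `unlabeled`, `labeled`, and `k` are all assumptions made for illustration.

```python
import numpy as np


def select_queries(unlabeled, labeled, k=1):
    """Pick the k unlabeled points with the largest mean distance to the labeled set.

    Assumptions (not taken from the paper): inputs are 2-D arrays of feature
    vectors with shape (n_points, n_features), and distance is Euclidean.
    """
    unlabeled = np.asarray(unlabeled, dtype=float)
    labeled = np.asarray(labeled, dtype=float)

    # Pairwise differences via broadcasting: (n_unlabeled, n_labeled, n_features).
    diffs = unlabeled[:, None, :] - labeled[None, :, :]
    # Euclidean distance for every (unlabeled, labeled) pair.
    dists = np.linalg.norm(diffs, axis=2)
    # Average distance from each unlabeled point to the whole labeled set.
    avg_dist = dists.mean(axis=1)

    # Indices sorted by descending average distance; keep the top k.
    order = np.argsort(-avg_dist, kind="stable")
    return order[:k], avg_dist


if __name__ == "__main__":
    # Toy 2-D features standing in for image embeddings (hypothetical data).
    labeled = [[0.0, 0.0], [1.0, 0.0]]
    unlabeled = [[0.5, 0.0], [5.0, 5.0], [2.0, 0.0]]
    picked, scores = select_queries(unlabeled, labeled, k=2)
    print(picked, scores)
```

In an active learning loop, the selected indices would be sent for labeling, moved from the unlabeled pool into the training set, and the selection repeated on the remaining pool.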


Updated: 2020-01-27

