
Toward a unified framework for interpreting machine-learning models in neuroimaging.

Nature Protocols (IF 13.1), Pub Date: 2020-03-18, DOI: 10.1038/s41596-019-0289-5

Lada Kohoutová, Juyeon Heo, Sungmin Cha, Sungwoo Lee, Taesup Moon, Tor D Wager, Choong-Wan Woo

Nature Protocols ( IF 13.1 ) Pub Date : 2020-03-18 , DOI: 10.1038/s41596-019-0289-5 Lada Kohoutová 1, 2 , Juyeon Heo 3 , Sungmin Cha 3 , Sungwoo Lee 1, 2 , Taesup Moon 3 , Tor D Wager 4, 5, 6 , Choong-Wan Woo 1, 2

Affiliation

|

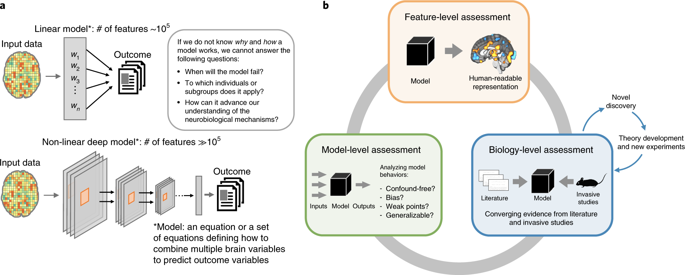

Machine learning is a powerful tool for creating computational models relating brain function to behavior, and its use is becoming widespread in neuroscience. However, these models are complex and often hard to interpret, making it difficult to evaluate their neuroscientific validity and contribution to understanding the brain. For neuroimaging-based machine-learning models to be interpretable, they should (i) be comprehensible to humans, (ii) provide useful information about what mental or behavioral constructs are represented in particular brain pathways or regions, and (iii) demonstrate that they are based on relevant neurobiological signal, not artifacts or confounds. In this protocol, we introduce a unified framework that consists of model-, feature- and biology-level assessments to provide complementary results that support the understanding of how and why a model works. Although the framework can be applied to different types of models and data, this protocol provides practical tools and examples of selected analysis methods for a functional MRI dataset and multivariate pattern-based predictive models. A user of the protocol should be familiar with basic programming in MATLAB or Python. This protocol will help build more interpretable neuroimaging-based machine-learning models, contributing to the cumulative understanding of brain mechanisms and brain health. Although the analyses provided here constitute a limited set of tests and take a few hours to days to complete, depending on the size of data and available computational resources, we envision the process of annotating and interpreting models as an open-ended process, involving collaborative efforts across multiple studies and laboratories.
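To make the distinction between model-level and feature-level assessment concrete, here is a minimal sketch in Python. It is not taken from the protocol: the synthetic data, the mean-difference classifier, and the helper names (`make_data`, `fit`, `cv_accuracy`, `bootstrap_z`) are all assumptions chosen for illustration. Cross-validated accuracy stands in for a model-level check, and bootstrap stability of the weights stands in for a feature-level check. A biology-level assessment would need real neuroimaging data and is not sketched here.

```python
import random
import statistics


def make_data(n=80, n_features=10, n_informative=2, seed=0):
    """Synthetic stand-in for voxel data; only the first features carry signal."""
    rng = random.Random(seed)
    X, y = [], []
    for i in range(n):
        label = i % 2
        row = [rng.gauss(0, 1) for _ in range(n_features)]
        for j in range(n_informative):
            row[j] += 1.5 if label else -1.5
        X.append(row)
        y.append(label)
    return X, y


def fit(X, y):
    """Mean-difference linear classifier: w = mean(class 1) - mean(class 0)."""
    p = len(X[0])
    m1 = [statistics.fmean([x[j] for x, t in zip(X, y) if t == 1]) for j in range(p)]
    m0 = [statistics.fmean([x[j] for x, t in zip(X, y) if t == 0]) for j in range(p)]
    w = [a - b for a, b in zip(m1, m0)]
    # The bias places the decision boundary midway between the class means.
    b = -sum(wj * (a + c) / 2 for wj, a, c in zip(w, m1, m0))
    return w, b


def predict(w, b, x):
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b > 0 else 0


def cv_accuracy(X, y, k=5):
    """Model-level check (illustrative): k-fold cross-validated accuracy."""
    n = len(X)
    correct = 0
    for fold in range(k):
        test_idx = set(range(fold, n, k))
        X_train = [X[i] for i in range(n) if i not in test_idx]
        y_train = [y[i] for i in range(n) if i not in test_idx]
        w, b = fit(X_train, y_train)
        correct += sum(predict(w, b, X[i]) == y[i] for i in test_idx)
    return correct / n


def bootstrap_z(X, y, n_boot=200, seed=1):
    """Feature-level check (illustrative): bootstrap mean / SD of each weight."""
    rng = random.Random(seed)
    n, p = len(X), len(X[0])
    samples = []
    while len(samples) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        y_boot = [y[i] for i in idx]
        if len(set(y_boot)) < 2:  # both classes are needed to fit
            continue
        w, _ = fit([X[i] for i in idx], y_boot)
        samples.append(w)
    z = []
    for j in range(p):
        column = [s[j] for s in samples]
        z.append(statistics.fmean(column) / statistics.stdev(column))
    return z


X, y = make_data()
acc = cv_accuracy(X, y)
z = bootstrap_z(X, y)
print(f"cross-validated accuracy: {acc:.2f}")
print("bootstrap z per feature:", [round(v, 1) for v in z])
```

In this toy setting, the features with large absolute bootstrap z-scores should be the ones into which signal was injected. With real data, such a map would still need to be checked against confounds and prior neuroscientific knowledge before being interpreted.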


Updated: 2020-03-18

