Nature Machine Intelligence (IF 18.8), Pub Date: 2020-01-17, DOI: 10.1038/s42256-019-0138-9

Scott M Lundberg 1,2, Gabriel Erion 2,3, Hugh Chen 2, Alex DeGrave 2,3, Jordan M Prutkin 4, Bala Nair 5,6, Ronit Katz 7, Jonathan Himmelfarb 7, Nisha Bansal 7, Su-In Lee 2

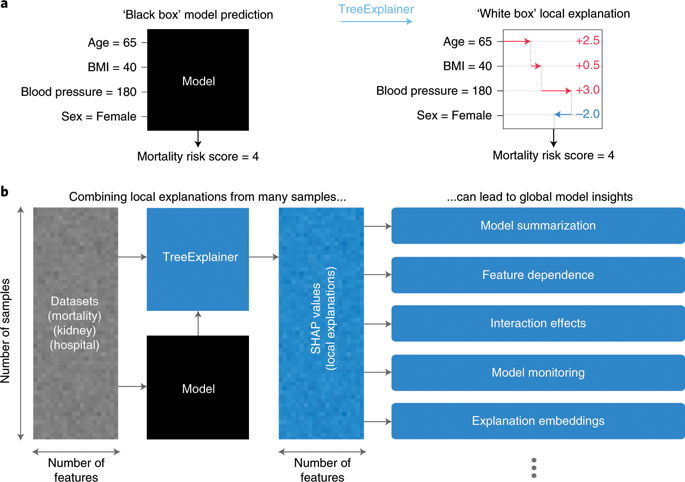

Tree-based machine learning models such as random forests, decision trees and gradient boosted trees are popular nonlinear predictive models, yet comparatively little attention has been paid to explaining their predictions. Here we improve the interpretability of tree-based models through three main contributions. (1) A polynomial time algorithm to compute optimal explanations based on game theory. (2) A new type of explanation that directly measures local feature interaction effects. (3) A new set of tools for understanding global model structure based on combining many local explanations of each prediction. We apply these tools to three medical machine learning problems and show how combining many high-quality local explanations allows us to represent global structure while retaining local faithfulness to the original model. These tools enable us to (1) identify high-magnitude but low-frequency nonlinear mortality risk factors in the US population, (2) highlight distinct population subgroups with shared risk characteristics, (3) identify nonlinear interaction effects among risk factors for chronic kidney disease and (4) monitor a machine learning model deployed in a hospital by identifying which features are degrading the model’s performance over time. Given the popularity of tree-based machine learning models, these improvements to their interpretability have implications across a broad set of domains.
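The game-theoretic explanations mentioned in the abstract are Shapley value attributions. As a rough illustration of what such a local explanation is, and of how many local explanations can be aggregated into a global summary, here is a minimal sketch. It is not the paper's polynomial-time algorithm: it enumerates every feature subset, which is exponential in the number of features and only workable for toy inputs. The toy tree, the feature names (`age`, `bp`), the background rows, and the choice to average missing features over a background set are all assumptions made for this example.

```python
from itertools import combinations
from math import factorial


def tree_predict(x):
    """Hypothetical hand-written decision tree over (age, bp); not from the paper."""
    age, bp = x
    if age > 50:
        return 0.8 if bp > 140 else 0.4
    return 0.1


def value(subset, x, background):
    """Mean model output when features in `subset` are fixed to x and the
    remaining features are taken from each background row (an assumption)."""
    total = 0.0
    for row in background:
        mixed = [x[i] if i in subset else row[i] for i in range(len(x))]
        total += tree_predict(mixed)
    return total / len(background)


def shapley_values(x, background):
    """Brute-force Shapley values: weighted marginal contribution of each
    feature over all subsets of the other features (exponential time)."""
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for k in range(len(others) + 1):
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for s in combinations(others, k):
                with_i = value(set(s) | {i}, x, background)
                without_i = value(set(s), x, background)
                phi[i] += weight * (with_i - without_i)
    return phi


# Made-up background data, used both as the reference set and as the
# samples being explained.
background = [(30, 120), (45, 150), (60, 130), (70, 160)]

# One local explanation (a list of per-feature attributions) per sample.
local = [shapley_values(x, background) for x in background]

# A simple global summary: mean absolute attribution per feature.
global_importance = [
    sum(abs(phi[i]) for phi in local) / len(local) for i in range(2)
]

for x, phi in zip(background, local):
    print(x, [round(p, 4) for p in phi])
print("global importance:", [round(g, 4) for g in global_importance])
```

For each sample, the attributions sum to the model's prediction minus the mean prediction over the background rows, which is the sense in which a local explanation stays faithful to the original model. The mean absolute attribution is only one of several possible ways to combine local explanations into a global view.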

A preprint version of the article is available at arXiv.

From local explanations to global understanding with explainable AI for trees.

