International Journal of Computer Vision (IF 19.5), Pub Date: 2024-04-08, DOI: 10.1007/s11263-024-02046-2
Yuhang Li, Shikuang Deng, Xin Dong, Shi Gu


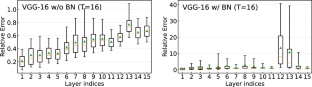

Spiking Neural Networks (SNNs), which originate from the neural behavior of biological systems, have been recognized as one of the next-generation neural network paradigms. Conventionally, SNNs can be obtained from pre-trained Artificial Neural Networks (ANNs) by replacing the non-linear activations with spiking neurons without changing the parameters. In this work, we argue that simply copying and pasting the weights of an ANN into an SNN inevitably results in activation mismatch, especially for ANNs trained with batch normalization (BN) layers. To tackle the activation mismatch issue, we first provide a theoretical analysis by decomposing the local layer-wise conversion error, and then use a second-order analysis to quantify how this error propagates through the layers. Motivated by these theoretical results, we propose a set of layer-wise parameter calibration algorithms that adjust the parameters to minimize the activation mismatch. To further remove the dependency on data, we propose a privacy-preserving conversion regime that distills synthetic data from the source ANN and uses it to calibrate the SNN. We perform extensive experiments with the proposed algorithms on modern architectures and large-scale tasks, including ImageNet classification and MS COCO detection. We demonstrate that our method handles SNN conversion and effectively preserves high accuracy even with only 32 time steps. For example, our calibration algorithms improve accuracy by up to 63% over baselines when converting MobileNet.
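The conversion and calibration ideas above can be illustrated on a single layer. The following is a minimal, hypothetical sketch, not the authors' algorithm. It assumes one fully connected ReLU layer with randomly generated stand-in weights, integrate-and-fire (IF) neurons with reset-by-subtraction and zero initial membrane potential, a layer-wise firing threshold set to the 99th percentile of the ANN activations, and a plain per-channel bias correction as the calibration step. The function names `if_layer_rate` and `convert_and_calibrate` are illustrative only. Copying the weights unchanged makes the SNN systematically underestimate the ReLU output (through spike-count quantization and clipping at the threshold), and shifting the bias by the measured mean mismatch partly compensates for it.

```python
import numpy as np


def if_layer_rate(current, threshold, T):
    """Integrate-and-fire neurons driven by a constant input current for T steps.

    Uses reset-by-subtraction and a zero initial membrane potential (the naive
    copy-and-paste setting). Returns spike count / T * threshold, i.e. the
    SNN's estimate of the corresponding ReLU activation.
    """
    v = np.zeros_like(current)       # membrane potentials
    spikes = np.zeros_like(current)  # spike counts per neuron
    for _ in range(T):
        v += current                 # integrate the (constant) input current
        fired = v >= threshold       # spike wherever the threshold is reached
        spikes += fired              # count the spikes
        v -= fired * threshold       # soft reset: subtract the threshold
    return spikes / T * threshold


def convert_and_calibrate(x, W, b, T=32, percentile=99.0):
    """Copy ANN weights into an IF layer, measure the mismatch, and shift the bias.

    x: (N, d_in) calibration inputs; W: (d_out, d_in) weights; b: (d_out,) bias.
    """
    ann_out = np.maximum(x @ W.T + b, 0.0)          # ANN ReLU activations (target)
    threshold = np.percentile(ann_out, percentile)  # assumed layer-wise threshold
    snn_out = if_layer_rate(x @ W.T + b, threshold, T)
    # Per-channel mean activation mismatch (ANN minus SNN) on the calibration batch.
    err_before = (ann_out - snn_out).mean(axis=0)
    # Hypothetical calibration step: add the mean mismatch to the SNN bias only.
    b_cal = b + err_before
    snn_cal = if_layer_rate(x @ W.T + b_cal, threshold, T)
    err_after = (ann_out - snn_cal).mean(axis=0)
    return threshold, snn_out, err_before, err_after


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((512, 64))               # stand-in calibration batch
    W = rng.standard_normal((16, 64)) / np.sqrt(64)  # stand-in "pre-trained" weights
    b = 0.1 * rng.standard_normal(16)
    threshold, snn_out, err_before, err_after = convert_and_calibrate(x, W, b)
    print("mean |mismatch| before:", np.abs(err_before).mean())
    print("mean |mismatch| after: ", np.abs(err_after).mean())
```

The bias shift only corrects the per-channel mean of the mismatch on one layer; the abstract's layer-wise algorithms are motivated by a second-order analysis of how errors propagate across layers, which this one-layer sketch does not model.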


Error-Aware Conversion from ANN to SNN via Post-training Parameter Calibration


Beijing Public Network Security Filing No. 11010802027423
