Abstract

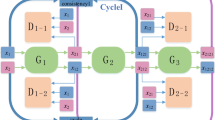

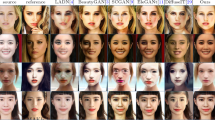

Makeup studies have recently attracted much attention in computer vision. Two typical tasks are makeup-invariant face verification and makeup transfer. Despite remarkable progress, both tasks remain challenging, especially on data in the wild. In this paper, we propose a disentangled feature learning strategy that fulfils both tasks in a single generative network. A makeup portrait can be decomposed into three components: makeup, identity and geometry (including expression, pose, etc.). We assume that the extracted image representation can be decomposed into a makeup code that captures the makeup style and an identity code that preserves the source identity. We treat the remaining variation factors as native structures of the source image that should be preserved, so a dense correspondence field is integrated into the network to preserve the geometry of the face. To encourage visually pleasing results after makeup transfer, we propose a cosmetic loss that learns makeup styles in a delicate manner. Finally, we build a new Cross-Makeup Face (CMF) benchmark dataset (https://github.com/ly-joy/Cross-Makeup-Face) of in-the-wild makeup portraits to push the frontiers of related research. Both visual and quantitative experimental results on four makeup datasets demonstrate the superiority of the proposed method.
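The idea of separating a representation into an identity code and a makeup code can be illustrated with a toy sketch. This is not the network described in the paper: the fixed split of a feature vector, the dimensions `ID_DIM` and `MAKEUP_DIM`, the helper names, and the use of cosine similarity for verification are all assumptions made here for illustration. The dense correspondence field and the cosmetic loss are not modelled.

```python
import math
import random

ID_DIM = 4      # hypothetical size of the identity code
MAKEUP_DIM = 2  # hypothetical size of the makeup code


def split_codes(feature):
    """Split a feature vector into (identity_code, makeup_code).

    Assumption: a learned encoder would produce these codes; here the
    vector is simply cut at a fixed index.
    """
    if len(feature) != ID_DIM + MAKEUP_DIM:
        raise ValueError("unexpected feature length")
    return feature[:ID_DIM], feature[ID_DIM:]


def transfer_makeup(source_feature, reference_feature):
    """Keep the source identity code and take the reference makeup code."""
    source_id, _ = split_codes(source_feature)
    _, reference_makeup = split_codes(reference_feature)
    return source_id + reference_makeup  # list concatenation


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def same_identity_score(feature_a, feature_b):
    """Compare identity codes only, ignoring the makeup codes."""
    id_a, _ = split_codes(feature_a)
    id_b, _ = split_codes(feature_b)
    return cosine_similarity(id_a, id_b)


# Random stand-ins for encoded portraits (illustrative values only).
rng = random.Random(0)
source = [rng.uniform(-1, 1) for _ in range(ID_DIM + MAKEUP_DIM)]
reference = [rng.uniform(-1, 1) for _ in range(ID_DIM + MAKEUP_DIM)]

# Makeup transfer: source identity combined with reference makeup.
transferred = transfer_makeup(source, reference)
```

In this toy setting, verification that compares only identity codes is unaffected by swapping the makeup code, which is the property a makeup-invariant verifier aims for; in a trained network the two codes would come from a learned encoder and the combined code would be decoded back into an image.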

Acknowledgements

This work was funded by the State Key Development Program (Grant No. 2016YFB1001001) and the National Natural Science Foundation of China (Grant Nos. 61622310, 61473289 and 61721004).

Additional information

Communicated by Li Liu, Matti Pietikäinen, Jie Qin, Jie Chen, Wanli Ouyang, Luc Van Gool.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Li, Y., Huang, H., Cao, J. et al. Disentangled Representation Learning of Makeup Portraits in the Wild. Int J Comput Vis 128, 2166–2184 (2020). https://doi.org/10.1007/s11263-019-01267-0

DOI: https://doi.org/10.1007/s11263-019-01267-0