When China locked down the city of Shanghai in April 2022 during the COVID-19 pandemic, the ripples from that decision quickly reached people receiving treatment for cardiac conditions in the United States. The lockdown shut a facility belonging to General Electric (GE) Healthcare, an important producer of ‘iodinated contrast dyes’, used to make blood visible in angiograms.

Soon US hospitals were asking people with mild chest pain to wait, so that the suddenly precious dyes could be reserved for use in those thought to be experiencing acute heart attacks. GE Healthcare scrambled to shift some of its production to Ireland to increase supply. A study in the American Journal of Neuroradiology later revealed that during the shortage, which lasted from mid-April to early June, the number of daily computed tomography (CT) angiograms dropped by 10% and CT perfusion tests were down almost 30%1.

Such disruption caused by supply-chain problems might, in future, be avoided through the use of virtual contrast agents. Techniques powered by artificial intelligence (AI) could highlight the same hidden features that the dyes reveal, without having to inject a foreign substance into the body. “With AI tools, all this hassle can be removed,” says Shuo Li, an AI researcher at Case Western Reserve University in Cleveland, Ohio.

AI has already made its way into conventional medical imaging, with deep-learning algorithms able to match and sometimes exceed the performance of radiologists in spotting anomalies in X-ray or magnetic resonance imaging (MRI) scans. Now the technology is starting to go even further. In addition to the computer-generated contrast agents that several groups around the world are working on, some researchers are exploring what features AI can detect that radiologists don’t normally even look for in scans. Other scientists are studying whether AI might enable brain scans to be used to diagnose neuro-developmental issues, such as attention deficit hyperactivity disorder (ADHD).

Li has been pursuing virtual contrast agents since 2017, and now he’s seeing a global wave of interest in the area. The potential benefits are many. All imaging methods can be enhanced by contrast agents — iodinated dyes in the case of computed tomography (CT) scans, microbubbles in ultrasound, or gadolinium in MRI. And all of those contrast agents, although generally safe, carry some risks, including allergic reactions. Gadolinium, for instance, often can’t be given to people with kidney problems, pregnant people or those who take certain diabetes or blood-pressure medications.

There’s also the issue of cost. The global market for gadolinium as a contrast agent was estimated to be worth US$1.6–2 billion in 2023, and the market for contrast agents in general is worth at least $6.3 billion. The use of contrast agents also requires extra time: many scans involve taking an image, then injecting the agent and repeating part of the scan.

Although it drags out the imaging process, that repetition helps to provide training data for an AI model. The computer studies the initial image to learn subtle variations in the pixels, then compares those with the corresponding pixels on the image taken after the contrast agent was injected. After training, the AI can look at a fresh image and show what it would look like if the contrast agent had been applied.
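The idea of learning from paired before-and-after images can be sketched in a few lines. This is a toy illustration, not the method used by Li's group: real systems rely on deep neural networks trained on whole images, whereas this sketch assumes a single straight-line relationship between pre-contrast and post-contrast pixel intensities. The function names and the pixel values are hypothetical.

```python
# Toy sketch only: each "image" is a flat list of pixel intensities, and the
# "model" is one linear map, post = a * pre + b, fitted by least squares.
# Real virtual-contrast models are deep networks; all values here are made up.

def fit_linear(pre_pixels, post_pixels):
    """Fit post ~ a * pre + b from paired pixel intensities."""
    n = len(pre_pixels)
    mean_pre = sum(pre_pixels) / n
    mean_post = sum(post_pixels) / n
    cov = sum((x - mean_pre) * (y - mean_post)
              for x, y in zip(pre_pixels, post_pixels))
    var = sum((x - mean_pre) ** 2 for x in pre_pixels)
    a = cov / var
    b = mean_post - a * mean_pre
    return a, b

def predict_contrast(pre_pixels, a, b):
    """Apply the learnt mapping to a fresh pre-contrast image."""
    return [a * x + b for x in pre_pixels]

# Hypothetical training pair: the same pixels before and after injection.
pre_scan = [10.0, 20.0, 30.0, 40.0]
post_scan = [25.0, 45.0, 65.0, 85.0]

a, b = fit_linear(pre_scan, post_scan)

# A new scan taken without any contrast agent.
virtual = predict_contrast([15.0, 35.0], a, b)
print(a, b, virtual)
```

The structure mirrors the description above: fit on pairs of images, then predict the enhanced version of a scan that never received the agent. A single slope and offset cannot capture how contrast changes with tissue type and surrounding anatomy, which is why practical systems need far larger models and data sets.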

At the start of this year, Li and his colleagues at the Center for Imaging Research in Case Western’s School of Medicine received a $1.1-million grant from the US National Science Foundation to pursue this idea. They’d already done some preliminary work, training an AI on a few hundred images. Because of the small data set, the results were not as accurate as they would like, Li says. But with funding to study 10,000 or even 100,000 images, performance should improve. The researchers are also working on a similar project to detect liver cancer from scans.

Filling in the picture

If a computer can identify health issues in images, the next step will be to show radiologists a set of images produced with actual and virtual contrast agents, to see whether the specialists, who don’t know which is which, get different results from stained as opposed to AI-enhanced images. After that, says Li, it will take a clinical trial to win approval from the US Food and Drug Administration.

A similar approach could work for slides of tissue samples that pathologists stain and view under a microscope. By treating thin slices of tissue taken during a biopsy, pathologists can make certain features stand out and thereby see cellular abnormalities that aid in the identification of cancer or other diseases.

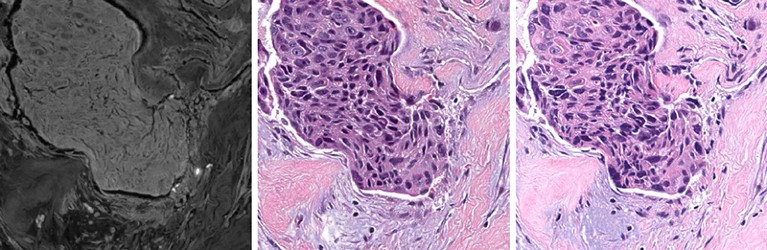

With AI-assisted virtual staining, Aydogan Ozcan, an optical engineer at the University of California, Los Angeles, says he can take an image using a mobile phone attached to a microscope and then, despite the image’s limited resolution and distortion, teach a neural network to make it look as if it was created by a laboratory-grade instrument2. The technology’s ability to transform one type of image into another doesn’t stop there. Ozcan starts with standard tissue samples, but rather than staining them, he places them under a fluorescence microscope and shines light through them, prompting the tissue to autofluoresce. The resulting images come out in shades of grey, very different from the coloured ones pathologists are used to. “Microscopically it’s very rich, but nobody cares to look at those black-and-white images,” Ozcan says.

To incorporate colour, he passes the samples to a histopathology lab for conventional staining, and captures images of the samples with a standard microscope. Ozcan then shows both types of image to a neural network, which learns how the details in the fluorescence images match up with the effects of the chemical stains. Having learnt this correspondence, the AI can then take new fluorescence images and present them as if they had been stained3.

A fluorescent microscope captures a black-and-white image of a tissue sample (left). The AI generates a version of that image with a virtual stain (centre), which closely resembles the chemically stained sample (right). Credit: Ref. 2

Although one particular stain, H&E, made up of the compounds haematoxylin and eosin, is by far the most common, pathologists use plenty of others, some of which are preferable for highlighting certain features. Trained on the other stains, the AI can transform the original image to incorporate any stain the pathologist wants. This technique allows researchers to simulate hundreds of different stains for the same small tissue sample. That means pathologists will never run out of tissue for a particular biopsy and ensures that they’re looking at the same area in each stain.

AI’s ability to manipulate medical images is not limited to transforming them. It can also extrapolate missing image data in such a way as to give radiologists access to clinically important information that they would otherwise have missed. Kim Sandler, a radiologist at Vanderbilt University Medical Center in Nashville, Tennessee, was interested in whether measures of body fat could help to predict clinical outcomes in people receiving CT scans to screen for lung cancer.

Often, radiologists will crop out areas of a chest CT scan that they’re not interested in, such as the abdomen and organs such as the spleen or liver. This selectivity improves the quality of the rest of the image and aids the identification of shadows or nodules that might indicate lung disease. But, Sandler thought, an AI could perhaps learn more by taking the opposite tack and expanding the field of view4. She worked with computer engineers who taught a neural network to look at the image differently by either adding back the cropped-out parts from the raw data, or combining what it saw with knowledge from the medical literature to decide what should be in the missing areas.

Having done that, the AI then made quantitative estimates of the amount of fat in the skeletal muscles — the lower the muscle density, the more fat present. There is a known association between body composition and health outcomes. In people with a lower muscle density as determined by AI, “we found that there was a higher risk of cardiovascular-disease-related death, a higher incidence of lung-cancer-related death,” as well as higher death rates from any cause over the 12.3 years the study looked at5, Sandler says. The AI did not, however, improve cancer diagnosis. “This was not helpful in terms of who would develop lung cancer, but it was helpful in predicting mortality,” she says.

The results are nonetheless diagnostically useful, Sandler says. People whose risk of mortality is elevated can be offered more aggressive therapies or more frequent screening if no lung cancer is yet apparent in the scans.

Invisible signs

AI might even be able to spot types of diagnostic information that physicians had never thought to look for, in part because it’s not something they’ve been able to see themselves. ADHD, for instance, is diagnosed on the basis of self-reported and observed behaviour rather than a biomarker. “There are behaviours that are relatively specific for ADHD, but we don’t have a good understanding of how those manifest in the neural circuitry of the brain,” says Andreas Rauschecker, a neuroradiologist at the University of California, San Francisco. As someone who spends a lot of time looking at brain images, he wanted to see whether he could find such an indicator.

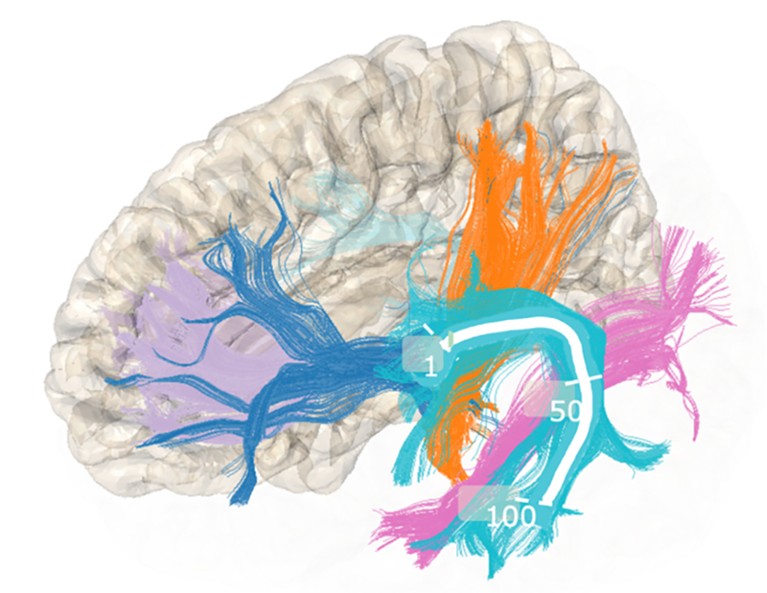

He and his team trained an AI on MRI scans of 1,704 participants in the Adolescent Brain Cognitive Development Study, a long-term investigation of brain development in US adolescents. The system learnt to look at water molecules moving along certain white-matter tracts that connect different areas of the brain, and tried to link any variations with ADHD. It turned out that certain measurements in the tracts were significantly higher in children identified as possibly having ADHD.

Andreas Rauschecker and his colleagues have been studying the movement of water molecules along tracts of white matter in the brain. Credit: Pierre Nedelec

Rauschecker emphasizes that this is a preliminary study; it was presented at a Radiological Society of North America meeting in November 2023 and has not yet been published. In fact, he says, no type of brain imaging currently in use can diagnose any neuropsychiatric condition. Still, he adds, it would make sense if some of those conditions were linked to structural changes in the brain, and he holds out hope that scans could prove useful in the future. Within a decade, he says, it’s likely that there will be “a lot more imaging related to neuropsychiatric disease” than there is now.

Even with help from AI, physicians don’t make diagnoses on the basis of images alone. They also have their own observations: clinical indicators such as blood pressure, heart rate or blood glucose levels; patient and family histories; and perhaps the results of genetic testing. If AI could be trained to take in all these different sorts of data and look at them as a whole, perhaps it could become an even better diagnostician. “And that is exactly what we found,” says Daniel Truhn, a physicist and clinical radiologist at RWTH Aachen University in Germany. “Using the combined information is much more useful” than using either clinical or imaging data alone.

Part of Nature Outlook: Medical diagnostics

What makes combining the different types of data possible is the deep-learning architecture underlying the large language models behind applications such as ChatGPT6. Those systems rely on a form of deep learning called a transformer to break data into tokens, which can be words or word fragments, or even portions of images. Transformers assign numerical weights to individual tokens on the basis of how much their presence should affect tokens further down the line, a metric known as attention. For instance, based on attention, a transformer that sees a mention of music is more likely to interpret ‘hit’ to mean a popular song than a striking action when it comes up a few sentences later. The attention mechanism, Truhn says, makes it possible to join imaging data with numerical data from clinical tests and verbal data from physicians’ notes. He and his colleagues trained their AI to diagnose 25 different conditions, ruling ‘yes’ or ‘no’ for each7. That’s obviously not how humans work, he says, but it did help to demonstrate the power of combining modalities.
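For readers who want to see the arithmetic, attention weights can be sketched as a scaled dot product followed by a softmax. This is a simplified illustration, not the model Truhn's team built: the two-number token vectors and the token names are invented for the example, and real transformers learn separate query and key projections over vectors with hundreds or thousands of dimensions.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over several keys."""
    dim = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
              for key in keys]
    return softmax(scores)

# Hypothetical two-dimensional vectors for earlier tokens in a passage.
context = {"music": [1.0, 0.0], "weather": [0.0, 1.0]}

# Hypothetical query vector for the later token 'hit'.
hit_query = [2.0, 0.1]

weights = attention_weights(hit_query, list(context.values()))
for token, weight in zip(context, weights):
    print(f"{token}: {weight:.3f}")
```

With these made-up vectors, ‘music’ receives a larger weight than ‘weather’, so it would pull the interpretation of ‘hit’ towards a popular song. Because the same calculation works on any token, whether it stands for a word, a lab value or a patch of an image, the mechanism offers a common way to mix modalities.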

In the long run, Sandler expects AI to show physicians clues they couldn’t glean before, and to become an important tool for improving diagnoses. But she does not see it replacing specialists. “I often use the analogy of flying a plane,” she says. “We have a lot of technology that helps planes fly, but we still have pilots.” She expects that radiologists will spend less time writing reports about what they see in images, and more time vetting AI-generated reports, agreeing or disagreeing with certain details. “My hope is that it will make us better and more efficient, and that it’ll make patient care better,” Sandler says. “I think that is the direction that we’re going.”
