Abstract

This paper constructs a simple model of decision-making that accounts for the paradoxes of Ellsberg and Machina. It does so by representing decision-makers’ beliefs on the vector space \({\mathbb{R}}\times {\mathbb{R}}\) and by providing a reasonable decision rule with axiomatic foundations. Moreover, the model allows for a characterization that clearly distinguishes between the two paradoxes. A notable feature of the paper is that the ‘resolution’ of the paradoxes is along the lines suggested by the eponymous authors themselves. That is to say, the decision rule derived from the axioms corresponds to Ellsberg’s own rule in the narrow set of circumstances that he explored, and the decision rule embodies Machina’s injunction that we treat choice under uncertainty in a similar manner to the way we treat standard consumer theory. That the paper cleaves to the advice of both authors is a little surprising given that it utilizes a two-dimensional vector space that neither author deployed.


1 Introduction

The Ellsberg and Machina paradoxes have somewhat discommoded canonical decision theory and several putative alternatives. This paper argues that there is a relatively straightforward extension of the traditional expected utility model which can account for both paradoxes. Moreover, the proposed model resolves both paradoxes along the lines suggested by the eponymous authors.

The model proposed here uses the simplest possible extension of the real numbers to express decision-makers’ beliefs. Specifically, we use the vector space \({\mathbb{R}}\times {\mathbb{R}}\) to represent beliefs, with objective beliefs located on one dimension of the vector space and subjective beliefs located on the other. Decision-makers are then shown to maximize a form of rank-dependent expected utility given their beliefs. Decision-makers whose beliefs are given on \({\mathbb{R}}\times {\mathbb{R}}\) and who act in this manner behave in ways consistent with both the Ellsberg and Machina paradoxes.

The paper is structured as follows. Section 2 provides a brief literature review. Section 3 provides a discursive treatment of the paper’s model and how it explains the given paradoxes. Section 4 describes decision-makers’ beliefs. Section 5 describes the random variables and the lotteries that are the artefacts of interest for decision-makers. Section 6 sets out a reasonable set of axioms to which decision-makers are assumed to conform, and gives a representation result. Section 7 describes Ellsberg’s ‘3 colour’ problem, Machina’s ‘reflection’ and ‘50:51’ examples, and Blavatskyy’s twist on the former, and shows how the model of behaviour ‘resolves’ these paradoxes. Section 8 concludes with some remarks on how the model can also be used to solve Ellsberg’s n-colour problem and another well-known paradox.

2 Literature

Ellsberg’s seminal paper (1961) is well known. It utilizes several thought experiments to undermine the normative force of Savage’s (1954) theory of subjective probability; the behaviour those thought experiments anticipate has since been largely confirmed by real experiments (the literature is thoroughly canvassed in Machina & Siniscalchi, 2014, §§13.1 & 13.4).

Somewhat belatedly, a theoretical literature developed which aimed to address the paradox in a normative (axiomatic) manner.Footnote 1 Notable among these contributions is that of Schmeidler (1989), which proposed the model of Choquet expected utility.

Machina’s (2009) paper utilizes several thought experiments to undermine the model of Schmeidler in a manner “similar to those posed by Ellsberg’s original counterexamples to the classical subjective expected utility hypothesis” (Machina, 2009, p. 386). Machina’s paradox was experimentally confirmed by L’Haridon and Placido (2010). Subsequently, Baillon et al. (2011) demonstrated that the paradox embarrassed several models other than Choquet expected utility, notably maxmin expected utility (of Gilboa & Schmeidler, 1989), variational preferences (of Maccheroni et al., 2006), α-maxmin (of Ghirardato et al., 2004), and the smooth model of ambiguity aversion (of Klibanoff et al., 2005). Machina (2014) provided further thought experiments to impugn the normative claims of those models. Blavatskyy (2013a) showed that the paradox also contraindicated the models of Siniscalchi (2009) and Nau (2006). The models to survive the extinction event are few. Notably, Segal’s two-stage model survives (Dillenberger & Segal, 2014), and Gul and Pesendorfer’s (2014) expected uncertain utility theory also fits the data.

To understand the relationship of the model in this paper to the others, we first observe that the model survives the Machina filter, which puts it alongside the models of Dillenberger and Segal (2014) and Gul and Pesendorfer (2014). All three models avoid the “tail [event] separability” that Machina identifies as the problematic feature of many models that fail his filter.Footnote 2 Interestingly, all three survivors are two-component models: bivalent beliefs in this paper, interval utilities in Gul and Pesendorfer (2014), and, in the two-stage model discussed in Dillenberger and Segal (2014), non-expected utility functions operating at both stages.Footnote 3 The model in this paper stands closer to that of Gul and Pesendorfer than it does to that of Dillenberger and Segal. In the functional representations of Gul and Pesendorfer (2014) and in the model presented below, objective probabilities are used as weights on unambiguous events, while a non-linear ‘distortion’ is applied over utility pairs in the relevant representative functions to deliver behaviour consistent with Machina’s paradox (distortion here being understood with respect to the historically normative expected utility representation). Despite that similarity, the two models differ as to where the ‘burden’ of representing ambiguity is ultimately borne. In Gul and Pesendorfer’s case, it is borne by interval utilities, whereas here it is borne by bivalent beliefs. Thus, although similar in some ways, the two models pursue somewhat different paths to resolve the Ellsberg and Machina paradoxes.

3 Discourse

We can gain an intuitive understanding of the approach taken in this paper to resolving the Ellsberg and Machina paradoxes by considering the following discursive and diagrammatic arguments (technical arguments are given in subsequent sections of the paper).

We begin by clarifying what is meant by the term ‘ambiguity’. In the literature, ambiguity is generally defined to be a situation where there is no stochastic description of the world given by objective probabilities (where, by ‘the world’ we mean a measurable space composed of a set of states, \(\Omega\), and its associated event space, \(\mathcal{F}\)).Footnote 4 In other words, ‘ambiguity’ in the sense of Ellsberg (1961) is equivalent to ‘uncertainty’ in the sense of Knight (1921).Footnote 5 Such situations are distinguished from situations of ‘risk’, where ‘objective’ or ‘known’ or ‘physical’ probabilities of events are taken as parametric by decision-makers. [In this latter case of risk, decision-makers operate within a measure space \((\Omega ,\mathcal{F},p)\) (where \(p\) is a probability measure).]

This distinction between uncertainty and risk is associated with different objects of choice in the two domains. In uncertain situations, an ‘act’ or a ‘horse race lottery’ is a mapping from the set of states to a set of ‘outcomes’ or ‘consequences’ or ‘prizes’ (i.e., to each state in \(\Omega ,\) there is assigned a particular outcome from the set of possible outcomes, \(X\)). The choice domain is the set of horse race lotteries. In risky situations, each state in \(\Omega\) is similarly assigned a particular outcome, but, since each state has a known ‘objective’ probability of occurring, the objects of choice can be represented as vectors of probabilities over outcomes. Each such object is known as a ‘roulette wheel’ or a ‘lottery’, and the choice domain is the set of roulette wheels.

In our approach here, we proceed a little differently. We do not adopt the binary distinction between uncertain worlds and their associated horse race lotteries on the one hand, and risky worlds and roulette wheels on the other. Rather, we suppose that these two kinds of world are at the extremes of a spectrum of ambiguity. Typically, we suppose, decision-makers are in a world in which there is ‘partial objective’ stochastic information. This partial, objective information is encoded in non-additive probabilities known as capacities.Footnote 6

Capacities were originally discussed by Choquet (1954), and were introduced into decision theory, broadly defined, by Shafer (1976), building on the earlier work of Dempster (1967). They were introduced into economics by Schmeidler (1989). In their use by those authors, and subsequently, capacities represent decision-makers’ subjective beliefs about the world in situations of uncertainty. This approach contrasts with that of subjective Bayesians, who argue that decision-makers’ beliefs in situations of uncertainty are probabilities. The canonical texts of subjective Bayesianism are Savage (1954) and Anscombe and Aumann (1963); the latter allow decision-makers to calibrate their subjective beliefs over horse race lotteries by comparing them with roulette wheels.

In our approach, we make use of both capacities and probabilities, and we argue that decision-makers have bivalent beliefs. Objective beliefs are encoded by capacities, and decision-makers ‘complete’ these beliefs by adding their own subjective judgments to those objective beliefs, and the combined beliefs are probabilities. A decision-maker’s bivalent beliefs are referred to as capa-bilities (or, simply, capabilities), a portmanteau of capacities and probabilities.Footnote 7

To illustrate, consider the classic Ellsberg example (which is discussed more formally in Sect. 7). There is a single urn containing 90 balls; 30 of those balls are red, and the remaining 60 are black or yellow in unknown proportions.

The following table (Table 1) represents the beliefs of a decision-maker for whom objective beliefs are defined by the minimum chance of each event occurring, and for whom subjective beliefs are determined by the principle of indifference (or, if one prefers, the principle of insufficient reason).Footnote 8,Footnote 9

The objects of choice in this set-up are ‘urn draws’, where an urn draw is a gamble such that each state (represented by the colour of a ball drawn from the urn) delivers a particular prize from the set of possible outcomes (\(X\)). Suppose that the individual faces a choice between two urn draws: the first delivers $100 if a red or yellow ball is drawn and $0 otherwise, while the second delivers $100 if a black or yellow ball is drawn and $0 otherwise. Suppose further that the decision-maker is a ‘consequentialist’ in the sense that she cares only about outcomes; and, when it comes to money, she prefers more money to less.

In the situation just described, if the decision-maker sees no operational difference between objective and subjective beliefs, then it is natural to think that she will be indifferent between the two gambles, since the two gambles will seem the same to her (as they offer the same payoffs for the same odds). Experimentally speaking, this is not what Ellsberg observed. Rather, he found that decision-makers generally preferred gambles with higher objective beliefs, ceteris paribus (i.e., in those situations when the combined beliefs and payoffs are the same across urn draws). This is to say, he found that people were ambiguity averse.

Our model captures this preference over beliefs directly. In binary (win-lose) lotteries of the kind just described, decision-makers are supposed to have preferences over the two different kinds of belief. To see what is meant by this, consider Fig. 1. That diagram represents the vector space \({\mathbb{R}}^{2}\), with objective beliefs regarding a ‘win’ measured on the horizontal axis and subjective beliefs regarding a win measured on the vertical axis.

Utility increases as the odds of winning increase regardless of whether the increase in odds is in objective or subjective beliefs. The downward sloping lines are ‘indifference curves’ showing the decision-maker’s preferences over those two kinds of belief. That the indifference curves have a uniform gradient of − 1 means that the decision-maker thinks that objective and subjective beliefs are equivalent. An individual with such preferences will be indifferent between the two gambles just described, since she will regard them as wholly equivalent. [Observe that the indifference curve that goes through the belief-pair, (1/3, 1/3), is the same one that goes through (2/3, 0) (where (1/3, 1/3) are the odds of winning in the first urn draw, and (2/3, 0) are the odds of winning in the second urn draw).] This is the position of a subjective Bayesian.

Next, consider Fig. 2.

In Fig. 2, the decision-maker’s indifference curves have a uniform gradient steeper than −1 (i.e., greater than 1 in absolute value). This means that the decision-maker consistently discounts the subjective odds of winning relative to the objective odds of winning. Such a person exhibits ambiguity aversion and prefers the urn draw where $100 is paid if a black or yellow ball is drawn to the urn draw where $100 is paid if a red or yellow ball is drawn [the indifference curve passing through the ‘mixed’ odds of (1/3, 1/3) is lower than the indifference curve passing through the ‘pure’ objective odds of (2/3, 0)].
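As a minimal numerical sketch of Figs. 1 and 2, the snippet below assumes a hypothetical linear index \(U(b,s)=kb+s\) over the belief-pair of winning (objective odds \(b\), subjective odds \(s\)), whose indifference curves have gradient \(-k\). The names `utility` and `compare` and the value \(k=3/2\) are illustrative choices, not part of the paper's formal development.

```python
from fractions import Fraction as F

def utility(b, s, k):
    """Hypothetical linear index over a belief-pair of winning.

    b: objective odds, s: subjective odds. Indifference curves k*b + s = c
    have gradient -k; k = 1 treats the two kinds of belief as equivalent,
    k > 1 discounts subjective beliefs (ambiguity aversion).
    """
    return k * b + s

RED_OR_YELLOW = (F(1, 3), F(1, 3))   # $100 on red or yellow
BLACK_OR_YELLOW = (F(2, 3), F(0))    # $100 on black or yellow

def compare(k):
    """Which urn draw a decision-maker with gradient -k prefers."""
    u1 = utility(*RED_OR_YELLOW, k)
    u2 = utility(*BLACK_OR_YELLOW, k)
    if u1 == u2:
        return "indifferent"
    return "black or yellow" if u2 > u1 else "red or yellow"

for k in (F(1), F(3, 2)):
    print(f"gradient -{k}: {compare(k)}")
```

Here \(U(2/3,0)-U(1/3,1/3)=(k-1)/3\), so under this linear form any gradient steeper than −1 yields the Ellsberg preference for the black-or-yellow draw, and a gradient of exactly −1 yields indifference.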

As our analysis in subsequent sections shows, the representation of preference by linear indifference curves on the space of binary lotteries is sufficient to capture ambiguity aversion in the cases discussed by Ellsberg. However, more general forms of preference representation are required to capture ambiguity aversion in the sense of Machina. It turns out that curvilinear indifference curve maps are needed to account for the Machina paradoxes. To grasp the intuition of how curvilinear indifference curves can explain the Machina paradox, consider Figs. 3 and 4.

In both cases, the gradients of the indifference curves are everywhere greater than 1 in absolute value, so the decision-maker is ambiguity averse (i.e., subjective beliefs are discounted relative to objective beliefs). However, in the former case, the indifference curves are strictly convex to the origin, and, in the latter, they are strictly concave. Convex preferences indicate that the decision-maker has a diminishing marginal rate of substitution between beliefs. A decision-maker with such preferences, who is indifferent between two gambles (say, gambles ‘1’ and ‘2’), will strictly prefer a third gamble (‘3’) whose odds of winning are a strict convex combination of the odds of the two indifferent gambles (i.e., in standard notation: 3 \(\succ\) 1 \(\sim\) 2). Conversely, concave preferences indicate an increasing marginal rate of substitution between beliefs. A decision-maker with such preferences, who is indifferent between two gambles (‘1’ and ‘2’), will strictly prefer either of those gambles to a third gamble (‘3’) whose odds of winning are a strict convex combination of the odds of the two indifferent gambles (i.e., \(1 \sim 2\succ 3\)).

Next, consider the following diagram, Fig. 5.

In Fig. 5, we have a linear indifference curve and a strictly convex indifference curve, each representing the preferences of a different ambiguity averse individual. Both curves go through the points (1/3, 1/3) and (1/2, 0). This means that each person is indifferent between the following two urn draws: in the first, $100 is paid if a red or yellow ball is drawn from the Ellsberg urn described above; in the second, there is another urn with an objective 50% chance of winning $100 (this other urn contains, say, 50 white balls and 50 blue balls, and $100 is paid if a blue ball is drawn and $0 otherwise).

According to our representation of Ellsberg’s approach (with linear indifference curves), an individual who is indifferent between these two gambles ought also to be indifferent to an urn draw whose objective and subjective odds of winning $100 are given by the ordered pair (5/12, 1/6), which is the midpoint of (1/3, 1/3) and (1/2, 0). These odds are given at point A in the diagram.Footnote 10 On our interpretation, what Machina shows by way of several examples is that a person who is indifferent between the gambles whose odds of winning are (1/3, 1/3) and (1/2, 0) need not be indifferent to the gamble indicated by point A. Such a person might have, for example, the strictly convex indifference curve indicated in Fig. 5, in which case she strictly prefers the gamble with odds of (5/12, 1/6) to either of the gambles between which she is indifferent [i.e., the gambles with winning odds of (1/3, 1/3) and (1/2, 0)]. One could, alternatively, imagine an individual with concave indifference curves who prefers either of the two indifferent gambles to the one whose winning odds are given by (5/12, 1/6). Machina’s own reflective discussion of his thought experiments suggests that he thinks people have convex (rather than concave) preferences over ambiguity (to use the language of the model we are discussing).
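The following sketch checks the Fig. 5 configuration with exact fractions. The three utility indices are hypothetical, chosen only so that each is increasing in both beliefs, has indifference curves steeper than −1 on the unit square, and is indifferent between (1/3, 1/3) and (1/2, 0); they are not functional forms proposed in the paper.

```python
from fractions import Fraction as F

# Belief-pairs (objective b, subjective s) of winning $100.
G1 = (F(1, 3), F(1, 3))   # Ellsberg urn: $100 on red or yellow
G2 = (F(1, 2), F(0))      # 50 blue / 50 white urn: $100 on blue
A = tuple((p + q) / 2 for p, q in zip(G1, G2))   # point A = (5/12, 1/6)

# Hypothetical indices: each is increasing in b and s, with indifference
# curves steeper than -1 on the unit square (ambiguity aversion).
def linear(b, s):
    return 2 * b + s                                   # straight lines, gradient -2

def convex_curves(b, s):
    return 2 * b + s - F(1, 2) * (s - F(1, 6)) ** 2    # concave index: curves bowed toward origin

def concave_curves(b, s):
    return 2 * b + s + F(1, 2) * (s - F(1, 6)) ** 2    # convex index: curves bowed away from origin

for name, u in (("linear", linear), ("convex", convex_curves), ("concave", concave_curves)):
    assert u(*G1) == u(*G2)       # indifferent between the two gambles
    if u(*A) == u(*G1):
        verdict = "A ~ G1 ~ G2"
    elif u(*A) > u(*G1):
        verdict = "A > G1 ~ G2"
    else:
        verdict = "G1 ~ G2 > A"
    print(f"{name:8s} U(G1) = U(G2) = {u(*G1)}, U(A) = {u(*A)}: {verdict}")
```

With these forms, the linear index ranks A level with the two gambles (all equal 1), the convex-curve index strictly prefers A (1 against 71/72), and the concave-curve index ranks A strictly below them (1 against 73/72).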

To summarize, in the terms of the model described in this paper, Ellsberg found that subjective beliefs trade at a discount relative to objective beliefs—which is to say, he found ambiguity aversion as such. In his approach to decision-making, he assumed that the marginal rate of substitution between the two kinds of belief was constant (i.e., indifference curves over the odds of winning are linear). On our understanding, Machina has shown that the marginal rate of substitution is not necessarily constant.

This concludes our informal discussion of the model. In the next three sections, we turn to a more formal analysis, before applying the theory to instances of the paradoxes in the final two sections.

4 Beliefs

To begin our analysis, take a measurable space, \((\Omega ,\mathcal{F})\), where \(\Omega\) is a finite sample space and \(\mathcal{F}={2}^{\Omega }\) (so that \(\mathcal{F}\) is finite). Next, suppose that there is a mapping, \(\mathfrak{B}\), with the following characteristics.

Definition 1

An objective information mapping, \(\mathfrak{B}:\mathcal{F}\to [{0,1}]\), is a convex capacity that is normalized to unity (\(\mathfrak{B}\left(\Omega \right)=1\)) and assigns zero to the empty set (\(\mathfrak{B}\left({\varnothing }\right)=0\)).

The interpretation of \(\mathfrak{B}\) goes as follows: \(\mathfrak{B}\left(A\right)\) is the objective belief in favour of \(A\); which is to say, it is the belief in \(A\) that is warranted by the objective information to which decision-makers have access. For example, in the classic Ellsberg urn described in Table 1 of the previous section, the objective beliefs are: \(\mathfrak{B}\left(\varnothing \right)=0\), \(\mathfrak{B}\left({\text{red}}\right)=1/3\), \(\mathfrak{B}\left(\text{black}\right)=\mathfrak{B}\left({\text{yellow}}\right)=0\), \(\mathfrak{B}\left({\text{red}}\cup {\text{black}}\right)=\mathfrak{B}\left({\text{red}}\cup {\text{yellow}}\right)=1/3\), \(\mathfrak{B}\left({\text{black}}\cup {\text{yellow}}\right)=2/3\), and \(\mathfrak{B}\left({\text{red}}\cup {\text{black}}\cup {\text{yellow}}\right)=1\).

Given a convex capacity, \(\mathfrak{B}\), there is a set of numbers, \({\left\{\beta \left(A\right)\right\}}_{A\in \mathcal{F}}\), with \(\beta \left(A\right)\in [{0,1}]\), such that \(\mathfrak{B}+\beta\) is a probability. The set of all such probabilities is referred to as the core of \(\mathfrak{B}\) (and is denoted \({\text{core}}(\mathfrak{B})\)). In general, this set of probabilities is not a singleton; \(\mathfrak{B}\) is its lower envelope (for each event \(A\), \(\mathfrak{B}\left(A\right)\) is the minimum of \(P\left(A\right)\) over \(P\in {\text{core}}(\mathfrak{B})\)). The interpretation of \(\beta\) goes as follows: \(\beta \left(A\right)\) is the subjective belief in favour of \(A\); which is to say, it is the belief in \(A\) that is attributed by the decision-maker on subjective grounds. For example, in the Ellsberg urn described in Table 1 of the previous section, the decision-maker’s subjective beliefs are as follows: \(\beta \left(\varnothing \right)=0\), \(\beta \left({\text{red}}\right)=0\), \(\beta \left({\text{black}}\right)=\beta \left({\text{yellow}}\right)=1/3\), \(\beta \left({\text{red}}\cup {\text{black}}\right)=\beta \left({\text{red}}\cup {\text{yellow}}\right)=1/3\), \(\beta \left({\text{black}}\cup {\text{yellow}}\right)=0\), and \(\beta \left(\Omega \right)=0\).

The objective and subjective beliefs of the decision-maker can be represented as a (row) vector in the following way.

Definition 2

An imprecise belief is a vector-valued mapping, \(\mu :{\mathcal{F}} \to {\mathbb{R}} \times {\mathbb{R}}\), with \(\mu \left( A \right) = \left( {{\mathfrak{B}}\left( A \right),\beta \left( A \right)} \right) \equiv \left( {{\mathfrak{B}},\beta } \right)\left( A \right)\) for all \(A \in {\mathcal{F}}\), such that \(\left\lfloor \mu \right\rfloor \triangleq {\mathfrak{B}} + \beta\) is a probability (i.e., \(\left\lfloor \mu \right\rfloor \left( \emptyset \right) = 0\), \(\left\lfloor \mu \right\rfloor \left( {\Omega } \right) = 1\), \(\left\lfloor \mu \right\rfloor \left( A \right) \ge 0\) for all \(A \in {\mathcal{F}}\), and \(\left\lfloor \mu \right\rfloor \left( {A \cup B} \right) = \left\lfloor \mu \right\rfloor \left( A \right) + \left\lfloor \mu \right\rfloor \left( B \right)\) for all \(A,B \in {\mathcal{F}}\) with \(A \cap B = \emptyset\)).

For example, in the urn described above, the beliefs of the individual are: \(\mu \left(\varnothing \right)=(0, 0)\), \(\mu \left({\text{red}}\right)=(1/3, 0)\), \(\mu \left({\text{black}}\right)=\mu \left({\text{yellow}}\right)=(0, 1/3)\), \(\mu \left({\text{red}}\cup {\text{black}}\right)=\mu \left({\text{red}}\cup {\text{yellow}}\right)=(1/3, 1/3)\), \(\mu \left({\text{black}}\cup {\text{yellow}}\right)=(2/3, 0)\), and \(\mu \left(\Omega \right)=(1, 0)\).
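To make the Ellsberg beliefs concrete, the sketch below recomputes them under two stated assumptions: objective beliefs are the minimum chance of each event across all admissible urn compositions (30 red, \(b\) black, \(60-b\) yellow), and the principle of indifference is read as placing equal weight on each composition. It then checks that \(\mathfrak{B}\) is convex in the usual (supermodular) sense, that every \(\beta(A)\) lies in [0, 1], and that \(\left\lfloor \mu \right\rfloor=\mathfrak{B}+\beta\) is an additive probability. All names in the code are illustrative.

```python
from fractions import Fraction as F
from itertools import combinations

COLOURS = ("red", "black", "yellow")
OMEGA = frozenset(COLOURS)
# Event space F = 2^Omega: every subset of colours, as a frozenset.
EVENTS = [frozenset(c) for r in range(len(COLOURS) + 1)
          for c in combinations(COLOURS, r)]
# Admissible urns: 30 red, b black and 60 - b yellow, for b = 0, ..., 60.
URNS = [{"red": 30, "black": b, "yellow": 60 - b} for b in range(61)]

def chance(event, urn):
    """Chance of drawing a ball whose colour lies in `event`."""
    return F(sum(urn[c] for c in event), 90)

# Objective belief: the minimum chance of each event across admissible urns.
B = {A: min(chance(A, u) for u in URNS) for A in EVENTS}
# Combined belief (assumption): equal weight on every admissible urn.
P = {A: sum(chance(A, u) for u in URNS) / len(URNS) for A in EVENTS}
# Subjective belief tops the objective belief up to the combined belief.
beta = {A: P[A] - B[A] for A in EVENTS}
mu = {A: (B[A], beta[A]) for A in EVENTS}

def is_convex(cap):
    """Supermodularity: cap(A | C) + cap(A & C) >= cap(A) + cap(C)."""
    return all(cap[A | C] + cap[A & C] >= cap[A] + cap[C]
               for A in EVENTS for C in EVENTS)

def is_probability(p):
    """Normalised, non-negative and additive over disjoint events."""
    return (p[frozenset()] == 0 and p[OMEGA] == 1
            and all(v >= 0 for v in p.values())
            and all(p[A | C] == p[A] + p[C]
                    for A in EVENTS for C in EVENTS if not A & C))

assert B[frozenset()] == 0 and B[OMEGA] == 1 and is_convex(B)
assert all(0 <= s <= 1 for s in beta.values())
assert is_probability({A: b + s for A, (b, s) in mu.items()})

for A in EVENTS:
    name = " ∪ ".join(c for c in COLOURS if c in A) or "∅"
    print(f"mu({name}) = ({mu[A][0]}, {mu[A][1]})")
```

The printed pairs coincide with the capabilities listed above; the equal-weight reading of indifference is one convenient choice, and any rule giving black and yellow a combined belief of 1/3 each would produce the same \(\beta\).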

In other words, objective beliefs are measured on the first dimension of the vector space, while subjective beliefs are measured on the second dimension. As mentioned in the previous section, we shall use the portmanteau, capability, to describe an imprecise belief, since the vector-valued mapping, \(\mu\), explicitly describes a capacity, \(\mathfrak{B}\), and implicitly describes a probability, \(\mathfrak{B}+\beta\).

5 Artefacts

In this section, we describe the objects that decision-makers are cognizant of when making choices. In the next section, we propose a set of axioms which describe how decision-makers configure these artefacts in their reasoning.

We suppose that there is a function, \(X:\Omega \to {\mathbb{R}}\), which determines the allocation of ‘outcomes’ or ‘consequences’ or ‘prizes’ to states. In a minor abuse of notation, we let \(X\) also denote the set of all possible prizes, with the typical prize being \({x}_{i}\in X\). We suppose that prizes are totally ordered, with \({x}_{1}\ge 0\), \({x}_{i}>{x}_{i-1}\) for \(i=2,\dots ,n\), and \(n<\infty\).

It is natural to take \(X\) to be a set of dollar prizes as we have done in Sect. 3. We shall use one of the simple binary Ellsberg urn draws described in that section to provide examples of the definitions given immediately below. The particular urn draw we use is the one where $100 is paid if a red or yellow ball is drawn and $0 is paid otherwise.

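The objective belief that \(X\) takes on a particular value, say \({x}_{i}\), is given by

\(\mathfrak{B}\left(X={x}_{i}\right)\triangleq \mathfrak{B}\left(\left\{\omega \in \Omega :X\left(\omega \right)={x}_{i}\right\}\right)\equiv \mathfrak{B}\left({x}_{i}\right).\)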

For example, in our Ellsberg urn draw, we have: \(\mathfrak{B}\left(X=0\right)=0\); and: \(\mathfrak{B}\left(X=100\right)=1/3\).

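The objective and subjective belief that \(X\) takes on a particular value, \({x}_{i}\), is given by

\(\left(\mathfrak{B},\beta \right)\left(X={x}_{i}\right)\triangleq \mu \left(\left\{\omega \in \Omega :X\left(\omega \right)={x}_{i}\right\}\right)\equiv \mu \left({x}_{i}\right).\)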

For example, in our Ellsberg urn draw, we have: \(\left(\mathfrak{B},\beta \right)\left(X=0\right)=(0, 1/3)\); and: \(\left(\mathfrak{B},\beta \right)\left(X=100\right)=(1/3, 1/3)\).

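And the combined belief that \(X\) takes on a particular value, \({x}_{i}\), is given by

\(\left\lfloor \mu \right\rfloor \left(X={x}_{i}\right)\triangleq \left(\mathfrak{B}+\beta \right)\left(\left\{\omega \in \Omega :X\left(\omega \right)={x}_{i}\right\}\right)\equiv \left\lfloor \mu \right\rfloor \left({x}_{i}\right).\)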

For example, in our Ellsberg urn draw, we have: \(\left\lfloor \mu \right\rfloor \left( {X = 0} \right) = 1/3\); and: \(\left\lfloor \mu \right\rfloor \left( {X = 100} \right) = 2/3\).

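We also make use of the following notation to describe complementary cumulative distribution functions:

\(\mathfrak{B}\left(X\ge {x}_{i}\right)\triangleq \mathfrak{B}\left(\left\{\omega \in \Omega :X\left(\omega \right)\ge {x}_{i}\right\}\right)\equiv \mathfrak{B}\left({X}_{i}\right).\)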

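For example, in our Ellsberg urn draw, we have: \({\mathfrak{B}}\left( {X \ge 0} \right) = 1\); and \({\mathfrak{B}}\left( {X \ge 100} \right) = 1/3\).

Similarly, the objective and subjective beliefs that \(X\) takes on a value of at least \({x}_{i}\) are given by

\(\mu \left(X\ge {x}_{i}\right)\triangleq \left(\mathfrak{B},\beta \right)\left(\left\{\omega \in \Omega :X\left(\omega \right)\ge {x}_{i}\right\}\right)\equiv \mu \left({X}_{i}\right).\)

For example, in our Ellsberg urn draw, we have: \(\mu \left(X\ge 0\right)=(1, 0)\); and: \(\mu \left(X\ge 100\right)=(1/3, 1/3)\).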

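Finally, we have

\(\left\lfloor \mu \right\rfloor \left(X\ge {x}_{i}\right)\triangleq \left(\mathfrak{B}+\beta \right)\left(\left\{\omega \in \Omega :X\left(\omega \right)\ge {x}_{i}\right\}\right)\equiv \left\lfloor \mu \right\rfloor \left({X}_{i}\right).\)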

For example, in our Ellsberg urn draw, we have: \(\left\lfloor \mu \right\rfloor \left( {X \ge 0} \right) = 1\); and: \(\left\lfloor \mu \right\rfloor \left( {X \ge 100} \right) = 2/3\).
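The notation above amounts to pushing capabilities forward through \(X\): each statement about \(X\) is evaluated on the event \(\{\omega : \ldots\}\) it picks out. A minimal sketch, assuming the capability values listed in Sect. 4 for the Ellsberg urn and the urn draw that pays $100 on red or yellow; the helper names (`preimage`, `mu_eq`, `mu_geq`, `combined`) are hypothetical.

```python
from fractions import Fraction as F

T = F(1, 3)
# Capabilities mu(A) = (B(A), beta(A)) for the Ellsberg urn, as listed in Sect. 4.
MU = {
    frozenset(): (F(0), F(0)),
    frozenset({"red"}): (T, F(0)),
    frozenset({"black"}): (F(0), T),
    frozenset({"yellow"}): (F(0), T),
    frozenset({"red", "black"}): (T, T),
    frozenset({"red", "yellow"}): (T, T),
    frozenset({"black", "yellow"}): (2 * T, F(0)),
    frozenset({"red", "black", "yellow"}): (F(1), F(0)),
}
# The urn draw X: $100 on a red or yellow ball, $0 on a black ball.
X = {"red": 100, "black": 0, "yellow": 100}
PRIZES = sorted(set(X.values()))              # x_1 = 0 < x_2 = 100

def preimage(pred):
    """The event {omega : pred(X(omega))}."""
    return frozenset(w for w, x in X.items() if pred(x))

def mu_eq(x):
    """mu(X = x): objective and subjective belief in the prize x."""
    return MU[preimage(lambda v: v == x)]

def mu_geq(x):
    """mu(X >= x): complementary cumulative capability."""
    return MU[preimage(lambda v: v >= x)]

def combined(pair):
    """Combined belief B + beta, which is a probability."""
    return pair[0] + pair[1]

def fmt(pair):
    return f"({pair[0]}, {pair[1]})"

for x in PRIZES:
    print(f"x = {x}: mu(X = x) = {fmt(mu_eq(x))}, combined {combined(mu_eq(x))}; "
          f"mu(X >= x) = {fmt(mu_geq(x))}, combined {combined(mu_geq(x))}")
```

Each printed value reproduces one of the worked examples in this section.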

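The expression \(\left\lfloor \mu \right\rfloor \left( {X \ge x_{i} } \right)\) is a probability value, since \(\left\lfloor \mu \right\rfloor\) is a probability. We use this notation when \(\left\lfloor \mu \right\rfloor\) is constructed from a given mapping, \(\mu\). In more general settings, we shall have cause to use the following notation (noting that \(p\) is a probability):

\(p\left(X={x}_{i}\right)\triangleq p\left(\left\{\omega \in \Omega :X\left(\omega \right)={x}_{i}\right\}\right)\equiv p\left({x}_{i}\right),\quad p\left(X\ge {x}_{i}\right)\triangleq p\left(\left\{\omega \in \Omega :X\left(\omega \right)\ge {x}_{i}\right\}\right)\equiv p\left({X}_{i}\right).\)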

In general, there will be more than one function that maps from states to prizes. The different functions are indexed by \(h\). In our discussion below, when the context is clear that we are talking about a particular function, we will drop this indexing subscript to avoid clutter.

A function, \({X}_{h}\), defines a ‘lottery’, which is a distribution of beliefs across the range of prizes. Formally, we have the following definition(s).

Definition 3

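A capacity lottery, \(\mathfrak{L}\), is a (column) vector of \(n\) capacity values

\(\mathfrak{L}\triangleq {\left[\mathfrak{B}\left({x}_{1}\right),\mathfrak{B}\left({x}_{2}\right),\dots ,\mathfrak{B}\left({x}_{n}\right)\right]}_{h},\)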

with: \(1\ge {\sum }_{i=1}^{n}\mathfrak{B}\left({x}_{i}\right).\)

For example, for our Ellsberg urn draw which pays $100 on the drawing of a red or yellow ball (indexed, \(h=1\)), we have: \({\left[\mathfrak{B}\left(0\right), \mathfrak{B}\left(100\right)\right]}_{1}={[0, 1/3]}_{1}\).

It is worth noting that in our model, a decision-maker may discriminate between two capacity lotteries, even though they generate the same vector of capacities over prizes. For example, let there be two urns, one with black balls and white balls, and the other with red balls and blue balls. In each case, the proportion of balls is unknown and $100 is paid on the draw of a black ball in the first instance and a red ball in the second instance. The decision-maker could, in principle, strictly prefer one urn draw to the other.

The set of capacity lotteries is denoted by \(\boldsymbol{\mathfrak{L}}\), with typical element \(\mathfrak{L}\in \boldsymbol{\mathfrak{L}}\).

A special subset of \(\boldsymbol{\mathfrak{L}}\) is the set of capacity lotteries that satisfy \({\sum }_{i=1}^{n}\mathfrak{B}\left({x}_{i}\right)=1.\) This is the set of probability lotteries (also referred to as ‘roulette lotteries’); which is to say, it is the set of lotteries where the objective information allows the distribution of prizes to be represented by a canonical probability. Formally, we have the following definition.

Definition 4

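A probability lottery, \(L\in \boldsymbol{L}\subset \boldsymbol{\mathfrak{L}}\), is a (column) vector of \(n\) probability values

\(L\triangleq {\left[p\left({x}_{1}\right),p\left({x}_{2}\right),\dots ,p\left({x}_{n}\right)\right]}_{h},\)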

with: \(1={\sum }_{i=1}^{n}p\left({x}_{i}\right)\).

If we take \(\left\lfloor \mu \right\rfloor \left( {X = x_{i} } \right)\), as defined for the Ellsberg urn draw, as our example probability, then we have \(\left[p\left(0\right), p\left(100\right) \right]=[1/3, 2/3]\).

In general, decision-makers do not discriminate between probability lotteries that yield the same vector of objective probabilities over prizes.

Finally, we will make use of the following concept.

Definition 5

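A capacity-and-probability lottery (also known as a capability lottery), \(\Lambda\), is a (column) vector of \(n\) pairs

\(\Lambda \triangleq {\left[\mu \left({x}_{1}\right),\mu \left({x}_{2}\right),\dots ,\mu \left({x}_{n}\right)\right]}_{h},\)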

with: \(1 = \sum\nolimits_{i = 1}^{n} {\left\lfloor \mu \right\rfloor \left( {x_{i} } \right)} .\)

For example, for our Ellsberg urn draw, we have \(\left[\mu \left(0\right), \mu \left(100\right) \right]=\left[(0, 1/3), (1/3, 1/3)\right]\).

In this last kind of lottery, \(\left\lfloor \mu \right\rfloor = {\mathfrak{B}} + \beta\) is a probability; however, the objective part of belief (\(\mathfrak{B}\)) and the subjective part of belief (\(\beta\)) are kept separate. This is the key distinction between the approach to belief given in this paper and canonical decision theory.

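If we let \({L}_{{x}_{i}}\equiv \boldsymbol{x}_{i}\) be the probability lottery that puts 1 in the ith row of the vector and 0 in all the other rows (so that \(\boldsymbol{x}_{i}\) is the lottery that yields the prize \({x}_{i}\) for sure), then the given definitions of capacity, probability, and capability lotteries allow us to write

\(\mathfrak{L}=\sum_{i=1}^{n}\mathfrak{B}\left({x}_{i}\right)\boldsymbol{x}_{i},\quad L=\sum_{i=1}^{n}p\left({x}_{i}\right)\boldsymbol{x}_{i},\quad \Lambda =\sum_{i=1}^{n}\mu \left({x}_{i}\right)\otimes \boldsymbol{x}_{i},\)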

where \(\otimes\) is the Kronecker product; and we have dropped the subscript, \(h\), in the first equality.
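As a rough sketch of the Kronecker-product form, assuming (as we read the text) that each lottery is the sum over prizes of the relevant belief in \(x_i\) multiplied by the degenerate lottery \(\boldsymbol{x}_i\), with a Kronecker product \(\mu(x_i)\otimes\boldsymbol{x}_i\) for the pair-valued capability, the snippet below rebuilds the capacity, probability and capability lotteries of the Ellsberg urn draw from Definitions 3–5. The small `kron` helper is written out to keep the block dependency-free; `numpy.kron` computes the same product.

```python
from fractions import Fraction as F

def kron(A, B):
    """Kronecker product of matrices given as lists of rows."""
    return [[a * b for a in row_a for b in row_b]
            for row_a in A for row_b in B]

def unit(i, n):
    """Degenerate lottery x_i: an n x 1 column with 1 in row i."""
    return [[F(1) if r == i else F(0)] for r in range(n)]

def mat_sum(mats):
    """Element-wise sum of equally shaped matrices."""
    return [[sum(cells) for cells in zip(*rows)] for rows in zip(*mats)]

# mu(x_i) = (B(x_i), beta(x_i)) for the urn draw paying $100 on red or yellow,
# with prizes x_1 = 0 and x_2 = 100.
MU_X = [(F(0), F(1, 3)), (F(1, 3), F(1, 3))]
n = len(MU_X)

capacity = mat_sum([kron([[b]], unit(i, n)) for i, (b, s) in enumerate(MU_X)])
probability = mat_sum([kron([[b + s]], unit(i, n)) for i, (b, s) in enumerate(MU_X)])
# The 1 x 2 row mu(x_i) Kronecker the n x 1 column x_i gives an n x 2 block.
capability = mat_sum([kron([[b, s]], unit(i, n)) for i, (b, s) in enumerate(MU_X)])

for name, M in (("capacity", capacity), ("probability", probability),
                ("capability", capability)):
    print(name, [[str(v) for v in row] for row in M])
```

The capability lottery comes out as an \(n\times 2\) array whose ith row is the pair \(\mu(x_i)\), which is one way of reading the ‘vector of pairs’ in Definition 5.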

Note that each of these expressions and the corresponding expression given in Definitions 3–5 are the same object from the decision-maker’s point of view: in each case, the vector defines, respectively, a capacity lottery, a probability lottery, and a capability lottery.

We also make use of the following notation.

Definition 6

The (column) vector of complementary cumulative probabilities for each prize is denoted by: \({M} \triangleq \left[p\left({X}_{1}\right),p\left({X}_{2}\right), \dots ,p\left({X}_{i}\right),\dots ,p\left({X}_{n}\right)\right]\).

This definition then allows us to define the relevant concept of dominance.

Definition 7

A (probability) lottery, \(L^{\prime\prime}\), dominates lottery, \(L^{\prime}\), if: \(M^{\prime\prime} > M^{\prime}\); i.e., if \(p^{{L^{\prime\prime}}} \left( {X_{i} } \right) \ge p^{{L^{\prime}}} \left( {X_{i} } \right)\) for all \(i\), and \(p^{{L^{\prime\prime}}} \left( {X_{i} } \right) > p^{{L^{\prime}}} \left( {X_{i} } \right)\) for some \(i\).
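Definition 7 can be checked mechanically. The sketch below builds \(M\) by summing probabilities from each prize upwards (valid because probability lotteries are additive) and applies the weak-everywhere, strict-somewhere test; the three-prize lotteries are hypothetical examples, not taken from the paper.

```python
from fractions import Fraction as F

def ccdf(lottery):
    """M = [p(X_1), ..., p(X_n)], where p(X_i) = p(X >= x_i)."""
    return [sum(lottery[i:]) for i in range(len(lottery))]

def dominates(L2, L1):
    """Definition 7: M(L2) >= M(L1) in every entry and > in some entry."""
    M2, M1 = ccdf(L2), ccdf(L1)
    return (all(a >= b for a, b in zip(M2, M1))
            and any(a > b for a, b in zip(M2, M1)))

# Hypothetical probability lotteries over three prizes x_1 < x_2 < x_3.
L_a = [F(1, 2), F(0), F(1, 2)]        # M = [1, 1/2, 1/2]
L_b = [F(1, 3), F(1, 6), F(1, 2)]     # M = [1, 2/3, 1/2]
L_c = [F(1, 3), F(1, 3), F(1, 3)]     # M = [1, 2/3, 1/3]
x_1, x_2 = [1, 0, 0], [0, 1, 0]       # degenerate lotteries

print(dominates(L_b, L_a))                        # True: mass moved up from x_1 to x_2
print(dominates(L_c, L_a), dominates(L_a, L_c))   # False False: M vectors cross
print(dominates(x_2, x_1))                        # True: M_{x_2} > M_{x_1}
```

The last line illustrates the remark that follows: for degenerate lotteries, a higher sure prize yields a dominating vector of complementary cumulative probabilities.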

For future reference, we denote the vector of complementary cumulative probabilities for a degenerate lottery, \(\boldsymbol{x}^{\prime}\), by \(M_{x^{\prime}}\); and we note that \(M_{x^{\prime\prime}} > M_{x^{\prime}}\) if \(x^{\prime\prime} > x^{\prime}\).

Finally, we introduce an alternative way of representing capability lotteries in which the complementary cumulative capabilities express the likelihoods of obtaining increments in prizes. In that case, the capability of getting at least the increment \({x}_{1}-0\) is \(\mu \left({X}_{1}\right)\); the capability of getting at least the increment \({x}_{2}-{x}_{1}\) is \(\mu \left({X}_{2}\right)\); and so on. The object which ‘delivers’ the increment \(({x}_{i}-{x}_{i-1})\) is the difference between the lotteries \(\boldsymbol{x}_{i}\) and \(\boldsymbol{x}_{i-1}\), and is denoted by \(\boldsymbol{x}_{i}-\boldsymbol{x}_{i-1}\). Consequently, we have the following.

Definition 8

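The alternative representation of the capability lottery, \(\Lambda\), is given by

\(\underline{\Lambda }\triangleq \sum_{i=1}^{n}\mu \left({X}_{i}\right)\otimes \left(\boldsymbol{x}_{i}-\boldsymbol{x}_{i-1}\right),\) where \(\boldsymbol{x}_{0}\) denotes the zero vector.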

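As with \(\Lambda\), \(\underline {{\Lambda }}\) is a vector of vectors; specifically, it is an \(n\)-dimensional vector of pairs. Re-arranging terms on the right-hand side of the above expression allows us to re-write \(\underline {{\Lambda }}\) as a vector of differences of complementary cumulative capabilities

\(\underline{\Lambda }=\sum_{i=1}^{n}\left(\mu \left({X}_{i}\right)-\mu \left({X}_{i+1}\right)\right)\otimes \boldsymbol{x}_{i}=\left[\mu \left({X}_{1}\right)-\mu \left({X}_{2}\right),\dots ,\mu \left({X}_{n-1}\right)-\mu \left({X}_{n}\right),\mu \left({X}_{n}\right)\right],\) with \(\mu \left({X}_{n+1}\right)\equiv \left(0,0\right).\)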

For example, for our Ellsberg urn draw, we have, as noted above, \(\Lambda =\left[\mu \left(0\right), \mu \left(100\right)\right]=\left[(0, 1/3), (1/3, 1/3)\right]\) and \(\mu \left(X\ge 0\right)=\left(1, 0\right)\), \(\mu \left(X\ge 100\right)=(1/3, 1/3)\). Hence, it is easy to verify that \(\underline {{\Lambda }}=\left[(2/3, -1/3), (1/3, 1/3)\right]\).Footnote 11
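A short sketch of the alternative representation for the same urn draw. Because \(\mathfrak{B}\) is not additive, the complementary cumulative capabilities \(\mu(X_i)\) are taken as given (read off the event space) rather than summed from the \(\mu(x_j)\); the function names are hypothetical. The sketch builds \(\underline{\Lambda}\) both from the differences \(\mu(X_i)-\mu(X_{i+1})\) and from the increments \(\boldsymbol{x}_i-\boldsymbol{x}_{i-1}\) (taking \(\boldsymbol{x}_0\) to be the zero vector), and checks that the combined beliefs of its rows coincide with those of \(\Lambda\).

```python
from fractions import Fraction as F

ZERO = (F(0), F(0))
# Urn draw paying $100 on red or yellow: prizes x_1 = 0, x_2 = 100.
LAM = [(F(0), F(1, 3)), (F(1, 3), F(1, 3))]   # Lambda: mu(x_1), mu(x_2)
M = [(F(1), F(0)), (F(1, 3), F(1, 3))]        # mu(X_1), mu(X_2), read off the events

def alt_from_differences(M):
    """Row i is mu(X_i) - mu(X_{i+1}), with mu(X_{n+1}) = (0, 0)."""
    padded = list(M) + [ZERO]
    return [(b - b_next, s - s_next)
            for (b, s), (b_next, s_next) in zip(padded, padded[1:])]

def alt_from_increments(M):
    """Sum over i of mu(X_i) (x) (x_i - x_{i-1}), taking x_0 as the zero vector."""
    rows = [[F(0), F(0)] for _ in M]
    for i, (b, s) in enumerate(M):
        rows[i][0] += b              # +mu(X_i) on the row of x_i
        rows[i][1] += s
        if i > 0:                    # -mu(X_i) on the row of x_{i-1}
            rows[i - 1][0] -= b
            rows[i - 1][1] -= s
    return [tuple(r) for r in rows]

alt = alt_from_differences(M)
assert alt == alt_from_increments(M)
# Combined beliefs agree row by row, even though alt != LAM.
assert [b + s for b, s in alt] == [b + s for b, s in LAM]
print([f"({b}, {s})" for b, s in alt])
```

Feeding in a purely objective case, for example \(\mu(X_1)=(1,0)\) and \(\mu(X_2)=(2/3,0)\), returns \([(1/3,0),(2/3,0)]\), which is the corresponding \(\Lambda\) itself, as the next paragraph explains.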

The intuition behind the alternative representation of a lottery can be grasped by considering the special case where capabilities are probabilities. In that case, the representation implies that \(\mu \left({X}_{i}\right)=\left(p\left({X}_{i}\right),0\right).\) We then immediately see that \(\underline {{\Lambda }}=\Lambda\), since \(\mu \left({X}_{i}\right)-\mu \left({X}_{i+1}\right)=\mu \left({x}_{i}\right)\in {\mathbb{R}}\times \{0\}\). In the general case, where capabilities are given on \({\mathbb{R}}\times {\mathbb{R}}\), this equality does not hold, although we always have \(\left\lfloor \mu \right\rfloor \left( {X_{i} } \right) - \left\lfloor \mu \right\rfloor \left( {X_{i + 1} } \right) = \left\lfloor \mu \right\rfloor \left( {x_{i} } \right)\); and we can always recover the capabilities of \(\Lambda\) from the complementary cumulative capabilities of \(\underline {{\Lambda }}\) given our knowledge of the function \(X\) and the objective information \(\mathfrak{B}\).

We are now able to state the axioms.

6 Axioms

In this section of the paper, we discuss the axioms that govern the decision-maker’s behaviour. Our approach here is neither prescriptive (as in canonical decision theory) nor purely descriptive (as in behavioural economics). Rather, we take a middle ground: we suppose that there are principles of behaviour that are coherent and plausible, and which allow us to understand decision-makers’ behaviour; however, we do not argue that a decision-maker must act in accord with those principles on pain of acting irrationally. Nor, conversely, do we think that decision-makers are consistently incoherent (as behavioural economics allows). Perhaps the best way to understand our approach is by analogy with grammar: we suppose that there are rules of grammar that allow us to infer the speaker’s intended meaning, but we do not demand, for example, that infinitives never be split.

With regard to the formalism of what follows, the arguments are broadly in the spirit of Sarin and Wakker (1997).

The first of our axioms states the nature of the decision-maker’s preferences over \(\mathfrak{L}\).

Axiom 1

Ordering: there is a complete and transitive preference ordering, \(\succsim\), over \(\mathfrak{L}\).

The second axiom imposes continuity over probability lotteries.

Axiom 2

Continuity: for any probability lotteries, \(L,L^{\prime},L^{\prime\prime}\in{\varvec{L}}\), with \(L^{\prime\prime}\succsim L\succsim L^{\prime}\), there is a unique real number, \(\lambda\in[0,1]\subset\mathbb{R}\), such that \(\lambda L^{\prime\prime}+(1-\lambda)L^{\prime}\sim L\).

The next axiom connects the preferences over lotteries to a numerical order normalized with respect to best and worst lotteries. To state the axiom, we assume that there is a best possible prize, \({x}^{*}\), which is identified with the best degenerate lottery, \({L}_{{x}^{*}}\equiv {{\varvec{x}}}^{\boldsymbol{*}}\); and there is also a worst possible prize, \({x}_{*}\), which is identified with the worst degenerate lottery, \({L}_{{x}_{*}}\equiv {{\varvec{x}}}_{\boldsymbol{*}}\); with \({{\varvec{x}}}^{\boldsymbol{*}}\succ {{\varvec{x}}}_{\boldsymbol{*}}\) and \({{\varvec{x}}}^{\boldsymbol{*}}\succsim {{\varvec{x}}}_{{\varvec{i}}}\succsim {{\varvec{x}}}_{\boldsymbol{*}}\) for all \(i\). We then have the following definition.

Definition 9

The monotonicity property holds if, whenever \(L^{\prime}\sim\lambda^{\prime}{\varvec{x}}^{*}+(1-\lambda^{\prime}){\varvec{x}}_{*}\) and \(L^{\prime\prime}\sim\lambda^{\prime\prime}{\varvec{x}}^{*}+(1-\lambda^{\prime\prime}){\varvec{x}}_{*}\), we have \(L^{\prime\prime}\succsim L^{\prime}\iff\lambda^{\prime\prime}\ge\lambda^{\prime}\).

Axiom 3

Monotonicity: the monotonicity property holds, and preferences are monotone with respect to the total order on prizes, i.e., \(L{^{\prime}}{^{\prime}}\succsim L{^{\prime}}\iff \lambda {^{\prime}}{^{\prime}}\ge \lambda {^{\prime}}\) and \(M_{x^{\prime\prime}} > M_{x^{\prime}} \Rightarrow x^{\prime\prime} \succ x^{\prime}\).

The next two axioms deal with the way in which the decision-maker compares capacity lotteries and probability lotteries and how she uses that comparability to construct capability lotteries. To state the axioms, we need the following definition.

Definition 10

A binary lottery yields the maximum prize, \(x^{*}\), if a specified event occurs and the minimum prize, \(x_{*}\), otherwise. There are three subordinate notational definitions, as follows.

Definition 10.1

A binary capacity lottery for specified event \(A\) is denoted \({\left[{\mathfrak{B}}_{A},.\right]}_{{A}^{*}}\equiv {[\mathfrak{B}\left({x}^{*}\right),\mathfrak{B}\left({x}_{*}\right)]}_{{A}^{*}} \in \mathfrak{L}\), where \({A}^{*}\) denotes the fact that \({x}^{*}\) is delivered on event \(A\) and \({x}_{*}\) is delivered on event \({A}^{C}\) (the complement of \(A\)).

For example, the binary capacity lottery that delivers \({x}^{*}\) if a yellow ball is drawn from the Ellsberg 3-colour urn is denoted: \({\left[{\mathfrak{B}}_{y},.\right]}_{{y}^{*}}={\left[0,.\right]}_{{y}^{*}}\in \mathfrak{L}\), and, similarly, the binary capacity lottery that delivers \({x}^{*}\) if a red ball is drawn from the Ellsberg 3-colour urn is denoted: \({\left[{\mathfrak{B}}_{r},.\right]}_{{r}^{*}}={\left[1/3,.\right]}_{{r}^{*}}\in \mathfrak{L}\). As noted above, we will drop the subscript index when the context allows.

Definition 10.2

A binary probability lottery that yields probability \(p^{\prime}\) of delivering \(x^{*}\) is denoted \([p^{\prime}(x^{*}),.]\in{\varvec{L}}\); and, whenever \({\left[{\mathfrak{B}}_{A},.\right]}_{{A}^{*}}\sim[p^{\prime}(x^{*}),.]\), the latter (probability) lottery is denoted \([p_{A\sim},.]\).

For example, the binary probability lottery that delivers \(x^{*}\) on the call of heads on a fair coin toss is denoted \([p^{\prime}(x^{*}),.]=[1/2,.]\); and, if drawing a red or yellow ball from the Ellsberg 3-colour urn delivers \(x^{*}\), and if this capacity lottery is indifferent to the coin toss that delivers \(x^{*}\) on a correct call, then we have \({\left[{\mathfrak{B}}_{r\cup y},.\right]}_{{r\cup y}^{*}}={\left[1/3,.\right]}_{{r\cup y}^{*}}\sim[1/2,.]=[p_{r\cup y\sim},.]\).

Definition 10.3

A binary capability lottery that yields capability \((\mathfrak{B},\beta ){^{\prime}}\) of delivering \({x}^{*}\) is denoted: \([(\mathfrak{B},\beta ){^{\prime}}\left({x}^{*}\right),.]\).

For example, the binary capability lottery for the draw of a red or yellow ball is \([(1/3,1/3),.]\).

We can now state the first of these axioms.

Axiom 4

Comparability: for each binary capacity lottery, \({\left[{\mathfrak{B}}_{A},.\right]}_{{A}^{*}}\), there is a unique binary probability lottery, \([p_{A\sim},.]\), such that \({\left[{\mathfrak{B}}_{A},.\right]}_{{A}^{*}}\sim[p_{A\sim},.]\), with \([p_{A\sim},1-p_{A\sim}]\ge{[\mathfrak{B}(x^{*}),\mathfrak{B}(x_{*})]}_{{A}^{*}}\).

In our Ellsberg example, we have \({\left[{\mathfrak{B}}_{r\cup y},.\right]}_{{r\cup y}^{*}}\sim[p_{r\cup y\sim},.]=[1/2,.]\); and we observe that \([p_{r\cup y\sim},1-p_{r\cup y\sim}]=[1/2,1/2]\ge{[1/3,0]}_{{r\cup y}^{*}}={[\mathfrak{B}(x^{*}),\mathfrak{B}(x_{*})]}_{{r\cup y}^{*}}\).

Now, whenever \({\left[{\mathfrak{B}}_{A},.\right]}_{{A}^{*}}\sim [{p}_{A\sim },.]\), we can always find a pair of real numbers, \(({\alpha }_{A}, {\beta }_{A})\) with \({\alpha }_{A}>0\) and \({1\ge \beta }_{A}\ge 0\), such that: \({\mathfrak{B}}_{A}+{\beta }_{A}/{\alpha }_{A}={p}_{A\sim }\). Let the set of such pairs be denoted: \(\{({\alpha }_{A}, {\beta }_{A})\}\).

The next axiom provides further constraints on decision-makers’ preferences.

Axiom 5

Additivity: for disjoint \(A\) and \(B\) where: \({\left[{\mathfrak{B}}_{A},.\right]}_{{A}^{*}}\sim [{p}_{A\sim },.]\), \({\left[{\mathfrak{B}}_{B},.\right]}_{{B}^{*}}\sim [{p}_{B\sim },.]\) and \({\left[{\mathfrak{B}}_{A\cup B},.\right]}_{{A\cup B}^{*}}\sim [{p}_{A\cup B\sim },.]\), there is a triplet of pairs of real numbers, \((\left({\alpha }_{A}, {\beta }_{A}\right),\left({\alpha }_{B}, {\beta }_{B}\right), \left({\alpha }_{A\cup B}, {\beta }_{A\cup B}\right)),\) such that

A partial example of this axiom is given by our Ellsberg urn draw. Let \(A=\text{red}\) and \(B=\text{yellow}\), and set \(\alpha_A=\alpha_B=\alpha_{A\cup B}=2\) (i.e., the indifference curves in the Ellsberg example all have a slope of \(-2\), as depicted in Fig. 2). We have the objective beliefs \(\mathfrak{B}_A=1/3\), \(\mathfrak{B}_B=0\), and \(\mathfrak{B}_{A\cup B}=1/3\). The following subjective beliefs and probabilities are consistent with the axiom: \(\beta_A=0\), \(\beta_B=1/3\), \(\beta_{A\cup B}=1/3\), \(p_{A\sim}=1/3\), \(p_{B\sim}=1/6\), and \(p_{A\cup B\sim}=1/2\).
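The arithmetic of this example can be checked directly. The sketch below assumes the common value \(\alpha=2\) for all three events, recomputes each \(p_{E\sim}=\mathfrak{B}_E+\beta_E/\alpha_E\), and confirms that the sums \(\mathfrak{B}+\beta\) add up across the disjoint events red and yellow, as Proposition 1 leads us to expect. It checks only these numerical consequences, not the axiom itself; the dictionary keys and function name are hypothetical labels.

```python
from fractions import Fraction as F

ALPHA = 2  # common slope parameter alpha_E assumed for every event E

objective = {"red": F(1, 3), "yellow": F(0), "red_or_yellow": F(1, 3)}
subjective = {"red": F(0), "yellow": F(1, 3), "red_or_yellow": F(1, 3)}

def indifferent_probability(event):
    """p_{E~} = B_E + beta_E / alpha_E (the construction following Axiom 4)."""
    return objective[event] + subjective[event] / ALPHA

p = {event: indifferent_probability(event) for event in objective}
assert p == {"red": F(1, 3), "yellow": F(1, 6), "red_or_yellow": F(1, 2)}

# B + beta is additive over the disjoint events red and yellow.
total = {event: objective[event] + subjective[event] for event in objective}
assert total["red"] + total["yellow"] == total["red_or_yellow"] == F(2, 3)
```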

There are a couple of remarks to be made about this axiom. First, all the lotteries stated in the four indifference relations given above are probability lotteries. Second, the first three indifference relations in the axiom follow directly from the reflexivity of \(\sim\) (since \({\mathfrak{B}}_{A}+{\beta }_{A}/{\alpha }_{A}={p}_{A\sim }\), etc. by construction). Finally, the import of the axiom lies in the final indifference relation, which allows us to state the following proposition (proof in Appendix 1).

Proposition 1

\(\mathfrak{B}+\beta\) is a probability.

Axiom 5 implies that we focus our attention on the set of pairs in \(\{(\alpha_A,\beta_A)\}\) that make \(\mathfrak{B}+\beta\) a probability. This set need not be a singleton: there may be more than one set of pairs that makes \(\mathfrak{B}+\beta\) a probability. However, it turns out that a further axiom reduces the set to a unique set of pairs.

Before stating that axiom, it is worth noting that ambiguity averse decision-makers prefer probability lotteries to capacity lotteries in some well-defined sense, since the former provide a complete characterization of risk, whereas the latter provide only a partial characterization. The next axiom makes precise the sense in which decision-makers prefer probability lotteries to capacity lotteries (and, thereby, the sense in which they are ambiguity averse).

We proceed by supposing that a decision-maker’s relative aversion to ambiguity gives rise to a ‘discount factor’, meaning that a lower expected payoff on a probability lottery is indifferent to a higher expected payoff on an otherwise equivalent capacity lottery. Specifically, we make the following supposition.

Axiom 6

Aversion: for any binary capacity lottery, \({\left[{\mathfrak{B}}_{A},.\right]}_{{A}^{*}}\sim[p_{A\sim},.]\), there exists a unique real number, \(\gamma_A\), with \(1\ge\gamma_A>0\), such that \([p_{A\sim},.]\sim[\mathfrak{B}_A+\beta_A/\alpha_A,.]\sim[(\mathfrak{B}_A+\beta_A)\gamma_A,.]\).

The import of the axiom lies in it allowing us to state the following proposition (proof in Appendix 1).

Proposition 2

For each set, \(A\) , the pair, \(({\alpha }_{A}, {\beta }_{A})\) , is unique.
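One way to see how Axioms 4 and 6 pin the pair down (a sketch under the stated assumptions, not the proof in Appendix 1): the two relations \(\mathfrak{B}_A+\beta_A/\alpha_A=p_{A\sim}\) and \((\mathfrak{B}_A+\beta_A)\gamma_A=p_{A\sim}\) give \(\beta_A=p_{A\sim}/\gamma_A-\mathfrak{B}_A\) and, when \(p_{A\sim}>\mathfrak{B}_A\), \(\alpha_A=\beta_A/(p_{A\sim}-\mathfrak{B}_A)\). For the red-or-yellow draw, the value \(\gamma=3/4\) is not stated in the text; it is the value implied by \((1/3+1/3)\gamma=1/2\). The function name is hypothetical.

```python
from fractions import Fraction as F

def solve_alpha_beta(B_A, p_A, gamma_A):
    """Solve B_A + beta/alpha = p_A and (B_A + beta) * gamma_A = p_A for (alpha, beta).

    Assumes 0 < gamma_A <= 1 and p_A > B_A; if p_A == B_A, then beta = 0
    and this sketch does not cover that case.
    """
    if not 0 < gamma_A <= 1:
        raise ValueError("gamma_A must lie in (0, 1]")
    if p_A <= B_A:
        raise ValueError("this sketch assumes p_A > B_A")
    beta_A = p_A / gamma_A - B_A
    alpha_A = beta_A / (p_A - B_A)
    return alpha_A, beta_A

# Red-or-yellow draw: B = 1/3, p = 1/2, and the implied gamma = 3/4.
alpha, beta = solve_alpha_beta(F(1, 3), F(1, 2), F(3, 4))
assert (alpha, beta) == (2, F(1, 3))
```

The recovered values match the slope \(\alpha=2\) and subjective belief \(\beta=1/3\) used in the Ellsberg example.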

The implication of proposition 2 is that each binary capacity lottery, \({\left[{\mathfrak{B}}_{A},.\right]}_{{A}^{*}}\), can be identified with a binary capability lottery, \({\left[({\mathfrak{B}}_{A},{\beta }_{A}),.\right]}_{{A}^{*}}\). Moreover, since binary capacity lotteries are ordered by a preference relation (\(\succsim\)), there is an implied preference relation (\(\succcurlyeq\)) between binary capability lotteries, such that: \({\left[{\mathfrak{B}}_{A},.\right]}_{{A}^{*}}\succsim {\left[{\mathfrak{B}}_{B},.\right]}_{{B}^{*}}\iff {\left[({\mathfrak{B}}_{A},{\beta }_{A}),.\right]}_{{A}^{*}}\succcurlyeq {\left[({\mathfrak{B}}_{B},{\beta }_{B}),.\right]}_{{B}^{*}}\). Furthermore, the implied preference relation over binary capability lotteries contains information about the decision-maker’s degree of ambiguity aversion.

To see this, let us introduce the function, \(\varphi\), that sends \((\mathfrak{B}_A,\beta_A)\mapsto p_{A\sim}\) for all \(A\in\mathcal{F}\). We shall suppose that \(\varphi\) has a special quasi-linear form, \(\varphi(\mathfrak{B}_A,\beta_A)=\mathfrak{B}_A+\phi(\beta_A)\), so that \(\phi(\beta_A)=\beta_A/\alpha_A\). The function, \(\varphi\), tells us the rate at which the decision-maker transforms the uncertainty of a capability lottery into the equivalent risk in a probability lottery. ‘Equivalent’ here means that the binary capability lottery whose odds of winning are given by \((\mathfrak{B}_A,\beta_A)\) is indifferent to the binary capability lottery whose odds of winning are given by \((\mathfrak{B}_A+\phi(\beta_A),0)\), which is the representation of the winning odds of the probability lottery \([\mathfrak{B}_A+\phi(\beta_A),.]\). The example described immediately below will clarify this logic.

Take the simplest kind of case, which is where \(\alpha_A=\alpha\) for all \(A\in\mathcal{F}\), so that \(\phi(0)=0\) and \(\phi^{\prime}=\alpha^{-1}<1\), with \(\alpha>1\). An instance of this kind of case is the Ellsberg urn draw considered in Sect. 3 and depicted in Fig. 2. We recall that the Ellsberg urn that we have been using awards the best prize if a red or yellow ball is drawn and the worst prize if a black ball is drawn, where the urn contains 90 balls, of which 30 are red and 60 are either black or yellow in unknown proportions. In such a situation, the constant, \(\alpha\), measures the rate of exchange, or trade-off, between uncertainty and risk for the decision-maker. Recalling Table 1, we see that the decision-maker determines that the capability of winning (i.e., of getting \(x^{*}\)) is \((\mathfrak{B},\beta)(x^{*})=(1/3,1/3)\).Footnote 12 Now, if the decision-maker has \(\alpha=2\), then \(\varphi(1/3,1/3)=1/3+(1/3)/2=1/2\), so the (somewhat uncertain) binary capability lottery is equivalent to the (purely risky) binary probability lottery that has probabilities of \(1/2\) for winning and \(1/2\) for losing. Note that \(1/3+1/3=2/3>1/2\): the combined odds of winning in the original capability lottery exceed the probability of winning in the equivalent probability lottery. In other words, the decision-maker has discounted the odds of winning given by the uncertain lottery, so that it is equivalent to a risky lottery with lower total odds of winning. This is characteristic of an ambiguity averse decision-maker.

Speaking more generally, if a decision-maker dislikes ambiguity, we have: \(\phi (\beta )<\beta\) over the whole domain; and if \(\phi \left(\beta \right)>\beta\), the decision-maker prefers ambiguity. If the individual is indifferent to ambiguity, we have: \(\phi \left(\beta \right)=\beta\). Figure 1 in Sect. 3 depicts this last situation of indifference to ambiguity. Figure 2 in that section depicts a situation where the decision-maker is ambiguity averse and has: \(\phi (0)=0\), \({\phi }^{^{\prime}}={\alpha }^{-1}<1\). This is the simplest possible case of ambiguity aversion. However, as noted in our discussion of Sect. 3, the ‘indifference curves’ that characterize the decision-maker’s attitude to ambiguity will not be linear in general. Consider, again, the preferences depicted in Figs. 3 and 4 in that earlier section. In both cases, we everywhere have: \(\phi (\beta )<\beta\), and, furthermore, we suppose that: \(\phi (0)=0\), \({\phi }^{^{\prime}}(\beta )<1\) over the whole domain. Therefore, the decision-maker is ambiguity averse; but in the former case, the indifference curves are convex to the origin, and, in the latter, they are concave.
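The classification in this paragraph and the next two can be stated as a check on \(\phi\). In the sketch below, the function names and the three trial forms of \(\phi\) are hypothetical: it computes \(\varphi(\mathfrak{B},\beta)=\mathfrak{B}+\phi(\beta)\) and compares \(\phi(\beta)\) with \(\beta\) on a grid of \(\beta\in(0,1]\). With the linear form \(\phi(\beta)=\beta/2\) it reproduces the Ellsberg value \(\varphi(1/3,1/3)=1/2\).

```python
from fractions import Fraction as F

def varphi(B, beta, phi):
    """Quasi-linear equivalent probability: varphi(B, beta) = B + phi(beta)."""
    return B + phi(beta)

def ambiguity_attitude(phi, grid):
    """Classify by comparing phi(beta) with beta at each grid point."""
    if all(phi(b) < b for b in grid):
        return "averse"
    if all(phi(b) > b for b in grid):
        return "avid"
    if all(phi(b) == b for b in grid):
        return "neutral"
    return "mixed"

def linear_averse(b):
    return b / 2                 # alpha = 2, as in Fig. 2

def neutral(b):
    return b                     # indifference to ambiguity, as in Fig. 1

def varying(b):
    return b * (F(6, 5) - b)     # avid for beta < 1/5, averse for beta > 1/5

grid = [F(k, 10) for k in range(1, 11)]   # beta in (0, 1]

assert varphi(F(1, 3), F(1, 3), linear_averse) == F(1, 2)
assert ambiguity_attitude(linear_averse, grid) == "averse"
assert ambiguity_attitude(neutral, grid) == "neutral"
assert ambiguity_attitude(varying, grid) == "mixed"
```

A grid check of this kind only samples the domain; the conditions in the text are stated over the whole domain.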

In all these cases, the decision-maker is everywhere ambiguity averse. However, it may be that she is everywhere ambiguity avid. In that case, her indifference curves will ensure that: \(\phi \left(\beta \right)>\beta\), but their curvature may be linear or convex or concave, depending on her attitude to increasing amounts of uncertainty.

Finally, we note that the decision-maker need not be firmly ambiguity avid or ambiguity averse over the whole domain. Rather, her attitude may vary so that, for example, she becomes relatively more ambiguity averse as the capability, \((\mathfrak{B},\beta)\), increases. A set of preferences consistent with this thought is depicted in Fig. 6.

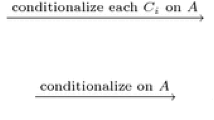

The final axiom is the substitution axiom, and it is best understood with the help of a diagram, together with the definitions given immediately below, which spell out the diagrammatic logic. The relevant diagram is given in Fig. 7.

Before outlining the logic of the axiom, we note that it has a more ‘dynamic’ structure than is usual for axioms of this type. Two comments are worth making here. The first is that, owing to the non-modularity of decision-makers’ beliefs under uncertainty, operations on beliefs do not commute, in general, so the logical sequence of the way in which decision-makers structure their thinking becomes more salient. The second point is that we are trying to recover the tacit nature of decision-makers’ logic rather than prescribing norms; therefore, if decision-makers have dynamic thought processes, then that is how we must proceed.

We proceed as follows. First, we have the following definition.

Definition 11

A capacity lottery, \(\mathfrak{L}\), is decomposable into sub-lotteries if there are lotteries, \(\{{\mathfrak{L}}_{1},{\mathfrak{L}}_{2},\dots {\mathfrak{L}}_{j},\dots \}\) and probabilities, \(\{{p}_{1},{p}_{2},\dots {p}_{j},\dots \},\) such that: \(\mathfrak{L}={\sum }_{j}{p}_{j}{\mathfrak{L}}_{j}\).

It is sufficient for our purposes in this section and the next to take the relevant decomposition to be unique. The more general case is discussed in Appendix 2.

Second, to understand a key step in the subsequent definition and axiom, we need to know how to construct the capability lottery that corresponds with a capacity lottery. To see how this is done, consider the capacity lottery

Label the events that ‘deliver’ the prizes, \({x}_{1},{x}_{2},\dots ,{x}_{i},\dots ,{x}_{n}\) as, respectively, events: 1, 2, …, i, …, n. For each event, i, construct the binary capacity lottery, \({\left[{\mathfrak{B}}_{i},.\right]}_{{i}^{*}}\). Utilize the discussion leading up to and including proposition 2 to find the subjective belief associated with event i, \(\beta \left(i\right)\). Then, the capability lottery, \(\Lambda\), that corresponds to the capacity lottery, \(\mathfrak{L}\), is

Further, if \({\sum }_{j}{p}_{j}{\mathfrak{L}}_{j}\) is a decomposed capacity lottery, then \({\sum }_{j}{p}_{j}{\Lambda }_{j}\) is the corresponding decomposed capability lottery.

We are now able to understand the following definition, which characterizes the components of Fig. 7 in order of implication.

Definition 12

Given a capacity lottery, \(\mathfrak{L}\) (such as lottery \(\mathfrak{L}^{\prime\prime}\) in Fig. 7):

1. \({\sum }_{j}{p}_{j}{\mathfrak{L}}_{j}\) is the representation of the lottery obtained by decomposing \(\mathfrak{L}\) into its \(J\) component sub-lotteries;

2. \({\sum }_{j}{p}_{j}{\Lambda }_{j}\) is the decomposed capability lottery that corresponds to \({\sum }_{j}{p}_{j}{\mathfrak{L}}_{j}\);

3. \({\sum }_{j}{p}_{j}\underline {{\Lambda }}_{j}\) is the alternative representation of the decomposed capability lottery, \({\sum }_{j}{p}_{j}{\Lambda }_{j}\);

4. \({\sum }_{j}{p}_{j}\underline {{\Lambda }}_{*j}\) is the alternative representation of the decomposed capability lottery that results from substituting \({\lambda }_{i}{{\varvec{x}}}^{*}+\left(1-{\lambda }_{i}\right){{\varvec{x}}}_{*}\) for \({{\varvec{x}}}_{{\varvec{i}}}\) in each \(\underline {{\Lambda }}_{j}\), where we note that

$${\varvec{x}}_{i}\sim\lambda_{i}{\varvec{x}}^{*}+(1-\lambda_{i}){\varvec{x}}_{*},$$

that \(\underline{\Lambda}_{*j}=(1,0)\otimes({\varvec{x}}_{*}-0)+(\mathfrak{B}_{*j},\beta_{*j})\otimes({\varvec{x}}^{*}-{\varvec{x}}_{*})\), and that

\((\mathfrak{B}_{*j},\beta_{*j})=\left(\sum_{i=1}^{n}\mathfrak{B}(X_i)(\lambda_i-\lambda_{i-1}),\ \sum_{i=1}^{n}\beta(X_i)(\lambda_i-\lambda_{i-1})\right)_j\);

5. \({\sum }_{j}{p}_{j}{\Lambda }_{*j}\) is the standard representation of \({\sum }_{j}{p}_{j}\underline {{\Lambda }}_{*j}\), obtained by setting \({\Lambda }_{*j} = ({\mathfrak{B}}_{*j} ,\beta_{*j} ) \otimes {\varvec{x}}^{\user2{*}} + \left( {1 - ({\mathfrak{B}}_{*j} + \beta_{*j} ),{ }0} \right) \otimes {\varvec{x}}_{\user2{*}}\) for each \(j\);

6. \(\sum_j p_j L_{*j}\) is the sum of probability sub-lotteries obtained by setting \(L_{*j}\equiv[p_{*j}(x^{*}),.]=[\mathfrak{B}_{*j}+\phi(\beta_{*j}),.]\), where \([(\mathfrak{B}_{*j},\beta_{*j})(x^{*}),.]\sim[(\mathfrak{B}_{*j}+\phi(\beta_{*j}),0)(x^{*}),.]\) for each \(j\) (here, \([(\mathfrak{B}_{*j},\beta_{*j})(x^{*}),.]\equiv\Lambda_{*j}\); note that setting \(L_{*j}\) given \(\Lambda_{*j}\) in this step, i.e., implementing \(\Lambda_{*j}\to L_{*j}\), is executed before the probability-weighted sub-lotteries are summed, which occurs in the next step)Footnote 13;

7. \({L}_{*}\triangleq {\sum }_{j}{p}_{j}{L}_{*j}\) is a binary probability lottery.
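Steps 4, 6 and 7 amount to a short computation. The Python sketch below is one reading of them, with hypothetical names: each sub-lottery is given by its weight \(p_j\), its complementary cumulative capabilities \(\mu(X_i)\) as \((\mathfrak{B},\beta)\) pairs, and the substitution weights \(\lambda_i\). We take \(\lambda_0=0\), which is our reading of the \((1,0)\otimes({\varvec{x}}_{*}-0)\) term in step 4. Step 5 is a change of notation and is skipped, and \(L_*\) is represented by its probability of \(x^{*}\). As in step 6, \(\phi\) is applied to each sub-lottery before the \(p_j\)-weighted sum is taken.

```python
from fractions import Fraction as F

def normalized_capability(ccc, lambdas):
    """Step 4: (B_*, beta_*) = sum_i mu(X_i) * (lambda_i - lambda_{i-1}), lambda_0 = 0."""
    B_star, beta_star, prev = F(0), F(0), F(0)
    for (B_i, beta_i), lam in zip(ccc, lambdas):
        B_star += B_i * (lam - prev)
        beta_star += beta_i * (lam - prev)
        prev = lam
    return B_star, beta_star

def binary_probability_lottery(sub_lotteries, phi):
    """Steps 6-7: map each Lambda_*j to p_*j = B_*j + phi(beta_*j), then sum with weights p_j."""
    total = F(0)
    for p_j, ccc_j, lambdas_j in sub_lotteries:
        B_j, beta_j = normalized_capability(ccc_j, lambdas_j)
        total += p_j * (B_j + phi(beta_j))   # phi applied before summing
    return total

def phi(beta):
    return beta / 2   # linear case with alpha = 2 (assumed)

# Ellsberg red-or-yellow draw, prizes 0 = x_* and 100 = x^*, so lambda = (0, 1).
ellsberg = (F(1), [(F(1), F(0)), (F(1, 3), F(1, 3))], [F(0), F(1)])
assert normalized_capability(ellsberg[1], ellsberg[2]) == (F(1, 3), F(1, 3))
assert binary_probability_lottery([ellsberg], phi) == F(1, 2)

# Hypothetical decomposition: a 50:50 mixture of that draw and a sure x^*.
sure_best = (F(1), [(F(1), F(0))], [F(1)])
mixture = [(F(1, 2),) + ellsberg[1:], (F(1, 2),) + sure_best[1:]]
assert binary_probability_lottery(mixture, phi) == F(3, 4)
```

With a linear \(\phi\) the order of mapping and summing makes no difference; it matters once \(\phi\) is non-linear, which is why step 6 fixes it.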

With that in hand, we can state what the diagram depicts, viz.:

Axiom 7

Substitution: given a pair of capacity lotteries, \(\mathfrak{L}^{\prime}\) and \(\mathfrak{L}^{\prime\prime}\), we can construct a pair of binary probability lotteries, \(L_{*}^{\prime}\) and \(L_{*}^{\prime\prime}\), such that \(\mathfrak{L}^{\prime\prime}\succsim\mathfrak{L}^{\prime}\iff L_{*}^{\prime\prime}\succsim L_{*}^{\prime}\).

In other words: there is an isotone correspondence between the given capacity lotteries and the constructed binary probability lotteries.

The intuition for the logic underlying axiom 7 goes as follows. The decision-maker begins by using the objective information to construct a capacity lottery. Where possible, this lottery is broken up into its modular components (the decomposition into component sub-lotteries); the motivation here is to make explicit the objective probabilities of the decision problem. The decision-maker then forms subjective beliefs to put alongside the objectively given information, which allows for the formation of capability lotteries. These lotteries are then put in a form that makes clear the incremental impact of beliefs on obtaining prizes, which warrants the use of the alternative representation of the capability lotteries.Footnote 14 To allow a comparison across lotteries, this representation is normalized by putting all lotteries in terms of the capabilities of obtaining the best and worst prizes (by way of substitution). Subsequent parts of the logical structure (on the right-hand side of the diagram) ultimately render all lotteries in the form of binary probability lotteries for purposes of comparison.

Two points are worth making about axiom 7 and the axioms preceding it. First, the axioms are not taken to be constitutive of ‘rational’ decision-making—they merely describe a kind of ‘grammar of decision making’ which is internally coherent and behaviourally plausible. Second, it is not supposed that decision-makers are explicitly aware of the logic of their decision-making processes. Just as competent language speakers need not be grammarians, neither need competent decision-makers be logicians.

That being said, we are now in a position to state the following theorem, which follows from the seven axioms stated above (proof in Appendix 1).

Theorem

Axioms 1–7 imply that there exists a vector-valued belief function, \(\mu :\mathcal{F}\to {\mathbb{R}}\times {\mathbb{R}}\) ; a real-valued utility function, \(u:X\to {\mathbb{R}}\) ; and a probability-weighted, ambiguity-adjusted, rank-dependent utility function such that, for any two (capacity) lotteries

Remark

If we let: \({\mathbf{E}}_{{\varvec{\mu}}}\left[u\left(x\right)\right]\triangleq \sum_{i=1}^{n}{\mu }^{\Lambda }\left({X}_{i}\right)\left(u\left({{\varvec{x}}}_{{\varvec{i}}}\right)-u\left({{\varvec{x}}}_{{\varvec{i}}-1}\right)\right)\), and let \(\mathbf{E}[.]\) denote the standard expectation operator, then the decision-maker’s problem is to find:

A few special cases are of interest. If: \(\varphi \left(\mathfrak{B},\beta \right)=\mathfrak{B}+\phi (\beta )\), and \(\phi \left(0\right)=0\) and \({\phi }^{^{\prime}}=1\) over the whole domain (\({\mathbb{R}}_{+}^{2}\) under the unit simplex), then \(\varphi \left( {{\mathbf{E}}_{{\varvec{\mu}}} \left[ {u\left( x \right)} \right]} \right) = {\mathbf{E}}\left[ {\left\lfloor u \right\rfloor \left( x \right)} \right] \triangleq \sum\nolimits_{i = 1}^{n} {\left\lfloor {\mu^{{\Lambda }} } \right\rfloor \left( {x_{i} } \right)u\left( {{\varvec{x}}_{{\varvec{i}}} } \right)}\), and the problem reduces to expected utility maximization. This is the case assumed in subjective expected utility theory where any difference between objective and subjective beliefs is elided, and the former are subsumed by the latter. It is the canonical case of ambiguity neutrality.

More generally, if \(\phi(0)=0\) and \(\phi^{\prime}=\alpha^{-1}\), with \(\alpha>0\), over the whole domain, then \(\alpha\,\varphi\left(\mathbf{E}_{\boldsymbol{\mu}}\left[u(x)\right]\right)=\mathbf{E}\left[\left\lfloor u\right\rfloor(x)\right]+(\alpha-1)\,\mathbf{E}_{\mathfrak{B}}\left[u(x)\right]\), where \(\mathbf{E}_{\mathfrak{B}}\left[u(x)\right]\triangleq\sum_{i=1}^{n}\mathfrak{B}^{\mathfrak{L}}(X_i)\left(u({\varvec{x}}_{{\varvec{i}}})-u({\varvec{x}}_{{\varvec{i}}-1})\right)\). Since \(\alpha>0\), the factor \(\alpha\) on the left does not affect the ranking of lotteries, and the problem reduces to maximizing a weighted sum of expected utility and Choquet expected utility:

In this special case, the greater is the degree of ambiguity aversion—as given by the parameter, \(\alpha\)—the greater is the emphasis on Choquet expected utility. This puts increasing emphasis on the objective information and diminishes the significance of purely subjective beliefs in the decision maker’s appraisal. If \(\alpha =1\), the Choquet term vanishes, and the decision problem becomes one of maximizing expected utility as earlier noted. Notably, this additive form corresponds to—i.e., generalizes—the functional form suggested by Ellsberg (1961, pp. 664–65). As we shall now see, this is the form appropriate for solving his paradoxes, although the more general form is needed to solve Machina’s paradoxical cases.
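For the linear case, the algebra behind this decomposition can be checked numerically. With \(\phi(\beta)=\beta/\alpha\), \(\varphi(\mathbf{E}_{\boldsymbol{\mu}}[u])=\mathbf{E}_{\mathfrak{B}}[u]+\mathbf{E}_{\beta}[u]/\alpha\), where \(\mathbf{E}_{\beta}\) (our notation) is the second component of \(\mathbf{E}_{\boldsymbol{\mu}}\); and, reading \(\left\lfloor \mu \right\rfloor\) as \(\mathfrak{B}+\beta\) (our assumption), \(\mathbf{E}[\lfloor u\rfloor(x)]=\mathbf{E}_{\mathfrak{B}}[u]+\mathbf{E}_{\beta}[u]\). Together these give \(\alpha\,\varphi(\mathbf{E}_{\boldsymbol{\mu}}[u])=\mathbf{E}[\lfloor u\rfloor(x)]+(\alpha-1)\mathbf{E}_{\mathfrak{B}}[u]\), a positive multiple of \(\varphi(\mathbf{E}_{\boldsymbol{\mu}}[u])\) that ranks lotteries identically. The sketch below checks this on a hypothetical three-prize lottery, taking \(u({\varvec{x}}_0)=0\); all numbers and names are illustrative.

```python
from fractions import Fraction as F

def vector_expectation(ccc, utils):
    """E_mu[u] = sum_i mu(X_i) (u(x_i) - u(x_{i-1})), componentwise, with u(x_0) = 0."""
    e_B, e_beta, prev = F(0), F(0), F(0)
    for (B_i, beta_i), u in zip(ccc, utils):
        e_B += B_i * (u - prev)
        e_beta += beta_i * (u - prev)
        prev = u
    return e_B, e_beta

def expected_utility(ccc, utils):
    """E[floor u] with point masses floor(mu(X_i)) - floor(mu(X_{i+1})), floor = B + beta."""
    sums = [B + beta for B, beta in ccc] + [F(0)]
    return sum((sums[i] - sums[i + 1]) * u for i, u in enumerate(utils))

alpha = 2
ccc = [(F(1), F(0)), (F(2, 5), F(3, 10)), (F(1, 10), F(1, 5))]  # hypothetical mu(X_i)
utils = [F(0), F(50), F(100)]                                   # u(x_1) < u(x_2) < u(x_3)

e_B, e_beta = vector_expectation(ccc, utils)
phi_value = e_B + e_beta / alpha                                # varphi(E_mu[u])
assert (e_B, e_beta) == (25, 25)
assert expected_utility(ccc, utils) == 50
assert alpha * phi_value == expected_utility(ccc, utils) + (alpha - 1) * e_B
```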

7 Paradoxes

In this section, we successively explore the paradoxes posed by Ellsberg and extended by Machina. The first case is the familiar Ellsberg three-colour paradox (Ellsberg, 1961), and the second and third cases are Machina’s ‘reflection’ and ‘50:51’ examples (Machina, 2009). Finally, we examine Blavatskyy’s (2013a) interesting twist of the reflection example. These cases are sufficiently representative of the paradoxes to be adequate for our arguments here. The reader will see that the logic applies to other examples provided by Ellsberg and Machina.

In each case, we proceed in three stages: first, a verbal description of the decision situation is given along with a table that represents the situation; second, the beliefs of the decision-maker are specified for the relevant events; and, finally, the decision rule is used to determine the optimal (pairwise) choices.

7.1 The Ellsberg 3-colour problem

In this decision problem, there is a single urn containing 90 balls. 30 of those balls are red, and the remaining 60 are black or yellow in unknown proportions. The payoffs are as given in the table. Acts \(f\) and \(g\) are to be compared, and \(f{^{\prime}}\) and \(g{^{\prime}}\) are to be compared. Decision-makers who maximize expected utility utilizing (real-valued) probabilities obey the choice relation: \(f\) is chosen over \(g\) if and only if \(f{^{\prime}}\) is chosen over \(g{^{\prime}}\). Evidence has shown that this implication often fails to hold in practice (Table 2).

The beliefs of the decision-maker which are relevant to her decision-making are

We may assume that the decision-maker has: \(\phi (0)=0\), \({\phi }^{^{\prime}}={\alpha }^{-1}<1\) over the whole domain; furthermore, we may, without prejudice, identify utilities with dollar payoffs, in which case, we have the following (the magnitude following each lottery is the value of the lottery):

Thus, \(f\) is chosen over \(g\), and \(g{^{\prime}}\) is chosen over \(f{^{\prime}}\), which is the behaviour to be explained. The kinds of preferences that give rise to this choice are depicted in Fig. 2.
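The pairwise choices can be reproduced with a few lines of arithmetic. The sketch below assumes the standard Ellsberg acts (\(f\) pays 100 on red, \(g\) on black, \(f'\) on red or yellow, \(g'\) on black or yellow, and 0 otherwise) and beliefs that we take to be consistent with those used earlier: \(\mu(r)=(1/3,0)\), \(\mu(b)=\mu(y)=(0,1/3)\), \(\mu(r\cup y)=(1/3,1/3)\) and \(\mu(b\cup y)=(2/3,0)\). With \(\alpha=2\) and utilities equal to payoffs, each act's value is \(100\,\varphi(\mu(\text{win}))\).

```python
from fractions import Fraction as F

ALPHA = 2
PRIZE = 100  # utility identified with the payoff; losing pays 0

# Assumed capabilities (B, beta) of the winning event for each act.
winning_capability = {
    "f": (F(1, 3), F(0)),        # red
    "g": (F(0), F(1, 3)),        # black
    "f'": (F(1, 3), F(1, 3)),    # red or yellow
    "g'": (F(2, 3), F(0)),       # black or yellow
}

def value(act):
    """PRIZE * (B + beta / alpha): the linear-case value of a bet on one event."""
    B, beta = winning_capability[act]
    return PRIZE * (B + beta / ALPHA)

values = {act: value(act) for act in winning_capability}
assert values["f"] > values["g"]      # 100/3 versus 50/3
assert values["g'"] > values["f'"]    # 200/3 versus 50
```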

This example has become the canonical example of Ellsberg’s paradox. Less well known is the fact that we can account for the decision-maker’s choices by utilizing the additive formula that Ellsberg proposes. This is equivalent to saying that the decision-maker’s degree of ambiguity aversion can be reduced to the parameter \(\alpha\).

7.2 The Machina reflection example

In this decision problem, there is a single urn containing 100 balls. Fifty of the balls are labelled E1 or E2 in unknown proportions, while the remaining 50 balls are labelled E3 or E4, also in unknown proportions. The payoffs are as given in the table. Acts \(f\) and \(g\) are to be compared, and \(f{^{\prime}}\) and \(g{^{\prime}}\) are to be compared. Decision-makers who maximize expected utility utilizing (real-valued) probabilities obey the choice relation: \(f\) is chosen over \(g\) if and only if \(f{^{\prime}}\) is chosen over \(g{^{\prime}}\). Evidence has shown that this implication can fail to hold in practice (l’Haridon & Placido, 2010) (Table 3).

The beliefs of the decision-maker which are relevant to her decision-making are

We may assume that \(\phi (0)=0\), \({\phi }^{^{\prime}}(b)<1\); furthermore, and without prejudice, we may identify utilities with dollar payoffs (divided by 1000), in which case, we have

Thus, \(g\) is chosen over \(f\), if and only if \(f{^{\prime}}\) is chosen over \(g{^{\prime}}\); and \(f\) is chosen over \(g\), if and only if \(g{^{\prime}}\) is chosen over \(f{^{\prime}}\), which is the behaviour to be explained. Note that if we have: \(\phi \left(4\right)<\phi \left(2\right)+\phi (2)\), then the indifference curves will be convex to the origin as in Fig. 3, and the decision-maker will prefer \(f\) to \(g\) and \(g{^{\prime}}\) to \(f{^{\prime}}\); conversely, if: \(\phi \left(4\right)>\phi \left(2\right)+\phi (2)\), then the indifference curves will be concave to the origin as in Fig. 4, and the decision-maker will prefer \(g\) to \(f\) and \(f{^{\prime}}\) to \(g{^{\prime}}\).
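The two cases distinguished above turn only on whether \(\phi(4)\) falls below or above \(\phi(2)+\phi(2)\). The sketch below encodes that rule as stated in the text and applies it to two hypothetical forms of \(\phi\), each with \(\phi(0)=0\) and \(\phi^{\prime}<1\) on \([0,4]\): a concave one, which gives the Fig. 3 pattern of choices, and a convex one, which gives the Fig. 4 pattern.

```python
from fractions import Fraction as F

def reflection_choices(phi):
    """Predicted choices in the reflection example, following the text's rule."""
    lhs, rhs = phi(4), phi(2) + phi(2)
    if lhs < rhs:
        return ("f", "g'")   # f over g and g' over f' (Fig. 3)
    if lhs > rhs:
        return ("g", "f'")   # g over f and f' over g' (Fig. 4)
    return None              # boundary case, not discussed in the text

def concave_phi(b):
    return F(9, 10) * b - F(b * b, 40)   # phi'(b) = 9/10 - b/20

def convex_phi(b):
    return F(b * b, 10)                  # phi'(b) = b/5

assert reflection_choices(concave_phi) == ("f", "g'")   # 16/5 < 17/5
assert reflection_choices(convex_phi) == ("g", "f'")    # 8/5 > 4/5
```

A linear \(\phi\) lands exactly on the boundary, which is consistent with the observation below that the Choquet special case does not deliver the requisite pairs of choices.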

These explanations accord with Machina’s own. First, and speaking formally, we note that the general representation of preferences is used: the special case that utilizes the Choquet expectation, \({\mathbf{E}}_{\mathfrak{B}}\left[.\right]\), does not give the requisite pairs of choices. Second, and speaking now intuitively, the motivation for choosing, say, \(g\) over \(f\) is that \(g\) concentrates all the ambiguity over events E3 and E4, while the outcome of events E1 and E2 is unambiguous. This accords with a preference for situations that are clearly uncertain or clearly risky rather than a mixture of the two. This is just as depicted in Fig. 4, and the explanation accords with our standard intuition about the shape of indifference curves.

7.3 The Machina 50:51 example

In this decision problem, there is a single urn containing 101 balls. Fifty of the balls are labelled E1 or E2 in unknown proportions, while the remaining 51 balls are labelled E3 or E4, also in unknown proportions. The payoffs are as given in the table. Acts \(f\) and \(g\) are to be compared, and \(f{^{\prime}}\) and \(g{^{\prime}}\) are to be compared. Decision-makers who maximize expected utility utilizing (real-valued) probabilities obey the choice relation: \(f\) is chosen over \(g\) if and only if \(f{^{\prime}}\) is chosen over \(g{^{\prime}}\). Hypothetical reasoning suggests that this implication might fail in practice; specifically, it is reasonable to suppose that an ambiguity averse decision-maker will prefer \(f\) to \(g\) but will generally prefer \(g{^{\prime}}\) to \(f{^{\prime}}\) (Machina, 2009) (Table 4).

The beliefs of the decision-maker which are relevant to her decision-making are

We may, without prejudice, identify utilities with dollar payoffs, in which case, we have

Thus, \(f\) is chosen over \(g\) if \(200/101>\phi (2)\), and \(g{^{\prime}}\) is chosen over \(f{^{\prime}}\) if \(\phi \left(4\right)>200/101+\phi (2)\). Both conditions hold if \(\phi \left(4\right)>400/101>\phi \left(2\right)+\phi (2)\): the right-hand inequality gives \(\phi (2)<200/101\), and then \(\phi (4)>400/101=200/101+200/101>200/101+\phi (2)\). This accounts for the behaviour to be explained. The preferences that are consistent with this outcome are depicted in Fig. 4.
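The two conditions and the combined sufficient condition can be checked with exact rational arithmetic, so that \(200/101\) is not rounded. The following is a minimal sketch. The function names and the trial function \(\phi(x)=x^{2}/4\), which is convex with \(\phi(0)=0\) and so has the superadditive shape of Fig. 4, are hypothetical illustrations and are not taken from the text.

```python
from fractions import Fraction

THRESHOLD = Fraction(200, 101)  # kept exact rather than as a float


def machina_50_51(phi):
    """Return (f chosen over g, g' chosen over f') from the two conditions in the text."""
    f_over_g = THRESHOLD > phi(2)
    g_prime_over_f_prime = phi(4) > THRESHOLD + phi(2)
    return f_over_g, g_prime_over_f_prime


def sufficient(phi):
    """Combined condition phi(4) > 400/101 > phi(2) + phi(2)."""
    return phi(4) > 2 * THRESHOLD > phi(2) + phi(2)


def phi_convex(x):
    """Hypothetical convex trial function with phi(0) = 0."""
    return Fraction(x * x, 4)


print(machina_50_51(phi_convex))  # (True, True)
print(sufficient(phi_convex))     # True
```

For comparison, a linear trial function (for example `phi = Fraction`) fails the first condition. It therefore produces the choice of \(g\) over \(f\) together with \(g{^{\prime}}\) over \(f{^{\prime}}\) rather than the pattern to be explained.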

7.4 The Blavatskyy twist of the Machina reflection example

In this decision problem, there is a fair coin and a bag containing an unknown number of black and white marbles. There are four possible states: \(\{\text{heads and black},\text{ heads and white},\text{ tails and black},\text{ tails and white}\}\). In the table, the top-left quadrant describes act \({f}_{1}\), which is to be compared with act \({g}_{1}\) in the top right; acts \({f}_{2}\) and \({g}_{2}\), in the bottom half of the table, are likewise to be compared. It is assumed that $4,000 < $x. Hypothetical reasoning suggests that if \({f}_{1}\) is chosen over \({g}_{1}\), then \({f}_{2}\) is chosen over \({g}_{2}\); however, many decision models cannot generate this choice profile (Blavatskyy, 2013a) (Table 5).

The beliefs of the decision-maker which are relevant to her decision-making are: \(\mu \left({\varnothing }\right)=(0, 0)\), \(\mu \left(hb\right)=\mu \left(hw\right)=\mu \left(tb\right)=\mu \left(tw\right)=(0, 1/4)\), \(\mu \left(\text{heads}\right)=\mu \left(\text{tails}\right)=(1/2, 0)\), and \(\mu \left(\text{black}\right)=\mu \left(\text{white}\right)=(0, 1/2)\).

We may, without prejudice, identify utilities with dollar payoffs; and we may delete the magnitude, \({u}_{0}\), which is common to all payoffs. In that case, we have

Thus, \({f}_{1}\) is chosen over \({g}_{1}\) if the decision-maker is ambiguity averse, and \({f}_{2}\) is chosen over \({g}_{2}\) if the decision-maker has an increasing marginal rate of substitution between beliefs, which is implied by \(\phi \left(\frac{1}{2}\left({u}_{x}-{u}_{0}\right)\right)>\phi \left(\frac{1}{2}\left({u}_{x}-{u}_{4}\right)\right)+\phi \left(\frac{1}{2}\left({u}_{4}-{u}_{0}\right)\right)\). This accounts for the behaviour to be explained. The preferences that are consistent with this outcome are depicted in Fig. 4.
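Because \(\frac{1}{2}(u_x-u_4)+\frac{1}{2}(u_4-u_0)=\frac{1}{2}(u_x-u_0)\), the condition for choosing \(f_2\) over \(g_2\) is a superadditivity requirement on \(\phi\) at these two arguments. The following minimal sketch checks it. The utilities are hypothetical and measured in thousands of dollars (\(u_0=0\), \(u_4=4\), \(u_x=10\), so that $4,000 < $x holds). The trial functions for \(\phi\) are also illustrative assumptions.

```python
import math
from fractions import Fraction


def f2_over_g2(phi, u0, u4, ux):
    """Condition from the text: phi((ux-u0)/2) > phi((ux-u4)/2) + phi((u4-u0)/2)."""
    half = Fraction(1, 2)
    whole = phi(half * (ux - u0))  # its argument is the sum of the two arguments below
    parts = phi(half * (ux - u4)) + phi(half * (u4 - u0))
    return whole > parts


# Hypothetical utilities, in thousands of dollars: u0 = 0, u4 = 4, ux = 10.
print(f2_over_g2(lambda t: t * t, 0, 4, 10))  # convex phi with phi(0) = 0
print(f2_over_g2(math.sqrt, 0, 4, 10))        # concave phi
```

A convex \(\phi\) with \(\phi(0)=0\) is superadditive, which is why the squared trial function satisfies the condition. The square root does not satisfy it, and the linear case sits exactly on the boundary.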

7.5 Observations

We conclude this section by delivering on the promissory note in our introduction, which made two claims. First, we undertook to explain both the Ellsberg and the Machina paradoxes in terms consistent with the authors’ own proposals. Second, we undertook to discriminate between the two paradoxes.

With regard to the first point, we have already observed that the functional form proposed by Ellsberg, suitably generalized, is appropriate for explaining his paradox. This form is the additive function

Moreover, we have shown that this form is inadequate to account for the paradox(es) of Machina. In general, a non-additive functional form, derived from non-linear indifference curves on the space of binary capability lotteries, is required to account for the Machina examples. In accounting for these cases, we have tried to adhere to Machina’s admonition that “the study of choice under uncertainty should proceed in a manner similar to standard consumer theory” (Machina, 2009, p. 390). Our discussion of varying degrees of ambiguity aversion in terms of the decision-maker’s indifference curves over the space of binary beliefs has been undertaken with this advice in mind.

With regard to distinguishing between the Ellsberg and the Machina paradoxes, we summarize our conclusions in Table 6.

The model we have proposed allows us to discriminate between the two paradoxes, and it allows us to place them in a comprehensible architecture of decision-making.

8 Further paradoxes

In this section, we want to look at two further paradoxes and show that our model can elucidate these cases, too. The first paradox is another one of Ellsberg’s—viz., the n-colour problem.

8.1 The Ellsberg n-colour problem

In his 1962 dissertation, but not in his 1961 article, Ellsberg posed what has come to be known as the n-colour problem (Ellsberg, 1962, pp. 199–209; see also the discussion in Machina & Siniscalchi, 2014, pp. 747–48, which our presentation largely follows). In this problem, which is described in Table 7, there are two urns, each containing 100 balls. In urn I, 10 balls are coloured red, 10 balls are coloured yellow, 10 balls are coloured black, etc., through to the 10 balls that are coloured mauve. In urn II, the range of the colours of the balls is the same as in urn I, but they are distributed in unknown proportions. The payoffs are as given in the table. Options \(f\) and \(f{^{\prime}}\) are to be compared, and \(g\) and \(g{^{\prime}}\) are to be compared.

Ellsberg argued that decision-makers whose degree of ambiguity aversion is constant across all their decisions must obey the choice relation: \(f\) is chosen over \(f{^{\prime}}\) if and only if \(g\) is chosen over \(g{^{\prime}}\). This is so since the degree of ambiguity aversion that makes \(f\) more attractive than \(f{^{\prime}}\) is the same degree of ambiguity aversion that makes \(g\) more attractive than \(g{^{\prime}}\). He also suggested that, in practice, decision-makers might prefer \(f\) over \(f{^{\prime}}\), because they are ambiguity averse in that instance, and yet prefer \(g{^{\prime}}\) over \(g\), because they are ambiguity avid at the low levels of expected utility that characterize this choice. Thus, he argued that decision-makers’ attitude to ambiguity changes as the value of the lottery changes and, specifically, that decision-makers become relatively more ambiguity averse as value increases. This hypothesis has received some support in the subsequent empirical literature (see Machina & Siniscalchi, 2014, pp. 747–48, for a thorough review).

We may, without prejudice, identify utilities with dollar payoffs, and we have two different values for the gradients of the linear indifference curves, \({\alpha }_{90}\) and \({\alpha }_{10}\), as per the fanning out hypothesis; consequently, we have the following expected utilities for each act:

Thus, if \(f\) is chosen over \(f{^{\prime}}\), we have \({\alpha }_{90}>1\), and the decision-maker is ambiguity averse; and, if \(g{^{\prime}}\) is chosen over \(g\), we have \({\alpha }_{10}<1\), in which case the decision-maker is ambiguity avid. This is the variation in \(\alpha\) values that our fanning out hypothesis supposes. Hence, our account is consistent with the behaviour predicted by Ellsberg.

Our account is also consonant with the recent analysis of Baillon and Placido (2019), whose empirical investigations lead them to remark: “Our findings seem to encourage the use of ambiguity models that are flexible enough to accommodate changes in ambiguity attitudes at increased utility levels” (Baillon & Placido, 2019, p. 325). The model of fanning out preferences is an instance of just the flexibility that their empirical results warrant.

8.2 The Allais paradox

Interestingly, this case brings us to the Allais paradox (Allais, 1953). We do not wish to undertake a full treatment of that paradox here, but we do want to show how the model proposed in this paper might account for it. The argument here is necessarily more provisional than that made above, for reasons that will become clear as the argument progresses. Nevertheless, the proposed account suggests fruitful ways of thinking about the Allais paradox. We restrict ourselves to the common consequence version; the reader will see that the suggested method also applies to the common ratio version.

Here is the Allais paradox in tabular form. Canonical decision theory requires that, if \(f\) is chosen in preference to \(g\), then \(f{^{\prime}}\) must be chosen over \(g{^{\prime}}\), and vice versa. It is well known that this is often honoured in the breach (Table 8).

The crucial issue is how decision-makers construct beliefs. The supposition is that individuals treat higher order magnitudes of fractions qualitatively differently from lower order ones. The reason for this is that higher order magnitudes are felt to be more ‘robust’ or ‘reliable’, whereas lower order magnitudes are felt to be less definite. For instance, the probability 0.89 of event \(B\) is split into a ‘reliable’ part, 0.8, and an ‘unreliable’ part, 0.09. Supposing that decision-makers treat the given probabilities in this way in the Allais case gives us the following set of beliefs: \(\mu \left({\varnothing }\right)=(0, 0)\), \(\mu \left(A\right)=(0, 0.01)\), \(\mu \left(B\right)=(0.8, 0.09)\), \(\mu \left(C\right)=(0.1, 0)\), \(\mu \left(A\cup B\right)=(0.9, 0)\), \(\mu \left(A\cup C\right)=(0.1, 0.01)\), \(\mu \left(B\cup C\right)=(0.9, 0.09)\), and \(\mu \left(A\cup B\cup C\right)=(1, 0)\).
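One way to make the reliable/unreliable split concrete is to treat the tenths digit of each probability as the ‘reliable’ part and the hundredths digit as the ‘unreliable’ part. This rule is a hypothetical reading, not one stated in the text. Applied to the Allais probabilities (0.01 for A, 0.89 for B, 0.10 for C), it reproduces every belief listed above, with the certain event mapped to the pair (1, 0). The following is a minimal Python sketch, in which `split_belief` is an illustrative name:

```python
from fractions import Fraction

# Probabilities of the Allais events, in hundredths (A: 0.01, B: 0.89, C: 0.10).
PROBS = {"A": 1, "B": 89, "C": 10}


def split_belief(hundredths):
    """Split a probability (in hundredths) into (reliable, unreliable) parts.

    Hypothetical rule: the tenths digit is treated as 'reliable' and the
    hundredths digit as 'unreliable'.
    """
    tenths, rest = divmod(hundredths, 10)
    return Fraction(tenths, 10), Fraction(rest, 100)


for event in ["A", "B", "C", "AB", "AC", "BC", "ABC"]:
    # Each character of `event` names one of the disjoint events A, B, C.
    reliable, unreliable = split_belief(sum(PROBS[s] for s in event))
    print(event, (float(reliable), float(unreliable)))
```

Working in integer hundredths with `divmod` avoids the floating-point error that a split such as `0.89 - 0.8` would introduce.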

In what follows, utilities of dollar amounts are identified by their subscript, and we suppose that the rate at which ‘unreliable’ stochastic values are discounted relative to ‘reliable’ stochastic values is given by the parameter, \(\alpha\). Consequently, we have the following (rank-dependent) expected utilities for each act:

First, note that if \(\alpha =1\), so that there is no discounting of ‘unreliable’ stochastic information, then if \(f\) is chosen over \(g\), \(f{^{\prime}}\) must be chosen over \(g{^{\prime}}\) (i.e., we obtain the choice pairs predicted by expected utility theory). However, we obtain the pairing in which \(f\) is chosen over \(g\) and yet \(g{^{\prime}}\) is chosen over \(f{^{\prime}}\) under the following configuration of parameters: \(\alpha \to \infty\) and \(0.1{u}_{1}+0.1{u}_{5}\ge 0.2{u}_{1}\ge 0.1{u}_{0}+0.1{u}_{5}\) (where we note that \(\alpha \to \infty\) implies that ‘unreliability aversion’ is very high).
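The limiting claim can be illustrated with a rank-dependent (Choquet) computation. This is a minimal sketch under several assumptions. Table 8 is not reproduced here, so the acts use the standard common-consequence payoffs: \(f\) pays \(u_1\) on every event; \(g\) pays \(u_0\) on A, \(u_1\) on B and \(u_5\) on C; \(f{^{\prime}}\) pays \(u_1\) on A and C and \(u_0\) on B; \(g{^{\prime}}\) pays \(u_5\) on C and \(u_0\) elsewhere. The limit \(\alpha\to\infty\) is modelled, by assumption, as a Choquet expectation over the first (‘reliable’) components alone. The case \(\alpha=1\) is modelled as adding the two components, which recovers ordinary probabilities. The utilities \(u_0=0\), \(u_1=1\), \(u_5=3/2\) and all function names are hypothetical.

```python
from fractions import Fraction as F

# Pair-valued beliefs (reliable, unreliable) from the text, keyed by event.
BELIEFS = {
    frozenset(): (F(0), F(0)),
    frozenset("A"): (F(0), F(1, 100)),
    frozenset("B"): (F(8, 10), F(9, 100)),
    frozenset("C"): (F(1, 10), F(0)),
    frozenset("AB"): (F(9, 10), F(0)),
    frozenset("AC"): (F(1, 10), F(1, 100)),
    frozenset("BC"): (F(9, 10), F(9, 100)),
    frozenset("ABC"): (F(1), F(0)),  # the whole state space
}

# Assumption: alpha -> infinity keeps only the reliable component;
# alpha = 1 simply adds the two components.
RELIABLE_ONLY = {e: r for e, (r, _) in BELIEFS.items()}
ADDED = {e: r + u for e, (r, u) in BELIEFS.items()}


def choquet(act, capacity):
    """Rank-dependent (Choquet) expected utility; act maps state -> utility."""
    ranked = sorted(act, key=act.get, reverse=True)  # best state first
    total = F(0)
    for i, state in enumerate(ranked):
        upper = capacity[frozenset(ranked[: i + 1])]
        lower = capacity[frozenset(ranked[:i])]
        total += act[state] * (upper - lower)  # decision weight of this rank
    return total


def allais_acts(u0, u1, u5):
    """Hypothetical payoffs of the standard common-consequence Allais acts."""
    return {
        "f": {"A": u1, "B": u1, "C": u1},
        "g": {"A": u0, "B": u1, "C": u5},
        "f'": {"A": u1, "B": u0, "C": u1},
        "g'": {"A": u0, "B": u0, "C": u5},
    }


acts = allais_acts(F(0), F(1), F(3, 2))  # hypothetical utilities u0, u1, u5
for label, cap in [("alpha -> infinity", RELIABLE_ONLY), ("alpha = 1", ADDED)]:
    values = {name: choquet(act, cap) for name, act in acts.items()}
    print(label, {name: float(v) for name, v in values.items()})
```

With these utilities, the reliable-only values rank \(f\) above \(g\) (1 against 19/20) and \(g{^{\prime}}\) above \(f{^{\prime}}\) (3/20 against 1/10). In general, the reliable-only computation gives \(f\) over \(g\) exactly when \(0.2u_1>0.1u_0+0.1u_5\), and \(g{^{\prime}}\) over \(f{^{\prime}}\) exactly when \(u_5>u_1\), which matches the inequalities above. The additive case instead yields the consistent expected-utility pair of \(g\) over \(f\) and \(g{^{\prime}}\) over \(f{^{\prime}}\).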

It should be emphasized that this way of explaining the Allais paradox is consistent with the broadly non-judgmental, ‘grammatical’ approach that we have applied hitherto. In the Allais case, we have supposed that individuals have a propensity to treat different orders of magnitude of objective probabilities in different ways, which accords with a widespread intuition about what motivates the paradox. It may or may not be rational to behave in this way; it is an open question whether the appropriate algebra for epistemic calculations in this kind of case should be \({\mathbb{R}}\) or \({\mathbb{R}}\times {\mathbb{R}}\) (or perhaps even higher orders of direct products of \({\mathbb{R}}\)) (Footnote 15). For our earlier paradoxes, however, those of Ellsberg and Machina, it seems that the algebra \({\mathbb{R}}\times {\mathbb{R}}\) has found its métier.

Data availability

No new datasets or experimental results were generated during the current study.

Notes

The normative approach is not universally popular—Al-Najjar and Weinstein (2009) forcefully argue that while the Bayesian approach to beliefs is normative, the Ellsberg paradox identifies computational errors only, and the subsequent theoretical literature does not model rational beliefs.

The nature of the tail separability issue is, perhaps, best stated by Dillenberger and Segal (2014, pp. 4–5): “if two acts pay the same on some event E and if the payoff on E affects the value of each act independently of its payoffs on other events, then the comparison of these two acts should not depend on the exact magnitude of the payoff on E, as long as it is the same in both. But changes of the payoffs on E may change the ambiguity properties of the two acts (e.g., transform any of the acts from being fully objective to subjective, or vice versa), causing an ambiguity averse decision maker to alter their ranking.” For a full discussion of this issue, see Machina (2009, 2014), L’Haridon and Placido (2010), and Baillon, L’Haridon and Placido (2011).

Interestingly, Dillenberger and Segal make use of an earlier paper by Gul (1991) to provide a particular version of their model that survives the Machina filter.

In this paper, we shall generally assume that the set of states is finite in number.

A capacity, \(\mathfrak{B}\), is a real-valued function on the event space that satisfies \(\mathfrak{B}\left({\varnothing }\right)=0\), \(\mathfrak{B}\left(\Omega \right)=1\), and \(\mathfrak{B}\left(B\right)\ge \mathfrak{B}\left(A\right)\) whenever \(B\supseteq A\), where \({\varnothing },A,B,\Omega \in \mathcal{F}\). In our analysis below, we use a particular kind of capacity to represent objective stochastic information, namely a convex capacity. This is a capacity that, in addition to satisfying the above criteria, satisfies the weak inequality \(\mathfrak{B}\left(A\cup B\right)+\mathfrak{B}\left(A\cap B\right)\ge \mathfrak{B}\left(B\right)+\mathfrak{B}\left(A\right)\) for all \(A,B\in \mathcal{F}\).
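On a finite state space, which is the case assumed in this paper, the capacity axioms and the convexity inequality can be checked mechanically by enumerating all events. The following is a minimal Python sketch; the function names are illustrative. The first example reuses the ‘reliable’ components of the Allais beliefs from Sect. 8.2, which this check finds to be convex. The second is a hypothetical two-state capacity that is monotone but not convex.

```python
from fractions import Fraction as F
from itertools import combinations


def subsets(states):
    """All subsets of a finite state space, as frozensets."""
    s = sorted(states)
    return [frozenset(c) for r in range(len(s) + 1) for c in combinations(s, r)]


def is_capacity(nu, states):
    """nu(empty) = 0, nu(Omega) = 1, and nu is monotone under set inclusion."""
    events = subsets(states)
    if nu[frozenset()] != 0 or nu[frozenset(states)] != 1:
        return False
    return all(nu[b] >= nu[a] for a in events for b in events if a <= b)


def is_convex_capacity(nu, states):
    """A capacity with nu(A | B) + nu(A & B) >= nu(A) + nu(B) for all events A, B."""
    events = subsets(states)
    return is_capacity(nu, states) and all(
        nu[a | b] + nu[a & b] >= nu[a] + nu[b] for a in events for b in events
    )


# First ('reliable') components of the Allais beliefs in Sect. 8.2.
allais_reliable = {
    frozenset(): F(0), frozenset("A"): F(0), frozenset("B"): F(8, 10),
    frozenset("C"): F(1, 10), frozenset("AB"): F(9, 10),
    frozenset("AC"): F(1, 10), frozenset("BC"): F(9, 10), frozenset("ABC"): F(1),
}
# Hypothetical two-state capacity: monotone and normalized, but not convex.
not_convex = {
    frozenset(): F(0), frozenset("H"): F(3, 5),
    frozenset("T"): F(3, 5), frozenset("HT"): F(1),
}

print(is_convex_capacity(allais_reliable, "ABC"))
print(is_capacity(not_convex, "HT"), is_convex_capacity(not_convex, "HT"))
```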

The algebraically minded reader may prefer to refer to such beliefs as basic kinds of tensor probabilities for reasons that will become obvious in Sect. 5.