Abstract

Online surveys are a popular tool in sex research, and it is vital to understand participation bias in these surveys to improve inferences. However, research on this topic is limited and out of date given the increase in online survey methodology and changes in sexual attitudes. This study examined whether demographics and sexual abuse and assault history predict opting into online survey questions about sex. The sample was recruited for a longitudinal mental health study using a probability-based sampling panel developed to represent the US household population. Participants were masked to the inclusion of sexual content and given a choice to opt into sex questions. Analyses were run on both raw and weighted responses, with weights used to adjust for sampling bias. Of the total sample (n = 476, 62.6% female), 69% opted into sex questions. Raw analysis showed that participants who opted in were more likely to be younger, have higher education and income, and have a history of sexual abuse or assault. No racial, gender, relationship status, or regional differences were found. After weighting, effect sizes were reduced for most predictor variables, and only a history of sexual abuse or assault still significantly predicted participation. Results suggest that key demographic features do not have a strong association with participation in sex survey questions. Reasons for participation bias stemming from sexual abuse or assault history should be examined further. This study demonstrates the need for researchers to continue monitoring participation bias in sex survey research as online methodologies and sexual attitudes evolve over time.


Introduction

Sex research benefits significantly from online surveys, which have become a popular tool for gathering self-reported sexuality data. With the advent of online survey-building tools, online survey development has become more accessible for researchers (Evans & Mathur, 2018; Nayak & Narayan, 2019). Online survey research can be cost-effective, efficient, and convenient, because participants can take part at a time and place of their choosing and researchers do not have to wait for participants to receive and return postal surveys (Mustanski, 2001; Wright, 2005). It makes research more accessible and appealing to marginalized groups (McInroy, 2016; Riggle et al., 2005) and creates an opportunity for researchers to connect with virtual communities of underrepresented or stigmatized individuals (e.g., kink communities or individuals with paraphilias; Mustanski, 2001). Online research also gives researchers quick access to large samples of participants, making it suitable for studies of population-level attitudes and behaviors (Evans & Mathur, 2018; Nayak & Narayan, 2019). Finally, online research provides a private, anonymous space for subjects to participate in survey research, which makes this method desirable for studies that involve reporting private and sensitive sexual information.

However, online research remains susceptible to sampling problems and participation bias, affecting research outcomes (Evans & Mathur, 2018; Nayak & Narayan, 2019). Participation bias occurs when the sample of participants that elect to take part in a study differs systematically from the researcher-defined population. This bias limits the generalizability of survey results to the target population and can skew inferences that are made from data. Participation bias is assumed to be especially relevant in human sex research due to the nature of disclosing information about one’s sexuality, a topic that is considered by many to be private (Morokoff, 1986; Rosenthal & Rosnow, 1975; Strassberg & Lowe, 1995). The results of studies on sexuality and sexual behavior have important implications for research in this field, the provision of mental and physical healthcare services, and policy development. Survey results can be especially significant in sexual health research because they can imply specific behavioral risk outcomes that can provide the basis for policies and healthcare services. It is thus vital that the participants of a survey accurately represent the population being researched.

Along with the shift toward online methodologies, sexual attitudes that may influence decisions to participate in sex research are continuously evolving. For example, research has shown that from the early 1970s to the mid-2010s, American adults became more accepting of same-sex and most non-marital sexual activity (Twenge et al., 2015, 2016). Because sex research methods and the factors that may influence participation continue to change over time, it is important to keep monitoring participation bias in sex research samples.

Findings on rates of participation in sexuality surveys have varied. Dawson et al. (2019) demonstrated a general increase in the proportion of individuals willing to participate in sexuality studies compared to findings from past research and reported that almost all participants (97.75% of men and 98.61% of women) in their Canadian study were hypothetically willing to participate in an online survey about sex. Older research has demonstrated similarly high rates of participation. A study by Wiederman (1999) found that nearly all participants in an anonymous sexuality questionnaire were willing to participate in a similar follow-up sexuality survey. A national US study involving face-to-face interviews saw only 8.3 percent of participants declining to answer or complete an additional self-administered questionnaire (Johnson & DeLamater, 1976). In contrast, some studies have shown lower rates of participation. Strassberg and Lowe (1995) found that only a third of American undergraduates participating in a sexual attitude survey were willing to participate in an additional questionnaire study. An Australian study conducted by Dunne et al. (1997) found that 27% of individuals contacted to participate in a postal survey study of sexual behaviors and attitudes explicitly refused to consent.

Prior research has shown that participants in sex research surveys systematically differ in their demographic characteristics from non-participants. Survey participation and response to questions about sex have been found to be associated with younger age (Bouchard et al., 2019; Copas et al., 1997; Johnson & DeLamater, 1976; Wiederman, 1993), higher income or occupational status (Copas et al., 1997; Wiederman, 1993), and higher education (Dunne et al., 1997; Johnson & DeLamater, 1976; Wiederman, 1993). Some research has indicated that participation may be influenced by participants’ reading comprehension (Copas et al., 1997; Johnson & DeLamater, 1976), which may explain the relationship of age and education to participation. While Catania et al. (1986) found no relationship between education level and response to survey questions about sex, this research sample consisted of undergraduate students, limiting the generalizability of these results to a broader population.

Research has shown that gender differences in participation willingness may occur with changes to study methodology (Strassberg & Lowe, 1995; Wolchik et al., 1985) and that gender may influence response to specific questions (Wiederman, 1993). However, no significant gender differences have been found regarding participating in sex surveys specifically (Catania et al., 1986; Dawson et al., 2019; Strassberg & Lowe, 1995; Wiederman, 1999).

Mixed results have been found with regard to race and ethnicity in relationship to participation in sex research. In a study on characteristics of responders to sexual experience items in a national US survey, no effects of ethnicity on response were found in the male sample, but white women were more likely to respond to certain questions about their sexual experiences than non-white women (Wiederman, 1993). Additionally, a British interview study demonstrated that non-white participants were more likely to refuse a self-report questionnaire booklet containing more invasive questions about sex (Copas et al., 1997).

The effects of relationship status on participation in sex research surveys have also been found to be inconsistent. The same interview study by Copas et al. (1997) described above found that refusal of the questionnaire booklet was similar among single and married individuals, but widowed, divorced, or separated participants were less likely to refuse. However, Wiederman (1993) found that married and widowed women were less likely to respond to certain questions about their sexual experiences than separated, divorced, or never-married women.

Finally, an Australian study found that participation in a postal sexuality survey was associated with higher likelihood of having a history of sexual abuse or assault (Dunne et al., 1997). Some studies have shown that undergraduate female volunteers for laboratory-based physiological sex research are also more likely to have a history of sexual assault or abuse (Wolchik et al., 1983, 1985). Given the limited research in this area, it is important to examine whether these findings extend to current online survey research as well.

While participation bias in sex research has been extensively studied across multiple methodologies (Barker & Perlman, 1975; Bogaert, 1996; Farkas et al., 1978; Gaither et al., 2003; Griffith & Walker, 1976; Morokoff, 1986; Plaud et al., 1999; Saunders et al., 1985; Wiederman, 1999; Wolchik et al., 1983, 1985), there is a large gap in research on participation bias in sex research surveys, with only a few studies published since 2000 (Bouchard et al., 2019; Dawson et al., 2019). Furthermore, few studies (Johnson & DeLamater, 1976; Wiederman, 1993) have examined sex survey bias in the national US population. Older research may not generalize to current online survey methodology, and past findings require replication with more representative and contemporary samples that reflect population changes in sexual attitudes. Available research is further limited by reliance on undergraduate populations that do not accurately reflect the national population and by the use of hypothetical scenarios to measure participation. These limitations illustrate the need for studies that use realistic, rather than hypothetical, methods to examine how individual characteristics contribute to participation bias in online sex survey research conducted on the US population.

Current Study

The current study examines differences in demographic characteristics and sexual abuse and assault history between participants in a national US sample who did and did not opt into online survey questions about sex. To gauge participation in sex survey questions, participants were asked to opt in or out of the questions rather than being presented with a hypothetical scenario. This study additionally demonstrates survey weighting, a method for reducing bias in online samples, as a tool for addressing participation bias in research samples. Using weighting to adjust for nonresponse bias aligns the sample with nationally representative population parameters to better estimate survey responses of the general US population.

Based on prior research, it was predicted that opting into sex questions would be associated with younger age, greater income, and higher education and not with gender and relationship status. Due to the mixed findings regarding race, two competing hypotheses were formed: white participants would be more likely to participate in sex questions or there would be no effect of racial identity on participation. Finally, it was predicted that participants reporting a history of sexual abuse or assault would be more likely to participate.

Method

Participants

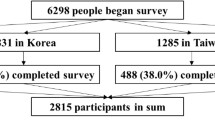

Data were collected as part of a longitudinal study of mental health and adversity history during the COVID-19 pandemic from July 2020 to January 2021. Recruitment for this study was conducted through the AmeriSpeak panel managed by NORC (formerly the National Opinion Research Center) at the University of Chicago (https://amerispeak.norc.org/). This probability-based panel was developed to produce samples representative of approximately 97% of the US household population. For more information about the use of NORC’s AmeriSpeak panel in this study, see the Supplemental Materials. All participants provided informed consent to participate in the study, and the protocol was approved by the Indiana University Institutional Review Board (#2005785481).

Measures

Demographics including age, gender, race, residential region, relationship status, education, and income level were self-reported as part of NORC’s panel in July 2020, prior to study participation. Participants had the option to update demographics that may have changed (e.g., income) at each time point in the study.

Willingness to participate in sex survey research was operationalized using an “opt-in” question that allowed participants to opt in or out of a section of survey questions about sexual experience and function at the fourth time point of the study (December 2020–January 2021). The opt-in language did not indicate the approximate length of the opt-in section. For the full “opt-in” text, see Supplemental Materials. Participants were masked to the inclusion of sex questions at the beginning of the study, although the study introduction informed them that the study would include sensitive questions.

Adversity experiences were surveyed at the first time point of the study (July 2020 to September 2020) and at the fourth time point. Sexual abuse and assault history was assessed using the Childhood Trauma Questionnaire (CTQ; Bernstein & Fink, 1998) and the Adverse and Traumatic Experiences Scale (Dale et al., 2020; Kolacz et al., 2020a). The CTQ is a retrospective survey tool developed to measure childhood sexual abuse, physical abuse and neglect, and emotional abuse and neglect, and has demonstrated strong reliability, validity, and convergence with therapist reports (Bernstein et al., 1997, 2003). The Adverse and Traumatic Experiences Scale was created to measure a variety of adverse and traumatic experiences described by other trauma history questionnaires. This scale investigates a range of experiences throughout the lifespan, including childhood adversity and maltreatment, other forms of maltreatment, personal health difficulties, traumatic loss, and life-threatening experiences. For example items from these questionnaires, see Supplemental Materials.

Data Analysis

Prior to analysis, data were checked for quality and responses were dropped from the sample if they were missing more than 50% of question data or if the response completion time fell under 1/3 of the median completion time for the survey. Responses were also checked for straightlining (i.e., the same response was given for an entire question block). Responses with excessive straightlining were flagged for manual inspection to determine if they were plausible based on internal consistency.
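A minimal sketch of the screening rules described above, assuming a hypothetical data layout in which each response is a dictionary holding its item answers (None when skipped), its completion time in seconds, and its answers grouped by question block. The function name `screen_responses` and the rule that one fully uniform block triggers a flag are illustrative assumptions; the text does not define what counted as "excessive" straightlining. As in the text, flagged responses are only queued for manual review, not removed.

```python
from statistics import median


def screen_responses(responses, max_missing=0.5, min_time_fraction=1 / 3):
    """Drop low-quality responses and flag straightlining for manual review.

    Hypothetical layout: each response is a dict with keys
      "id"      -> respondent identifier
      "answers" -> list of item answers, None where the item was skipped
      "seconds" -> survey completion time in seconds
      "blocks"  -> list of question blocks, each a list of answers
    """
    # Speed cutoff: one third of the median completion time across all responses.
    cutoff = min_time_fraction * median(r["seconds"] for r in responses)
    kept, flagged = [], []
    for r in responses:
        missing_share = sum(a is None for a in r["answers"]) / len(r["answers"])
        if missing_share > max_missing or r["seconds"] < cutoff:
            continue  # excluded: too much missing data or implausibly fast
        kept.append(r)
        # Assumed straightlining rule: a fully answered block with one repeated value.
        if any(len(b) > 1 and None not in b and len(set(b)) == 1 for b in r["blocks"]):
            flagged.append(r["id"])  # inspected by hand, not removed automatically
    return kept, flagged


# Toy data, invented for illustration only.
responses = [
    {"id": "a", "answers": [1, 2, 3, 4], "seconds": 600, "blocks": [[1, 2], [3, 4]]},
    {"id": "b", "answers": [1, None, None, None], "seconds": 600, "blocks": [[1, None], [None, None]]},
    {"id": "c", "answers": [3, 3, 3, 3], "seconds": 500, "blocks": [[3, 3], [3, 3]]},
    {"id": "d", "answers": [1, 2, 3, 4], "seconds": 100, "blocks": [[1, 2], [3, 4]]},
]
kept, flagged = screen_responses(responses)
print([r["id"] for r in kept], flagged)  # ['a', 'c'] ['c']
```

Here "b" is dropped for missing 75% of items, "d" for finishing in under a third of the 550-second median, and "c" is kept but flagged for inspection.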

Data analysis was conducted in R 4.1.2 and RStudio (R Core Team, 2021; RStudio Team, 2021). Group differences in continuous variables were examined using Welch’s unequal variances t-tests, categorical variables were examined using chi-squared tests, and ranked categorical variables (i.e., education and income level) were tested using the Wilcoxon rank-sum test. A p-value less than 0.05 was used to determine statistical significance. Cohen’s d was used to quantify standardized mean differences. Effect sizes of d = 0.2 were considered small, d = 0.5 medium, and d = 0.8 large (Cohen, 1988), although these benchmarks should be interpreted cautiously and in reference to other studies. The effects of ordinal variables (i.e., education and income level) and of sexual abuse and assault history were quantified with the relative opt-in ratio (RR, i.e., relative risk or risk ratio). The relative opt-in ratio indicates the strength of association between a binary condition and a binary outcome by comparing the proportion of each group that opted in. Some variables (income, education level, race, marital status, and sexual abuse and assault history) were collapsed into binary or ternary categories to provide adequate cell sizes at each variable level. For original categories and examples of categorical groupings, see Supplemental Materials.
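The two effect-size measures named above can be sketched in a few lines of Python (the study itself used R). This sketch assumes the common pooled-standard-deviation form of Cohen’s d, which the text does not specify, and computes the relative opt-in ratio as one group’s opt-in proportion divided by a comparison group’s. The function names and toy numbers are hypothetical, and the significance tests (Welch’s t-test, chi-squared, Wilcoxon) are omitted.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(x, y):
    """Standardized mean difference, mean(x) - mean(y), over the pooled SD (assumed form)."""
    nx, ny = len(x), len(y)
    pooled_var = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / (nx + ny - 2)
    return (mean(x) - mean(y)) / sqrt(pooled_var)


def relative_opt_in_ratio(opted_a, n_a, opted_b, n_b):
    """Opt-in proportion in group A divided by the opt-in proportion in group B."""
    return (opted_a / n_a) / (opted_b / n_b)


# Toy values, invented: ages of those who opted out vs. opted in,
# so a positive d means opt-outs were older on average.
opted_out_ages = [52, 61, 47, 58, 66]
opted_in_ages = [44, 39, 55, 50, 41]
print(round(cohens_d(opted_out_ages, opted_in_ages), 2))

# 60 of 80 in group A opted in vs. 90 of 150 in group B: 0.75 / 0.60
print(round(relative_opt_in_ratio(60, 80, 90, 150), 2))  # 1.25
```

An RR above 1 means group A opted in at a higher rate than group B; an RR of 1 means equal rates.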

To adjust for bias within the sampling frame, analyses were run on both the original unweighted sample and the weight-adjusted sample for comparison. Weighting assigns each unit in the research sample a weight that is used to adjust the sample to reflect national population parameters of interest (for example, the average age or income of a population). These weights are then incorporated into statistical analysis. This technique is used to adjust for nonresponse and to reduce bias when estimating parameters of the target population (Brick & Kalton, 1996). By aligning core demographics of survey participants with those of the target population, responses to survey items should also better represent those of the target population. For more information on how the statistical weights for this study were obtained from the AmeriSpeak panel, see Supplemental Materials. Weighted analysis was conducted using the “survey” R package (Version 4.1-1; Lumley, 2004).
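To make the weighting step concrete, here is a deliberately simplified Python sketch. It assumes simple post-stratification on a single hypothetical grouping variable (the weights used in this study were supplied through the AmeriSpeak panel and are not reproduced here), and it only shows how weights change a point estimate such as the share of participants who opted in. It does not perform the design-based variance estimation that the R survey package provides.

```python
from collections import Counter


def poststratification_weights(cells, population_shares):
    """Weight = population share of a respondent's cell / sample share of that cell.

    cells: one cell label per respondent (e.g., an age group)
    population_shares: dict mapping each cell label to its share of the target population
    """
    n = len(cells)
    sample_shares = {cell: count / n for cell, count in Counter(cells).items()}
    return [population_shares[cell] / sample_shares[cell] for cell in cells]


def weighted_proportion(outcomes, weights):
    """Weighted share of respondents with outcome 1 (e.g., opted in = 1, opted out = 0)."""
    return sum(w * y for w, y in zip(weights, outcomes)) / sum(weights)


# Toy sample, invented: younger people are overrepresented relative to a 50/50 population.
cells = ["younger", "younger", "younger", "older"]
opted_in = [1, 1, 1, 0]
weights = poststratification_weights(cells, {"younger": 0.5, "older": 0.5})

print(sum(opted_in) / len(opted_in))                     # unweighted: 0.75
print(round(weighted_proportion(opted_in, weights), 2))  # weighted: 0.5
```

Down-weighting the overrepresented group pulls the estimate toward the rate in the underrepresented group, which illustrates in miniature how weighting can shift or shrink an association observed in the raw sample.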

Results

The final sample consisted of 476 participants. Two participants were removed from the original sample because they did not respond to the opt-in question. Participant retention between time points was high, with 80.1% of participants from T1 responding to the T4 survey. There were no significant demographic differences between the starting sample at T1 and the final sample at T4; the samples were comparable in age, education, marital status, region, race, income, and gender. Unweighted and weighted descriptive sample statistics are presented in Table 1.

Unweighted Analysis

Sixty-nine percent of the sample opted into the sex questions. Two participants who opted into the sex section did not respond to any of its questions. Analyses predicting opting in and analyses predicting answering the sex questions produced comparable results.

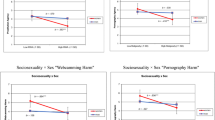

Analysis of the original, unweighted sample showed that opting into the questions was predicted by younger age (p = .01, d = 0.25, Fig. 1), higher education (Fig. 2A), and higher income (Fig. 2B).

Fig. 2. (A) Weighted and unweighted proportions of participants who opted into sex questions across education levels. (B) Weighted and unweighted proportions across income levels. The effects of the ordinal variables education and income level on participation were determined by calculating the relative opt-in ratio (RR).

Education level had a small effect on participation: participants with a bachelor’s degree or higher were 1.22 times as likely as participants with no college education (p = .02) and 1.16 times as likely as those with some college education but no degree (p = .03) to opt into the sex questions. Participants with some college education but no degree were not significantly more likely than those with no college education to opt into the sex questions (RR = 1.05, p = .7). Income level also had a small effect on participation. Participants with an income greater than $100k were 1.18 times as likely to opt into the sex questions as participants with an income of $50k–$100k (p = .05) and participants with an income less than $50k (p = .03). Participants with an income of $50k–$100k were not significantly more likely than those with an income of less than $50k to opt into the questions (RR = 1.01, p > .99).

No differences in gender, relationship or marital status, or race were found between those who did and did not opt into the sex questions. Of the original sample, 28.6% reported a history of sexual abuse or assault. Participants who endorsed a history of sexual abuse or assault were 1.18 times as likely to opt into the sex questions (p = .02, 77.2% vs. 65.6%, Fig. 3).
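As an arithmetic check, the relative opt-in ratio for sexual abuse or assault history follows directly from the two opt-in proportions reported for participants with and without that history:

$$
\mathrm{RR} = \frac{\hat{p}_{\text{history}}}{\hat{p}_{\text{no history}}} = \frac{0.772}{0.656} \approx 1.18
$$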

Weighted Analysis

The addition of survey weights changed the distribution of demographic variables to better align the sample with the US population (see Table 1; U.S. Census Bureau, 2020). Of the weighted sample, 69.5% opted into the sex questions. Weighting reduced the effects of most predictor variables, and few comparisons remained significant. Participation was no longer significantly predicted by age (p = .10, d = 0.21, Fig. 1), education (Fig. 2A), or income (Fig. 2B). After weighting, the effect of education level on participation remained small and was non-significant. Participants with a bachelor’s degree or higher were not significantly more likely than participants with no college education (RR = 1.18, p = .13) or some college education but no degree (RR = 1.18, p = .11) to opt into the sex questions. Participants with some college education but no degree were not significantly more likely than those with no college education to opt into the sex questions (RR = 1.01, p = .96). Income level likewise continued to have a small, non-significant effect on participation. Participants with an income greater than $100k were not significantly more likely than participants with an income of $50k–$100k (RR = 1.14, p = .19) or an income less than $50k (RR = 1.14, p = .06) to opt into the sex questions. Participants with an income of $50k–$100k were not more likely than those with an income of less than $50k to opt into the questions (RR = 1, p = .54).

Having a history of sexual abuse or assault remained a significant predictor of participation in sex questions (p = .04, sexual abuse or assault history = 78.1%, no sexual abuse or assault history = 66.3%, RR = 1.18, Fig. 3).

Discussion

To our knowledge, this is the first study to examine participation bias in online sex surveys using a nationally representative US sample that incorporates survey weighting to adjust for sampling bias. Additionally, this study uses realistic, rather than hypothetical, methods to predict participation in sex surveys. About two-thirds of the sample elected to opt into the sex portion of the survey, and in the unweighted sample, opting in was predicted by younger age and higher education and income level. As predicted, gender, race, and relationship status were not associated with participation in the sex questions. However, using survey weights to better align the sample with the national US population reduced the effects of age, education, and income on participation in sex survey questions, and participation was no longer significantly predicted by any of these characteristics.

Both weighted and unweighted analyses revealed that participants who opted into the sex questions were more likely to have a history of sexual assault or abuse, indicating that people with these experiences are a potential source of bias in sex research. These results align with past research showing that participation in a postal sex survey was associated with a history of adverse sexual experiences (Dunne et al., 1997). Sexual abuse and assault experiences are known to affect many commonly studied topics in sex research, including sexual function, health, behaviors, and attitudes (Kolacz et al., 2020b; Loeb et al., 2002; Maniglio, 2009; Redfearn & Laner, 2000; Van Berlo & Ensink, 2000). Given the psychological and physiological effects of sexual assault and abuse, overrepresentation of survivors in survey research samples may influence study results. Past research suggests this bias may be due to an increased desire to monitor responses or report experiences following abuse or assault (Wolchik et al., 1983, 1985), but other reasons for this bias and its potential impact on research results should be examined further. Surveys may benefit victims of sexual abuse and assault by providing a safe, anonymous space to share their story without fear of repercussion, so it is vital to understand how these experiences bias outcomes from survey research.

Before weighting, the current study replicated past findings relating participation to younger age and higher education and income level (Bouchard et al., 2019; Copas et al., 1997; Johnson & DeLamater, 1976; Wiederman, 1993). However, once weights were added to the analysis, these results no longer aligned with past findings. The proportion of participants who opted into the sex survey questions also differed from a recent Canadian study by Dawson et al. (2019), in which almost all research subjects (97.75% of men and 98.61% of women) indicated that they would be hypothetically willing to participate in a sexuality questionnaire. The current findings better align with results from an Australian postal survey study by Dunne et al. (1997), in which 27% of individuals contacted for the study explicitly refused to consent to participate. The small effects in this study mirror previous research showing small associations between some demographic characteristics and willingness to participate in sex research (Dunne et al., 1997; Wiederman, 1993), and, as in some past findings, the relationship between individual demographic characteristics and participation appears inconsistent. Results from the weighted analysis in this sample demonstrate how changes in statistical methodology that produce even minor shifts in the distribution of a sample can alter the way findings are interpreted.

Individual demographic characteristics are not thought to cause participation bias, but rather may predispose subjects to other characteristics that could influence decisions to participate in a study (Groves et al., 1992). Over- or under-representation of demographic parameters in sex research samples due to participation bias may therefore lead to skewed sampling of commonly studied sexual characteristics. For example, research has shown that factors related to age such as birth cohort and physical health may influence sexual attitudes and behaviors (Gewirtz-Meydan et al., 2019; Twenge et al., 2017). Demographics additionally offer a targetable parameter for adjusting samples to reflect research populations and reducing bias. Given inconsistent findings on the effects of individual demographic characteristics on participation in sex research, it is important to continue to examine whether demographic characteristics may relate to participation in sex research.

This study benefited from its reliance on actual survey participation to gauge bias. Rather than being asked whether they would hypothetically participate in a survey, subjects were asked to opt in or out of a survey section in a study they were already participating in. While this study did not directly measure willingness to participate in an entire study on sex, it did measure willingness to answer survey questions about sex, providing a proxy for sex survey participation. All but two of the participants who opted into the sex section responded to at least one question, suggesting that opting in was a reasonable indicator of willingness to engage with questions about sex. Other studies have relied on hypothetical vignettes to measure willingness to participate in sex research because vignettes make it possible to collect data from non-participants for comparison (Bouchard et al., 2019; Dawson et al., 2019; Wiederman, 1999; Wolchik et al., 1985). However, hypotheticals only provide a substitute for participation, and although it is difficult to do, it may be useful to explore participation bias further in non-hypothetical research. Future research may benefit from comparing real and hypothetical participation in sex survey research to determine whether hypothetical willingness accurately predicts participation. An additional strength of this study is that participants were masked to the inclusion of sex questions when they initially consented to the study. Past research has shown that participants who volunteer for research studies advertised as sex studies differ from those who volunteer for other types of studies (Bogaert, 1996; Saunders et al., 1985) and that there are benefits to masking participants to the inclusion of sexual content in a study (i.e., covert advertisement) to buffer against self-selection bias (Wiederman, 1993). Masking participants to sexual content may thus have ensured that participation bias stemming from attitudes about sex questions influenced participants only after they had already agreed to take part in the study, and that the level of sex-related bias is likely similar between the sample and the population.

The use of the AmeriSpeak sampling panel allowed for a demographically diverse and representative US sample. The panel uses a nonresponse follow-up method in which a random subsample of those who did not respond during the first recruitment phase is selected for further recruitment efforts. This method has been shown to increase sample representativeness, particularly among groups that are more reluctant to respond (Bilgen et al., 2018). However, sample bias cannot be completely eliminated, and panel participation itself may influence the study estimates. Furthermore, as with all studies on participation bias, the data need to be interpreted in the context of the historical moment in which the research was conducted. This study was conducted during the COVID-19 pandemic in 2020–2021, creating a potential for extrinsic circumstances to influence participants’ willingness to participate in online survey research. It is therefore important to continue studying changes in participation bias over time.

Participants were informed that the opt-in portion of the survey included “sensitive” questions about their overall “sexual experience, sexual function, and specific body parts”. Although broad, this wording may have biased participation decisions (see Supplemental Materials for full question wording).

This study demonstrated the benefit of using statistical weighting to address bias in research samples intended to represent large, national populations. However, this analytical method assumes that, once the known population characteristics used to construct the weights are accounted for, participants and non-participants respond similarly. Weighting cannot account for bias stemming from characteristics beyond these known demographic parameters, such as attitudes, which may be important to address in sex research (Copas et al., 1997; Fenton et al., 2001). Weighting can additionally increase the variance of estimates, decreasing power for hypothesis testing and precision in estimating effect sizes (Brick & Kalton, 1996). Nevertheless, weighting is a useful tool for combating nonresponse bias and adjusting samples to reflect known properties of populations, especially in the study of demographic characteristics. Weighting also provides a point of comparison between the original sample and a sample that represents the target population being studied.

Replication and extension of this methodology and these findings are needed, with further exploration of the effects of gender, sexual orientation, ethnicity, and race on participation. Future research on participation bias would also benefit from comparing participation rates for sex items with those for non-sexual topics and from exploring potential mediators such as sexual attitudes. The potential influence of participation bias in sex survey research should be considered in study design and interpretation alongside other challenges to validity like response accuracy (e.g., social desirability effects; King, 2022). Overall, it is important for sex researchers to acknowledge and evaluate participation bias in their reporting and to be transparent about sample representativeness to guide interpretation and use of research findings. Because of the important and far-reaching implications that sex survey results have for policy development and mental and physical healthcare, researchers must consistently evaluate the representativeness of samples and continually monitor participation bias as sex research methodologies evolve.

References

Barker, W. J., & Perlman, D. (1975). Volunteer bias and personality traits in sexual standards research. Archives of Sexual Behavior, 4(2), 161–171.

Bernstein, D. P., Ahluvalia, T., Pogge, D., & Handelsman, L. (1997). Validity of the Childhood Trauma Questionnaire in an adolescent psychiatric population. Journal of the American Academy of Child and Adolescent Psychiatry, 36(3), 340–348.

Bernstein, D. P., & Fink, L. (1998). Childhood Trauma Questionnaire: A retrospective self-report manual. The Psychological Corporation.

Bernstein, D. P., Stein, J. A., Newcomb, M. D., Walker, E., Pogge, D., Ahluvalia, T., & Zule, W. (2003). Development and validation of a brief screening version of the Childhood Trauma Questionnaire. Child Abuse & Neglect, 27(2), 169–190.

Bilgen, I., Dennis, J. M., & Ganesh, N. (2018). Nonresponse follow-up impact on AmeriSpeak panel sample composition and representativeness. Chicago, IL: NORC. Retrieved November 30, 2022 from https://amerispeak.norc.org/content/dam/amerispeak/research/pdf/Bilgen_etal_WhitePaper1_NRFU_SampleComposition.pdf

Bogaert, A. F. (1996). Volunteer bias in human sexuality research: Evidence for both sexuality and personality differences in males. Archives of Sexual Behavior, 25(2), 125–140.

Bouchard, K. N., Stewart, J. G., Boyer, S. C., Holden, R. R., & Pukall, C. F. (2019). Sexuality and personality correlates of willingness to participate in sex research. Canadian Journal of Human Sexuality, 28(1), 26–37.

Brick, J. M., & Kalton, G. (1996). Handling missing data in survey research. Statistical Methods in Medical Research, 5(3), 215–238.

Catania, J. A., McDermott, L. J., & Pollack, L. M. (1986). Questionnaire response bias and face-to-face interview sample bias in sexuality research. Journal of Sex Research, 22(1), 52–72.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences. Lawrence Erlbaum Associates.

Copas, A. J., Johnson, A. M., & Wadsworth, J. (1997). Assessing participation bias in a sexual behaviour survey: Implications for measuring HIV risk. AIDS, 11(6), 783–790.

Dale, L. P., Davidson, C., & Kolacz, J. (2020). Adverse Traumatic Experiences Scale. Unpublished manual.

Dawson, S. J., Huberman, J. S., Bouchard, K. N., McInnis, M. K., Pukall, C. F., & Chivers, M. L. (2019). Effects of individual difference variables, gender, and exclusivity of sexual attraction on volunteer bias in sexuality research. Archives of Sexual Behavior, 48(8), 2403–2417.

Dunne, M. P., Martin, N. G., Bailey, J. M., Heath, A. C., Bucholz, K. K., Madden, P. A., & Statham, D. J. (1997). Participation bias in a sexuality survey: Psychological and behavioural characteristics of responders and non-responders. International Journal of Epidemiology, 26(4), 844–854.

Evans, J. R., & Mathur, A. (2018). The value of online surveys: A look back and a look ahead. Internet Research, 28(4), 854–887.

Farkas, G. M., Sine, L. F., & Evans, I. M. (1978). Personality, sexuality, and demographic differences between volunteers and nonvolunteers for a laboratory study of male sexual behavior. Archives of Sexual Behavior, 7(6), 513–520.

Fenton, K. A., Johnson, A. M., McManus, S., & Erens, B. (2001). Measuring sexual behaviour: Methodological challenges in survey research. Sexually Transmitted Infections, 77(2), 84–92.

Gaither, G. A., Sellbom, M., & Meier, B. P. (2003). The effect of stimulus content on volunteering for sexual interest research among college students. Journal of Sex Research, 40(3), 240–248.

Gewirtz-Meydan, A., Hafford-Letchfield, T., Ayalon, L., Benyamini, Y., Biermann, V., Coffey, A., Jackson, J., Phelan, A., Voß, P., Geiger Zeman, M., & Zeman, Z. (2019). How do older people discuss their own sexuality? A systematic review of qualitative research studies. Culture, Health & Sexuality, 21(3), 293–308.

Griffith, M., & Walker, C. E. (1976). Characteristics associated with expressed willingness to participate in psychological research. The Journal of Social Psychology, 100(1), 157–158.

Groves, R. M., Cialdini, R. B., & Couper, M. P. (1992). Understanding the decision to participate in a survey. Public Opinion Quarterly, 56(4), 475–495.

Johnson, W. T., & DeLamater, J. D. (1976). Response effects in sex surveys. Public Opinion Quarterly, 40(2), 165–181.

King, B. M. (2022). The influence of social desirability on sexual behavior surveys: A review. Archives of Sexual Behavior, 51, 1495–1501.

Kolacz, J., Dale, L. P., Nix, E. J., Roath, O. K., Lewis, G. F., & Porges, S. W. (2020a). Adversity history predicts self-reported autonomic reactivity and mental health in US residents during the COVID-19 pandemic. Frontiers in Psychiatry, 11, 1–11.

Kolacz, J., Hu, Y., Gesselman, A. N., Garcia, J. R., Lewis, G. F., & Porges, S. W. (2020b). Sexual function in adults with a history of childhood maltreatment: Mediating effects of self-reported autonomic reactivity. Psychological Trauma: Theory, Research, Practice, and Policy, 12(3), 281–290.

Loeb, T. B., Rivkin, I., Williams, J. K., Wyatt, G. E., Carmona, J. V., & Chin, D. (2002). Child sexual abuse: Associations with the sexual functioning of adolescents and adults. Annual Review of Sex Research, 13(1), 307–345.

Lumley, T. (2004). Analysis of complex survey samples. Journal of Statistical Software, 9, 1–19.

Maniglio, R. (2009). The impact of child sexual abuse on health: A systematic review of reviews. Clinical Psychology Review, 29(7), 647–657.

McInroy, L. B. (2016). Pitfalls, potentials, and ethics of online survey research: LGBTQ and other marginalized and hard-to-access youths. Social Work Research, 40(2), 83–94.

Morokoff, P. J. (1986). Volunteer bias in the psychophysiological study of female sexuality. Journal of Sex Research, 22(1), 35–51.

Mustanski, B. S. (2001). Getting wired: Exploiting the Internet for the collection of valid sexuality data. Journal of Sex Research, 38(4), 292–301.

Nayak, M. S. D. P., & Narayan, K. A. (2019). Strengths and weaknesses of online surveys. IOSR Journal of Humanities and Social Science, 24(5), 31–38. https://doi.org/10.9790/0837-2405053138

Plaud, J. J., Gaither, G. A., Hegstad, H. J., Rowan, L., & Devitt, M. K. (1999). Volunteer bias in human psychophysiological sexual arousal research: To whom do our research results apply? Journal of Sex Research, 36(2), 171–179.

R Core Team. (2021). R: A language and environment for statistical computing (Version 4.1.2). Vienna, Austria: R Foundation for Statistical Computing. https://www.R-project.org/

Redfearn, A. A., & Laner, M. R. (2000). The effects of sexual assault on sexual attitudes. Marriage & Family Review, 30(1–2), 109–125.

Riggle, E. D., Rostosky, S. S., & Reedy, C. S. (2005). Online surveys for BGLT research: Issues and techniques. Journal of Homosexuality, 49(2), 1–21.

Rosenthal, R., & Rosnow, R. L. (1975). The volunteer subject. Wiley.

RStudio Team. (2021). RStudio: Integrated development environment for R. Boston, MA: RStudio, PBC. http://www.rstudio.com/

Saunders, D. M., Fisher, W. A., Hewitt, E. C., & Clayton, J. P. (1985). A method for empirically assessing volunteer selection effects: Recruitment procedures and responses to erotica. Journal of Personality and Social Psychology, 49(6), 1703–1712.

Strassberg, D. S., & Lowe, K. (1995). Volunteer bias in sexuality research. Archives of Sexual Behavior, 24(4), 369–382.

Twenge, J. M., Sherman, R. A., & Wells, B. E. (2015). Changes in American adults’ sexual behavior and attitudes, 1972–2012. Archives of Sexual Behavior, 44(8), 2273–2285.

Twenge, J. M., Sherman, R. A., & Wells, B. E. (2016). Changes in American adults’ reported same-sex sexual experiences and attitudes, 1973–2014. Archives of Sexual Behavior, 45(7), 1713–1730.

Twenge, J. M., Sherman, R. A., & Wells, B. E. (2017). Declines in sexual frequency among American adults, 1989–2014. Archives of Sexual Behavior, 46(8), 2389–2401.

U.S. Census Bureau. (2020). Current population survey. Retrieved from https://www.census.gov/programs-surveys/cps.html

Van Berlo, W., & Ensink, B. (2000). Problems with sexuality after sexual assault. Annual Review of Sex Research, 11(1), 235–257.

Wiederman, M. W. (1993). Demographic and sexual characteristics of nonresponders to sexual experience items in a national survey. Journal of Sex Research, 30(1), 27–35.

Wiederman, M. W. (1999). Volunteer bias in sexuality research using college student participants. Journal of Sex Research, 36(1), 59–66.

Wolchik, S. A., Braver, S. L., & Jensen, K. (1985). Volunteer bias in erotica research: Effects of intrusiveness of measure and sexual background. Archives of Sexual Behavior, 14(2), 93–107.

Wolchik, S. A., Spencer, S. L., & Lisi, I. S. (1983). Volunteer bias in research employing vaginal measures of sexual arousal. Archives of Sexual Behavior, 12(5), 399–408.

Wright, K. B. (2005). Researching Internet-based populations: Advantages and disadvantages of online survey research, online questionnaire authoring software packages, and web survey services. Journal of Computer-Mediated Communication, 10(3). https://doi.org/10.1111/j.1083-6101.2005.tb00259.x

Acknowledgements

The authors would like to thank Evan Nix for assistance with data cleaning and preparation.

Funding

None.

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by OR, JK, and XC. The first draft of the manuscript was written by OR and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors have no competing interests to declare that are relevant to the content of this article.

Human and Animal Rights

The questionnaire and methodology for this study were approved by the Indiana University Institutional Review Board (Study #2005785481).

Informed Consent

Informed consent was obtained from all participants included in this study.

Supplementary Information

Electronic supplementary material is available with the online version of this article.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Roath, O.K., Chen, X. & Kolacz, J. Predictors of Participation for Sexuality Items in a U.S. Population-Based Online Survey. Arch Sex Behav 52, 1743–1752 (2023). https://doi.org/10.1007/s10508-023-02533-6
