Abstract

The metaverse integrates physical and virtual realities, enabling humans and their avatars to interact in an environment supported by technologies such as high-speed internet, virtual reality, augmented reality, mixed and extended reality, blockchain, digital twins and artificial intelligence (AI), all enriched by effectively unlimited data. The metaverse has recently emerged in social media and entertainment platforms, but its extension to healthcare could have a profound impact on clinical practice and human health. As a group of academic, industrial, clinical and regulatory researchers, we identify unique opportunities for metaverse approaches in the healthcare domain. A metaverse of ‘medical technology and AI’ (MeTAI) can facilitate the development, prototyping, evaluation, regulation, translation and refinement of AI-based medical practice, especially medical imaging-guided diagnosis and therapy. Here, we present metaverse use cases, including virtual comparative scanning, raw data sharing, augmented regulatory science and metaversed medical intervention. We discuss issues relevant to the MeTAI ecosystem, including privacy, security and disparity. We also identify specific action items for coordinated efforts to build the MeTAI metaverse for improved healthcare quality, accessibility, cost-effectiveness and patient satisfaction.


Main

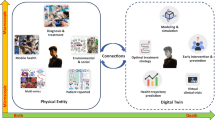

The metaverse has been a major focus of interest from both industry and academia in recent years, as extensively documented in review articles such as refs. 1,2, which span the years 1991 to 2022. The term ‘metaverse’ was originally coined in the 1992 science fiction novel Snow Crash3. According to the Oxford English Dictionary, which first listed the term in 2008, a metaverse is “[a] computer-generated environment within which users can interact with one another and their surroundings, a virtual world”4. Although this definition is easy to understand, it does not capture the richness of this evolving concept. The metaverse is often misrepresented as merely an extension of computer gaming and social media, or dismissed as an overhyped rebranding of virtual reality (VR) and augmented reality (AR)5. To realize its widely promised benefits, the metaverse needs to integrate the full capabilities of supporting technologies (high-speed internet involving 5G/6G, VR, AR, mixed reality (MR), extended reality (XR), digital twins, haptics, holography, secure computation and artificial intelligence (AI)) on a massive social and economic scale, enabling people to interact with one another and with avatars, AI agents and algorithms, as well as medical devices and facilities. As a parallel experiential dimension, the metaverse is intended to enhance the physical world, and our actions and decisions in it, rather than to replace it. Here, we envision a ‘medical technology and AI’ (MeTAI) ecosystem, illustrated in Fig. 1, including key elements such as virtual comparative scanning with digital twins of scanners on individualized patient avatars, ubiquitous and secure medical data access including raw tomographic data sharing, an enhanced regulatory framework to accommodate this transformation, and ‘metaversed’ medical intervention for unprecedented accessibility and superior performance.
We believe that now is the time to develop the MeTAI metaverse, well beyond current medical VR, AR and telemedicine6,7,8,9.

a, Virtual comparative scanning (to find the best imaging technology in a specific situation). b, Raw data sharing (to allow controlled open access to tomographic raw data). c, Augmented regulatory science (to extend virtual clinical trials in terms of scope and duration). d, ‘Metaversed’ medical intervention (to perform medical intervention aided by the metaverse). In an exemplary implementation of the MeTAI ecosystem, before a patient undergoes a real CT scan, their scans are first simulated on various virtual scanners to find the best imaging result (a). On the basis of this knowledge, a real scan is performed. Then, the resulting images are transferred to the patient’s medical care team and, with the patient’s consent and under secure computation protocols, the images and tomographic raw data can be made available to researchers (b). All these real and simulated images and data, as well as other medically relevant information, can be integrated in the metaverse and used in augmented clinical trials (c). Finally, if clinically indicated, the patient will undergo remote robotic surgery aided by the metaverse and be followed up in the metaverse for rehabilitation (d). Each of the four applications is further described in the main text.

Our proposed MeTAI metaverse has a number of precursors. In 1983, Vannier, a co-author of this Perspective, was among the first to generate three-dimensional (3D) computed tomography (CT) visualizations of the human skull for surgical planning10. ‘Medicine Meets Virtual Reality’ conferences were held from 1996 to 201611. Among the most influential participants was R. Satava, who led the effort to improve combat casualty care at the Telemedicine and Advanced Technology Research Center of the US Army12. Other conferences include the Medical Imaging and Augmented Reality series13. In 2012, a virtual environment was developed on the Second Life platform for the advanced X-ray CT facility at Virginia Tech14 to facilitate immersive training of nano-CT users (Fig. 2a). (This was one of many primitive, non-entertainment virtual worlds on Second Life and similar platforms15.) In 2010, a Laparoscopic Adjustable Gastric Banding Simulator was prototyped at Rensselaer Polytechnic Institute in collaboration with Brigham and Women’s Hospital (Fig. 2b), complementing the Advanced Multimodality Image-Guided Operating suite (AMIGO)16.

a, The Second Life Aided Training and Education (SLATE) system at Virginia Tech. b, The Laparoscopic Adjustable Gastric Banding Simulator at Rensselaer Polytechnic Institute. Panel a reproduced with permission from ref. 14, IOS Press. Panel b courtesy of Dr Suvranu De with FAMU-FSU College of Engineering.

Although these and other precursors have been valuable, the MeTAI metaverse should ideally improve and integrate isolated individual systems into a unified healthcare infrastructure. As the technologies enabling the metaverse advance, novel aspects of the metaverse will emerge to redefine biomedicine and society. We envision the benefits of a biomedical metaverse as analogous to those provided by computer-aided-design software in aerospace system engineering, where digital avatars of aircraft and spacecraft are rigorously tested and improved before fabrication in the physical world17. These technologies make it possible to interrogate, compare and improve complex systems efficiently and effectively, and to consider a broader spectrum of alterations without having to build and test each option before making a design choice.

The next section envisions important metaverse opportunities that are either novel or expand the scope of current instantiations. We then consider some of the important technical, social and ethical issues associated with developing a healthcare metaverse, and how these may be addressed. As the metaverse is still in its infancy, we hope that our views will stimulate imagination and spur action towards an optimal realization of this type of metaverse.

MeTAI applications

Given the revolutionary role that the metaverse can play in healthcare, we identify four important applications that exemplify MeTAI, with an emphasis on medical imaging: virtual comparative scanning, raw data sharing, augmented regulatory science and metaversed medical intervention. Although these applications have precursors, their novel aspects are exciting in terms of scope, scale, depth and mechanisms of integration.

Virtual comparative scanning

The continued development of advanced computer models of the human body and the machines that image them18 has culminated in the digital twin computing paradigm, which integrates data from physical and virtual objects19. To leverage these innovations further for medical imaging, we need to build vendor-specific virtual tomographic scanners, individualized computational avatars with anatomical properties resolvable by the scanners, and AI-enabled graphical tools to insert, remove and modify diseases in these avatars20. If a patient has a heart problem and is referred for a CT scan, potential pathologies can be simulated within the patient’s avatar and scanned using each virtual CT scanner type. The images can be virtually reconstructed using competing deep reconstruction methods21 and analysed using AI diagnostic tools22. In addition, physical avatars23 can be 3D-printed and scanned to emulate the real scans and improve the associated models and analyses. One advantage of MeTAI-deployed XR over isolated XR is the availability of a workflow based on large-scale collaboration so that various brands of hardware and software can be fairly compared and refined, with vivid and timely interactions between patients and professionals.
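A toy numerical sketch of the comparison loop described above may help make it concrete. It assumes three hypothetical virtual scanners, each reduced to just two parameters (image noise, and a box blur standing in for limited spatial resolution), a 2D avatar with one inserted lesion, and lesion contrast-to-noise ratio (CNR) as the figure of merit. Real virtual scanners would model the full acquisition physics and reconstruction, and task-based observers would replace CNR.

```python
import numpy as np

# Hypothetical virtual scanner models (not real products): each one is
# summarised by an image-noise level (HU) and an odd box-blur width (pixels).
SCANNERS = {
    "scanner_A": {"noise_std": 25.0, "blur": 1},
    "scanner_B": {"noise_std": 8.0, "blur": 9},
    "scanner_C": {"noise_std": 10.0, "blur": 1},
}


def make_avatar(size=128, lesion_radius=6, contrast=40.0):
    """Toy 2D patient avatar: uniform 40 HU tissue with one inserted round lesion."""
    yy, xx = np.mgrid[:size, :size]
    centre = size // 2
    lesion = (yy - centre) ** 2 + (xx - centre) ** 2 <= lesion_radius ** 2
    image = np.full((size, size), 40.0)
    image[lesion] += contrast
    return image, lesion


def box_blur(image, width):
    """Separable moving average (odd width only) standing in for limited resolution."""
    if width <= 1:
        return image.copy()
    kernel = np.ones(width) / width
    padded = np.pad(image, width // 2, mode="edge")
    rows = np.apply_along_axis(np.convolve, 0, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 1, rows, kernel, mode="valid")


def virtual_scan(avatar, noise_std, blur, rng):
    """Blur the avatar, then add white Gaussian noise."""
    return box_blur(avatar, blur) + rng.normal(0.0, noise_std, avatar.shape)


def lesion_cnr(image, lesion):
    """Contrast-to-noise ratio of the lesion against the surrounding background."""
    background = image[~lesion]
    return (image[lesion].mean() - background.mean()) / background.std()


def rank_scanners(seed=0):
    """Scan the same avatar on every virtual scanner and return the best one."""
    rng = np.random.default_rng(seed)
    avatar, lesion = make_avatar()
    scores = {
        name: lesion_cnr(virtual_scan(avatar, rng=rng, **params), lesion)
        for name, params in SCANNERS.items()
    }
    return max(scores, key=scores.get), scores


best, scores = rank_scanners()
print(best, {k: round(float(v), 2) for k, v in scores.items()})
```

In this toy set-up the low-noise but heavily blurred scanner_B is expected to lose to scanner_C, because blurring dilutes the contrast of a small lesion; exposing such patient- and task-specific trade-offs is the point of comparing scanners virtually.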

The above scenario would bring clear clinical benefits. First, every patient could be matched to the best-performing imaging resource and the most appropriate imaging protocol. Second, with virtual scanning capabilities, comprehensive performance assessments would strongly drive improvements in imaging and AI technologies. The resulting hybrid datasets, residing in the cloud, would support much larger virtual and mixed clinical trials to evaluate imaging devices and algorithms in terms of accuracy, robustness, uncertainty and generalizability. Furthermore, a patient avatar allows a ‘fantastic voyage’ to view any pathology identified by human or AI radiologists, as demonstrated in ref. 24. This can help physicians explain medical issues and interventional options, which in turn may reduce patient anxiety and improve outcomes. As an analogy, individuals would be unlikely to invest as much in cosmetics to enhance their outward appearance if mirrors were not available. The healthcare metaverse can visually inform individuals of their current and future health conditions, acting as an internal mirror that focuses attention inwards25. MeTAI coupled with activity trackers could encourage people to live healthier lifestyles. Eventually, patients facing multiple options could launch parallel worlds to assess the projected outcome of each. These projections should increase trust in a prescribed treatment and improve compliance.

Raw data sharing

The main source of medical imaging data is tomographic scanners, which generate large amounts of multi-dimensional measurements that are reconstructed into images using sophisticated algorithms. Lack of research access to tomographic raw data, such as CT sinograms, has long been a problem for researchers and has become more detrimental in the era of AI-based medical imaging. The need for raw data was recognized decades ago, because not all of the information in raw data is preserved after tomographic image reconstruction, and raw data are needed for algorithmic optimization. Furthermore, several groups have recently worked on end-to-end deep learning workflows from raw data to diagnostic findings, also referred to as ‘rawdiomics’26, which demand access to raw data.

Magnetic resonance imaging vendors made raw data available in the International Society for Magnetic Resonance in Medicine (ISMRM) raw data format in 201627. Vendor-agnostic public software for image reconstruction is available28 and promoted by industry29. However, these and other data-sharing and open-source efforts have not yet gained much traction in many cases. For example, projection-domain CT datasets remain generally unavailable outside CT manufacturers, except to their academic collaborators. There are two types of CT raw dataset: the ‘raw’ detector measurements, which require corrections, and the corrected datasets, which can be used directly for image reconstruction. The situation is even more complex for the photon-counting spectral CT scanners currently under development, which suffer from substantial inhomogeneity and nonlinearity across detector elements. From an industrial perspective, the main obstacle to sharing measurement data is the reluctance to expose proprietary information. For example, each CT vendor has developed its own approach to system calibration and data correction, and without these proprietary steps raw data cannot be reconstructed into images. One solution for CT raw data sharing is for vendors to publish the post-correction data. To do this, a standard should be defined for which corrections are to be performed on raw data30. One limitation of this solution is that some of the original information may be lost after the corrections. Moreover, in some studies researchers would like to turn certain correction steps on or off. To resolve these issues, academic–industrial partnerships are needed to define data sharing and conversion protocols.
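To make the distinction between the two types of CT raw dataset concrete, the sketch below applies a generic, textbook chain of corrections (dark-current subtraction, flat-field normalization by an air scan, then the Beer-Lambert log transform p = -ln(I/I0)), with switches that turn individual steps on or off. It is an illustration under simplifying assumptions, not any vendor's proprietary calibration, and it ignores effects such as beam hardening, scatter and detector nonlinearity. The detector gains, dark offsets and line integrals are synthetic.

```python
import numpy as np


def correct_projections(counts, air_counts, dark, *, dark_correction=True, flat_field=True):
    """Turn raw detector counts into post-log line integrals, p = -ln(I / I0).

    counts:     (n_views, n_detectors) measurements with the patient in the beam
    air_counts: (n_detectors,) air scan, which captures per-element gain
    dark:       (n_detectors,) dark-current offset recorded with the beam off
    The keyword flags let a researcher switch individual steps on or off.
    """
    signal = np.asarray(counts, dtype=float)
    reference = np.asarray(air_counts, dtype=float)
    if dark_correction:
        signal = signal - dark
        reference = reference - dark
    if not flat_field:
        # One scalar I0 for every element: gain differences leak into the data.
        reference = np.full_like(reference, reference.mean())
    # Guard the logarithm against non-positive values in photon-starved readings.
    return -np.log(np.clip(signal, 1e-6, None) / reference)


# Synthetic detector with +/-20 % gain inhomogeneity across 16 elements.
rng = np.random.default_rng(1)
gain = rng.uniform(0.8, 1.2, 16)
dark = np.full(16, 50.0)
i0 = 1e5
p_true = rng.uniform(0.0, 3.0, (4, 16))  # line integrals for 4 views
air = gain * i0 + dark
counts = gain * i0 * np.exp(-p_true) + dark  # noise-free 'raw' measurements

p_full = correct_projections(counts, air, dark)
p_no_flat = correct_projections(counts, air, dark, flat_field=False)
print(np.abs(p_full - p_true).max(), np.abs(p_no_flat - p_true).max())
```

With every step enabled, the simulated line integrals are recovered; disabling flat-fielding leaves the per-element gain pattern in the data, which is the kind of residual researchers may want to study when they can toggle corrections themselves.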

In MeTAI, virtual twins of all physical CT scanner models can scan digital patients to produce virtual ‘raw’ data. AI analyses can then be performed in the virtual data and image domains. Because they do not disclose vendor-specific sensitive information, such realistically simulated data should be easier to share than original data from a real machine. Image-domain modelling and simulation methods, such as HeartFlow31, already provide valuable diagnostic information. Synergistically, deep learning-based rawdiomics in the raw data domain may provide further information. For example, coronary artery stenoses may be better quantified in the sinogram domain, because motion artefacts caused by high and irregular heart rates in atrial fibrillation can contaminate reconstructed images32. In this regard, MeTAI can be a great facilitator of deep learning-based analyses on real and realistically simulated raw data, either directly or in dual domains (data and images).
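How a virtual scanner turns a digital patient into virtual ‘raw’ data can be sketched as a forward projection. The minimal example below assumes an idealized 2D parallel-beam geometry, a simple pixel-driven projector with one-pixel detector bins and monoenergetic Poisson counting; the phantom and its attenuation values are hypothetical. A realistic CT digital twin would additionally model fan- or cone-beam geometry, X-ray spectra, scatter and detector response.

```python
import numpy as np


def forward_project(image, angles_deg):
    """Pixel-driven parallel-beam projector.

    Each pixel's attenuation value is deposited in the detector bin nearest to
    where the ray through its centre meets the detector (bin spacing = one
    pixel). Returns a sinogram of shape (n_angles, n_det).
    """
    n = image.shape[0]
    n_det = int(np.ceil(n * np.sqrt(2))) + 1  # wide enough for every angle
    centre = (n - 1) / 2.0
    yy, xx = np.mgrid[:n, :n]
    x = (xx - centre).ravel()
    y = (centre - yy).ravel()
    values = image.ravel()
    sinogram = np.zeros((len(angles_deg), n_det))
    for i, theta in enumerate(np.deg2rad(angles_deg)):
        t = x * np.cos(theta) + y * np.sin(theta)  # signed detector coordinate
        bins = np.rint(t + (n_det - 1) / 2.0).astype(int)
        sinogram[i] = np.bincount(bins, weights=values, minlength=n_det)
    return sinogram


# Hypothetical digital patient: a water-like disk (0.02 per pixel) with a denser insert.
n = 64
yy, xx = np.mgrid[:n, :n]
r2 = (yy - 31.5) ** 2 + (xx - 31.5) ** 2
phantom = np.where(r2 <= 28 ** 2, 0.02, 0.0)
phantom[r2 <= 4 ** 2] = 0.03

angles = np.arange(0, 180, 1.0)
sinogram = forward_project(phantom, angles)  # post-log virtual 'raw' data

# Pre-log counts with Poisson noise, as an ideal photon-counting detector would record.
rng = np.random.default_rng(0)
counts = rng.poisson(1e4 * np.exp(-sinogram))
```

Every view of this projector preserves the total attenuation of the phantom, a simple sanity check that is also useful when validating more elaborate virtual scanners.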

Augmented regulatory science

AI, especially deep learning, has greatly advanced medical imaging, testing the limits of the regulatory science applied in the pre-market review of AI-enabled medical devices. It is widely recognized that deep neural networks often have generalizability issues and are vulnerable to adversarial attacks. These challenges must be addressed to optimize benefits and safety. In January 2021, the US Food and Drug Administration (FDA) published an action plan for furthering the oversight of AI-based software as a medical device (SaMD)33. One action highlighted in the plan is “regulatory science methods related to algorithm bias and robustness”. Diverse, high-quality and cost-effective big datasets are needed to optimize the overall workflow from data acquisition, through image reconstruction and analysis, to diagnostic findings. In practice, large clinical datasets are costly to obtain. In this context, the virtual and emulated scans described above will be invaluable.

As a proof of concept of the value of digital twin technology, an FDA team conducted the Virtual Imaging Clinical Trial for Regulatory Evaluation (VICTRE) study, in which 2,986 in silico patients were created to evaluate the performance of digital breast tomosynthesis (DBT) as a replacement for full-field digital mammography in breast cancer screening34. The entire imaging chain was simulated and validated, including virtual patients with and without breast cancer, the 2D and 3D X-ray mammographic image acquisition processes (shown in Fig. 3), and image interpretation. The improved lesion detectability of DBT in the VICTRE trial was consistent with the results of a comparative trial with human patients and radiologists that had been submitted to the FDA in a pre-market application for approval of a DBT device34. The study demonstrated that simulation tools may be viable sources of evidence for the regulatory evaluation of imaging devices. Another example is the Living Heart Project led by Dassault Systèmes in collaboration with the FDA and other leading research groups35, which examines the use of heart simulation as a source of digital evidence for cardiovascular device approval.
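The headline comparison in an in silico trial of this kind is a lesion-detectability figure of merit such as the area under the receiver operating characteristic curve (AUC). The sketch below computes the empirical AUC as the Mann-Whitney statistic, together with a paired bootstrap interval for the difference between two modalities. The observer scores are synthetic Gaussians with assumed detectability indices; they are not VICTRE data, and VICTRE's actual computational readers and statistical analysis are not reproduced here.

```python
import numpy as np


def auc(pos_scores, neg_scores):
    """Empirical AUC (Mann-Whitney statistic): probability that a lesion-present
    case scores higher than a lesion-absent case, counting ties as one half."""
    pos = np.asarray(pos_scores, dtype=float)[:, None]
    neg = np.asarray(neg_scores, dtype=float)[None, :]
    return float((pos > neg).mean() + 0.5 * (pos == neg).mean())


# Hypothetical in silico trial: n virtual patients with and n without a lesion,
# each imaged with both modalities. Observer scores are Gaussian with an assumed
# detectability index d' per modality (illustrative values only).
rng = np.random.default_rng(7)
n = 300
d_prime = {"FFDM": 1.0, "DBT": 2.0}
scores = {m: (rng.normal(d, 1.0, n), rng.normal(0.0, 1.0, n)) for m, d in d_prime.items()}
auc_obs = {m: auc(pos, neg) for m, (pos, neg) in scores.items()}

# Paired bootstrap: the same resampled virtual patients are used for both modalities.
diffs = []
for _ in range(200):
    ip = rng.integers(0, n, n)
    ineg = rng.integers(0, n, n)
    a = {m: auc(pos[ip], neg[ineg]) for m, (pos, neg) in scores.items()}
    diffs.append(a["DBT"] - a["FFDM"])
ci_low, ci_high = np.percentile(diffs, [2.5, 97.5])
print(auc_obs, (round(float(ci_low), 3), round(float(ci_high), 3)))
```

Because virtual patients can be imaged by every modality without additional dose or burden, paired designs like this come naturally in silico, which is one reason simulated trials can reach a conclusion with fewer real cases.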

To increase confidence in the use of computational modelling in regulatory submissions, the FDA issued draft guidance on ‘Assessing the Credibility of Computational Modeling and Simulation in Medical Device Submissions’36. The FDA has also launched the ‘Medical Device Development Tools’ (MDDT) programme, under which non-clinical assessment models, such as animal or computational models, and datasets can be qualified as MDDTs. Medical device vendors and developers can use qualified tools within the specified context to support the pre-market review of their products without further validation evidence. In this direction, MeTAI can generate datasets more comprehensively from patients, their avatars and phantoms (as described for virtual comparative scanning), integrate these datasets seamlessly, share the information broadly and enable dynamic evaluation of AI-based SaMD. Furthermore, MeTAI can combine datasets for multi-tasking and systems biomedicine. Using computational methods in an unprecedented hybrid environment such as MeTAI might be more informative, more robust and less burdensome than a traditional clinical trial, either real or virtual, for evaluating the performance of medical devices.

Metaversed medical intervention

Perhaps the most profound impact of MeTAI will be on how practitioners and patients use medical data and apply tools to understand diseases, select therapies and perform interventions. For example, such a virtual world allows complex cases in surgery and other therapies to be planned in advance, and procedures can be rehearsed repeatedly by trial and error. This is synergistic with current surgical systems, such as da Vinci (https://www.davincisurgery.com), which allow a surgeon to work either from an adjacent room or across the globe via high-speed internet. In MeTAI, surgeons can try different approaches (for example, in plastic surgery) on avatars. Radiotherapy is another example. Treatment plans are already routinely optimized via patient-specific computer simulations, a practice that MeTAI would extend to all medical interventions. Biological responses to radiation could also be simulated before and during a course of therapy to optimize the treatment response on the basis of the patient’s genetic information and previous patients’ response data, although computational limitations and uncertainties in biological models currently make this approach impractical for routine treatment planning. In the future, harm to organs at risk during radiation therapy could be substantially reduced by leveraging repositories of simulators and clinical knowledge that become immediately useful once incorporated into the metaverse.

None of these potential benefits comes for free, and busy practitioners could be stressed or distracted by MeTAI in the early phases of adoption37. As MeTAI is developed and deployed, a need to train and certify practitioners will emerge. A surgeon or interventional radiologist might initially find it awkward to use unfamiliar tools or robots. This is analogous to the danger posed by an aircraft that incorporates new types of automation, which could lead an untrained pilot to catastrophe. Surgical robotic simulators and curricula were developed to aid the introduction of new systems38, and some medical schools are introducing cadaver-less anatomy education using VR and AR platforms39. Human–computer interaction in the metaverse has also prompted computer scientists to assemble a Metaverse Knowledge Center (https://metaverse.acm.org/). Some companies are making progress in this area; for example, OSSO VR is developing ways to learn new surgical procedures using VR (https://www.ossovr.com/). Naturally, MeTAI is compatible with collaborative and continuous learning and multi-institutional projects, and it is convenient for team training and co-development through metaversed interactions comparable to those in the real world.

Of special interest is embodied AI40, in which AI agents learn not only from data but also through interactions. Avatars in MeTAI can be upgraded to embodied AI agents, facilitating bidirectional value alignment so that avatars can hold preferences regarding radiation dose, medical cost and the side-effect profiles of various therapeutic options41,42. Such avatar personalities also enable metaverse-based surveys, policymaking and interest-group formation.

MeTAI ecosystem

Just as social media became ubiquitous, MeTAI could become a transformative backbone of healthcare as these and other use cases are realized. Importantly, the MeTAI ecosystem is differentiated from other medical imaging simulation pipelines by the unparalleled number and diversity of its datasets, its massive social scale, and its emphasis on user immersion, interaction and collaboration. As such, it requires an architecture and infrastructure that harmoniously integrate patients, physicians, researchers, algorithms, devices and data. Given the revolutionary nature of MeTAI, there are clearly foreseeable challenges that call for prompt action to chart an optimal course for its development.

Privacy and security

Privacy and confidentiality are of critical importance for MeTAI. Some medical data acquired in the metaverse must be protected under existing or future privacy legislation, such as the Health Insurance Portability and Accountability Act (HIPAA) in the United States. Secure computation techniques, including blockchains, are important tools in a zero-trust environment43,44. A well-designed MeTAI system with secure computing can use raw data without disclosing sensitive or private information. With federated learning as an initial step45,46,47, many further opportunities can be explored to preserve the integrity of patients’ data while using this information to advance clinical practice and healthcare. We advocate that patients take control of their own data and avatar(s), supported by blockchain technology, so that their digital healthcare properties can be shared as they wish.
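Federated learning can be sketched with federated averaging (FedAvg): each site trains on its own data and shares only model parameters, which a coordinator averages in proportion to site size. The example assumes three hypothetical hospitals, synthetic data and a linear least-squares model. Averaging alone does not guarantee privacy; a deployed system would typically add protections such as secure aggregation.

```python
import numpy as np


def local_update(w, X, y, lr=0.1, epochs=5):
    """A few epochs of full-batch gradient descent on one site's private data
    (least-squares loss). Only the updated weight vector leaves the site."""
    for _ in range(epochs):
        grad = X.T @ (X @ w - y) / len(y)
        w = w - lr * grad
    return w


def federated_averaging(sites, n_features, rounds=50):
    """FedAvg: broadcast global weights, train locally at every site, then
    average the returned weights in proportion to each site's sample count."""
    w_global = np.zeros(n_features)
    total = sum(len(y) for _, y in sites)
    for _ in range(rounds):
        local = [local_update(w_global.copy(), X, y) for X, y in sites]
        w_global = sum(len(y) / total * w for (_, y), w in zip(sites, local))
    return w_global


# Three hypothetical hospitals whose synthetic data never leave their premises.
rng = np.random.default_rng(0)
w_true = np.array([2.0, -1.0, 0.5])
sites = []
for n_cases in (200, 50, 120):
    X = rng.normal(size=(n_cases, 3))
    sites.append((X, X @ w_true + rng.normal(0.0, 0.1, n_cases)))

w_fed = federated_averaging(sites, n_features=3)
print(np.round(w_fed, 3))
```

Weighting by sample count keeps a small site from dominating the global model; other weighting schemes exist when sites differ markedly in data quality or distribution.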

At least initially, there would be heterogeneous and hierarchical dataset structures and accessibility options. For example, many de-identified datasets are already, or will eventually be, publicly available, such as those used in various deep imaging challenges. However, some high-value or sensitive datasets may be shared only within a consortium, a healthcare system or a multi-institutional project, and paywalls between such groups may be feasible and beneficial. New sharing models are already emerging; for example, companies such as Segmed currently sell anonymized patient data to AI developers (https://www.segmed.ai). Our proposed MeTAI should facilitate the evolution of different paywall models, such as subscription, pay per use and limited trial. Non-fungible tokens are also a viable option48. As with software and services, digital properties can be priced using algorithms49.

Cybersecurity is a well-established field50 that continues to evolve to address a constant barrage of new challenges. Social metaverses have already encountered harassment issues. In MeTAI, harassment can manifest as adversarial attacks on algorithms, modifications to avatars and conventional human misbehaviour. These problems are inherent in all metaverses, and methods and rules are being developed to address them. For example, Meta established a four-foot personal zone to deter groping in VR51. For adversarial defence in medical imaging, we recently published two papers on stabilizing image reconstruction neural networks by synergizing analytic modelling, compressed sensing, iterative refinement and deep learning (ACID)52,53. It has also been reported that the trustworthiness of AI model explainability can be vulnerable to subtle perturbations of the input54. MeTAI is subject to the same safety concerns as any other software or hardware product. However, we are optimistic that all these issues can be gradually addressed. The promise is that the quality of the evidence derived from MeTAI will improve rapidly, and the resulting digital evidence, once validated, will facilitate clinical translation of various innovations.
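Why small input perturbations can matter is easiest to see for a linear classifier attacked with the well-known fast gradient sign method (FGSM). The sketch uses hypothetical random weights and a random input in place of a trained imaging model; it illustrates the attack only and is unrelated to the ACID defence.

```python
import numpy as np


def fgsm_linear(x, w, label, eps):
    """Fast gradient sign method against a linear classifier f(x) = w @ x + b.

    For the margin loss -label * f(x), the gradient with respect to the input
    is -label * w, so every pixel moves by eps in the direction that hurts
    the true class (label in {-1, +1})."""
    return x + eps * np.sign(-label * w)


rng = np.random.default_rng(3)
d = 64 * 64  # a flattened 64x64 image
w = rng.normal(0.0, 1.0, d)  # hypothetical trained weights
b = 0.0
x = rng.normal(0.0, 1.0, d)  # hypothetical input image
label = 1 if w @ x + b > 0 else -1  # treat the model's own decision as the label
margin = label * (w @ x + b)

# For a linear model, the smallest L-infinity change that reaches the decision
# boundary is margin / ||w||_1; add 1 % so that the prediction actually flips.
eps = 1.01 * margin / np.abs(w).sum()
x_adv = fgsm_linear(x, w, label, eps)
print(f"eps = {eps:.4f} (pixel std = 1.0), new decision = {np.sign(w @ x_adv + b):+.0f}")
```

Because all 4,096 pixels move by eps in a coordinated direction, a per-pixel change far smaller than the natural pixel variation is enough to cross the decision boundary; deep networks show analogous behaviour locally, which motivates the robustness work cited above.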

Management and investment

Once metaverse-based interactions and interventions using XR and other tools become available, our envisioned metaverse-based applications and new scenarios will bring additional responsibilities and operational overheads. We expect that healthcare savings will eventually be realized and that these, combined with the monetization of some MeTAI data through paywalls (as discussed above), will compensate for the augmented workflow. As AI becomes more advanced, the need for human review of MeTAI-derived images and analyses may be reduced or obviated, and the information provided by MeTAI will become essential to guide healthcare. Distributed, cloud and edge-computing systems are well suited to accommodating diverse and massive datasets. The current Medical Imaging and Data Resource Center (MIDRC) effort (https://www.midrc.org/), which was funded with over US$20 million to build a COVID-19 dataset of tens of thousands of cases, is an excellent example to follow for other diseases and imaging modalities. Building on the infrastructure, data structures and AI tools already developed by the MIDRC, the cost of building a future dataset of comparable size is estimated to be far lower, roughly US$3 million to US$6 million, which is not as large a sum as one might think. Legislation on data sharing and metaverse development will be needed; for example, stipulations that a dataset or an algorithm cannot be deleted within a well-defined term may be required. Blockchain technology, often regarded as a highly desirable component of the metaverse, is still under active development, and its computational efficiency issues are being addressed55. In a blockchain platform, data provenance can be established by developers and interested users via consensus mechanisms such as proof of work, proof of stake or proof of history56. It remains to be seen how this ecosystem will evolve. However, we do not consider blockchain technology indispensable to MeTAI.
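The provenance idea can be illustrated with a toy hash chain secured by proof of work. In this sketch each record stores a SHA-256 digest of a shared artefact, each block commits to the hash of its predecessor, and a block is accepted only if its own hash starts with a fixed number of zeros. The artefact names and contents are hypothetical, the difficulty is deliberately tiny, and there is no network, consensus protocol, proof of stake or proof of history; it is a teaching sketch, not a blockchain platform.

```python
import hashlib
import json

DIFFICULTY = 3  # leading hex zeros required; a toy setting far below real networks
GENESIS = "0" * 64


def block_hash(block):
    """SHA-256 of a block's canonical JSON encoding."""
    return hashlib.sha256(json.dumps(block, sort_keys=True).encode()).hexdigest()


def append_record(chain, record):
    """Proof of work: search for a nonce whose block hash meets the difficulty."""
    prev_hash = block_hash(chain[-1]) if chain else GENESIS
    nonce = 0
    while True:
        block = {"record": record, "prev_hash": prev_hash, "nonce": nonce}
        if block_hash(block).startswith("0" * DIFFICULTY):
            chain.append(block)
            return block
        nonce += 1


def verify(chain):
    """Recompute every link; editing any earlier record breaks the chain."""
    prev_hash = GENESIS
    for block in chain:
        digest = block_hash(block)
        if block["prev_hash"] != prev_hash or not digest.startswith("0" * DIFFICULTY):
            return False
        prev_hash = digest
    return True


# Hypothetical provenance records: a content digest for each shared artefact.
chain = []
artefacts = [("sinogram_set_v1", b"raw sinogram bytes"),
             ("recon_model_v2", b"model weights"),
             ("avatar_042", b"avatar mesh")]
for name, content in artefacts:
    append_record(chain, {"artefact": name, "sha256": hashlib.sha256(content).hexdigest()})

print(verify(chain))  # True
chain[0]["record"]["artefact"] = "sinogram_set_v1_edited"  # tamper with history
print(verify(chain))  # False
```

The tampered first block no longer matches the hash committed by its successor, so the edit is detected without trusting whoever stores the chain.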

The adoption of MeTAI will require investment in software, hardware and infrastructure, which raises the question of how MeTAI development will be funded. We envision that the development and adoption of MeTAI will proceed in phases, each of which could be financed differently. First, in the exploratory phase, many enabling technologies will need to be developed by companies and universities, funded mainly by grants from government and industry. Once feasibility is established, MeTAI will enter an early adoption phase, in which applications that offer incremental improvements with evident benefits could be adopted to enable cost savings. Given this immediate value and the potential financial savings, established companies and venture capitalists will have strong incentives to fund MeTAI development. In the next phase, MeTAI technologies with high potential payoff, but possibly higher risk, would be adopted; such efforts are likely to be funded through venture capital. Technology innovators and early adopters will develop, test and demonstrate benefits to justify and attract investment57. Assessment methods developed for other situations58,59 can be adapted to determine the value of MeTAI.

Disparity reduction

The application of identifying the optimal scanner described above might not be relevant to patients in regions where only one machine is available. However, the imaging data acquisition, reconstruction and post-processing parameters can still be optimized for the patient on the available scanner. Academia and industry should rise to this disparity challenge by working towards equitable healthcare. Cost-effective scanners, such as low-cost CT, low-field magnetic resonance imaging and tablet-based ultrasound systems, are under active development60, along with software for scanner fleet management. Medical imaging companies will continue to address device accessibility, as demonstrated by the design and marketing of super-value products in countries such as India over the past 15 years61. The relevant research and development require iterations, and MeTAI provides an ideal environment with prompt feedback paths. As MeTAI is likely to further boost the performance of low-cost scanners, a reduction in disparity can be expected. Currently, scanners are still physically operated by technologists; MeTAI provides a venue to share high-quality resources and expertise, so that AI models and experts can supervise the use of imagers anywhere. On the therapy side, efforts to improve global health over the past decades have dramatically enhanced the software and hardware available in low- and middle-income countries, although providing the human expertise needed to use them well remains challenging. MeTAI could help overcome these barriers. We predict that knowledge obtained from MeTAI will make the evolution of devices and algorithms faster, cheaper and better. Our hope is that disparities in healthcare will eventually be eliminated, with MeTAI as part of the driving force.

Conclusion

The metaverse is the confluence of rapid and profound technical and sociological developments. It contains avatars that represent us and duplicates of many objects around us, such as medical imaging equipment, and has the potential to encompass many disciplines62,63,64. In addition to entertainment and social networking, metaverse applications include professional training, K-12 (kindergarten through 12th grade) and college education, supply chains, real estate marketing, and MeTAI as envisioned here. The 38-fold growth in telemedicine during the COVID-19 pandemic65 suggests how quickly elements of a metaverse can gain traction, an indication strongly supported by recent perspectives on medical applications of the metaverse66,67. We are confident that MeTAI, the healthcare metaverse, will eventually become a reality, and it is high time to initiate collective efforts to pioneer it. These efforts include, but are not limited to, harvesting low-hanging fruit such as advanced XR-based healthcare experiences; demonstrating the feasibility of new metaverse applications such as virtual comparative scanning and raw data sharing; and developing MeTAI to be cost-effective, user friendly, high performance, reliable, safe, equitable and ethical, while moderating ‘metaverse hype’ with expectations that are both measured and measurable.

References

Huynh-The, T. et al. Artificial intelligence for the metaverse: a survey. Preprint at https://arxiv.org/abs/2202.10336 (2022).

Park, S. M. & Kim, Y. G. A metaverse: taxonomy, components, applications, and open challenges. IEEE Access 10, 4209–4251 (2022).

Stephenson, N. Snow Crash (Bantam Books, 1992).

Oxford English Dictionary (Oxford Univ. Press, 1989).

Bar-Zeev, A. The metaverse hype cycle. Medium https://medium.com/predict/the-metaverse-hype-cycle-58c9f690b534 (2022).

Venkatesan, M. et al. Virtual and augmented reality for biomedical applications. Cell Rep. Med. 2, 100348 (2021).

Ghaednia, H. et al. Augmented and virtual reality in spine surgery, current applications and future potentials. Spine J. 21, 1617–1625 (2021).

Lungu, A. J. et al. A review on the applications of virtual reality, augmented reality and mixed reality in surgical simulation: an extension to different kinds of surgery. Expert Rev. Med. Devices 18, 47–62 (2021).

Taylor, S. & Soneji, S. Bioinformatics and the metaverse: are we ready? Front. Bioinform. 2, 863676 (2022).

Vannier, M. W., Marsh, J. L. & Warren, J. O. Three dimensional CT reconstruction images for craniofacial surgical planning and evaluation. Radiology 150, 179–184 (1984).

Weghorst, S. J., Sieburg, H. B. & Morgan, K. S. Health Care in the Information Age, Technology and Informatics: Medicine Meets Virtual Reality (IOP, 1996).

Satava, R. M. Robotic surgery: from past to future—a personal journey. Surg. Clin. North Am. 83, 1491–1500 (2003).

Peters, T. M. et al. Mixed and Augmented Reality in Medicine (CRC Press, 2018).

Mishra, S. et al. SLATE: virtualizing multiscale CT training. J. Xray Sci. Technol. 20, 239–248 (2012).

Chandra, Y. & Leenders, M. A. A. M. User innovation and entrepreneurship in the virtual world: a study of Second Life residents. Technovation 32, 464–476 (2012).

Jolesz, F. A. Intraoperative Imaging and Image-Guided Therapy (Springer, 2014).

Glaessgen, E. & Stargel, D. The digital twin paradigm for future NASA and US Air Force vehicles. In 53rd AIAA/ASME/ASCE/AHS/ASC Structures, Structural Dynamics and Materials Conference AIAA 2012-1818 (AIAA, 2012).

Human digital twins: creating new value beyond the constraints of the real world. NTT https://www.rd.ntt/e/ai/0004.html (2022).

Fuller, A. et al. Digital twin: enabling technologies, challenges and open research. IEEE Access 8, 108952–108971 (2020).

Ruiz, N. et al. DreamBooth: fine tuning text-to-image diffusion models for subject-driven generation. Preprint at https://arxiv.org/abs/2208.12242 (2022).

Wang, G., Ye, J. C. & De Man, B. Deep learning for tomographic image reconstruction. Nat. Mach. Intell. 2, 737–748 (2020).

Litjens, G. et al. A survey on deep learning in medical image analysis. Med. Image Anal. 42, 60–88 (2017).

Jahnke, P. et al. Radiopaque three-dimensional printing: a method to create realistic CT phantoms. Radiology 282, 569–575 (2017).

McGhee, J. et al. Journey to the centre of the cell (JTCC): a 3D VR experience derived from migratory breast cancer cell image data. In SIGGRAPH ASIA 2016 VR Showcase 11 (ACM, 2016).

Bosworth, H. B. et al. The role of psychological science in efforts to improve cardiovascular medication adherence. Am. Psychol. 73, 968 (2018).

Kalra, M., Wang, G. & Orton, C. G. Radiomics in lung cancer: its time is here. Med. Phys. 45, 997–1000 (2018).

Inati, S. J. et al. ISMRM raw data format: a proposed standard for MRI raw datasets. Magn. Reson. Med. 77, 411–421 (2017).

Hansen, M. S. & Sorensen, T. S. Gadgetron: an open source framework for medical image reconstruction. Magn. Reson. Med. 69, 1768–1776 (2013).

Open-Source Software Tools for MR Pulse Design, Simulation & Reconstruction (ISMRM, accessed 1 October 2022); https://www.ismrm.org/19/program_files/WE21.htm

Chen, B. et al. An open library of CT patient projection data. Proc. SPIE 9783, 97831B (2016).

Gaur, S. et al. Rationale and design of the HeartFlowNXT (HeartFlow analysis of coronary blood flow using CT angiography: NeXt sTeps) study. J. Cardiovasc. Comput. Tomogr. 7, 279–288 (2013).

De Man, Q. et al. A two-dimensional feasibility study of deep learning-based feature detection and characterization directly from CT sinograms. Med. Phys. 46, e790–e800 (2019).

Artificial Intelligence and Machine Learning (AI/ML) Software as a Medical Device Action Plan (FDA, 2021).

Badano, A. et al. Evaluation of digital breast tomosynthesis as replacement of full-field digital mammography using an in silico imaging trial. JAMA Netw. Open 1, e185474 (2018).

The Living Heart Project (Dassault Systèmes, accessed 1 October 2022); https://www.3ds.com/products-services/simulia/solutions/life-sciences-healthcare/the-living-heart-project/

Assessing the Credibility of Computational Modeling and Simulation in Medical Device Submissions (FDA, 2021); https://www.fda.gov/media/154985/download

Xi, N. et al. The challenges of entering the metaverse: an experiment on the effect of extended reality on workload. Inf. Syst. Front. https://doi.org/10.1007/s10796-022-10244-x (2022).

Chen, R. et al. A comprehensive review of robotic surgery curriculum and training for residents, fellows, and postgraduate surgical education. Surg. Endosc. 34, 361–367 (2020).

Cleveland Clinic creates e-anatomy with virtual reality. Cleveland Clinic https://newsroom.clevelandclinic.org/2018/08/23/cleveland-clinic-creates-e-anatomy-with-virtual-reality/ (2018).

Duan, J. et al. A survey of embodied AI: from simulators to research tasks. IEEE Trans. Emerg. Top. Comput. Intell. 6, 230–244 (2022).

Wiedeman, C., Wang, G. & Kruger, U. Modeling of moral decisions with deep learning. Vis. Comput. Ind. Biomed. Art 3, 27 (2020).

Yuan, L. et al. In situ bidirectional human-robot value alignment. Sci. Robot. 7, eabm4183 (2022).

Yao, A. C.-C. How to generate and exchange secrets. In 27th Annual Symposium on Foundations of Computer Science 162–167 (IEEE, 1986).

Zhang, Y. X. Blockchain viewed from mathematics. Not. Am. Math. Soc. 68, 1740–1751 (2021).

Adnan, M. et al. Federated learning and differential privacy for medical image analysis. Sci. Rep. 12, 1953 (2022).

Dayan, I. et al. Federated learning for predicting clinical outcomes in patients with COVID-19. Nat. Med. 27, 1735–1743 (2021).

Kaissis, G. A. et al. Secure, privacy-preserving and federated machine learning in medical imaging. Nat. Mach. Intell. 2, 305–311 (2020).

Nadini, M. et al. Mapping the NFT revolution: market trends, trade networks, and visual features. Sci. Rep. 11, 20902 (2021).

Yao, L. et al. A decentralized private data transaction pricing and quality control method. In 2019 IEEE International Conference on Communications 18866587 (IEEE, 2019).

Ghafur, S. et al. The challenges of cybersecurity in health care: the UK National Health Service as a case study. Lancet Digit. Health 1, e10–e12 (2019).

Frenkel, S. & Browning, K. The metaverse’s dark side: here come harassment and assaults. The New York Times https://www.nytimes.com/2021/12/30/technology/metaverse-harassment-assaults.html (2021).

Wu, W. et al. Stabilizing deep tomographic reconstruction: Part A. Hybrid framework and experimental results. Patterns 3, 100474 (2022).

Wu, W. et al. Stabilizing deep tomographic reconstruction: Part B. Convergence analysis and adversarial attacks. Patterns 3, 100475 (2022).

Zhang, J. et al. Overlooked trustworthiness of explainability in medical AI. Preprint at medRxiv https://doi.org/10.1101/2021.12.23.21268289 (2021).

Matheson, R. A faster, more efficient cryptocurrency. MIT News https://news.mit.edu/2019/vault-faster-more-efficient-cryptocurrency-0124 (2019).

Blake, T. Proof of work vs. proof of stake vs. proof of history. Cult of Money https://www.cultofmoney.com/proof-of-work-vs-proof-of-stake-vs-proof-of-history/ (2021).

Talamini, M. A. et al. A prospective analysis of 211 robotic-assisted surgical procedures. Surg. Endosc. Other Interv. Tech. 17, 1521–1524 (2003).

Leape, L. L. & Berwick, D. M. Five years after To Err Is Human: what have we learned? JAMA 293, 2384–2390 (2005).

Friedman, C. P., Wyatt, J. C. & Ash, J. S. Evaluation Methods in Biomedical and Health Informatics (Springer, 2022).

Peng, Y. et al. Top-level design and simulated performance of the first portable CT-MR scanner. IEEE Access 10, 102325–102333 (2022).

Angeli, F., Metz, A. & Raab, J. Organizing for Sustainable Development: Addressing the Grand Challenges (Routledge, 2022).

Lee, L.-H. et al. All one needs to know about metaverse: a complete survey on technological singularity, virtual ecosystem, and research agenda. Preprint at https://arxiv.org/abs/2110.05352 (2021).

Wong, K. C. et al. Review and future/potential application of mixed reality technology in orthopaedic oncology. Orthop. Res. Rev. 14, 169–186 (2022).

Genske, U. & Jahnke, P. Human Observer Net: a platform tool for human observer studies of image data. Radiology 303, 524–530 (2022).

Bestsennyy, O., Gilbert, G., Harris, A. & Rost, J. Telehealth: a quarter-trillion-dollar post-COVID-19 reality? McKinsey https://www.mckinsey.com/industries/healthcare-systems-and-services/our-insights/telehealth-a-quarter-trillion-dollar-post-covid-19-reality (2021).

Skalidis, I., Muller, O. & Fournier, S. CardioVerse: the cardiovascular medicine in the era of metaverse. Trends Cardiovasc. Med. https://doi.org/10.1016/j.tcm.2022.05.004 (2022).

Yang, D. et al. Expert consensus on the metaverse in medicine. Clin. eHealth 5, 1–9 (2022).

Acknowledgements

This work was supported in part by NIH grants R01CA237267, R01HL151561, R01CA227289, R37CA214639, R01CA237269, R01CA233888, R01EB026646 and R01EB032716. We acknowledge discussions on data sharing with S. Zuehlsdorff at Siemens Medical Solutions USA. Figure 1 originally designed by the authors was artistically rendered by M. Esposito (https://www.markesposito.me). The mention of commercial products, their sources or use in connection with materials reported herein is not to be construed as either an actual or implied endorsement of such products by the Department of Health and Human Services.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review information

Nature Machine Intelligence thanks Ioannis Skalidis and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, G., Badal, A., Jia, X. et al. Development of metaverse for intelligent healthcare. Nat Mach Intell 4, 922–929 (2022). https://doi.org/10.1038/s42256-022-00549-6

DOI: https://doi.org/10.1038/s42256-022-00549-6