Abstract

The integration of small-scale Photovoltaics (PV) systems (such as rooftop PVs) decreases the visibility of power systems, since the real demand load is masked. Most rooftop systems are behind the metre and cannot be measured by household smart meters. To overcome the challenges mentioned above, this paper proposes an online solar energy disaggregation system to decouple the solar energy generated by rooftop PV systems and the ground truth demand load from net measurements. A 1D Convolutional Neural Network (CNN) Bidirectional Long Short-Term Memory (BiLSTM) deep learning method is used as the core algorithm of the proposed system. The system takes a wide range of online information (Advanced Metering Infrastructure (AMI) data, meteorological data, satellite-driven irradiance, and temporal information) as inputs to evaluate PV generation, and the system also enables online and offline modes. The effectiveness of the proposed algorithm is evaluated by comparing it to baselines. The results show that the proposed method achieves good performance under different penetration rates and different feeder levels. Finally, a transfer learning process is introduced to verify that the proposed system has good robustness and can be applied to other feeders.

Similar content being viewed by others

Introduction

Motivation

The global cumulative Photovoltaics (PV) capacity increased from 480 GW in 2018 to 583.5 GW by the end of 2019, representing a 21% growth over the previous year [1, 2]. Among all newly installed capacities, rooftop PV (residential PV) increased by 16 GW, occupying 15% of all solar power capacity that was installed in 2019. Although high PV penetration reduces greenhouse gas emissions and leads to an environmentally friendly world, it also significantly changes the existing power system structure. One significant problem, the California "duck curve", is caused by the high penetration of sustainable energy (solar energy, wind energy, etc.) into the netload. A significant drop is observed midday, causing an imbalance between demand and supply. It is vital to increase the visibility of these renewable energy generations to better manage the power system. Among all installed solar panels, small-scale PV or rooftop PV occupies nearly 50% of the overall capacity. Unlike the large-scale PV stations that are measured individually, most rooftop PV is behind the meter, which means that the power generated by PV cannot be recorded by residential smart meters. A lack of visibility would limit demand-side management, including the scheduling of the short-term operations implemented by grid operators. Although PV meters are installed in some countries to measure the solar energy generation, the consumers may choose to not report their PV generation to the Distribution Network Operator (DNO), and the consumers may install additional PV panels which are not registered, which would cause mismatch between the real PV generation and the estimated generation [3]. Moreover, referring to Data Access and Privacy Framework published by the Department for Business, Energy & Industrial Strategy (DBEIS)) in the U.K. [4], to better protect consumers’ privacy, the DNO is prevented from accessing individual’s meter reading directly, hence obtaining the PV generation of a regional area is challenging both technically and ethically. The conventional method requires installing an electricity meter beside the solar panel of each house, which requires extra measurement devices and communication channels. These devices largely increase budgets and invade personal privacy [5]. Moreover, the ownership of these devices also raises conflicts among stakeholders.

Problem statement

The feeder system is shown in Fig. 1. Considering a Grid Supply Point (GPS), the feeder connects a few houses and unmonitored rooftop PV systems. The power utility has the authority to access the real-time grid measurement of a feeder/substation (active power \({P}_{\mathrm{Net}}(t)\), reactive power \({Q}_{\mathrm{Net}}(t)\), etc.). The components of the feeder include the total demand load of all residences served by the feeder, \({P}_{\mathrm{Load}}\); the power generated by the rooftop PVs in this area, \({P}_{PV}\); and power loss \(\epsilon \):

The target of the proposed system is to decouple \({P}_{\mathrm{Net}}(t)\) into \({P}_{\mathrm{Load}}(t)\) and \({P}_{PV}(t)\), as shown in Fig. 2. It is observed that the penetration of solar energy distorts the original demand load curve, making it difficult to recover the original demand load from the masked netload.

Example of time series \({P}_{PV}\left(t\right), {P}_{\mathrm{Net}}\left(t\right), \mathrm{and }{P}_{\mathrm{Load}}(t)\) under different weather conditions (Data source: Pecan Street Dataport [6])

There are two research topics which are similar to the solar energy disaggregation task investigated in this paper, which are solar energy forecasting and house-level Energy disaggregation (also called Non-Intrusive Load Monitoring (NILM) [7]); however, there are significant differences between these problems, as shown in Table 1. The difference between NILM and solar energy disaggregation at GSP techniques is the hierarchical level, while solar energy disaggregation at GSP focuses on large-scale areas, such as the distribution network or the feeder head with megawatts (MW) or even gigawatt (GW) consumption, the NILM technique aims to analyse a small-scale area, such as a single house or building with kilowatt (kW) consumption. As for solar energy forecasting, the target is to forecast the future solar energy given historical PV generation data [8], the input of the solar energy disaggregation model is the netload measured at the feeder head, while the historical PV generation data is not available.

Related work

Methods to decouple solar energy and the demand load are discussed in the literature. These methods can be divided into three categories: model-based methods, upscaling methods, and data-driven methods, as shown in Table 2. Model-based methods estimate the total solar energy in a region by constructing an equivalent PV system. Taking grid measurements, weather information, and irradiance information as inputs, the parameters of the equivalent system are optimized by solving an optimization problem. Few regression methods, including the Contextually Supervised Source Separation (CSSS) methodology [3] and its extension [9], convex optimization [10, 11] have been proposed. In addition, the ambient temperature is also adopted as a correction factor to improve the model accuracy in some works [9, 11]. In summary, model-based methods require knowledge of the PV module model (angle of solar radiation, series resistance, etc.). Lacking vital information would lead to a large error between the ground truth and estimation.

Upscaling methods select a small number of representative PV systems as the reference to estimate the overall PV generation of the entire area. In [12, 13], a method that combines a data dimension reduction procedure and a mapping function (linear regression and Kalman filter) are proposed. Moreover, a hybrid method that combines upscaling with satellite-derived data is proposed in [14, 15]. Next-generation high-resolution satellites produce highly accurate irradiance estimates that can improve performance. An unsupervised upscaling method is introduced in [16], the method first determines the upscaling factor by comparing the netload with/without PV panels installed, and then the PV generation is estimated with the solar irradiance data and the upscaling factor. This method has high adaptivity and does not require train the model with historical data. However, the results show that the accuracy of this unsupervised learning method is much lower than traditional supervised learning methods. Moreover, this method requires measurements from a small group of PV systems in the target area, and this information is not always available for rooftop PV.

To overcome the shortcomings of the approaches mentioned above, data-driven methods utilize high-resolution data that are correlated with solar energy from a variety of data resources to estimate solar energy. The power data come from Micro Phasor Measurement Units (\(\mu \) PMUs), Distribution Supervisory Control and Data Acquisition (DSCADA), and smart meters. Furthermore, the meteorological data come from National Centres for Environmental Information (NCEI) (US) [17] or the Climatological Observers Link (COL) (UK) [18]. In addition, satellite irradiance variables that influence the PV generation, e.g., Global Horizontal Irradiance (GHI), Direct Normal Irradiance (DNI), and Diffuse Horizontal Irradiance (DHI), are also included in the literature. The satellite data come from The National Solar Radiation Database (NSRDB) (US) [25] or RE Data Explorer (Central Asia) [26]. As supervised learning approaches, the data-driven methods require a training model with historical netload data and applying the trained model to the real-time netload. Depending on the types of algorithms, the data-driven methods are divided into machine learning-based methods and deep learning-based methods further. In turns of the machine learning approaches, both Gradient Boosting Regression Tree (GBRT), K-Nearest Neighbours (KNN), Random Forest (RF), Support Vector Machine (SVM) and Hidden Markov Model Regression (HMMR). In [19], the authors develop an SVM model with a sliding window. An OpenPMU sensor is installed to measure a 3.5‐kW peak (kWp) PV system and the net demand with a sampling rate of 50 Hz. This method treats energy disaggregation as a classification problem by finding the boundary between the demand load and the PV generation. Based on the results in [19], KNN and RF regression models are proposed by combining Principal Component Analysis (PCA) techniques [20]. PCA is a technique for reducing the dimensionality of input features while minimizing information loss. The authors utilize KNN, an instance-based learning method, to compute the mean of the closest neighbours and to make an estimation of a new sample. Meanwhile, the RF regression model which consists of many decision trees is employed to make predictions by taking the average or mean of the output from a few trees. The authors in [21] integrate a physical PV model with a statistical model for estimating electric load, and an HMMR is employed to predict demand load under different energy consumption states. In [22], Wang et al. proposed a hybrid method that combines a model-based method and data-driven load/PV forecasting techniques. First, the PV generation and demand load are decoupled, and each component is forecasted individually via a neural network and GBRT. However, the data set utilized in this work is synthetic which adds the demand load and the PV generation data. In reality, the consumption pattern will alter as the consumers tend to save energy by shifting the appliance usage period to the duration when PV generates more electricity. As a result, such a synthetic data set cannot reflect the real net load with PV penetrated. In summary, although machine learning-based approaches have simpler model structures and lower computation complexity, these models have limited ability in capturing nonlinear correlations between the input features and the outputs.

As for deep learning-based methods, both the naive neural network model Multilayer Perceptron (MLP) and advanced neural networks such as the Convolutional Neural Network (CNN) and the Recurrent Neural Network (RNN) are covered in the literature, see Table 2. A Multilayer Perceptron (MLP) neural network was proposed in [23], and measurement data from various sources were fed into the network to implement disaggregation. The results show that the hybrid model achieves better performance than models that utilize only one data source. A One-dimensional (1D) CNN model is employed to detect a PV output pattern in [24], this work treats the PV disaggregation as a classification problem to classify the pattern with/without PV generations. However, it is noticed that the PV output changes along with the weather conditions (sunny, rainy, or cloudy). Hence, the PV disaggregation task is not equal to a simple binary classification problem. Long–Short-Term Memory (LSTM), an advanced RNN model, is developed by the authors in [25], the model takes both active/reactive power of the net load of the analysed node as well as the consumption of the neighbouring nodes to improve the prediction accuracy. The knowledge gaps in the existing methods discussed above can be summarized as follows.

-

1.

The CNN-based PV disaggregation model has limited ability in adapting to long temporal dependencies, while the LSTM-based model unable to extract local features. A new PV disaggregation model is expected to be designed to take advantage of both the CNN and LSTM models.

-

2.

Existing models are not constructed for the net load with a certain PV penetration rate, a more robust model is expected to self-adopting to the net loads with different penetration rates.

-

3.

The transferability of the PV disaggregation model is not well discussed in the literature, since the supervised learning approaches require historical PV generation data for training, such data is not always available for the network operators.

Contributions

To overcome the drawbacks of existing approaches, a solar energy disaggregation system that enables both online and offline modes is proposed in this paper. Instead of installing measurement devices at each house, the proposed method only utilizes measurements from one smart metre installed in the feeder or substation to estimate the solar energy generation in the entire area. Other relevant variables, such as meteorological, irradiance, and temporal data, are also collected as the inputs of the disaggregation system. The main novelties of this paper are as follows:

-

1.

A CNN–Bidirectional LSTM (BiLSTM)-based solar energy disaggregation system that enables online and offline modes are established. Instead of installing a meter for each rooftop PV, the proposed system can decouple the PV generation and real demand load of a geographical area by installing only a feeder/substation-level smart meter.

-

2.

Compared to traditional bidirectional LSTM that can only enable offline learning, and online BiLSTM algorithm that uses a rolling window is adopted.

-

3.

A case study demonstrates that the proposed disaggregation system achieves high accuracy under different solar energy penetration rates and different feeder models.

-

4.

A transductive transfer learning approach that utilizes synthetic data to evaluate real-time generation at other substations or feeders is established.

Organization of the paper

The resulting paper is organized as follows. The data sets and features related to solar energy generation are presented in “Data description”, In “Solar energy disaggregation system”, the PV energy disaggregation model is proposed. The case studies are presented in “Results and discussion”. The conclusion and final discussion are drawn in the last section.

Data description

Feeder-level measurement

In this paper, feeder models R1-12.47-4, R2-25.00-1, and R4-25.00-1 provided by GridLAB-D are employed as the model for the case study [33], see Table 3. The feeder types are classified depending on the residential description, ranging from light rural to moderate urban (with apparent power from 948 to 17,021 kW). The feeder level measurement is available from physical aggregation equipment, such as DSCADA, smart feeder meter. In this chapter, active power \({P}_{grid}\), reactive power \({Q}_{grid}\) are chosen as the grid measurement variables. The duration of peak PV generation (10 a.m.–3 p.m.) and peak load is different (7–10 a.m., and 5–10 p.m.).

Load and PV data set

The consumer-level smart meter data is employed to construct the feeder model. In this chapter, two data sets are used for model training and testing, which are Dataport and System Advisor Model (SAM) simulation data:

Data Set 1 (Dataport)

Dataport [6] is used as the data set to train the proposed model. The household-level measurements are added together to construct a synthetic feeder model. In this chapter, a Dataport data set with an interval of 15 min is employed, 75 houses are aggregated to build a feeder with a capacity of 100 kW during Jan 2018 and Dec 2018 in Austin, Texas, US (Fig. 3). The PV penetration rate of the feeder is controlled by limiting the percentages of houses with PV installed.

Data Set 2 (SAM simulation data)

For areas where historical PV outputs are not available, a synthetic data generation approach introduced in [22] can generate training data. SAM [30] is a techno-economic software developed by The National Renewable Energy Laboratory (NREL). The software can simulate the distribution network with the integration of the renewable energy system given the geographical location (longitude and latitude) and historical weather data set. The approach can generate the netload of the distribution network with detailed PV and combines the synthetic PV outputs with historical demand load data to simulate the feeder with solar energy penetrated.

As a supervised learning method, the proposed model requires both the netload (input vector) and PV generation (label) under the feeder are required at the training stage. The data set is split into a training data set (90%) and a testing data set (10%).

Meteorological data set

The 1-hourly meteorological data set comes from National Centres for Environmental Information (NCEI) (US) [34] given specific locations and durations are adopted. The variables include temperature T, humidity \(U\), weather conditions (e.g., sunny, rainy, snowy, and cloudy) \(W\), cloud cover rate \(D\), surface albedo, pressure, wind speed, etc. Figure 4 shows the Pearson correlation coefficients of the solar energy generation and relevant numerical variables. When the coefficient is greater than 0.3, a positive correlation exists between the two variables. From the figure, it is observed that among all variables, the temperature, GHI, DNI, DHI, and cloud cover rate have the greatest impact on the energy generated by the PV panel.

Figure 5 compares the average PV output under different weather conditions. It is observed that the output power of the PV panels reaches a maximum during clear days, and less power is produced during bad weather conditions, such as rainy and snowy conditions. Hence, the weather condition is also a vital variable in this case.

a PV output under different weather conditions; b probability density distributions under different weather conditions (Data source: Pecan Street Dataport [6])

Satellite-driven irradiance data set

The 1-hourly irradiance measurements at the same location are obtained from the National Climatic Data Center (NCDC) [35]. The satellite-driven data include GHI, DNI, DHI, latitude, longitude, etc.

GHI

The total amount of shortwave radiation received from above by a surface horizontal to the ground:

DNI

Amount of solar radiation received per unit area by a surface that is always held perpendicular (or normal) to the rays that come in a straight line from the sun’s direction at its current position in the sky.

DHI

The amount of radiation received per unit area by a surface (not subject to any shade or shadow) that does not arrive on a direct path from the sun but has been scattered by molecules and particles in the atmosphere and comes equally from all directions.

Figure 6 shows the heatmap of GHI and PV output throughout the entire year. From the figure, it is found that the PV output is strongly correlated to the GHI value. The duration of the peak values of GHI/PV output almost overlaps. The explanation of such a correlation between the solar irradiance and the module’s solar power generation \({P}_{t}\) and the solar irradiance incident on the module \({I}_{PV,t}\) is presented in Eqs. (3) and (4):

where \({T}_{PV,t}\) is the temperature of the solar module, \({T}_{a,t}\) is the ambient air temperature, \({N}_{\mathrm{OCT}}\) is the nominal operating cell temperature, and \(48^\circ{\rm C} \) is selected as a typical value. In addition, \({I}_{PV,t}\) sum of GHI, DNI, and DHI:

where \({\tau }_{b,t}\) and \({\rho }_{t}\) indicates the atmospheric transparent coefficient and surface albedo, α and A are the elevation and azimuth of the sun; and β and γ denote the tilt angle and azimuth of the solar panel.

Heatmap of a GHI/b PV output throughout the entire year (Data source: NCDC [35])

Temporal-related features

The temporal variables include the number of hours in a day \(H\), the month of the year \(M\), and the quarter of the year \(R\). Examples of average PV outputs and probability density distributions during different months are presented in Fig. 7. It is observed that both the month and the quarter of the year influence PV output. Normally, the maximum output throughout the year appears between June and August.

a Bar chart of PV outputs in different months, b probability density distributions during different months (Data source: Pecan Street Dataport [6])

Data preparation

The data collected from various resources should be processed before sending it to the solar energy decoupling model. The data preparation process includes data cleaning, data synchronization, and one-hot encoding.

Data cleaning

The purpose of data cleaning is to generate clean and structured data. The original data contains high-frequency noise, missing values, wrong labels, and duplicates. The missing values are filled by the neighbouring values.

Data synchronization

It should be noticed that the different data sets measured with various sampling rates (15 min for the load, 1 h for weather and irradiance data), so the data from all data sets should be synchronized. In this work, all data is aligned using the timestamps from each source and resamples the interval of the data to 15 min.

One-hot encoding

Before feeding the data to the DNN model, all categorical variables should be converted to numerical forms via one-hot encoding. A new binary variable is used to represent the original variable [36, 37]. In this work, the categorical variable matrix \({{\varvec{C}}}_{{\varvec{t}}}\) contains:

By implementing one-hot encoding, the variables are transformed to

where \({f}^{O}\) is the one-hot encoding function, and \({{\varvec{C}}}_{{\varvec{t}}}^{{\varvec{O}}}\) is the one-hot encoding matrix. Hence, the overall input matrix D is shown as follows:

where \({{\varvec{N}}}_{{\varvec{t}}}\) is the numerical variable.

Solar energy disaggregation system

Overall system

The proposed system is assumed to have the authority to access real-time grid measurements by AMI/SCADA. In addition, the system can also obtain measurements from weather stations and satellites. The target of the system is to separate the net load into the demand load and solar energy generation. The proposed system enables two operating modes: online and offline modes, as shown in Fig. 8. The online mode can provide solar energy disaggregation on a real-time basis, and the offline mode implements algorithms using a historical database.

Offline mode

Real-time measurements are not always available to power utilities; therefore, the offline mode is adopted to analyze the load components based on historical data. Historical data are generated from DSCADA and the relevant data centre and stored in the database. In the offline mode, the input sequence is provided by the database, and conventional BiLSTM is adopted, since the entire input sequence is available. The offline mode does not require real-time communication with DSCADA or weather stations but decouples the historical grid measurements into solar energy and demand load locally. The offline mode can help the power utility construct the local PV and load model, contributing to the demand-side management effort. Since the historical PV generation data is not always available for the energy utility, there are several approaches to obtain such label training data: (1) utilize the public data set at the research location, such as Dataport [125] for the distribution network in Texas, US. Such a public data set is anonymized and under the permission of consumers. (2) Utilize software simulation software to generate synthetic netload along with PV generation data. (3) Utilize the transfer learning method. (4) Select a small group of volunteers under the distribution network, and the energy utility collects the smart meter data and the PV generations inside the volunteers’ houses under their permission. Since the trials already are under the consent of the volunteers, such trials will not cause privacy issues.

Online mode

The online mode part of the system consists of three components: real-time measurement, a cloud server, and power utility. In the online mode, the PV disaggregation system can access real-time measurements from both distribution feeders and weather stations/satellite systems. The grid measurements include active power, reactive power, voltage, current, etc. The weather-related measurements include temperature, humidity, cloud cover, etc. After data are gathered from the sensors/stations, the data are pre-processed. The processed data are then fed into the core part of the system, the cloud platform, which implements deep learning algorithms (introduced in the next subsection) to decouple the original net load into PV generation and the real demand load. Conventional BiLSTM cannot support online learning due to the delay problem. This is because, during the online mode, we assume the length of the input sequence is unknown and it is impossible to learn the input sequence from both forward and backward directions. Hence, an online BiLSTM algorithm that was originally used for online speech recognition is adopted [38]. A sliding window moves over the real-time input sequence, and then the BiLSTM is implemented for each sliding window. In this case, the model can learn bidirectionally, and the time delay is reduced to \({T}_{w}\). The \({T}_{w}\) sliding window moves at time step \({T}_{s}\). Therefore, the original online sequence can be split into a few chunks, and the \(i\)th window is

and a maximum number of \({T}_{w}/{T}_{s}\) windows overlap at time stamp \(t\). The final output at time stamp \(t\) is evaluated by averaging the output of overlapping windows at \(t\). Note that the online system results in a delay of \({T}_{w}\), since the system should lookup the timestamp \({T}_{w}-1\) in the future to determine the output at time stamp \(t\).

Hybrid CNN bidirectional LSTM neural network

1D CNN–BiLSTM combines BiLSTM RNN with a one-dimensional CNN and provides a deeper learning ability for regression tasks with time-series data [37]. 1D CNN model is efficient in extracting the important features from the 1D time-series data and able to filter out the noise of the input data. However, the 1D CNN model only focuses on the local trend and has limited ability in adapting to long temporal dependencies [39]. In contrast, the LSTM model achieves superior performance in tracking long-term dependencies from the input sequence, but the ability to extract local features is limited. Hence, a model that combines the 1D CNN model and LSTM model is expected to take advantage of these two deep learning techniques and achieve higher prediction accuracy [40].

1D CNN

A convolutional layer processes data only for its receptive fields [41] (the first convolutional layer can learn small local patterns, such as edges, colours, gradient orientation, etc., and the next convolutional layer can assemble these low-level features into larger level features, such as eyes, noses, ears, etc., and so on). After the data passes each convolutional layer, the original data is abstracted to a feature map with the shape of (samples, feature map height, feature map width, and the number of filters). The 1D CNN likes the 2D/3D CNN but with a different input structure. In a 1D CNN, the kernel moves along one dimension, and the shapes of the input and output data are both two dimensions [42]. A 1D CNN is extremely suitable for processing 1D data (such as time-series data, text, and audio). The kernel size represents the width of the kernel, and the height of the kernel is the amount of data at each step. In a 1D CNN layer, the output of the layer \(z(n)\) is computed by convolving the signal \(x(n)\) with the convolution kernel \(w(n)\) of size \(l\) [43]:

where the number of convolution kernels of the 1D CNN layer is 16 with a ReLU activation function.

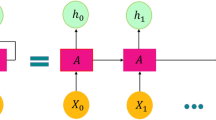

BiLSTM

The structure of the LSTM block is illustrated, since LSTM is the basic component of the BiLSTM model. The structure of a typical LSTM block is shown in Fig. 9, and the cell state \({c}_{t}\) is different from the output \({h}_{t}\). As demonstrated, the components inside the block include an input node \({g}_{t}\), input gate \({i}_{t}\), internal gate \({c}_{t}\), forget gate \({f}_{t}\), output gate \({o}_{t}\), and output \({h}_{t}\). The nature of gate is a sigmoid unit (output range between [0,1]). It can recognize and pass important information and block unimportant information. Once both the input and output gates are closed, the flow will be blocked inside the memory cell and will not affect the following time steps until the gate reopens. As indicated in the state-space Eq. (12), \({g}_{t}\), \({i}_{t}\), \({f}_{t}\) and \({o}_{t}\) are functions of the data in the current time step input \({x}_{t}\) and the output of the previous time step \({h}_{t-1}\). Furthermore, \({b}_{i},{b}_{f},{\mathrm{and }b}_{o}\) are bias parameters of nodes. After the values of gates have been determined, the candidate value \({\widetilde{c}}_{t}\) is calculated and compared with the previous cell state \({c}_{t-1}\). With the gate status \({i}_{t}\) and \({f}_{t}\), the memory cell determines whether to update its value. Finally, by regulating the current cell state \({c}_{t}\) with the tanh activation function \(\phi \) and multiplying by the output gate, the output value is calculated:

where \(\odot \) represents elementwise multiplication; \({W}_{i}\), \({W}_{f}\), \({W}_{o}\), \({W}_{c}\) are the weight matrices; and \({b}_{i},{b}_{f},{b}_{o},{b}_{c}\) are the bias parameters. In a BiLSTM structure (Fig. 9b), given a minibatch input \({{X}^{^{\prime}}}_{t}\), the forward and backward hidden states at time step \(t\), i.e., \({\overrightarrow{H}}_{t}\) and \({\overleftarrow{H}}_{t}\) can be expressed as

where \({W}_{xh}^{(f)},{W}_{xh}^{(b)}\), \({W}_{hh}^{(f)},{W}_{hh}^{(b)}\) represent the weights of the model; and \({b}_{h}^{(f)},{b}_{h}^{(b)}\) are the biases of the model. Then, by integrating the forward and backward hidden states, the hidden state is obtained as \({H}_{t}.\) Finally, \({H}_{t}\) is fed to the output layer to compute the output of the BiLSTM block \({O}_{t}\):

where \({W}_{hq}\) is the weight; and \({b}_{q}\) is the bias of the output layer.

1D CNN BiLSTM model

The structure of the 1D CNN–BiLSTM network utilized in this paper is presented in Fig. 10; the network contains six layers, which are one input layer, two 1D convolutional layers, two BiLSTM layers, two fully connected layers, and one output layer:

-

1.

Input Layer: The network’s input is the multivariate data set which contains the features from four data sets as illustrated in “Data Description”.

-

2.

1D Convolutional Layer: The input layer is followed by two 1D convolutional layers with 64 and 32 filters, respectively. The kennel size of the first 1D convolutional layer is 5 with strides = 1 and the padding type is set as “causal”; the kennel size of the second 1D convolutional layer is 3 with strides = 1 and the padding type is set as “causal”. In addition, each convolutional layer is linked with a 1D max-pooling layer. The function of the max-pooling is to calculate the maximum value in each patch of each feature map. In this model, a 1D max-pooling layer with kernel size 2 and stride 1 was constructed.

-

3.

BiLSTM Layer: Two BiLSTM layers with 256 units are stacked to enable the long-term temporal dependencies on the feature extracted by the convolutional layer. Each BiLSTM layer contains two LSTM layers of opposite directions to the same output. This structure enables the output layer to learn both forward and backward information.

-

4.

Flatten Layer: After BiLSTM layers, a flatten layer is employed to flatten the 3 × 512 matrix into a vector with a size of 1536. The flatten layer is normally used in the transition from LSTM or convolutional layer to make the multi-dimensional input one-dimensional.

-

5.

Fully Connected Layer: The flatten layer is then connected with two fully connected layers with 64 and 16 nodes, respectively. To avoid overfitting problems during the training process, two dropout layers with rates 0.2 and 0.1 are stacked after each fully connected layer.

-

6.

Output Layer: The final fully connected layer is connected to the output layer with two nodes which estimate the PV generation as well as the demand load, respectively.

Model complexity

The model complexity (O) is determined by computing the parameters of each layer. Referring to [44], for the 1D CNN layer, the computation complexity for each sample is computed by the following equation:

where \({K}_{c}\) is the number of the convolutional layers, \({F}_{c,k}\) indicates the number of filters in the \(k\)th layer, \({L}_{c,k}\) is the kernel length, and \({I}_{c,k}\) denotes the number of input channels. As for the BiLSTM layer, the computation complexity is

where \({K}_{L}\) is the number of the BiLSTM layers, \({I}_{B,k}\) is the number of input channels, \({M}_{B,k}\) denotes the number of LSTM units. In turns of the FC layer, the computation complexity is computed as

where \({K}_{L}\) is the number of FC layers, \({{N}^{\mathrm{in}}}_{F,k}\) is the number of the input neurons, and \({{N}^{out}}_{F,k}\) denotes the number of the input neurons. Hence, the overall computation complexity of the 1D CNN–BiLSTM model is

In this work, \({K}_{C}\) equals to 2, \({K}_{B}\) is chosen as 1, and \({K}_{F}\) is 2. Based on the computation complexity in Eq. (20), the model parameters of the proposed 1D CNN–BiLSTM model are summarized in Table 4, both the type of hyperparameter, shape, and number of parameters are concluded. The number of the total parameters of the proposed model is 2,285,906, and 12.5 MB (32-bit floats) is required to store all parameters. The solar energy disaggregation will be implemented by the Distribution Network Operator (DNO), which utilizes Energy Management Systems (EMS) to operate the proposed solar energy disaggregation model [45]. EMS is equipped with a high computational machine that enables various machine learning/deep learning-based applications.

Results and discussion

Software and hardware

The simulation and computations are conducted on a Dell laptop equipped with a Core i7–7700HQ CPU, an NVIDIA GTX 1060 GPU, and 8 GB RAM. The deep learning algorithm runs on Python 3.6, and the TensorFlow framework is adopted to train the DNN model.

Benchmark models

From Table 2, following models in the literature are employed as the benchmark models in this work: the conventional model-based method proposed by [27], upscaling method introduced in [16], machine learning-based methods, including KNN + PCA [20], SVM + PCA [19], RF + PCA [20], GBRT [22], deep learning-based methods, i.e., MLP [23], CNN [24], LSTM [25], and GRU [25].

Evaluation criteria

To evaluate the performance of the proposed disaggregation algorithms, three evaluation metrics, which are the root mean squared error (RMSE), normalized RMSE (nRMSE), and R-squared (R2), are adopted. The detailed metrics are as follows:

-

(1)

Root Mean Squared Error (RMSE):

$$\mathrm{RMSE}=\sqrt{\frac{\sum_{t=1}^{T}({\widehat{P}}_{PV,t}-{P}_{PV,t})}{T}}.$$(21) -

(2)

Normalized RMSE (nRMSE):

$$n\mathrm{RMSE}=\frac{\mathrm{RMSE}}{{\overline{P} }_{PV}}.$$(22) -

(3)

Mean Absolute Error (MAE):

$$\mathrm{MAE}=\frac{\sum_{i=1}^{N} |{y}_{i}-{\widehat{y}}_{i}|}{N}.$$(23) -

(4)

R-Squared (\({R}^{2}\)):

$${R}^{2}=\frac{\sum_{i=N}^{N}({t}_{i}-\overline{t })({y}_{i}-\overline{y })}{\sqrt{\sum_{i=1}^{N}{({t}_{i}-\overline{t })}^{2}\sum_{i=1}^{N}{({y}_{i}-\overline{y })}^{2}}}$$(24)

Case study I: performance under different PV penetration rates

In this case study, the proposed 1D CNN–BiLSTM solar energy disaggregation model is compared to state-of-the-art solar energy disaggregation models. Three feeder models (the light rural feeder model with \({\overline{P} }_{L}=\) 948 kW, heavy suburban feeder model with \({\overline{P} }_{L}=\) 5335 kW, and the moderate urban feeder model with \({\overline{P} }_{L}=\) 17,021 kW) with different PV penetration rates (5%, 10%, 20%, 30%, 40%, 50%) described in Table 3 are studied. Regarding the net load, the PV penetration rate is defined as the percentage of PV output compared to the peak demand load:

The accuracies and the required Computation time of the estimated solar energy by different models are shown in Tables 5, 6 and 7. In the table, all models can be divided into three groups: model-based method, upscaling method, and data-driven methods. Whist data-driven methods are classified into machine learning-based models and deep learning-based models further. From the tables, it is observed that the superiority of the deep learning-based models over other models in terms of estimation error and accuracy. In contrast, the deep learning models require much longer Computation time and larger memory space. From Tables 5, 6 and 7, the model-based model, which constructs the mathematical models referring to the configuration of PV panels and meteorological variables, shows a lower accuracy among all models. In addition, the machine learning methods including KNN regression, SVM regression, RF regression, as well as GBRT achieve better estimation results than model-based and upscaling methods. However, conventional machine learning models cannot capture high-dimensional nonlinear patterns from the input netload, which limits the accuracy of the models. When comparing the five deep learning-based models, the naive model, MLP, performs the worst in all cases, this result is due to the MLP model cannot capture the long-term temporal dependencies. In contrast, the memory cells inside the LSTM/GRU models make the models computationally more efficient. In addition, the 1D CNN model achieves similar accuracy as the LSTM/GRU models, the 1D convolutional layers help the model extract the complex pattern from the 1D time-series data. The proposed 1D CNN–BiLSTM takes advantage of the LSTM and 1D CNN models to extract the local pattern and capture the long-term dependencies simultaneously. From Tables 5, 6 and 7, it is observed that the proposed 1D CNN–BiLSTM performs the best among all models for all cases. For instance, the 1D CNN–BiLSTM improves the MAE and nRMSE of PV estimation by 55.89% and 53.74% compared to the conventional model-based model when α = 5% and \({\overline{P} }_{L}=\) 948 kW.

The estimation results of the PV generation and the demand load are visualized in Figs. 11 and 12, these two figures show the cases of \({\overline{P} }_{L}=\) 948 kW, α = 20% and \({\overline{P} }_{L}=\) 17,021 kW and α = 5% cases, respectively. In addition, the results estimated by GBRT, LSTM, and MLP are shown in the same figure to make a comparison to the proposed 1D CNN–BiLSTM model. In the top plot of Fig. 11, the original net load curve is presented. Compared to the net load without PV systems, a dramatic drop is observed between 8:00 a.m. and 5:00 p.m., which is a typical characteristic to identify PV generation. The middle plot shows the estimations of the PV outputs performed by the four algorithms, and the bottom plot shows the decoupled demand load curve. The figure shows that although the PV outputs inferred by all algorithms closely match the ground truth curve, the proposed CNN–BiLSTM presents the best estimation. In this case, the \({R}^{2}\) for CNN–BiLSTM is approximately 0.89, and the nRMSE is 1.23%. In turns of Fig. 12, a case with a low PV penetration rate (5%), the figure shows that although the benchmark models (GBRT, LSTM and MLP) can estimate the PV generation with high accuracy during the time with abundant sunlight, e.g., sunny days, the accuracy is reduced in bad weather conditions, such as rainy and cloudy days. However, the proposed model can make a great estimation in almost all-weather conditions, which demonstrates the improvement of the proposed model compared to the existing works.

Several estimation examples under different weather conditions are presented in Fig. 13. There are three weather conditions: clear sky, overcast, and rain. Three typical days are given for each weather condition category. From the figure, it is found that all four algorithms track the ground truth curve very well on clear sky days. However, on rainy and overcast days, since the sun is covered intermittently, these algorithms cannot always catch up with the actual PV outputs. Poor performance is observed in the case of the MLP algorithm as a huge error is detected between the actual curve and the estimated curve. The proposed CNN–BiLSTM, which is the orange solid line in Fig. 13, can track the ground truth PV outputs precisely, even during rain and overcast days.

Case study II: transductive transfer learning

In the previous case study, the training and testing data used are consistently from the same data set. However, it is difficult to obtain labelled data at target locations. To overcome this limitation, which forms a major hurdle for real-world industrial applications, a transductive transfer learning approach is proposed. The definition of transfer learning is as follows:

Definition 1 (Transfer Learning)

[46]. Given a source domain \({\mathcal{D}}_{s}\) and learning task \({\mathcal{T}}_{s}\) and a target domain \({\mathcal{D}}_{T}\) and learning task \({\mathcal{T}}_{T}\), the purpose of transfer learning is to help improve the performance of the target function \({\mathcal{F}}_{T}\) in \({\mathcal{D}}_{T} \)using the knowledge in \({\mathcal{D}}_{s}\) and \({\mathcal{T}}_{s}\), where \({\mathcal{D}}_{s}\ne {\mathcal{D}}_{T}\) or \({\mathcal{T}}_{s}\ne {\mathcal{T}}_{T}\).

Furthermore, in a transductive transfer learning task, a source learning task \({\mathcal{T}}_{s}\) and target learning task \({\mathcal{T}}_{T}\) are the same (to implement the decoupling task), but the source and target domains may be different (\({\mathcal{T}}_{s}\) is a synthetic data set, and \({\mathcal{T}}_{T}\) is real-time data in this case) [47]. In this paper, the transductive transfer learning framework can be split into four steps (see Fig. 14):

Step 1—Synthetic database generation

Referring to the local conditions (such as the load capacity, geographical information, the portion of PV tilt angles, meteorological data, etc.), simulation software is adopted to generate synthetic solar energy and demand load data sets. Hence, the data set is labelled and can be used for supervised learning.

Step 2—Data analysis

As introduced in “Data Description”, the generated data set is pre-processed to provide normalized, featured extracted data.

Step 3—Model generation

With processed data, the CNN–BiLSTM neural network model is trained, and the model parameters are stored in a cloud server.

Step 4—Transfer learning

The unlabelled real-time data from the target area are then sent to the trained model, and the cloud server decouples the net load into solar energy and the demand load.

In the simulation, two cases, Austin, Texas and New York are studied to investigate the proposed transfer learning method; and a detailed description of the cases is shown in Table 8. The SAM simulation software [30] is adopted to generate a synthetic residential solar energy data set according to the given relevant information. The data provided by Dataport are adopted as real-time measurements. The performance of the disaggregation system in transfer learning is presented in Fig. 15 and Table 9. The CNN–BiLSTM model is pre-trained via the synthetic data set and then applied to the aggregated real-measured demand load. In Case 1, where the target area selected is Austin, Texas, transfer learning achieves almost equal performance. As for Case 2, where the target area selected is in New York, the performance is slightly worse than the model that is trained via the real measured data set. This is because there is a minor geographical information error between the location of the synthetic data and real measured sites. The two cases show that the proposed transfer learning method is easy to implement anywhere else and that desirable accuracy can be achieved (Fig. 15).

Conclusions

This paper develops a deep learning-based solar energy disaggregation system to decouple the solar energy generated by rooftop PV systems and the real demand load from the net load measured by a feeder-level smart meter. The system collects various information from different resources, including AMI data, meteorological data, satellite-driven irradiance, and temporal information, as inputs. A 1D CNN bidirectional LSTM algorithm is developed to estimate the solar energy generated in the target area. Compared to the benchmark algorithms (model-based method, upscaling model, machine learning-based models and deep learning-based methods), the precision and effectiveness of the proposed method are verified via several case studies. Both the influence of the PV penetration rate and feeder load capacity on the proposed system are fully investigated. The results show that the proposed method can decouple solar energy with a low error, even at a low penetration rate (5%). Moreover, the method is robust, since it can be adapted to different feeder models, and the model can be trained via a synthetic data set and still achieves desirable performance in real-world measurement. These characteristics enable the proposed system to be widely adopted and practical in implementation. In future work, this study can be extended to consider energy storage systems and other renewable energy. In addition, an unsupervised learning algorithm is developed to simplify the training process.

References

Irena GEC (2020) Renewable capacity statistics 2020. International renewable energy agency

DrIncecco M, Squartini S and Zhong M (2019) Transfer learning for non-intrusive load monitoring. IEEE Trans Smart Grid 1–1 (08/28)

Wytock M and Kolter J (2014) Contextually supervised source separation with application to energy disaggregation. In Proceedings of the AAAI Conference on Artificial Intelligence

"SMART METERING IMPLEMENTATION PROGRAMME: review of the data access and privacy framework," E. I. S. Department for Business, UK, ed., 2018

Zhang XY, Kuenzel S, Córdoba-Pachón J-R and Watkins C (2020) Privacy-functionality trade-off: a privacy-preserving multi-channel smart metering system. Energies 13

Street P (2015) Dataport: the world's largest energy data resource. Pecan Street Inc

Angelis G-F, Timplalexis C, Krinidis S, Ioannidis D and Tzovaras D (2022) NILM applications: literature review of learning approaches, recent developments and challenges. Energy Build 111951

Yang H-T, Huang C-M, Huang Y-C, Pai Y-S (2014) A weather-based hybrid method for 1-day ahead hourly forecasting of PV power output. IEEE Trans Sustain Energy 5(3):917–926

Vrettos E, Kara EC, Stewart EM, Roberts C (2019) Estimating PV power from aggregate power measurements within the distribution grid. J Renew Sustain Energy 11(2):023707

Cheung CM, Zhong W, Xiong C, Srivastava A, Kannan R and Prasanna VK (2018) Behind-the-meter solar generation disaggregation using consumer mixture models. In: 2018 IEEE International Conference on Communications, Control, and Computing Technologies for Smart Grids (SmartGridComm), pp 1–6

Sossan F, Nespoli L, Medici V and Paolone M (2017) Unsupervised disaggregation of photovoltaic production from composite power flow measurements of heterogeneous prosumers. IEEE Trans Indust Inform 06/15

Shaker H, Zareipour H, Wood D (2016) Estimating power generation of invisible solar sites using publicly available data. IEEE Trans Smart Grid 7(5):2456–2465

Shaker H, Zareipour H, Wood D (2015) A data-driven approach for estimating the power generation of invisible solar sites. IEEE Trans Smart Grid 7(5):2466–2476

Bright J, Killinger S, Lingfors D and Engerer N (2018) Improved satellite-derived PV power nowcasting using real-time power data from reference PV systems. Solar Energy 168:118–139 (07/01)

Pierro M, De Felice M, Maggioni E, Moser D, Perotto A, Spada F, Cornaro C (2017) Data-driven upscaling methods for regional photovoltaic power estimation and forecast using satellite and numerical weather prediction data. Sol Energy 158:1026–1038

Zhang X-Y, Watkins C and Kuenzel S (2022) Multi-quantile recurrent neural network for feeder-level probabilistic energy disaggregation considering roof-top solar energy. Eng Appl Artif Intell 110:104707 (2022/04/01/)

"NOAA National Centers for Environmental Information (NCEI)," N. S. US Department of Commerce and N. C. f. E. I. Information Service, eds.

Burt S (2020) The climatological observers link (COL) at 50. Weather 75(5):137–144

Jaramillo AFM, Laverty DM, Del Rincón JM, Brogan P and Morrow DJ (2020) Non-intrusive load monitoring algorithm for PV identification in the residential sector. In: 2020 31st Irish Signals and Systems Conference (ISSC), pp 1–6

Moreno Jaramillo AF, Lopez‐Lorente J, Laverty DM, Martinez‐del‐Rincon J, Morrow DJ and Foley AM (2022) Effective identification of distributed energy resources using smart meter net‐demand data. IET Smart Grid 5(2):120–135

Kabir F, Yu N, Yao W, Yang R and Zhang Y (2019) Estimation of behind-the-meter solar generation by integrating physical with statistical models. In: 2019 IEEE International Conference on Communications, Control, and Computing Technologies for Smart Grids (SmartGridComm), pp 1–6

Wang Y, Zhang N, Chen Q, Kirschen DS, Li P, Xia Q (2018) Data-driven probabilistic net load forecasting with high penetration of behind-the-meter PV. IEEE Trans Power Syst 33(3):3255–3264

Zhang XY, Kuenzel S and Watkins C (2020) Feeder-level deep learning-based photovoltaic penetration estimation scheme. In: 2020 12th IEEE PES Asia-Pacific Power and Energy Engineering Conference (APPEEC), pp 1–5

Vejdan S, Mason K, Grijalva S (2021) Detecting behind-the-meter PV installation using convolutional neural networks. IEEE Texas Power Energy Conf (TPEC) 2021:1–6

Cheung CM, Kuppannagari SR, Kannan R and Prasanna VK (2019) Towards improved real-time observability of behind-meter photovoltaic systems: a data-driven approach. In: Proceedings of the Tenth ACM International Conference on Future Energy Systems, pp 447–455

P. S. Incorporation (2015) “Dataport,” Pecan Street Inc., Austin, TX, 11:2018

Kara EC, Roberts CM, Tabone M, Alvarez L, Callaway DS, Stewart EM (2018) Disaggregating solar generation from feeder-level measurements. Sustain Energy Grids Netw 13:112–121

Vrettos E, Kara EC, Stewart EM and Roberts C (2019) Estimating PV power from aggregate power measurements within the distribution grid. J Renew Sustain Energy 11(2):023707

I. N. England. ISO New England Zonal information. https://www.iso-ne.com/isoexpress/web/reports/pricing/-/tree/zone-info.

Blair N, Dobos AP, Freeman J, Neises T, Wagner M, Ferguson T, Gilman P and Janzou S (2014) System advisor model, sam 2014.1. 14: General description, National Renewable Energy Lab.(NREL), Golden, CO (United States)

"Smart* Data Set for Sustainability," 2019.

Saffari M, Khodayar M and Khodayar ME (2022) Deep recurrent extreme learning machine for behind-the-meter photovoltaic disaggregation. Electricity J 35(5):107137 (2022/06/01/)

Schneider KP, Chen Y, Chassin DP, Pratt RG, Engel DW and Thompson SE (2008) Modern grid initiative distribution taxonomy final report. In Pacific Northwest National Lab.(PNNL), Richland, WA (United States)

Oceanic N and Administration A (2016) National Centers for Environmental Information (NCEI). Historical Palmer Drought Indices

Data IS (2001) National climatic data center (NCDC). Asheville

C. Seger, "An investigation of categorical variable encoding techniques in machine learning: binary versus one-hot and feature hashing," 2018.

Géron A (2019) Hands-on machine learning with Scikit-Learn, Keras, and TensorFlow: concepts, tools, and techniques to build intelligent systems: O'Reilly Media

Zeyer A, Schlüter R and Ney H (2016) Towards online-recognition with deep bidirectional LSTM acoustic models. Interspeech 2016

Alhussein M, Aurangzeb K, Haider SI (2020) Hybrid CNN-LSTM model for short-term individual household load forecasting. IEEE Access 8:180544–180557

Kim T-Y, Cho S-B (2019) Predicting residential energy consumption using CNN-LSTM neural networks. Energy 182:72–81

Kim P (2017) Convolutional neural network. In: MATLAB deep learning. Springer, pp 121–147

Albawi S, Mohammed TA, Al-Zawi S (2017) Understanding of a convolutional neural network. Int Conf Eng Technol (ICET) 2017:1–6

Zhao J, Mao X, Chen L (2019) Speech emotion recognition using deep 1D & 2D CNN LSTM networks. Biomed Signal Process Control 47:312–323

Xie J, Fang J, Liu C, Li X (2020) Deep learning-based spectrum sensing in cognitive radio: A CNN-LSTM approach. IEEE Commun Lett 24(10):2196–2200

Meng L, Sanseverino ER, Luna A, Dragicevic T, Vasquez JC and Guerrero JM (2016) Microgrid supervisory controllers and energy management systems: a literature review. Renew Sustain Energy Rev 60:1263–1273 (2016/07/01/)

Pan SJ, Qiang Y (2010) A survey on transfer learning. IEEE Trans Knowl Data Eng 22(10):1345–1359

Arnold A, Nallapati R and Cohen WW (2007) A comparative study of methods for transductive transfer learning. In Workshops Proceedings of the 7th IEEE International Conference on Data Mining (ICDM 2007), October 28–31, 2007, Omaha, Nebraska, USA

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhang, XY., Kuenzel, S., Guo, P. et al. A hybrid data-driven online solar energy disaggregation system from the grid supply point. Complex Intell. Syst. 9, 3695–3716 (2023). https://doi.org/10.1007/s40747-022-00842-2

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40747-022-00842-2

Keywords

- Behind-the-metre solar generation

- Rooftop PV

- Deep neural network

- Transfer learning

- Energy disaggregation

- Data-driven analysis