Abstract

Spatial polarization modulation based on vectorial optical fields has been widely studied for polarization measurement because of its simple system structure. In such a system, the polarization information is encoded in the irradiance image, and polarization measurement can be realized by image processing. Classical image processing methods cannot meet the increasing demands of practical applications because of their poor computational efficiency. To address this issue, this paper proposes a new image processing method that combines the speed of the local Radon transform (LRT) with the precision of error correction (EC). First, the polarization direction of the light is coarsely estimated from the pixels on several circles. Then, the LRT of the input image is computed with the coarsely estimated direction as its center angle. Finally, EC obtains the accurate direction from the quantitative link between the error of the coarse estimation and the correlation between LRTs. Experiments on synthetic and real data demonstrate that, compared with other state-of-the-art methods, the proposed algorithm is more robust and less time-consuming.


1 Introduction

Polarization is an essential property of light, and measuring the polarization state is important in many applications, such as remote sensing [1,2,3], biomedicine [4, 5], skylight polarization navigation [6], fluorescence polarization immunoassay [7], ellipsometry [8,9,10], and seismic acquisition [11].

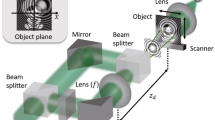

State-of-the-art polarization measurement methods can be grouped into four typical classes: interferometric polarimeters [12, 13], temporally modulated polarimeters [14, 15], division-of-amplitude polarimeters [16, 17], and spatially modulated polarimeters [6, 10, 18,19,20,21,22,23]. The first three classes require a series of measurements with different orthogonal polarization states, and they usually suffer from high computational cost, poor stability, and complex system structures [9]. In contrast, spatially modulated polarimeters are simpler and more efficient: no optical component needs to be rotated, and the polarization direction can be obtained from a single measurement [24]. However, classical spatially modulated polarimetry methods depend heavily on spatial modulation devices, which makes them difficult to deploy in realistic scenarios.

To address this issue, polarimetry methods based on vectorial-optical-field spatial polarization modulation have recently been proposed [6, 18, 21,22,23]. In this strategy, the polarization information is recorded in the modulated intensity pattern of the input light, so that once the pattern is captured by a camera, polarization measurement becomes a problem of analyzing the irradiance image. In [22, 23], a zero-order vortex quarter-wave retarder was used as a space-variant birefringent device to spatially modulate all polarization components, and a normalized least-squares method and a hybrid gradient descent algorithm, respectively, were proposed to calculate the polarization state from the irradiance images. Researchers have found that when the input light is analyzed by an azimuthal (or radial) spatial modulator, the irradiance image has an hourglass-shaped gray distribution; that is, the darkest line of the irradiance image is parallel (or perpendicular) to the polarization direction [21]. Consequently, the polarization direction of the input light can be determined by extracting the darkest line from the image. In [6, 10], the global Radon transform (GRT) was adopted. Gao and Lei [18] also chose GRT to obtain the intensity modulation curve, from which the four Stokes parameters of the input light can be measured. Lei and Liu [21] compared the accuracy and computation time of different image processing algorithms, such as interesting area detection (IAD), local correlation (LC), and GRT. They found that the precision of IAD was low and that the Radon transform was quite sensitive to image noise. LC was more stable and more accurate, but, like IAD and the Radon transform, it was time-consuming.

To measure the polarization direction more robustly and quickly, a novel method is presented in this paper. Motivated by GRT and LC, the method consists of three stages: coarse estimation, local Radon transform (LRT), and error correction (EC). First, the coarse direction is estimated by threshold segmentation. Then, LRT is performed over a local angle range centered on the coarsely estimated direction. Finally, the accurate darkest direction (parallel or perpendicular to the polarization direction) is obtained by EC, which establishes a quantitative link between the error of the coarse estimation and the correlation between LRTs. The advantages of our algorithm are fourfold.

Firstly, the proposed method is robust to noise owing to the gray integral operation in LRT.

Secondly, the utilization of EC makes the proposed method highly precise.

Thirdly, unlike GRT, which uses a small angle interval [6, 10, 18], LRT only needs to be computed over a local angle domain with a large angle interval, which makes it more computationally efficient.

Finally, since most of the processing can be done by looking up tables generated offline, our algorithm is fast enough for real-time tasks.

The outline of this article is as follows. In Sect. 2, the coarse estimation, LRT, and EC are introduced, followed by a flowchart to summarize our method. Section 3 shows several experimental results. Finally, some conclusions are drawn in Sect. 4.

2 Method

It has been verified that when the input light is analyzed by an azimuthal (or radial) spatial modulator, the hourglass-shaped intensity pattern of the modulated light satisfies Malus’s law [21]. In other words, the gray value of the irradiance image, as shown in Fig. 1, is proportional to the square of the sine of the angle between the pixel's azimuth and the darkest direction (equivalently, to the squared cosine of its angle from the brightest direction). The darkest direction, which is parallel (or perpendicular) to the polarization direction, has the minimum radial integral of the image. To capture the darkest direction accurately and quickly, our method includes three stages: coarse estimation, LRT, and EC, which are introduced below.

2.1 Coarse estimation

In our algorithm, the darkest direction is first coarsely estimated by threshold segmentation. To reduce the computational complexity, threshold segmentation is applied only to the pixels on circles with certain radii rather than to all the pixels in the image. Given a set of radii (e.g., \(r_{1} ,r_{2} ,r_{3} , \ldots ,r_{N}\)), the pixels on the corresponding circles are collected. These pixels are then divided into two parts (i.e., a bright area and a dark area) based on a predefined threshold \(T\). The average azimuthal angle of the pixels in the dark area, denoted by \(\theta_{{\text{c}}}\), is treated as the coarse darkest direction, i.e.,

where \(I(r,\theta )\) is the gray value of the pixel with the coordinate \((r,\theta )\).
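The coarse-estimation step can be illustrated with the minimal sketch below, which collects pixels on a few circles, thresholds them, and averages the azimuths of the dark pixels. It is a sketch under stated assumptions, not the authors' implementation: Eq. (1) is not reproduced here, so the averaging rule is an assumption. Because the dark area of an hourglass pattern consists of two opposite lobes (at \(\theta\) and \(\theta + 180^{\circ}\)), a plain arithmetic mean of azimuths in \([0^{\circ}, 360^{\circ})\) can be biased; the sketch therefore swaps in a doubled-angle circular mean. The function name `coarse_direction`, the nearest-neighbour sampling, and the `n_samples` parameter are hypothetical.

```python
import numpy as np


def coarse_direction(img, center, radii, threshold, n_samples=720):
    """Coarse darkest direction in degrees, wrapped to [0, 180).

    Hypothetical sketch: pixels are sampled at n_samples evenly spaced azimuths
    on each circle (nearest-neighbour rounding), azimuth is measured
    counterclockwise from the +x axis with y pointing up, and the azimuths of
    pixels darker than `threshold` are averaged with a doubled-angle circular
    mean so that the two opposite dark lobes reinforce each other.
    """
    cy, cx = center
    phi = np.linspace(0.0, 2.0 * np.pi, n_samples, endpoint=False)
    dark = []
    for r in radii:
        rows = np.rint(cy - r * np.sin(phi)).astype(int)  # y up -> smaller row index
        cols = np.rint(cx + r * np.cos(phi)).astype(int)
        values = img[rows, cols]
        dark.append(phi[values < threshold])  # dark-area pixels on this circle
    dark = np.concatenate(dark)
    if dark.size == 0:
        raise ValueError("no pixel below the threshold")
    # Doubled-angle circular mean: theta and theta + 180 deg map to the same value.
    mean2 = np.arctan2(np.sin(2.0 * dark).sum(), np.cos(2.0 * dark).sum())
    return float(np.rad2deg(mean2 / 2.0) % 180.0)
```

In the experiments of Sect. 3, the radii would run from 300 to 450 pixels in steps of 5 and the threshold would be 0.4.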

2.2 Local Radon transform

In this stage, the Radon transform [25] is used to compute the integral of an image along specified directions. Suppose that \(f\) is a 2-D function; the integral of \(f\) along the radial line \(l(\theta_{i} ) = \left\{ {x,y:x\sin \theta_{i} - y\cos \theta_{i} = 0} \right\}\) is given by

For digital images, Eq. (2) can be discretized as

In Eq. (3), \(I(x,y)\) is the gray value of the pixel with rectangular coordinates \((x,y)\), and \(W(\xi )\), the weight of pixel \((x,y)\) for integration along \(l(\theta_{i} )\), is given by

Here, \(d\) is the distance threshold used to determine whether the pixel \((x,y)\) lies on the line \(l(\theta_{i} )\).
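The discrete line integral can be sketched as follows. This hypothetical helper (`radial_integral`) assumes a hard 0/1 weight, \(W(\xi) = 1\) when \(|\xi| \le d\) and 0 otherwise (Eq. (4) is not reproduced here, so the exact weighting may differ), image coordinates centred on the optical axis with \(y\) pointing up, and an optional annular region of interest.

```python
import numpy as np


def radial_integral(img, center, theta_deg, d=0.5, r_min=0.0, r_max=np.inf):
    """Sum of gray values of pixels within distance d of the line
    x*sin(theta) - y*cos(theta) = 0 and inside the ring r_min <= r <= r_max.

    Hypothetical helper: W(xi) is assumed to be a hard 0/1 weight, and the
    coordinates are centred on `center` (row, col) with y pointing up.
    """
    rows, cols = np.indices(img.shape)
    cy, cx = center
    x = cols - cx
    y = cy - rows
    t = np.deg2rad(theta_deg)
    xi = x * np.sin(t) - y * np.cos(t)  # signed perpendicular distance to the line
    r = np.hypot(x, y)
    weight = (np.abs(xi) <= d) & (r >= r_min) & (r <= r_max)
    return float(np.sum(img * weight))
```

Note that the line passes through the center, so both opposite rays (\(\theta_i\) and \(\theta_i + 180^{\circ}\)) contribute to the same integral.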

GRT needs to compute the integral of the image along radial lines oriented from \(0^{ \circ }\) to \(180^{ \circ }\). Moreover, to obtain an accurate result, the angle interval adopted by GRT should be as small as possible. Unlike GRT, LRT only needs the integrals of the image over a local angle range centered on the coarse darkest direction (i.e., \(\theta_{{\text{c}}}\)). For example, if the angle range and angle interval of LRT are \(\pm \theta_{T}\) and \(\theta_{s}\), respectively, the LRT is obtained by arranging the radial integral values in azimuthal order: \(G(\theta_{{\text{c}}} ) = \left\{ {g(\theta_{i} )} \right\}\;\left( {\theta_{i} = \theta_{{\text{c}}} - \theta_{T} + (i - 1)\theta_{s} ,i = 1,2 \ldots ,2\theta_{T} /\theta_{s} + 1} \right)\).
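The angle grid of the LRT and the assembly of \(G(\theta_{\text{c}})\) can be sketched as below. The helper names are hypothetical, angles are wrapped to \([0^{\circ}, 180^{\circ})\) because a line through the center is \(180^{\circ}\)-periodic, and normalizing by the maximum is an assumption (the text only states that the integrals are normalized). The integral is passed in as a callable so that the sketch does not depend on how \(g(\theta_i)\) is computed.

```python
import numpy as np


def lrt_angles(theta_c, theta_T, theta_s):
    """theta_i = theta_c - theta_T + (i - 1) * theta_s for i = 1 .. 2*theta_T/theta_s + 1,
    wrapped to [0, 180) degrees."""
    n = int(round(2.0 * theta_T / theta_s)) + 1
    return (theta_c - theta_T + np.arange(n) * theta_s) % 180.0


def local_radon(integral_fn, theta_c, theta_T, theta_s):
    """G(theta_c): radial integrals in azimuth order, normalised by their maximum
    (the normalisation rule is an assumption)."""
    g = np.array([integral_fn(t) for t in lrt_angles(theta_c, theta_T, theta_s)])
    return g / g.max()
```

For instance, with \(\theta_{\text{c}} = 25.06^{\circ}\), \(\theta_T = 60^{\circ}\), and \(\theta_s = 0.6^{\circ}\) (the value selected in Sect. 3.1.1), `lrt_angles` returns 201 angles running from \(145.06^{\circ}\) (i.e., \(-34.94^{\circ}\)) to \(85.06^{\circ}\), the end points quoted for Fig. 1.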

As illustrated in Fig. 1, the actual darkest direction of the irradiance image is \(25^{ \circ }\). When the image is corrupted by Gaussian white noise (\(\mu = \sigma^{2} = 0.01\)), the darkest direction calculated by coarse estimation is \(25.06^{ \circ }\) (the white solid line in Fig. 1). The LRT is composed of the normalized integral values of the image along the radial lines (the white dotted lines in Fig. 1) oriented counterclockwise from \(145.06^{ \circ }\) to \(85.06^{ \circ }\). Here, \(\theta_{T}\) is set to \(60^{ \circ }\).

2.3 Error correction

Theoretically, the darkest direction has the minimum value in the LRT. Unfortunately, the radial integral values of the image are always disturbed by noise. For instance, the LRT of the image in Fig. 1 is displayed in Fig. 2. The actual darkest direction of the image is \(25^{ \circ }\), yet the direction with the minimum LRT value is \(25.6^{ \circ }\). Clearly, under noise, the direction with the minimum value is not the actual darkest direction. To address this issue, EC is developed to estimate the error of the coarse estimation.

Fig. 2 The normalized LRT of the image in Fig. 1

Suppose that we have two modulated irradiance images (\({\text{Im}}_{1}\) and \({\text{Im}}_{2}\)) with hourglass-shaped gray distributions whose darkest directions are \(\theta_{d1}\) and \(\theta_{d2}\), respectively. Then \(G_{1} (\theta_{d1} - \theta_{a} )\) and \(G_{2} (\theta_{d2} - \theta_{a} )\) have the best correlation. That is,

Here, \(G_{1} (\theta_{d1} - \theta_{a} )\) and \(G_{2} (\theta_{d2} - \theta_{a} )\) denote the LRTs of \({\text{Im}}_{1}\) and \({\text{Im}}_{2}\) whose local angle ranges are centered at \(\theta_{d1} - \theta_{a}\) and \(\theta_{d2} - \theta_{a}\), respectively, i.e., \(G_{1} (\theta_{d1} - \theta_{a} ) = \{ g_{1} (\theta_{i} )\}\;(\theta_{i} = \theta_{d1} - \theta_{a} - \theta_{T} + (i - 1)\theta_{s} )\). More generally, \(G_{2} (\theta ) = \{ g_{2} (\theta_{i} )\}\;(\theta_{i} = \theta - \theta_{T} + (i - 1)\theta_{s} )\), and similarly \(G_{1} (\theta )\) is the LRT of \({\text{Im}}_{1}\) whose local integration range is centered at \(\theta\). \(\theta_{a}\) is an arbitrary angle.

Let \(\theta_{c}\) be the coarsely estimated darkest direction of \({\text{Im}}_{2}\) and \(\theta_{e}\) the error of the coarse estimation. From Eq. (5), we can infer that the LRT of \({\text{Im}}_{1}\) that correlates best with \(G_{2} (\theta_{c} )\) is \(G_{1} (\theta_{d1} - \theta_{e} )\). This inference can be represented as

Substituting \(\theta_{e} = \theta_{d2} - \theta_{c}\) into this relation, we have

Equation (6) reveals the link between the error of the coarse estimation and the correlation between LRTs. Based on Eq. (6), the actual darkest direction of \({\text{Im}}_{2}\) can be obtained by

In Eq. (7), \(\theta\) ranges over \([0,\pi )\). In practice, because the error of the coarse estimation is small, the optimal \(\theta\) lies close to \(\theta_{d1}\), so the search range for \(\theta\) can be narrowed to \(\left[ {\theta_{d1} - \theta_{M} ,\theta_{d1} + \theta_{M} } \right]\) to reduce computation.
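For convenience, the correction can be written out step by step. The derivation below uses only the definitions in the text (\(\theta_{e} = \theta_{d2} - \theta_{c}\) and the best-correlation property of \(G_{1}\) and \(G_{2}\)) with a generic correlation measure \(\rho(\cdot,\cdot)\); it is a worked restatement rather than a verbatim copy of Eqs. (5)–(7), and the symbols \(\rho\) and \(\theta^{*}\) are introduced here only for illustration. The narrowed search range assumes \(|\theta_{e}| \le \theta_{M}\).

\[
\begin{aligned}
&\theta_{d1} - \theta_{a} = \mathop{\arg\max}_{\theta}\; \rho\big(G_{1}(\theta),\, G_{2}(\theta_{d2} - \theta_{a})\big) \quad \text{for any } \theta_{a},\\
&\theta_{a} = \theta_{e} = \theta_{d2} - \theta_{c} \;\Rightarrow\; G_{2}(\theta_{d2} - \theta_{e}) = G_{2}(\theta_{c}),\\
&\theta^{*} \equiv \mathop{\arg\max}_{\theta \in [\theta_{d1} - \theta_{M},\, \theta_{d1} + \theta_{M}]}\; \rho\big(G_{1}(\theta),\, G_{2}(\theta_{c})\big) = \theta_{d1} - \theta_{e},\\
&\theta_{d2} = \theta_{c} + \theta_{e} = \theta_{c} + \theta_{d1} - \theta^{*}.
\end{aligned}
\]

With the values of the Fig. 3 example (\(\theta_{d1} = 0^{\circ}\), \(\theta_{c} = 25.6^{\circ}\), \(\theta^{*} = 0.6^{\circ}\)), this gives \(\theta_{d2} = 25.6^{\circ} - 0.6^{\circ} = 25^{\circ}\), in agreement with the reported result.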

In practice, \({\text{Im}}_{1}\) can be generated according to Malus’s law and used as the model image. The LRTs of \({\text{Im}}_{1}\) then also satisfy Malus’s law; that is, the integral of the image along the direction \(\theta_{i}\) is

where \(A\) is a coefficient determined by the image brightness and \(\theta_{d1}\) is the darkest direction of the image. Using Eq. (8), a set of LRTs of \({\text{Im}}_{1}\) (i.e., \(G_{1} (\theta_{i} )\), \(\theta_{i} = \theta_{d1} - \theta_{M} + (i - 1)\theta_{r}\), where \(\theta_{r}\) is the step of the center angle) can be generated. For an input image \({\text{Im}}_{2}\), the corrected darkest direction is obtained by substituting \(G_{2} (\theta_{c} )\) into Eq. (7).
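The offline model table and the EC search can be sketched as follows. Several details are assumptions rather than statements from the text: Eq. (8) is taken to have the form \(g(\theta_i) = A\sin^2(\theta_i - \theta_{d1})\) (minimal along \(\theta_{d1}\)), the correlation is the Pearson coefficient, and the correction is \(\theta_{d2} = \theta_c + \theta_{d1} - \theta^{*}\), where \(\theta^{*}\) is the best-matching table center; this last relation follows from \(\theta_e = \theta_{d2} - \theta_c\) and the best-correlation property. All function names are hypothetical.

```python
import numpy as np


def model_lrt(center, theta_T, theta_s, theta_d1=0.0, A=1.0):
    """LRT of the model image centred at `center` (degrees), assuming Eq. (8)
    has the form g(theta_i) = A * sin^2(theta_i - theta_d1)."""
    n = int(round(2.0 * theta_T / theta_s)) + 1
    theta = center - theta_T + np.arange(n) * theta_s
    return A * np.sin(np.deg2rad(theta - theta_d1)) ** 2


def build_model_table(theta_T, theta_s, theta_M, theta_r, theta_d1=0.0):
    """Offline table: model LRTs for centres theta_d1 - theta_M ... theta_d1 + theta_M."""
    k = int(round(theta_M / theta_r))
    centers = theta_d1 + np.arange(-k, k + 1) * theta_r
    table = np.stack([model_lrt(c, theta_T, theta_s, theta_d1) for c in centers])
    return centers, table


def error_correct(G2, theta_c, centers, table, theta_d1=0.0):
    """Return theta_c + theta_d1 - theta_star (mod 180), where theta_star is the
    table centre whose model LRT has the highest Pearson correlation with G2."""
    a = table - table.mean(axis=1, keepdims=True)
    b = np.asarray(G2) - np.mean(G2)
    rho = (a @ b) / (np.linalg.norm(a, axis=1) * np.linalg.norm(b))
    theta_star = centers[int(np.argmax(rho))]
    return float((theta_c + theta_d1 - theta_star) % 180.0)
```

For example, with \(\theta_T = 60^{\circ}\), \(\theta_s = 0.6^{\circ}\), and a table spanning \(\pm 5^{\circ}\) in \(0.01^{\circ}\) steps, a noise-free input that is darkest at \(25^{\circ}\) and coarsely estimated at \(25.6^{\circ}\) is matched to the model center \(0.6^{\circ}\) and corrected to \(25^{\circ}\), mirroring the Fig. 3 example. Pearson correlation is convenient here because it ignores the brightness coefficient \(A\) and any constant offset.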

Taking the image in Fig. 1 as an example, the working mechanism is illustrated in Fig. 3, where \(G_{1} ( \cdot )\) and \(G_{2} ( \cdot )\) denote the LRTs of the model image and the input image, respectively. In this experiment, the darkest direction of the model image is \(0^{ \circ }\). According to Eq. (8), a set of LRTs of the model image is generated with the center angle varying from \(- 5^{ \circ } (175^{ \circ } )\) to \(5^{ \circ }\) in \(0.01^{ \circ }\) increments. For the input image shown in Fig. 1, the darkest direction predicted by coarse estimation is \(\theta_{{\text{c}}} = 25.6^{ \circ }\). In the EC stage, \(G_{1} (0.6^{ \circ } )\) is found to have the best correlation with \(G_{2} (\theta_{c} )\). Finally, according to Eq. (7), the estimated darkest direction of the input image is corrected to \(25^{ \circ }\) (i.e., \(25.6^{ \circ } - 0.6^{ \circ }\)).

2.4 Implementation details

Once the parameters of the algorithm are fixed, some intermediate data, namely the coordinates of the pixels used for coarse estimation and the coordinates and weights of the pixels used for LRT computation, remain unchanged across input images. Hence, these data can be computed in advance and saved in tables named the circle pixel coordinate table (CPCT), the integral pixel coordinate table (IPCT), and the integral pixel weight table (IPWT), respectively. Note that, because the darkest direction differs between input images, the coordinates and weights of the pixels for gray integration must be saved for azimuth angles from \(0^{ \circ }\) to \(180^{ \circ }\). In addition, the LRTs of the model image for different center angles, which are independent of the input image, can also be computed offline using Eq. (8) and saved.
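The table-based evaluation can be sketched as below. This hypothetical version precomputes, for each azimuth on a grid covering \([0^{\circ}, 180^{\circ})\), the flattened indices and weights of the ring pixels near the radial line (analogues of IPCT and IPWT), and then evaluates an LRT online by looking up the nearest tabulated azimuth. The table resolution `table_step`, the hard 0/1 weights, and the nearest-entry lookup are assumptions; the text does not state how finely the tables are sampled.

```python
import numpy as np


def build_integral_tables(shape, center, r_min, r_max, d=0.5, table_step=0.1):
    """Offline IPCT/IPWT analogues: for each tabulated azimuth in [0, 180),
    the flattened indices and weights of ring pixels near the radial line.
    Hard 0/1 weights and the table resolution are assumptions."""
    rows, cols = np.indices(shape)
    cy, cx = center
    x = (cols - cx).ravel()
    y = (cy - rows).ravel()  # y points up
    r = np.hypot(x, y)
    in_ring = (r >= r_min) & (r <= r_max)
    n = int(round(180.0 / table_step))
    ipct, ipwt = [], []
    for t in np.deg2rad(np.arange(n) * table_step):
        idx = np.flatnonzero(in_ring & (np.abs(x * np.sin(t) - y * np.cos(t)) <= d))
        ipct.append(idx)
        ipwt.append(np.ones(idx.size))
    return ipct, ipwt


def lrt_from_tables(img, thetas_deg, table_step, ipct, ipwt):
    """Online step: each radial integral is a weighted sum over looked-up pixels,
    using the nearest tabulated azimuth (wrapped to [0, 180))."""
    flat = np.asarray(img, dtype=float).ravel()
    n = len(ipct)
    out = np.empty(len(thetas_deg))
    for k, t in enumerate(thetas_deg):
        j = int(round((t % 180.0) / table_step)) % n
        out[k] = flat[ipct[j]] @ ipwt[j]
    return out
```

The design trade-off is memory for speed: the online step touches only the pixels listed for each angle, while the geometry (distances, ring mask) is evaluated once per parameter set.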

The flow chart and pseudo code of our method are shown in Fig. 4.

3 Results and discussion

In this section, the algorithms are tested on synthetic and real data. We first evaluate the sensitivity of our algorithm (LRT + EC) to the angle range (i.e., \(\theta_{T}\)), the angle interval (i.e., \(\theta_{s}\)), and the image noise, and then compare the performance of LRT + EC with two state-of-the-art methods, GRT [18] and IAD + LC [21]. The CPU of our computer is an Intel(R) Core(TM) i7-10710U @ 1.10 GHz, and the RAM is 1.61 GHz.

In practice, the marginal and central parts of the image are not well modulated owing to the vignetting of the optical system and imperfections in the optical components [18], so the algorithms are applied to an annular region with inner and outer radii of 300 and 500 pixels, respectively. In the following experiments, the radii of the circles used for coarse estimation (denoted by \(r\) in Eq. (1)) range from 300 to 450 pixels in steps of 5 pixels, and the gray threshold for segmentation is 0.4. The image whose hourglass-shaped gray distribution has its darkest direction at \(0^{ \circ }\) is taken as the model image. A set of LRTs of the model image is generated with the center angle varying from \(- 0.3^{ \circ } (179.7^{ \circ } )\) to \(0.3^{ \circ }\) in steps of \(0.01^{ \circ }\).

3.1 Experiments on synthetic data

In this section, experiments are performed on synthetic data, all generated according to Malus’s law [26]. We first evaluate the sensitivity of our algorithm (LRT + EC) to its parameters and to noise, and then test its computational cost. All experimental results in this section are computed from 500 Monte Carlo simulations.
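A synthetic hourglass image of the kind described here can be generated as below. This hypothetical generator assumes the intensity \(A\sin^2(\phi - \theta_d)\), so that the darkest direction is \(\theta_d\), with azimuth \(\phi\) measured counterclockwise about the image center and \(y\) pointing up, plus additive Gaussian white noise whose mean `mu` and variance `var` play the roles of \(\mu\) and \(\sigma^{2}\) in the experiments.

```python
import numpy as np


def synth_hourglass(size, theta_d, A=1.0, mu=0.0, var=0.0, rng=None):
    """Hypothetical generator of a size x size hourglass image whose darkest
    direction is theta_d (degrees): I = A * sin^2(phi - theta_d) + Gaussian
    white noise with mean mu and variance var. phi is measured counterclockwise
    from the +x axis about the image centre, with y pointing up."""
    rng = np.random.default_rng() if rng is None else rng
    c = (size - 1) / 2.0
    rows, cols = np.indices((size, size))
    phi = np.arctan2(c - rows, cols - c)
    clean = A * np.sin(phi - np.deg2rad(theta_d)) ** 2
    return clean + rng.normal(mu, np.sqrt(var), clean.shape)
```

A Monte Carlo run would call this generator with a fresh random state and a random \(\theta_d\) for each trial.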

3.1.1 Performance with respect to the angle range and angle interval of LRT

Generally, the larger the angle range (\(\theta_{T}\)) and the smaller the angle interval (\(\theta_{s}\)), the higher the accuracy of the algorithm; however, the computational complexity also increases significantly. To choose parameters that give good accuracy at an acceptable runtime, the performance of the algorithm with respect to \(\theta_{T}\) and \(\theta_{s}\) is tested separately. In this set of experiments, Gaussian white noise (\(\mu = 0.01\) and \(\sigma^{2} = 0.005\)) is added to the synthetic images. Figure 5 compares the mean absolute error (MAE) of the results when \(\theta_{s}\) is \(0.6^{ \circ }\) and \(\theta_{T}\) varies from \(30^{ \circ }\) to \(85^{ \circ }\). It reveals that the MAE of the algorithm decreases rapidly as \(\theta_{T}\) increases and then levels off once \(\theta_{T}\) exceeds \(60^{ \circ }\). Hence, to keep the computational complexity low, \(\theta_{T} = 60^{ \circ }\) is recommended. Moreover, the MAE of our algorithm as \(\theta_{s}\) varies from \(0.1^{ \circ }\) to \(2^{ \circ }\) is summarized in Fig. 6; the results imply that the smaller \(\theta_{s}\) is, the better our algorithm performs.
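Because orientations are \(180^{\circ}\)-periodic, errors should be folded before averaging; otherwise an estimate of \(179.9^{\circ}\) for a true direction of \(0.1^{\circ}\) would count as a \(179.8^{\circ}\) error instead of \(0.2^{\circ}\). A minimal sketch of the MAE (and of the RMSE used in Sect. 3.2) under this folding convention follows; the convention is an assumption, since the text does not say how wrap-around is handled, and the function names are hypothetical.

```python
import numpy as np


def orientation_error(est_deg, true_deg):
    """Signed error folded into [-90, 90) degrees (orientations are 180-degree periodic)."""
    diff = np.asarray(est_deg, dtype=float) - np.asarray(true_deg, dtype=float)
    return (diff + 90.0) % 180.0 - 90.0


def mae_deg(est_deg, true_deg):
    """Mean absolute error in degrees, e.g. over Monte Carlo runs."""
    return float(np.mean(np.abs(orientation_error(est_deg, true_deg))))


def rmse_deg(est_deg, true_deg):
    """Root mean square error in degrees."""
    return float(np.sqrt(np.mean(orientation_error(est_deg, true_deg) ** 2)))
```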

Based on these results, \(\theta_{T}\) and \(\theta_{s}\) are set to \(60^{ \circ }\) and \(0.6^{ \circ }\), respectively, in the following experiments.

3.1.2 Performance with respect to image noise

It is easy to extract the darkest direction precisely from noise-free images, but it is more difficult to achieve the same precision on real images, which are usually corrupted by noise. Since noise sources such as shot noise, thermal noise, and dark current noise generally obey a Gaussian distribution [10], Gaussian white noise with \(\mu = 0.01\) and \(\sigma^{2}\) varying from 0 to 0.01 is added to evaluate the robustness of our algorithm to noise. The MAEs of the different algorithms are compared in Fig. 7. The performance of all algorithms degrades as \(\sigma^{2}\) increases, and LRT + EC and IAD + LC outperform GRT. For a fair comparison, the gray threshold for ROI extraction in IAD + LC is also set to 0.4 in this set of experiments.

Furthermore, comparing coarse estimation alone (the green line in Fig. 7) with LRT + EC shows that the MAE of LRT + EC is much smaller. To test the effect of integration on the performance of our algorithm, we replace the LRT in EC with the gray values of the pixels on a single circle (\(r = 400\)). The result of this variant (the rose line in Fig. 7) shows that gray integration greatly improves the accuracy.

3.1.3 Processing time

To make an overall comparison, the processing time of each algorithm is also analyzed. As Table 1 shows, LRT + EC has the lowest computational cost.

3.2 Experiments on real data

To verify the accuracy of the algorithms on real data, 20 modulated intensity images are captured consecutively under the same polarization state. One of these images is shown in Fig. 8. The 20 images are analyzed by the three algorithms, and the results are illustrated in Fig. 9. LRT + EC is clearly the most robust, a conclusion further confirmed by the root mean square error (RMSE) of the results given in Table 2.

4 Conclusions

In some spatially modulated polarimetry schemes, the polarization direction of the input light can be obtained by image processing algorithms. In this paper, an efficient image processing algorithm, named LRT + EC, is proposed to extract the polarization direction from the irradiance image of the modulated input light. Unlike GRT, which performs the Radon transform over the global angle range, LRT integrates the image along radial lines oriented within a local angle range. In addition, LRT and EC can be completed by looking up tables generated offline. Therefore, the time consumption of our algorithm is reduced to less than 0.01 s, which meets real-time requirements well. Moreover, owing to EC, which establishes the link between the error of the coarse estimation and the correlation between LRTs, the accuracy and robustness of the algorithm are greatly improved. The experimental results on synthetic and real data verify that our proposed algorithm outperforms state-of-the-art methods, including GRT and IAD + LC.

Availability of data and materials

The datasets used during the current study are available from the corresponding author on reasonable request.

Abbreviations

- LRT: Local Radon transform
- EC: Error correction
- GRT: Global Radon transform
- LC: Local correlation
- IAD: Interesting area detection
- CPCT: Circle pixel coordinate table
- IPCT: Integral pixel coordinate table
- IPWT: Integral pixel weight table
- MAE: Mean absolute error
- RMSE: Root mean square error

References

[1] Z. Sun, Y. Huang, Y. Bao, D. Wu, Polarized remote sensing: a note on the Stokes parameters measurements from natural and man-made targets using a spectrometer. IEEE Trans. Geosci. Remote Sens. 55, 4008–4021 (2017)

[2] Z. Sun, D. Wu, Y. Lv, S. Lu, Optical properties of reflected light from leaves: a case study from one species. IEEE Trans. Geosci. Remote Sens. 57, 4388–4406 (2019)

[3] Q.Y. Gu, Y. Han, Y.P. Xu, H.Y. Yao, H.F. Niu, F. Huang, Laboratory research on polarized optical properties of saline-alkaline soil based on semi-empirical models and machine learning methods. Remote Sens. 14, 226 (2022)

[4] J.W. Song, N. Zeng, W. Guo, J. Guo, H. Ma, Stokes polarization imaging applied for monitoring dynamic tissue optical clearing. Biomed. Opt. Express 12, 4821–4836 (2021)

[5] P. Schucht, H.R. Lee, M.H. Mezouar, E. Hewer, T. Novikova, Visualization of white matter fiber tracts of brain tissue sections with wide-field imaging Mueller polarimetry. IEEE Trans. Med. Imaging 12, 4376–4382 (2020)

[6] W. Zhang, X. Zhang, Y. Cao, H. Liu, Z. Liu, Robust sky light polarization detection with an S-wave plate in a light field camera. Appl. Opt. 55, 3516–3525 (2016)

[7] L. Oberleiner, U. Dahmen-Levison, L.A. Garbe, R.J. Schneider, Application of fluorescence polarization immunoassay for determination of carbamazepine in wastewater. J. Environ. Manage. 193, 92–97 (2017)

[8] S.W. Lee, S.Y. Lee, G. Choi, H.J. Pahk, Co-axial spectroscopic snap-shot ellipsometry for real-time thickness measurements with a small spot size. Opt. Express 28, 25879–25893 (2020)

[9] D.H. Goldstein, Polarized Light, 3rd edn. (CRC Press, 2017), pp. 327–351

[10] C. Gao, B. Lei, Spatially polarization-modulated ellipsometry based on the vectorial optical field and image processing. Appl. Opt. 51, 5377–5384 (2020)

[11] C. Paulus, J.I. Mars, Vector-sensor array processing for polarization parameters and DOA estimation. EURASIP J. Adv. Signal Process. (2010). https://doi.org/10.1155/2010/850265

[12] G.P. Lemus-Alonso, C. Meneses-Fabian, R. Kantun-Montiel, One-shot carrier fringe polarimeter in a double aperture common-path interferometer. Opt. Express (2018). https://doi.org/10.1364/OE.26.017624

[13] M. Eshaghi, A. Dogariu, Single-shot omnidirectional Stokes polarimetry. Opt. Lett. 45, 4340–4343 (2020)

[14] Y. Liang, Z. Qu, Y. Zhong, Z. Song, S. Li, Analysis of errors in polarimetry using a rotating waveplate. Appl. Opt. 58, 9883–9895 (2019)

[15] A. Lizana, J. Campos, A.V. Eeckhout, A. Márquez, Influence of temporal averaging in the performance of a rotating retarder imaging Stokes polarimeter. Opt. Express 28, 10981–11000 (2020)

[16] W. Liu, J. Liao, Y. Yu, X. Zhang, High-efficient and high-accurate integrated division-of-time polarimeter. APL Photon. 6, 071302 (2021). https://doi.org/10.1063/5.0057625

[17] R.M.A. Azzam, A. De, Optimal beam splitters for the division-of-amplitude photopolarimeter. J. Opt. Soc. Am. A 20(5), 955–958 (2003)

[18] C. Gao, B. Lei, Spatially modulated polarimetry based on a vortex retarder and Fourier analysis. Chin. Opt. Lett. 19, 19–24 (2021)

[19] J. Bo, W. Xing, Y. Gu, C. Yan, X. Wang, X. Ju, Spatially modulated snapshot imaging polarimeter using two Savart polariscopes. Appl. Opt. 59, 9023–9031 (2020)

[20] J. Zhang, C. Yuan, G. Huang, Y. Zhao, W. Ren, Q. Cao, J. Li, M. Jin, Acquisition of a full-resolution image and aliasing reduction for a spatially modulated imaging polarimeter with two snapshots. Appl. Opt. 57, 2376–2382 (2018)

[21] B. Lei, S. Liu, Efficient polarization direction measurement by utilizing the polarization axis finder and digital image processing. Opt. Lett. 43, 2969–2972 (2018)

[22] T.L. Ning, Y. Li, G.D. Zhou, K. Liu, J.Z. Wang, Single-shot measurement of polarization state at low light field using Mueller-mapping star test polarimetry. Opt. Commun. (2021). https://doi.org/10.1016/j.optcom.2021.127130

[23] T.L. Ning, Y.Q. Li, G.D. Zhou, Y.Y. Sun, K. Liu, Optimized spatially modulated polarimetry with an efficient calibration method and hybrid gradient descent reconstruction. Appl. Opt. 61, 2267–2274 (2020)

[24] G. Biener, A. Niv, V. Kleiner, E. Hasman, Near-field Fourier transform polarimetry by use of a discrete space-variant subwavelength grating. J. Opt. Soc. Am. A 20, 1940–1948 (2003)

[25] J. Coetzer, B.M. Herbst, J.A. du Preez, Offline signature verification using the discrete Radon transform and a hidden Markov model. EURASIP J. Adv. Signal Process. (2004). https://doi.org/10.1155/S1687617204309042

[26] A.E. Collett, Field Guide to Polarization (SPIE Press, Bellingham, Washington, 2005), p. 3

Acknowledgements

The authors would like to thank the handling Associate Editor and the anonymous reviewers for their valuable comments and suggestions on this paper.

Funding

This work was supported by the National Natural Science Foundation of China under Grant 61975235.

Author information


Contributions

XY and WW proposed the LRT + EC algorithm. CG contributed to experimental data acquisition and literature review. WW wrote the majority of the manuscript. JS revised the content. All authors read and approved the final manuscript.


Ethics declarations

Ethics approval and consent to participate

This work does not involve human participants, human data, or human tissue.

Consent for publication

This work does not contain any individual person’s data in any form.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, W., Gao, C., Yan, X. et al. A new polarization direction measurement via local Radon transform and error correction. EURASIP J. Adv. Signal Process. 2022, 70 (2022). https://doi.org/10.1186/s13634-022-00897-w


DOI: https://doi.org/10.1186/s13634-022-00897-w