Abstract

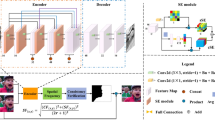

In multi-focus image fusion, the key challenge is how to balance the in-focus (clear) region information from source images captured at different focus positions. In this paper, an unsupervised-learning-based multi-focus image fusion model is designed that performs fusion in two stages. In the training stage, an encoder–decoder structure is adopted for image reconstruction, with multi-scale structural similarity (MS-SSIM) introduced as the loss function. In the fusion stage, the trained encoder extracts features from the source images. Spatial frequency is used to distinguish the in-focus regions at two levels, channel and spatial, and pixels on which the two discriminations disagree are checked and processed to generate the initial decision map. The decision map is then optimized with mathematical morphology before the final fusion is carried out. Experimental results show that the method preserves the texture details and edge information of the focused regions of the source images well. Compared with five state-of-the-art fusion algorithms, the proposed algorithm achieves superior fusion performance.
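The fusion stage described above (spatial-frequency activity measurement, a consistency check, morphological refinement, and decision-map fusion) can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the trained encoder is not reproduced, so any (C, H, W) array can stand in for its features. The abstract does not specify how the channel-level and spatial-level spatial frequencies are computed or how inconsistent pixels are processed, so the versions below (per-channel SF averaged over channels, SF of the channel-averaged map, and a summed-activity tie-break) are assumptions, as are the window size `k` and the structuring-element radius `r`. All function names are hypothetical.

```python
import numpy as np


def box_mean(x: np.ndarray, k: int) -> np.ndarray:
    """Mean of x over a k x k window (k odd, edge-replicated borders), same shape as x."""
    pad = k // 2
    p = np.pad(x, pad, mode="edge")
    s = np.zeros((p.shape[0] + 1, p.shape[1] + 1))  # integral image with a zero border
    s[1:, 1:] = p.cumsum(axis=0).cumsum(axis=1)
    h, w = x.shape
    total = s[k:k + h, k:k + w] - s[:h, k:k + w] - s[k:k + h, :w] + s[:h, :w]
    return total / (k * k)


def local_sf(f: np.ndarray, k: int = 7) -> np.ndarray:
    """Pixel-wise spatial frequency sqrt(RF^2 + CF^2) over a k x k window."""
    f = f.astype(np.float64)
    rf = np.zeros_like(f)
    cf = np.zeros_like(f)
    rf[:, 1:] = (f[:, 1:] - f[:, :-1]) ** 2  # row frequency: horizontal differences
    cf[1:, :] = (f[1:, :] - f[:-1, :]) ** 2  # column frequency: vertical differences
    return np.sqrt(box_mean(rf + cf, k))


def activity_maps(feat: np.ndarray, k: int = 7):
    """Two hypothetical activity measures for a (C, H, W) feature tensor."""
    feat = feat.astype(np.float64)
    # Assumed "channel" level: SF of every channel, averaged over channels.
    channel_level = np.mean([local_sf(c, k) for c in feat], axis=0)
    # Assumed "spatial" level: SF of the channel-averaged feature map.
    spatial_level = local_sf(feat.mean(axis=0), k)
    return channel_level, spatial_level


def initial_decision(feat_a: np.ndarray, feat_b: np.ndarray, k: int = 7) -> np.ndarray:
    """True where source A is judged in focus, False where source B is."""
    ca, sa = activity_maps(feat_a, k)
    cb, sb = activity_maps(feat_b, k)
    d_channel = ca >= cb
    d_spatial = sa >= sb
    decision = d_channel.copy()
    inconsistent = d_channel != d_spatial
    # Hypothetical tie-break: where the two levels disagree, compare summed activity.
    decision[inconsistent] = ((ca + sa) >= (cb + sb))[inconsistent]
    return decision


def _neighbourhood(mask: np.ndarray, r: int) -> np.ndarray:
    """Stack of all (2r+1) x (2r+1) shifts of mask, with edge-replicated borders."""
    p = np.pad(mask, r, mode="edge")
    h, w = mask.shape
    return np.stack([p[i:i + h, j:j + w] for i in range(2 * r + 1) for j in range(2 * r + 1)])


def erode(mask: np.ndarray, r: int) -> np.ndarray:
    return _neighbourhood(mask, r).all(axis=0)


def dilate(mask: np.ndarray, r: int) -> np.ndarray:
    return _neighbourhood(mask, r).any(axis=0)


def refine(mask: np.ndarray, r: int = 2) -> np.ndarray:
    """Morphological opening (drops small isolated regions) then closing (fills small holes)."""
    opened = dilate(erode(mask, r), r)
    return erode(dilate(opened, r), r)


def fuse(img_a: np.ndarray, img_b: np.ndarray, decision: np.ndarray) -> np.ndarray:
    """Pick each pixel from A where decision is True, otherwise from B."""
    d = decision.astype(np.float64)
    if img_a.ndim == 3:  # H x W x channels colour images
        d = d[..., None]
    return d * img_a + (1.0 - d) * img_b
```

In practice `feat_a` and `feat_b` would be the encoder's feature tensors for the two source images rather than the images themselves. Morphology is written by hand with edge-replicated borders to keep the sketch NumPy-only; `scipy.ndimage.binary_opening` and `binary_closing` are the usual library equivalents, though their default border handling differs.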

Acknowledgements

This work was supported by the National Natural Science Foundation of China (No. 61966022), the Natural Science Foundation of Gansu Province (No. 21JR7RA300), and the Open Project of the Dunhuang Cultural Heritage Protection Research Center of Gansu Province (No. Gdw2021Yb15).

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wu, K., Mei, Y. Multi-focus image fusion based on unsupervised learning. Machine Vision and Applications 33, 75 (2022). https://doi.org/10.1007/s00138-022-01326-6

DOI: https://doi.org/10.1007/s00138-022-01326-6