Abstract

This paper introduces a new UAV path planning method for creating high-quality 3D reconstruction models of large and complex structures. The core of the new method is incorporating the topology information of the surveyed 3D structure to decompose the multi-view stereo path planning into a collection of overlapped view optimization problems that can be processed in parallel. Different from the existing state-of-the-arts that recursively select the vantage camera views, the new method iteratively resamples all nearby cameras (i.e., positions/orientations) together and achieves a substantial reduction in computation cost while improving reconstruction quality. The new approach also provides a higher-level automation function that facilitates field implementations by eliminating the need for redundant camera initialization as in existing studies. Validations are provided by measuring the variance between the reconstructions to the ground truth models. Results from three synthetic case studies and one real-world application are presented to demonstrate the improved performance. The new method is expected to be instrumental in expanding the adoption of UAV-based multi-view stereo 3D reconstruction of large and complex structures.

Similar content being viewed by others

Introduction

Significant growth of unmanned aerial vehicle (UAV) applications has been witnessed in land surveying [1], urban surveillance [2], post-disaster evaluation [3], and structural inspections [4]. High-accuracy 3D reconstruction is often required for many UAV applications. Meanwhile, multi-view stereo (MVS) [5] was recognized as a viable tool for reconstructing high-quality three-dimensional (3D) models utilizing images from different viewpoints. However, using aerial MVS to reconstruct 3D models of large complex structures remains a challenge primarily due to the lack of appropriate path planning tools to determine the optimal UAV cameras’ poses to acquire MVS images of the surveyed structures.

In MVS, images must satisfy quality criteria [6] to obtain high-quality models. These criteria may slightly vary in different MVS algorithms, but there are some standard criteria such as the coverage/visibility, resolution, incidence angle, baseline, and parallax [7,8,9]. When a 3D model of the surveyed structure is available (i.e., 3D Computer-aided Design (CAD), building information modeling (BIM), 2.5D digital elevation model, rough photometric reconstruction), the UAV’s camera views/paths can be designed in a model-based fashion where the optimal trajectories can be computed by maximizing the MVS quality at each observed surface of the 3D structures [10]. Among the model-based path planning methods, Next-Best-View (NBV) was recognized as the state-of-the-art [11,12,13,14]. NBV is a recursive view selection process: at each step, NBV selects the best view from an ensemble of candidate camera views based on a quality criterion. This approach has been used in several existing studies: from the early works where the focus was the indoor active range imaging in a controlled environment [15, 16], to the more recent applications of the outdoor aerial MVS reconstruction [17,18,19,20,21]. Roberts et al. [19] modeled the MVS quality of a UAV trajectory as to how well the cameras cover the structure’s surface light field. The method formulated the coverage function as submodular and found the near-optimal trajectories by (1) finding the best orientation at each candidate camera and (2) selecting the best subset of the camera positions based on the UAV constraints. Hepp et al. [20] also employed the submodularity and used the information gain (IG) to quantize the goodness of each camera viewpoint. Based on the volumetric representation of the structure’s geometry, the method combined the optimization of camera positions and orientations into an integrated view selection process. One issue in these NBV methods is that the greedily selected viewpoints do not guarantee the optimal quality. In addition, these methods assumed that a single camera view could determine a point in 3D space, which contradicts the basic MVS quality assurance principle that at least two different views are required.

Smith et al. [22] studied a reconstruction heuristic that measured the MVS quality based on the two-view geometric analysis [7, 23]. The two-view quality heuristic measures not only the visibility/coverage of the cameras to the surface points but also the geometries concerning other views. Based on the heuristic, the method adaptively adjusted the cameras from a user-defined flight path for high-quality reconstruction of urban scenes. Zhou et al.’s [24] study started with a dense camera set sampled along the normal of the urban structures. Based on the quality heuristic, the method greedily selected and adjusted vantage cameras in sequence to reduce the image redundancy while increasing the MVS quality. One major limitation of these studies is that the final reconstruction heavily relies on the quality of the initialized cameras because the methods only adjusted the cameras locally in space. Therefore, the camera initialization can be a challenge when dealing with more complex geometries (such as residential buildings, chemical plants, etc.) where sharp corners, concave regions, and uneven surfaces exist across the model, making the initialization of good camera viewpoints difficult. Furthermore, the quality heuristic was evaluated based on all available cameras in the existing methods, which makes it computationally expensive since it requires quadratic operations to find the best quality matches at each point. The path optimization based on this heuristic requires re-evaluating the model surface quality at each iteration. These optimization methods often include infeasible view candidates per multi-view stereo criteria, where computation time can grow significantly as the size of the surveyed object increase.

A topology-based UAV path planning method is presented in this paper to improve both the scalability and reconstruction quality of the existing 3D path planning methods. The proposed method outperforms existing methods in the presented cases in terms of the computational cost, the reconstruction quality, and the camera initialization. Unlike the prior works that recursively select/adjust the cameras from a user-defined discrete candidate set, the proposed method continuously optimizes a set of randomly sampled cameras in the continuous space. The core of the technique lies in introducing the topology parameters to guide the maximization of the two-view quality metric at each model surface solely based on the closely located cameras. The method designs one admissible camera to cover each model surface and, at the same time, cluster its neighbor cameras based on the topology of the surface connectivity. The global view planning problem is then decomposed into a collection of local optimization problems to find the optimal matched camera positions/orientations within each cluster such that the MVS quality is maximized. The reformulated optimization is computationally tractable and inherently parallel. Therefore, an adapted particle swarm optimization (PSO) framework is used to seek optimal solutions (i.e., camera views) efficiently. The result is an optimized camera trajectory that a rotorcraft UAV can fly for high-quality 3D reconstructions of the inspected structure.

Three contributions are expected from the developed new path planning method:

-

(1)

By utilizing the topology information of the model surfaces’ connectivity to cluster the nearby cameras, a significant reduction in the computation cost is achieved in finding the best camera matches at each model surface (i.e., time complexity reduced from cubic to linear).

-

(2)

The method formulates the global view planning as the collection of independent local multi-view optimization problems that maximizes the MVS quality at each surface solely based on the clustered cameras. The reformulated problems are solved simultaneously by an adapted PSO framework, enabling the efficient computation of the optimal path.

-

(3)

Based on a model’s topology, our method avoids the inclusion of redundant candidates in the initial set as in the existing studies. Instead, only a desired number of candidate cameras is sampled, which reduces the unnecessary cost (e.g., visibility detection) while increasing the convergence speed.

The rest of this paper is organized as follows. “Methodology” introduces the overview and the detailed steps of the proposed UAV path planning method. In “Evaluation and results”, the evaluation of the presented method is performed based on three geometric variant synthetic scenes and one complex real-world case study. Finally, we conclude our work and point out the future research directions in “Conclusions”.

Methodology

Figure 1 is an overview of the proposed method. Given a rough model (e.g., a 3D model from Google Map) of the target structure and the selected UAV, the method starts by processing the input model into a triangular surface mesh (“Input model triangulation”). The processed mesh contains the desired number/size of the triangle surfaces, enabling the per surface coverage planning. A topology-based coverage algorithm is proposed to design one admissible camera to cover each model surface while clustering the candidate matching cameras (“Topology-based coverage modeling”). Based on the presented coverage algorithm, the optimal camera views are found by simultaneously maximizing the two-view quality metric within each cluster (“Multi-view optimization”). The proposed optimization method integrates an approximated camera model (“Reconstruction metric”) and a two-view reconstruction metric (“Approximated camera model”) into an adapted PSO (“Optimization procedure”), enabling a randomly initialized camera set to be optimized efficiently and continuously. After the optimization, a fast algorithm is presented to assess the MVS quality performance of the optimized cameras and samples complementary camera views, guaranteeing the reconstruction quality even in the challenging regions (Sect. 2.3.5). Finally, the cameras are converted into a shortest flight trajectory (“Sampling complementary cameras”) and then uploaded onto a ground control station for the automated UAV inflight mission execution and the post-flight multi-view stereo reconstruction.

The proposed method can be iterated if the quality of the output model needs further improvements. The subpar output model can be used as a rough input model in the new iteration. Theoretically, the iterations can go on until the quality of the output model reaches a satisfactory level. The method provides flexibility for generating 3D models at different quality levels. The details of the proposed method are discussed step-by-step in the following sections.

Input model triangulation

A rough 3D model is needed as an input model for the coverage planning. The coarse model can be downloaded from Google Earth/Map or can be reconstructed from a simple nadir (2D) flight if a Google Earth/Map model is not available. Then, the raw input model needs to be triangulated such that each model surface can be covered by one viewpoint with the specific sensor model [25, 26]. Preprocessing of the input model is needed to convert the raw model (in point cloud format or 3D CAD/BIM format) into a triangulated surface mesh and make the sizes of triangulated surfaces more evenly covered by the selected cameras. The proposed triangulation method is an iterative mesh resampling algorithm and is developed based on two criteria: (1) the triangulated model must be geometrically consistent with the raw input model; (2) each triangulated surface must fit into the camera’s FOV at the required distances (i.e., Ground Sampling Distance (GSD)). The first criteria guarantee the high fidelity of the processed model compared to the inputs, while the second criteria ensure the baseline quality of the collected images.

Starting from a baseline model surface size, the algorithm incrementally resamples the input model with the reduced size until the model reaches the required fidelity. The model fidelity is computed as an error metric that measures the nearest neighbor distance (NND) of the point correspondences between the resampled mesh and the input. Based on this metric, a residual map is generated, and the ratio of outliers is computed based on a distance measurement \(\omega \). The mesh is considered high fidelity if the ratio is less than a pre-defined threshold τ. Otherwise, the surface size is reduced (at certain step \(\lambda \)), and the above steps are repeated until the stopping condition is met. Approximated Centroidal Voronoi Diagrams (ACVD) [27] is employed for the model surfaces resampling at each iteration, and the Poisson Disk Sampling [28] is used to represent the point set for fidelity measurement. The baseline surface size is the minimal surface area covered by each camera view. It can be either manually determined or estimated based on the area scanned by the camera footprints at the baseline GSD (i.e., 0.02 m/pixel) [29]. In this study, we set \(\omega \) as 1 m, and \(\tau \) equals 15%. This setup preserves the major geometric components (e.g., façade, roof, etc.) of building structures (typically at a scale 100–5000 m2) but might need to be slightly varied for other types of geometries. The algorithm outputs a mesh with a relatively small number of surfaces, thus encouraging fewer camera views, and increasing the efficiency of the inflight image acquisition and the post-flight 3D reconstruction. Figure 2 presents an example of the proposed method for triangulating an orbiting flight reconstructed residual building. The input is a dense mesh containing over 300,000 triangle surfaces. Based on the stopping condition, the detailed geometries that have less effect on the building’s shape, such as the drip edges on the roofs or the footings of the wall foundations, are considered outliers in the residual map (as in Fig. 2b). The output is a downsampled surface mesh that approximates the input geometry with only 122 triangles.

Workflow of the model preprocessing algorithm on a residential building: a the input geometry (over 300,000 triangles, downsampled and color-coded for demonstration purposes); b the residual map when the stopping condition is met (points in blue denote the inliers; points in red denote the outliers; c the resampled mesh (122 triangles)

Topology-based coverage modeling

The proposed coverage algorithm defines the MVS camera search spaces based on the topology of the model surfaces connectivity. The algorithm includes two major steps: (1) sampling a set of overlapped cameras with each camera covering a unique set of mesh surfaces; and (2) clustering the neighborly located cameras as the candidate matches for the 3D triangulation at each surface.

Sampling overlapped cameras

For each mesh surface \({\mathcalligra{s}}\Bigl({\mathcalligra{s}}\in \mathcal{S}\Bigr)\), a camera view \({{\mathcalligra{v}}}_{{\mathcalligra{s}}}\) is admissible if it is located within a hemisphere centered at the centroid of the mesh surface (\({{\mathcalligra{c}}}_{{\mathcalligra{s}}}\)) with the camera field-of-view (FOV) covers the adjacent triangles of \({\mathcalligra{s}}\) (as in Fig. 3). It is also required that each admissible view satisfies the camera gimbal constraints (e.g., camera pitch rotation constraint) and the UAV safety clearance from the surveyed object [29]. Equation (1) presents the constraints that formulate the camera search space:

where \({\mathcalligra{d}}\) measures the Euclidean distance between \({{\mathcalligra{v}}}_{{\mathcalligra{s}}}\) and \({{\mathcalligra{c}}}_{{\mathcalligra{s}}}\), \(\mathcal{R}\) is the radius of the hemisphere which is defined by the baseline GSD given the camera focal length. \(\dot{{\mathcalligra{d}}}\) computes the perpendicular distance between the camera and the surface, and \({{\mathcalligra{d}}}_{s}\) is the safety tolerance for the aircraft. \(\theta \) measures the observation between the camera ray and the surface normal \({{\mathcalligra{n}}}_{{\mathcalligra{s}}}\), and \({\theta }_{max}\) indicates the maximal acceptable observation angle. \(\pi \) is the Boolean function that denotes the complete coverage of a camera view to the mesh surfaces. \({\gamma }_{{\mathcalligra{k}}}\left({\mathcalligra{s}}\right)\) is a collection of the neighborly connected mesh surfaces centered at \({\mathcalligra{s}}\). We set \({\mathcalligra{k}}\) equals 1 to ensure minimal overlapping between the sampled cameras. The definition of \({\gamma }_{{\mathcalligra{k}}}\left({\mathcalligra{s}}\right)\) and the selection of \({\mathcalligra{k}}\) are discussed in the next section.

Clustering candidate matchings

In MVS, triangulating 3D points from the closely located images can reduce the cost of the redundant image selection while filtering out the erroneous points [6,7,8]. In this study, the topology of the mesh surface connections is used to guide the clustering of the neighbor cameras for the per surface MVS quality maximization (detailed in “Multi-view optimization”). Given the mesh, we define a graph \(\mathcal{G}\left(\mathcal{S},\mathcal{E}\right)\) with each node denoted as a triangle surface and each edge as the common edge that connects the surfaces. By defining every edge in the graph as a unit distance, the proximity of the surfaces can be computed by counting the minimal number of the common edges traversed between the surfaces. As shown in Eq. (2), the neighborhood of \({\mathcalligra{s}}\) is defined as the collection of the mesh surfaces with distance less than \({\mathcalligra{k}}\).

where \(\widehat{{\mathcalligra{d}}}\) is the distance of the shortest path between \({\mathcalligra{s}}\) and \({\mathcalligra{s}}{^{\prime}}\), and \({\mathcalligra{k}}\) is a coefficient that controls the number of the neighboring surfaces. Due to one camera view is defined at each surface, thus the topological structure (i.e., neighborhood) of the mesh surfaces can be used to map the closeness of the sampled cameras (as in Eq. 3).

where \({{\mathcalligra{s}}}_{{\mathcalligra{v}}}\) and \({{\mathcalligra{v}}}_{{\mathcalligra{s}}}\), respectively, denotes the correspondent mesh surface and the camera. \({\gamma }_{{\mathcalligra{k}}}\left({{\mathcalligra{v}}}_{{\mathcalligra{s}}}\right)\) is a cluster of closely located cameras with each one points towards a unique surface in \({\gamma }_{{\mathcalligra{k}}}\left({{\mathcalligra{s}}}_{{\mathcalligra{v}}}\right)\). Because the influence of a camera is spatially limited (i.e., FOV, GSD), there is a large chance that the best-matched camera for triangulating \({{\mathcalligra{s}}}_{{\mathcalligra{v}}}\) belongs to \({\gamma }_{{\mathcalligra{k}}}\left({{\mathcalligra{v}}}_{{\mathcalligra{s}}}\right)\). With greater \({\mathcalligra{k}}\) means more neighbor cameras are clustered, which increases the possibility of covering the real best matches. The extreme case is that all cameras are included in each cluster which is identical to perform the stereo-matching globally. In this study, \({\mathcalligra{k}}=3\) is used empirically to ensure good matching results for complex scenes and, at the same time, keeps the computational load small. Figure 4a shows an example of the clustered neighbor surfaces with \({\mathcalligra{k}}=2\) for demonstration purposes.

a Top: the current mesh surface (in red) and the clustered neighbor surfaces (in blue); Bottom: the correspondent topology graph, denoting the current view (in red) and the clustered candidate matches (in blue); b two cases of the neighbor surface selection: Top: only \({{\mathcalligra{s}}}_{{\varvec{n}}1}\) is considered as the neighbor of \({{\mathcalligra{s}}}_{1}\); Bottom: neither \({{\mathcalligra{s}}}_{{\varvec{n}}1}\) nor \({{\mathcalligra{s}}}_{{\varvec{n}}2}\) is the neighbor of \({{\mathcalligra{s}}}_{1}\)

In most cases, the growth of cluster size follows the properties of the triangular mesh (i.e., each triangle surface is connected to at most three other surfaces through common edges). However, there are cases when a single edge is connected with more than one surface plane, such as at the concave area. Due to the occluded camera ray, the neighbor surfaces might be invisible by the selected camera, leading to clustering the falsely matched cameras. In this study, a simple mechanism is defined to select the neighbor surfaces. Given a surface plane \({{\mathcalligra{s}}}_{1}\) and a point along the surface normal (denoted as \({p}_{1}\)), we define \({{\mathcalligra{s}}}_{n}\) is a neighbor of \({{\mathcalligra{s}}}_{1}\) if there is no intersection between the line connecting \({p}_{1}{p}_{n}\) and the model geometry. As shown in Fig. 4b, \({{\mathcalligra{s}}}_{n1}\) is a neighbor of \({{\mathcalligra{s}}}_{1}\) only when \({p}_{1}{p}_{n1}\) is not intersected with the model, and \({{\mathcalligra{s}}}_{n2}\) is not a neighbor of \({{\mathcalligra{s}}}_{1}\) due to the intersection between \({p}_{1}{p}_{n2}\) and \({{\mathcalligra{s}}}_{n1}\) in both cases.

Multi-view optimization

Problem formation

Given a model (i.e., triangular mesh) and a designed number of camera views, the objective is to find the optimal views (\({\mathcal{V}}^{*}\)) in terms of the positions and the orientations where the MVS quality at each model surface is maximized (as in Eq. 4):

where \({\mathcalligra{v}},{\mathcalligra{u}}\) \(({\mathcalligra{u}}\ne \nu )\) consist of the potentially matched cameras, and \({\mathcalligra{h}}\) is the metric that models the MVS quality based on the matched views. The computational complexity of finding the optimal solution is \({\rm O}(\left|\mathcal{S}\right|{\left|\mathcal{V}\right|}^{2})\) since we need to traverse through every camera combination to find the best one [22]. This formation, based on the proposed coverage algorithm (“Topology-based coverage modeling”), can be reformulated as in Eq. (5):

Due to \({\mathcalligra{s}}\) and \({{\mathcalligra{v}}}_{{\mathcalligra{s}}}\) are exclusively correlated, and \({\mathcalligra{k}}\) is a constant, the complexity of the problem is reduced to \({\rm O}(\left|\mathcal{S}\right|)\). This strategy regularizes the search space needed to find the best matches, making the multi-view optimization problem computational tractable even when dealing with the cameras in the continuous space.

Optimization procedure

Figure 5 shows the workflow of the proposed optimization method, which is based on a priorly developed PSO framework [26]. The framework is adapted to incorporate the proposed coverage model, a reconstruction quality metric, and an approximated camera model for efficient and continuous MVS optimization.

The optimization method starts with initializing a population of particles with each particle denoting a set of randomly sampled camera views based on the triangulated model (as in “Input model triangulation”) and the coverage constraints (“Topology-based coverage modeling”). Next, the fitness of each particle is evaluated through a reconstruction metric (detailed in “Reconstruction metric”). The set of the camera views with the maximal reconstruction quality is selected as the best particle. In [26], a greedy heuristic was developed to enhance the best particle and avoid the optimization being trapped at the local optima. However, this step is slow in the current form because the cameras' visibility/quality was sequentially rendered/evaluated.

In this study, an approximated camera model is developed to reduce the cost of visibility detection and speed up the optimization. Based on the updated global best particle, each particle in the population is recomputed using the PSO mechanism [30]. Each camera in the updated particle must also lie in the admissible space defined by the coverage constraints (“Topology-based coverage modeling”). The above steps iterate until the maximal number of iterations is reached (i.e., 15) or the fitness value of the global best particle does not improve for three consecutive iterations (i.e., convergence). The output is the desired set of camera views where the MVS quality at every model surface is maximized.

In the following sections, we first discuss how the reconstruction metric is calculated and then present the approximated camera model that significantly accelerates the optimization.

Reconstruction metric

In this subsection, we quantify the reconstruction metric \({\mathcalligra{h}}\) at each surface given a set of cameras. The metric estimates the MVS quality based on the two-view geometric analysis [22]. Technically, the metric is composed of two terms: the view-to-surfaces observation and the view-to-views triangulation. We discuss each term in detail as follows.

View-to-surfaces observation \(\left({{\mathcalligra{h}}}_{{\mathcalligra{o}}}\right)\): the view-to-surface observation measures the distance and the incidence angle between each camera view to the covered mesh surfaces (as presented in Eq. 6).

where \({{\mathcalligra{h}}}_{res}\) is the image resolution factor that measures the camera to surface distance, and \({{\mathcalligra{h}}}_{ang}\) is the image distortion factor that indicates the incidence angle between the camera direction and the surface normal. We constrain both factors within the range of \(\left[0, 1\right]\). Noted we do not provide the visibility check in Eq. (6) because the sampled cameras must already satisfy the coverage constraint (Eq. 1).

View-to-views triangulation \(\left({{\mathcalligra{h}}}_{{\mathcalligra{t}}}\right)\): for each camera view, the view-to-view triangulation factor seeks the \({\mathcalligra{m}}\) best matches from the candidate matching cameras in terms of the sufficient overlapping, the appropriate parallax, and the small baseline (as in Eq. 7):

where \({{\mathcalligra{h}}}_{bas}\) evaluates the baseline effects of a camera pair and \({{\mathcalligra{h}}}_{par}\) models the parallax dependency on the depth error. The \({{\mathcalligra{h}}}_{par}\) is measured as a Gaussian function defined based on [7]. We empirically set \(\rho \) as 28° for additional tolerance at large angles and let \({\mathcalligra{m}}\) equal to 4 as suggested in [6].

Finally, we concatenate the defined terms in Eqs. (6) and (7), and the reconstruction metric at each camera view/model surface is obtained, as shown in Eq. (8).

Approximated camera model

In MVS planning, visibility detection has been considered as the most expensive step [20, 22]. Given a set of cameras and the surface points, the visibility of each camera is computed through the ray casting operations at every surface point in two steps: (1) measuring if the point is located within the camera FOV; (2) checking whether there is an obstacle between the camera and the surface point. The time complexity of this operation is quadratic in terms of the number of cameras and the surface points. The proposed camera model reduces the computation cost while assuring the visibility detection quality by actively restricting the camera \({\mathcalligra{v}}\) visible points within \(\gamma \left({{\mathcalligra{s}}}_{{\mathcalligra{v}}}\right)\). To ensure the approximated camera does not exclude the good quality points, a photometric metric [31] is used for the evaluation.

Figure 6a shows an example of presented method on a building-scale geometry. We denote the surface points as \(P\) and the points within the neighbors of the camera covered surface as \({P}_{n} \left({P}_{n}\subset P\right)\), thus computation can be reduced to \(\left|{P}_{n}\right|/\left|P\right|\). Compared to the conventional method (i.e., full camera FOV), the proposed method only processes 16% of the computation (as in Fig. 6b) but covers more than 80% of the regions with the best observation quality (as in Fig. 6c). This strategy would perform even better for large-scale structures because the visibility calculation does not grow as the model size increases. Since only the central piece of the camera FOV is utilized for the computation, the proposed camera model increases the robustness to the real-world UAV surveying applications where the collected images might be shifted from the original capturing spots due to the existence of the localization errors.

Evaluation of the approximated camera model: a Model geometry with the camera covered surfaces and the neighbor surfaces (\(\left|{\varvec{P}}\right|:\) 12,700, \(\left|{{\varvec{P}}}_{{\varvec{n}}}\right|:\) 2376); b comparison of the visibility detection using the conventional (left) and the approximated (right) camera model; c comparison of the observation quality using the conventional (left) and the approximated (right) camera model

Based on the approximated camera model, the optimization can be facilitated by simultaneously evaluating the non-overlapped cameras. To identify such cameras, we construct a new graph \(\mathcal{G}{^{\prime}}\) where each node denotes a cluster of surfaces/cameras in \(\mathcal{G}\) (constructed in “Clustering candidate matchings”). Then, the edges in \(\mathcal{G}{^{\prime}}\) are denoted as the connections between the clusters. Based on the approximated camera model, the non-overlapped cameras can be incrementally selected by breadth-first searching (BFS) through the disconnected nodes in \(\mathcal{G}{^{\prime}}\). To avoid the order dependency of the selected cameras, a random seed is used as the starting node at each iteration. After each search, a set of non-overlapped cameras is obtained. Then, we subtract the correspondent nodes in \(\mathcal{G}{^{\prime}}\), and use the same strategy to recursively select the non-overlapped cameras from the rest nodes. The recursion terminates until the number of unselected cameras is less than \(\varepsilon \). For a mesh model that contains 100 triangular surfaces, normally this procedure reduces 6–9 times of the optimization when compared to the existing framework where the camera views are evaluated/updated sequentially. Based on this strategy, our method can compute the optimal cameras in minutes even on a personal PC with limited computational resources (e.g., memory, GPU, etc.).

Sampling complementary cameras

In most cases, we obtain high-quality, dense reconstruction from the optimized results. However, artifacts and disconnected reconstructions might exist at scenes containing locally sharp corners or deeply concave regions [20]. To account for this issue, a heuristic is proposed to adaptively sample complementary cameras at the poorly reconstructed regions. A workflow of the proposed heuristic is shown in Fig. 7. First, the reconstruction quality at each mesh surface, given a set of optimal camera views, is evaluated. The model surfaces with insufficient reconstruction quality (i.e., \({\mathcalligra{h}}<0.15\)) are identified. For each extracted surface, we add a complementary camera which forms good geometric reasoning with the existing camera. To achieve that, we sample several admissible cameras at the surface through the coverage model with the constraints of the parallax angle \(\varphi \) and baseline distance \({\mathcalligra{d}}\) between each newly sampled view and the existing camera for good image matching. Next, we evaluate the quality of each admissible camera based on the observation quality \({{\mathcalligra{h}}}_{{\mathcalligra{o}}}\). The camera view with the best quality is selected as the complementary camera. We iterate this procedure until complementary camera views are provided at every extracted surface. Finally, we merge the complementary cameras into the optimized camera set. In theory, we can repeat this process by re-evaluating the reconstruction quality at the complex regions. However, adding redundant cameras often result in diminished returns. Thus, we perform this process only once for all the experiments in this study.

Trajectory generation

For automated aerial reconstruction of real-world structures, the computed camera viewpoints need to be converted into a safe and efficient trajectory that a rotorcraft UAV can follow. For rotorcraft UAVs, the major constraint for designing a path connecting the viewpoints is the speed limit [25]. Thus, slow maneuvering is desired to increase the accuracy of the path following.

In this study, the trajectory generation process is divided into three steps. First, we construct a complete graph with each node representing a camera position and each edge indicating the Euclidian distance between the cameras. The start and end positions are determined based on the UAV take-off/landing spots. We employ the LKH-TSP solver [32] to compute the shortest path travels through all the camera positions without considering the site obstacles (Fig. 8a). Then, the collision detection is performed at each path segment using the hierarchy OBBTree [33]. If a collision is found, motion planning algorithms can be applied to reroute the path segment. The sampling-based stochastic searches such as rapidly exploring random tree star (RRT*) [34] can find the near-optimal solutions in short time. However, RRT* suffers from a slow convergence rate in the high state dimensions and large space, making the method inefficient to find the collision-free trajectory. In this study, we employ the informed RRT* [35] to speed up the pathfinding process while ensuring path optimality. The method biased the sampling domain within a decreased ellipsoidal subset, enabling the linear convergences of the optimal solution. After that, a collision-free path can be obtained by connecting every refined path segment (Fig. 8b). The connected path is piecewise linear and not tractable by a UAV with dynamic constraints. Thus, in the last step, we convert the path into a smooth trajectory using B-spline Curve interpolation [36]. The cubic curve is selected to connect each path segment with great flexibility. The output is a smoothed trajectory that allows a rotorcraft UAV to tightly follow at a relative low speed (e.g., 1–2 m/s).

Evaluation and results

Experimental setup

The selected scenes

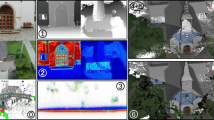

As shown in Fig. 9, three synthetic scenes and one real-world structure are selected to evaluate the proposed method. Using the synthetic scenes for evaluation has two advantages: (1) the ground truth models of the synthetic scenes are noise-free as opposed to other measurement tools (e.g., ground control points, GNSS-based measurement, or laser scanning), which enables the precise evaluation of the reconstruction quality (i.e., accuracy and completeness); (2) the synthetic environment is controlled so ensures the image quality is consistent and not affected by the random real-world environmental factors (e.g., sunlight direction, shadows, wind, and moving objects in the field). In this way, the performance evaluation of different path planning algorithms can be compared without concerning random impact from environmental factors. Due to these two advantages, synthetic models are widely adopted in performance evaluation in MVS reconstruction [19, 20, 22, 24]. The selected synthetic scenes are from free online sources and rendered in Unreal Engine 4: a 3D game engine with rich support of photo-realistic scenes. Since these scenes are not originally designed for multi-view reconstruction, we adjust the original modular templates so that sufficient salient features can be extracted from the rendered cameras. The three synthetic scenes are objects of (a) Res. [37], (b) Com. [38], and (c) Ind [39] (as in Fig. 9a–c), each with unique geometrical properties. Specifically, (a) is a two-story residential house. It is a small building structure containing many geometrical complex regions, such as the gable roof, the dormers, and the balcony, that are hard to be fully covered by the aerial images; (b) is a commercial building with a flat roof and rich surface textures. The model contains a concave area with sharp corners that is difficult to be triangulated due to the large parallax. The last scene (c) is an industrial oil/natural gas facility. It contains both the texture-less twin tank structures and the surrounded slim pipelines that are considered challenging geometries for multi-view reconstruction.

To validate the proposed method in the real-world environment, an actual church building (as in Fig. 9d) is selected for the evaluation. The building is larger compared to the synthetic scenes. It also contains rich geometrical details and surface variations across the building façades/roofs, making it a good fit for the evaluation under physical world condition.

Input parameters

Table 1 lists the input parameters of the proposed method. Compared to the synthetic scenes, additional tolerance is given to the real-world case study for the safety concerns due to the existence of the external noises (e.g., GPS precision, wind, ground effect, site obstacles, etc.).

Different types of input models are selected to evaluate the proposed method. For the Res. and Church, image reconstruction from an orbit flight path is used to obtain the rough geometries of the structures. However, we found the orbit paths fail to generate complete models for Com. and Ind (i.e., missing walls at the concave regions and the low coverage of the self-occluded pipe structures). Thus, we manually create the 2.5D models of these structures based on a rough estimation of the occupied area and the height of the model. These input models only provide the coarse estimates of the ground truths, which need to be further refined using the presented methods.

Images acquisition

For the synthetic scenes, the virtual camera is rendered through UnrealCV [40]. We set the camera at the resolution of \(4000\times 3000\) with the horizontal FOV as \({90}^{^\circ }\). The sunlight shadows, the light reflection, and the camera exposure are disabled throughout the image collection.

For the real-world application, the first step is to convert the computed trajectories from the local coordinates to the World Geodetic System (WGS-84). Next, the converted geographic coordinates are imported into a ground control station software. In this study, we select UgCS [41] from SPH Engineering as the ground station due to its flexibility in importing a sequence of waypoints with designated camera motions and the support of various types of drones. DJI Mavic 2 Zoom is selected as the aerial platform for the automated waypoints following. The onboard camera is a 4 K resolution CMOS image sensor. The drone is designed to fly at a low speed (1.5 m/s) to ensure the accurate path following and stay still at each viewpoint for 0.5 s to avoid the image blur. Due to the limited battery capacity as opposed to the designed path, multiple flights are needed to travel through every designed waypoint. We manually set the drone return to home when the battery level is less than 30%. After the battery replacement, we fly the drone to the last visited waypoint and continue the mission until every waypoint is visited.

3D reconstruction

After the image acquisition, the collected images are imported into Agisoft Metashape [42] for dense 3D reconstruction. The positions of the camera views (GPS for the real-world environment) are used to facilitate the photo-alignment steps. The repeated textures in the synthetic scenes can cause false alignments between the images. Such alignments need to be reset and re-aligned to avoid misaligned models. We set the quality as high both in the image alignment and dense point cloud reconstruction steps. For the 3D surface reconstruction, the interpolation mode and hole filling function are disabled to perverse the geometric details. We only output the dense point cloud for the Ind. scene since it is very costly to convert the slim structures (i.e., railings, pipelines, joints, ladders, etc.) into surface meshes without producing additional artifacts. Table 2 presents the general information and statistic of the selected structures, the type of the input geometry, and the reconstruction output.

Comparisons

Two methods are selected for benchmarking our approach: (1) the baseline Overhead and (2) the state-of-the-art NBV. Overhead is a commonly used path planning method for UAV photogrammetry and is widely used for benchmarking path planning algorithms [43]. Same as the prior work [19], the Overhead flight is designed as a lawnmower flight at a safe height above the scene followed by an orbit path to capture the oblique images. We uniformly distributed the camera views on both the lawnmower and the orbit path with camera views oriented towards the center of the scene. The \(80/80\) image overlapping between the adjacent views is required to ensure complete reconstruction. In this study, we implement a naive NBV method as presented in [19, 20, 44] to greedily select the vantage camera viewpoints from an ensemble of candidate cameras. The two-view quality evaluation [22] is used to guide the view selection process such that the compared results are majorly affected by the optimization methods instead of the quality criterion being selected. To make a fair comparison and avoid the unbounded images being collected in NBV, the number of the camera views estimated in our method is used as an additional termination condition in NBV optimization.

Evaluation methods

To quantitatively evaluate the reconstruction quality, the 3D models need to be initially transformed to the same coordinates of the ground truth. Such transformations are computed in a coarse-to-fine fashion: First, we roughly align the two models based on several well-distributed points (e.g., corners) both from the reconstructed model and the ground truth. Next, we refine this rough alignment through the iterative closest point (ICP) registration [45]. To provide a robust registration result, we embed the RANSAC [46] into the ICP to remove the outliers and guarantee the registration convergence. Based on the aligned models, we compare the quality of the reconstruction models based on two indicators: accuracy and completeness as in the previous works [22]. The accuracy (also denoted as precision) measures the maximal geometric distance between the reconstruction to the ground truth, given a percentage of the points. To measure the accuracy, we compute the error map which is the Euclidean distance between each point on the surface of the reconstructed model to the closest point on the ground truth. Then, we collect the distances from the whole point set, and compute the histogram map at 90%, 95%, and 99% of the point set. The completeness (also denoted as recall) measures the coverage ratio of the reconstruction to the ground truth, given a distance threshold. To calculate the completeness, we use the same strategy to compute the closest point error map between the point sets but count the percentage of the points within the pre-defined distances. We empirically set the thresholds as: 0.02 m, 0.05 m, and 0.2 m, to evaluate the completeness at different levels of details (LoDs). In the following, we use Acc. and Comp. as the accuracy and the completeness metrics, and ErrorAcc and ErrorComp as the correspondent error maps.

Terrestrial laser scanning

For the real-world experiment, terrestrial laser scanning (TLS) is used to obtain the actual geometry of the church. In this study, the selected laser scanner is Leica BLK360. The scanner ensures 4-mm accuracy at distances of up to 10 m. Due to the limited sensing range of the scanner, it is required to register multiple scans to obtain a complete model. We set the quality setting of each scan as high to ensure the high density, and make sure sufficient overlaps between two scans for accurate registration.

Results

Synthetic scenes

In Fig. 10, the input geometry, and the camera trajectories of the three synthetic scenes computed based on our method and the two comparison methods are presented. Same UAV departure and landing spots are used such that the distances of the trajectories computed using different methods are comparable. Table 3 presents the statistics of the collected images and the distance of the flight trajectories on different scenes. The results show that Overhead produces relatively more images due to the required image overlay. NBV is clamped at the designated cameras before the convergence, which validates that our method keeps the number of the collected images small. Among the three methods, Overhead generates the shortest trajectory due to the closeness of the arranged cameras. Compared to the NBV, the trajectories generated from our method are slightly shorter even though we do not constrain the travel budget in either implementation.

Figure 11 illustrates the 3D reconstructions of the synthetic scenes. One challenging region for 3D reconstruction (the yellow box) at each scene is picked to exemplify the details of the evaluations. Specifically, for Res., the selected region (as in Fig. 12) is the front wall and balcony. It is observed that Overhead fails to reconstruct the walls beneath the roof and the balcony due to these regions being obstructed from the overhead flight; NBV presents better overall results than Overhead, especially for covering the wall sections. However, the balcony is still poorly reconstructed. In contrast, our method is capable of fully recovering these regions and shows the best result at both the ErrorAcc and ErrorComp maps. For Com., a concave area is chosen (as in Fig. 13). Both NBV and our method present fewer errors at the building corners than Overhead. Compared to NBV, our method shows slightly better results in the model accuracy, especially in reconstructing the slope window awnings. For Ind., the gas tank (in Fig. 14) is selected due to the geometric complexity and the texture-less at the structure surface. The slim structures (e.g., railing, ladders, pipelines) located around the tanks make it even harder for photogrammetric reconstruction. Compared to the Overhead and NBV, our method shows the best results, especially at the model completeness. However, the method still fails to fully recover the detailed structures (e.g., ladders, railings). This might be caused by the relatively low detailed levels of the input geometries. Table 4 summarizes the quantitative evaluations of the reconstructions as opposed to the ground truth. The results further validate the observations: our method shows superior results at both Acc. and Comp., especially for the Res. and Com. scenes. We observe that the NBV shows slightly better Acc. (i.e., 95%, 99%) in the Ind. scene. It might be partially caused by the fact that more structures are recovered by our method (i.e., higher Comp.). In addition, both methods are primarily affected by the fidelity of the input geometry. The relatively lower Acc., especially at 95% marks compared to the other scenes, shows the potential improvements of the methods. Overall, the proposed method presents the superiority on the all-around reconstruction quality in terms of the more visual details and the fewer artifacts. This can be attributed to the proposed view planning strategy that cohesively optimizes the surface coverage and the stereo-matching quality at each model surface.

Real-world application

In this section, we discuss the results obtained from the real-world experiment (i.e., church). Figure 15 shows the trajectories uploaded to UgCS desktop for mission execution. To minimize the effects of the weather conditions, the images are collected in the mornings of three consecutive sunny days. Table 5 shows the statistics of the missions, including the number of images being collected, the number of flights needed (for battery replacement), and the overall duration of the mission (e.g., inflight duration and time required for battery replacement).

Figure 16 shows the 3D reconstruction based on the proposed method. Two regions (\({R}_{1}\) in blue and \({R}_{2}\) in red) are highlighted and scanned with the TLS for detailed comparison. Figure 17 shows the visual comparison of these highlighted regions between different methods. Among these methods, the proposed method generates fewer artifacts and recovers more geometric details such as the wall textures and the HVAC units in \({R}_{1}\), and the rooftop and the curved wall as in \({R}_{2}\)

To perform the quantitative evaluation, we manually crop the image-based reconstructions and compute the accuracy and completeness based on the scanning results. Table 6 shows the computed Acc. and Comp. For the sake of brevity, we only select the middle thresholds: 95% accuracy and 0.05 completeness, for evaluation. The results validate that there are noticeable improvements in the reconstruction quality between our method and the other two. Note that our method is inherently more robust to the positions/orientations errors (GPS errors, wind, UAV control system, etc.) because the approximated camera model only uses the central pieces of the camera FOV.

Figures 18 and 19 further demonstrated the color-coded ErrorAcc and ErrorComp map at each highlighted region. Compared to the other two methods, our method can better recover the building facades and corners (in ellipses). However, it is noticed that large errors still existed at areas closed to the windows/doors where the glass and mirrors can bring additional noises to the model quality. Adaptive strategies might be needed to handle such areas for accurate reconstruction.

Further evaluations

Finally, we evaluate the proposed method's optimization performance and runtime. In Fig. 20a, we visualize the convergence of the normalized reconstruction metric \({{\mathcalligra{h}}}^{*}\) over iterations for the selected scenes. It is observed that every optimization is converged by the end of the iteration. The fast convergence at the early iterations (i.e., 1–5) is inherited from the parallelizable PSO [26]. To properly compare the runtime efficiency of our algorithm as opposed to the existing state-of-the-arts (i.e., NBV), it is vital to implement the algorithms on the same hardware and software configurations. We implement both algorithms using Python 3.8 with the enabled CPU parallel computing using OpenMP [47]. VTK 9.0.3 [48] is utilized to render cameras' visibility and detect collisions. We perform all the experiments on a PC desktop with Intel CPU E5-2630 processor, 64 GB (DDR4) memory running on Ubuntu 18.04. Figure 20b compares the computation time (averaged from five runs) between NBV and the proposed method. The result demonstrates that our method generally takes significantly less time (around \(17\%\)) on all the selected scenes. Better results are obtained on larger structures (i.e., Church) which validates the scalability of the presented method. It is worth noting that recent works enabled aerial path planning within minutes [22], which is comparable to ours. However, the method requires a good initial flight and cutting-edge GPU support. In contrast, our method is fully automated (e.g., no user input) and can reach similar time spans on a personal desktop where only a multi-thread CPU is needed.

Conclusions

The results from the four studied cases suggest that the proposed method outperforms the baseline approach (i.e., Overhead) and the state-of-the-art (i.e., NBV) in terms of computational efficiency, level of automation, and 3D reconstruction quality. The versatility and robustness of the method are evaluated based on three selected synthetic structures and one real-world application.

Although this study is based on implementing a single UAV scenario, multi-UAV implementations can also be achieved by cooperatively optimizing the paths of each UAV while ensuring safety constraints (e.g., collision avoidance between the paths). Because this paper focuses on demonstrating the advantages of incorporating topology information in path planning, multi-UAV implementation will not be further discussed.

There are cases where the proposed method may not apply. First, the proposed method requires the input geometry triangulated as a connected surface mesh. Therefore, path planning needs to be calculated for each object individually for objects with disconnected surfaces (e.g., a group of multiple disconnected buildings). Second, the method assumes sufficient textures throughout the model surfaces (for feature extractions). This assumption might not hold for certain building envelope materials such as glass and mirrors, which can cause misalignments during the image registration.

Data availability

All data, models, or codes that support the findings of this study are available upon request.

References

el Meouche R, Hijazi I, Poncet PA, Abunemeh M, Rezoug M (2016) UAV photogrammetry implementation to enhance land surveying, comparisons and possibilities. In: International archives of the photogrammetry, remote sensing and spatial information sciences—ISPRS Archives, vol. 42(2W2). 10.5194/isprs-archives-XLII-2-W2-107-2016

Bulatov D, Häufel G, Meidow J, Pohl M, Solbrig P, Wernerus P (2014) Context-based automatic reconstruction and texturing of 3D urban terrain for quick-response tasks. ISPRS J Photogram Remote Sens. 93:1. https://doi.org/10.1016/j.isprsjprs.2014.02.016

Valkaniotis S, Papathanassiou G, Ganas A (2018) Mapping an earthquake-induced landslide based on UAV imagery; case study of the 2015 Okeanos landslide, Lefkada Greece. Eng Geol 245:1. https://doi.org/10.1016/j.enggeo.2018.08.010

Rakha T, Gorodetsky A (2018) Review of unmanned aerial system (UAS) applications in the built environment: towards automated building inspection procedures using drones. Autom Constr 93:1. https://doi.org/10.1016/j.autcon.2018.05.002

Seitz SM, Curless B, Diebel J, Scharstein D, Szeliski R (2006) A comparison and evaluation of multi-view stereo reconstruction algorithms. In: Proceedings of the IEEE computer society conference on computer vision and pattern recognition, vol. 1. https://doi.org/10.1109/CVPR.2006.19

Goesele M, Snavely N, Curless B, Hoppe H, Seitz SM (2007) Multi-view stereo for community photo collections. https://doi.org/10.1109/ICCV.2007.4408933

Furukawa Y, Curless B, Seitz SM, Szeliski R (2010) Towards internet-scale multi-view stereo. https://doi.org/10.1109/CVPR.2010.5539802

Schönberger JL, Zheng E, Frahm JM, Pollefeys M (2016) Pixelwise view selection for unstructured multi-view stereo. In: Lecture notes in computer science (including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics), vol 9907. LNCS. https://doi.org/10.1007/978-3-319-46487-9_31

Mostegel C, Fraundorfer F, Bischof H (2018) Prioritized multi-view stereo depth map generation using confidence prediction. ISPRS J Photogram Remote Sens 143:1. https://doi.org/10.1016/j.isprsjprs.2018.03.022

Munkelt C, Breitbarth A, Notni G, Denzler J (2010) Multi-view planning for simultaneous coverage and accuracy optimisation. https://doi.org/10.5244/C.24.118

Banta JE, Wong LM, Dumont C, Abidi MA (2000) A next-best-view system for autonomous 3-D object reconstruction. IEEE Trans Syst Man Cybern Part A Syst Humans. 30(5):589. https://doi.org/10.1109/3468.867866

Wenhardt S, Deutsch B, Angelopoulou E, Niemann H (2007) Active visual object reconstruction using D-, E-, and T-optimal next best views. https://doi.org/10.1109/CVPR.2007.383363

Dunn E, Frahm JM (2009) Next best view planning for active model improvement. In: British machine vision conference, 2009, pp 1–11. https://doi.org/10.5244/C.23.53

Vasquez-Gomez JI, Sucar LE, Murrieta-Cid R (2014) View planning for 3D object reconstruction with a mobile manipulator robot. https://doi.org/10.1109/IROS.2014.6943158

Martins FAR, García-Bermejo JG, Casanova EZ, Perán González JR (2005) Automated 3D surface scanning based on CAD model. Mechatronics 15:7. https://doi.org/10.1016/j.mechatronics.2005.01.004

Fan X, Zhang L, Brown B, Rusinkiewicz S (2016) Automated view and path planning for scalable multi-object 3D scanning. ACM Trans Graph 35:6. https://doi.org/10.1145/2980179.2980225

Schmid K, Hirschmüller H, Dömel A, Grixa I, Suppa M, Hirzinger G (2012) View planning for multi-view stereo 3D reconstruction using an autonomous multicopter. J Intell Robot Syst Theory Appl 65:1–4. https://doi.org/10.1007/s10846-011-9576-2

Hoppe C et al (2012) Photogrammetric camera network design for micro aerial vehicles. In: Computer vision winter workshop, 2012, pp 1–3

Roberts M et al. (2017) Submodular trajectory optimization for aerial 3D scanning. In: Proceedings of the IEEE international conference on computer vision. https://doi.org/10.1109/ICCV.2017.569

Hepp B, Niebner M, Hilliges O (2018) Plan3D: viewpoint and trajectory optimization for aerial multi-view stereo reconstruction. ACM Trans Graph 38(1):1. https://doi.org/10.1145/3233794

Koch T, Körner M, Fraundorfer F (2019) Automatic and semantically-aware 3D UAV flight planning for image-based 3D reconstruction. Remote Sens 11:13. https://doi.org/10.3390/rs11131550

Smith N, Moehrle N, Goesele M, Heidrich W (2018) Aerial path planning for urban scene reconstruction: a continuous optimization method and benchmark. https://doi.org/10.1145/3272127.3275010

Scharstein D, Szeliski R (2002) A taxonomy and evaluation of dense two-frame stereo correspondence algorithms. Int J Comput Vision 47:7–42. https://doi.org/10.1023/A:1014573219977

Zhou X et al (2020) Offsite aerial path planning for efficient urban scene reconstruction. ACM Trans Graph 39(6):1–16

Bircher A et al (2015) Structural inspection path planning via iterative viewpoint resampling with application to aerial robotics. In: Proceedings–IEEE international conference on robotics and automation. https://doi.org/10.1109/ICRA.2015.7140101

Shang Z, Bradley J, Shen Z (2020) A co-optimal coverage path planning method for aerial scanning of complex structures. Expert Syst Appl 158:113535. https://doi.org/10.1016/j.eswa.2020.113535

Valette S, Chassery JM (2004) Approximated centroidal voronoi diagrams for uniform polygonal mesh coarsening. Comput Graph Forum 23:3. https://doi.org/10.1111/j.1467-8659.2004.00769.x

Bridson R (2007) Fast poisson disk sampling in arbitrary dimensions. In: ACM SIGGRAPH sketches, p 1. https://doi.org/10.1145/1278780.1278807

Tziavou O, Pytharouli S, Souter J (2018) Unmanned Aerial Vehicle (UAV) based mapping in engineering geological surveys: considerations for optimum results. Eng Geol 232:1. https://doi.org/10.1016/j.enggeo.2017.11.004

Kennedy J, Eberhart R (1995) Particle swarm optimization. In: IEEE international conference on neural networks—conference proceedings, vol 4. https://doi.org/10.4018/ijmfmp.2015010104.

Wang C, Qi F, Shi G (2011) Observation quality guaranteed layout of camera networks via sparse representation. https://doi.org/10.1109/VCIP.2011.6116043

Helsgaun K (2017) An extension of the Lin–Kernighan–Helsgaun TSP solver for constrained traveling salesman and vehicle routing problems. Rosklide, 2017. [Online]. Available: http://akira.ruc.dk/~keld/research/LKH/LKH-3_REPORT.pdf

Gottschalk S, Lin MC, Manocha D (1996) OBBTree: a hierarchical structure for rapid interference detection. In: Proceedings of the 23rd annual conference on computer graphics and interactive techniques, pp 171–180

Karaman S, Frazzoli E (2011) Sampling-based algorithms for optimal motion planning. Int J Robot Res 30:7. https://doi.org/10.1177/0278364911406761

Gammell JD, Srinivasa SS, Barfoot TD (2014) Informed RRT∗: optimal sampling-based path planning focused via direct sampling of an admissible ellipsoidal heuristic. https://doi.org/10.1109/IROS.2014.6942976

de Boor C (1972) On calculating with B-splines. J Approx Theory 6(1):1. https://doi.org/10.1016/0021-9045(72)90080-9

Dokyo (2016) Modular neighborhood pack. Unreal Engine Marketplace. https://www.unrealengine.com/marketplace/en-US/product/modular-neighborhood-pack

PolyPixel (2014) Urban city. Unreal Engine Marketplace, 2014. https://www.unrealengine.com/marketplace/en-US/product/urban-city

Yuriy B (2017) Industrial vertical vessel. Unreal engine marketplace. https://www.unrealengine.com/marketplace/en-US/product/industrial-vertical-vessel

Qiu W et al (2017) UnrealCV: virtual worlds for computer vision. https://doi.org/10.1145/3123266.3129396

SPH Engineering (2021) UgCs

Agisoft LLC. Agisoft Metashape user manual: professional edition, Version 1.6. Agisoft LLC. 2020. [Online]. Available: https://www.agisoft.com/pdf/metashape-pro_1_6_en.pdf

Cabreira TM, Brisolara LB, Ferreira Paulo R (2019) “Survey on coverage path planning with unmanned aerial vehicles. Drones 3:1. https://doi.org/10.3390/drones3010004

Peng C, Isler V (2019) Adaptive view planning for aerial 3D reconstruction. In: Proceedings—IEEE international conference on robotics and automation, vol 2019-May. https://doi.org/10.1109/ICRA.2019.8793532

Besl PJ, McKay ND (1992) A method for registration of 3-D shapes. IEEE Trans Pattern Anal Mach Intell 14(2):239–256. https://doi.org/10.1109/34.121791

Fischler MA, Bolles RC (1981) Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Commun ACM 24(6):381–395. https://doi.org/10.1145/358669.358692

Dagum L, Menon R (1998) OpenMP: an industry standard API for shared-memory programming. IEEE Comput Sci Eng 5:1. https://doi.org/10.1109/99.660313

Schroeder W, Martin K, Lorensen B (2018) The visualization toolkit (VTK). Open Source

Acknowledgements

We acknowledge partial support from the Competitive Academic Agreement Program (CAAP) of Pipeline and Hazardous Materials Safety Administration (PHMSA) of U.S. Department of Transportation under contract number 693JK31950006CAAP.

Funding

This article was funded by Pipeline and Hazardous Materials Safety Administration (693JK31950006CAAP).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Shang, Z., Shen, Z. Topology-based UAV path planning for multi-view stereo 3D reconstruction of complex structures. Complex Intell. Syst. 9, 909–926 (2023). https://doi.org/10.1007/s40747-022-00831-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40747-022-00831-5