Abstract

This paper develops a quantile hidden semi-Markov regression to jointly estimate multiple quantiles for the analysis of multivariate time series. The approach is based upon the Multivariate Asymmetric Laplace (MAL) distribution, which allows to model the quantiles of all univariate conditional distributions of a multivariate response simultaneously, incorporating the correlation structure among the outcomes. Unobserved serial heterogeneity across observations is modeled by introducing regime-dependent parameters that evolve according to a latent finite-state semi-Markov chain. Exploiting the hierarchical representation of the MAL, inference is carried out using an efficient Expectation-Maximization algorithm based on closed form updates for all model parameters, without parametric assumptions about the states’ sojourn distributions. The validity of the proposed methodology is analyzed both by a simulation study and through the empirical analysis of air pollutant concentrations in a small Italian city.

Similar content being viewed by others

1 Introduction

Since their introduction in the 1960s, Hidden Markov Models (HMMs, see MacDonald and Zucchini 1997; Cappé et al. 2006; Zucchini et al. 2016) have been successfully implemented in a wide range of applications for the analysis of time series data. This class of models is described by an observable stochastic process whose dynamic is governed, completely or partially, by a latent unobservable Markov chain. Owing to their mathematical tractability and the availability of efficient computational procedures, the use of HMMs is well justified when the researcher is interested in inference and/or predictions about the latent process based on the observed one. For a detailed survey of the literature and fields of application, please see MacDonald and Zucchini 1997; Ephraim and Merhav 2002; Cappé et al. 2006; Maruotti 2011; Bartolucci et al. 2012; Zucchini et al. 2016 and Maruotti and Punzo (2021).

One immediate consequence of the Markov property is that in any HMM, the sojourn time (also defined as state duration or dwell-time), that is, the number of consecutive time points that the Markov chain spends in a given state, is implicitly geometrically distributed (Langrock and Zucchini 2011; Zucchini et al. 2016). Despite the popularity of HMMs, this assumption may not be realistic in many applications which can lead to biased parameter estimates and deteriorate the states classification performance due to a misspecification of the dynamic of the hidden process. Bulla and Bulla (2006) and Maruotti et al. (2019), for instance, show the inability of HMMs to model temporal dependence and reproduce empirical characteristics in real-world data, especially when the probability mass function of sojourn times is far from being geometric.

Motivated by these considerations, Hidden Semi-Markov Models (HSMMs, see Yu 2015) are designed to relax this condition by allowing the Sojourn Distributions (SDs) to be modeled directly by the researcher using more flexible parametric or nonparametric distributions.

Typical choices include families of discrete (semi-)parametric distributions or, alternatively, one can avoid distributional assumptions by estimating the SD probability mass functions based on the observations (Sansom and Thomson 2001; Guédon 2003; Pohle et al. 2022). Combining the increased flexibility to capture a wide range of distributional shapes of the SDs with the well-known advantages of HMMs, HSMMs constitute a versatile framework in several spheres of application (see Guédon 2003; Barbu and Limnios 2009; Bulla et al. 2010; O’Connell and Højsgaard 2011; Yu 2015; Maruotti and Punzo 2021 and the references therein).

In the regression context where covariates are available, both HMMs and HSMMs have also been extended to include a set of predictors by introducing state-dependent regression parameters that evolve over time according to the unobserved process (Hamilton 1989; Yu 2015; Zucchini et al. 2016).

This model specification permits to investigate the dynamics of the hidden state sequence and, at the same time, allows to examine state-specific covariate effects in the observable process, providing a useful modeling framework to capture unobserved time-dependent heterogeneity. Typically, a linear model targeting the conditional mean of the dependent variable given covariates is specified. The assumptions underlying traditional linear regression models, however, are seldom satisfied in real data which often exhibit skewness, heavy tails and outliers. Moreover, the effect of the covariates can differ greatly between different parts of the response distribution. Therefore, when the aim of the research focuses not only at the center of the response distribution but also, and especially in the tails, quantile regression (Koenker and Bassett 1978) represents an interesting alternative to standard mean regression. This method provides a way to model the conditional quantiles of a response variable with respect to the covariates in order to have a more complete picture of the entire conditional distribution compared to ordinary least squares. In the univariate quantile regression framework, both the classical and Bayesian inferential approaches have been proposed in the literature to estimate the model parameters. In the frequentist setting, the inferential approach relies on the minimization of the asymmetric loss function (see Koenker and Bassett 1978) while, in the Bayesian setting and in a likelihood inferential approach, the Asymmetric Laplace (AL) distribution has been introduced as a likelihood inferential tool. The two approaches are well-justified by the relationship between the quantile loss function and the AL density. Indeed, Yu and Moyeed (2001) showed that the minimization of the quantile loss function is equivalent, in terms of parameter estimates, to the maximization of the likelihood associated with the AL density. For a detailed review and list of references, Koenker (2005); Luo et al. (2012); Bernardi et al. (2015) and Koenker et al. (2017) provide an overview of the most used quantile regression techniques in both the classical and Bayesian settings. In addition, quantile regression methods have also been generalized to account for serial heterogeneity. In the analysis of longitudinal data, Farcomeni (2012) and Marino et al. (2018) consider univariate linear quantile models where unobserved sources of time-varying heterogeneity are captured by means of state-dependent coefficients evolving according to a finite-state homogeneous hidden Markov chain. Further, Ye et al. (2016) and Maruotti et al. (2021) propose a (semi-)Markov quantile regression to model the regime-switching effect of the regression coefficients in financial and environmental time series.

When multivariate response variables are concerned, the existing literature on quantile regression is less extensive since there is no “natural” ordering in a p-dimensional space, for \(p>1\). As a consequence, the univariate quantile regression method does not straightforwardly extend to higher dimensions. Nevertheless, in most situations of practical interest, the purpose of the matter being investigated lies in describing the distribution of a multivariate response variable.

For this reason the search for a satisfactory notion of multivariate quantile has led to a flourishing literature on this topic despite its definition is still a debatable issue (see Serfling 2002; Kong and Mizera 2012; Koenker et al. 2017; Stolfi et al. 2018; Chavas 2018; Charlier et al. 2020; Merlo et al. 2021, 2022 and the references therein for relevant studies).

Recently, Petrella and Raponi (2019) generalized the AL distribution inferential approach of the univariate quantile regression to a multivariate framework by using the Multivariate Asymmetric Laplace (MAL) distribution defined in Kotz et al. (2012). Employing the MAL distribution as a likelihood based inferential tool, the authors sidestep the problem of defining the quantiles of a multivariate distribution, and instead implement joint estimation for the univariate quantiles of the conditional distribution of a multivariate response variable given covariates, accounting for possible correlation among the responses.

The purpose of this article is to extend the work of Petrella and Raponi (2019) by introducing a HSMM for the analysis of multivariate time series. More formally, we develop a Quantile Hidden Semi-Markov Model (QHSMM) to jointly estimate the quantiles of the univariate conditional distributions of a multivariate response, accounting for the dependence structure between the outcomes. In particular, to capture the temporal evolution of unobserved heterogeneity, we introduce state-dependent coefficients in the regression model that evolve over time according to a latent semi-Markov process. In order to prevent inconsistent parameter estimates due to misspecification of the SDs, we adopt the nonparametric approach of Guédon (2003) where they are left unspecified and approximated by discrete distributions concentrated on a finite set of time points estimated from the data. Within this scheme, our modeling framework can be thought of as a model-based clustering approach for data showing time-varying heterogeneity, where the interest lies in the effect of cluster-specific covariates on various quantile levels.

Throughout the paper we propose to estimate the model parameters with a Maximum Likelihood (ML) approach by using the MAL distribution as working likelihood in a regression framework. Specifically, as in Petrella and Raponi (2019) and Merlo et al. (2022), we consider the mixture representation of the MAL distribution which allows us to build an efficient Expectation-Maximization (EM) algorithm with the E- and M-step updates in closed form for all model parameters.

Using simulation experiments, we illustrate the validity of our approach under different data generating processes and evaluate its ability in recovering the true values of the regression coefficients, the true classification and number of latent states.

In the empirical analysis, we apply the proposed methodology to investigate the effect of a collection of atmospheric variables on the daily concentrations of three major pollutants, i.e., particulate matter, ozone and nitrogen dioxide measured in Rieti (Italy) from 2019 to 2021. Our method allows us to: (i) assess how the effects of atmospheric variables can vary across different (more extreme) quantiles of the conditional distribution of air pollutants, accounting for their dependence structure; (ii) summarize the data by means of a reduced number of latent regimes associated with different concentration levels of chemicals.

The paper is organized as follows. In Sect. 2, we introduce the proposed model. Sect. 3 illustrates the EM-based ML approach to estimating the model parameters and the computational details of the algorithm. In Sect. 4 we present the simulation results, while Sect. 5 discusses the empirical application. Finally, Sect. 6 concludes.

2 Methodology

Let \(\{S_{t}\}_{t = 1}^T\) denote a finite-state hidden semi-Markov chain defined over a discrete state space \({\mathcal {S}} = \{1, \dots , K \}\). The latent process \(\{S_{t}\}_{t = 1}^T\) is constructed as follows. A homogeneous hidden Markov chain with K states models the transitions between different states, with initial probabilities, \(\pi _k = \text {Pr} (S_1 = k)\), and transition probabilities

with \(\sum _{k=1}^K \pi _{jk} = 1\), \(\pi _{jk} \ge 0\), for every \(k=1,\dots ,K\) and \(\pi _{jj} = 0\), i.e., the diagonal elements of the transition probability matrix are zeros. More concisely, we collect the initial and transition probabilities in the K-dimensional vector \(\varvec{\pi }\) and in the \(K \times K\) matrix \(\mathbf {Q}\), respectively. Because the unobserved process is semi-Markovian, only transitions from one state to another are governed by the transition probabilities in (1), but the duration of a stay in a state is modeled by a separate SD. Specifically, let us denote by \(d_k(u)\) the SD, i.e., the probability the hidden process \(\{S_{t}\}_{t = 1}^T\) spends u consecutive time steps in the k-th state, as follows:

where \(U_k\) corresponds to the maximum sojourn time of the hidden chain in state k. Let us also denote \(\mathbf{U}= (U_1, \dots , U_K)\) the K-dimensional vector collecting all state-specific maximum sojourn times.

HSMMs allow for great flexibility as the SD in (2) is directly specified by the researcher and estimated from the observed data. The SD can be chosen from a large variety of parametric distributions, such as the shifted-Poisson, the shifted-negative binomial distributions or, in the particular case where \(d_k (u)\) is assumed to be geometrically distributed, a HSMM reduces to a HMM with the most likely sojourn time for every state being 1 (Zucchini et al. 2016). Parametric distributions, however, might lack the flexibility to capture key features of empirical SDs in the data, increasing state misclassification rates and inducing substantial bias. Alternatively, semi- and nonparametric data-driven approaches can be adopted (see Sansom and Thomson 2001; Guédon 2003; Langrock and Zucchini 2011; Maruotti et al. 2021) to provide sufficient additional flexibility in comparison to HMMs and accommodate complex distributional shapes.

To build the proposed model, let \({\mathbf {Y}}_{t} = (Y_{t}^{(1)}, \dots , Y_{t}^{(p)})'\) be a continuous observable p-variate response variable and \({\mathbf {X}}_{t} = (1, X_{t}^{(2)}, \dots , X_{t}^{(m)})'\) be a m-dimensional vector of covariates, with the first element being the intercept, at time \(t = 1, \dots , T\). The process \(\{\mathbf{Y}_{t}\}_{t = 1}^T\) represents the state-dependent process of the HSMM and, conditional on the hidden states, fulfills the independence property:

where \(f_{\mathbf{Y}} (\mathbf{y}_{t} \mid \mathbf {x}_{t}, S_{t} = s_t)\) is the conditional distribution of \({\mathbf {Y}}_{t}\) given the covariates \({\mathbf {X}}_{t}\) and the hidden state occupied at time t.

As mentioned in Section 1, our objective is to provide joint estimation of the p quantiles of the univariate conditional distributions of \({\mathbf {Y}}_{t}\), taking into account time-dependent heterogeneity and potential correlation among the components of \({\mathbf {Y}}_{t}\). Given p quantile indexes \(\varvec{\tau } = (\tau _1, \dots , \tau _p)\), with \(\tau _j \in (0,1)\), \(j = 1, \dots , p\), the Quantile Hidden Semi-Markov Model (QHSMM) is defined as follows:

with \(\varvec{\beta }_k (\varvec{\tau }) = (\varvec{\beta }_{k}^{(1)} (\varvec{\tau }), \dots , \varvec{\beta }_{k}^{(p)} (\varvec{\tau }))\) being a state-specific \(p \times m\) matrix of unknown regression coefficients that evolves over time according to the hidden process \(S_{t}\) and takes one of the values in the set \(\{ \varvec{\beta }_1 (\varvec{\tau }), \dots , \varvec{\beta }_K (\varvec{\tau }) \}\), and where \(\epsilon _{tk} (\varvec{\tau })\) denotes a p-dimensional vector of error terms with univariate component-wise quantiles (at fixed levels \(\tau _1,\dots , \tau _p\), respectively) equal to zero.

Generalizing the approach of Petrella and Raponi (2019), as conditional distribution of \(\mathbf{Y}_t\) we consider a Multivariate Asymmetric Laplace (MAL) distribution (see Kotz et al. 2012). In detail, based on (4) we assume \(\mathbf{Y}_t \mid \mathbf {X}_{t} = \mathbf {x}_{t}, S_{t} = k \sim \mathcal {MAL}_p (\varvec{\mu }_{tk}, \mathbf {D}_k {\varvec{\tilde{\xi }}}, \mathbf {D}_k {\varvec{\Sigma }}_k \mathbf {D}_k)\) whose probability density function is given by:

where, for each time occasion \(t=1,\dots ,T\) and state \(k=1,\dots ,K\), the location parameter \(\varvec{\mu }_{tk}\) is defined by the linear model:

with \(\mathbf {D}_{\mathbf {k}} \tilde{{\varvec{\xi }}}\) being the skewness parameter, \(\mathbf{D}_k= \text{ diag }[\delta _{k1} ,\dots , \delta _{kp}]\), \(\delta _{kj}>0\), and \( \tilde{{\varvec{\xi }}}= ({\tilde{\xi }}_1,\dots ,{\tilde{\xi }}_p)'\) having generic element \({\tilde{\xi }}_j= \frac{1- 2 \tau _j}{\tau _j(1 - \tau _j)}\), \(j=1,\dots , p\). \({\varvec{\Sigma }}_k\) is a \(p \times p\) positive definite matrix such that \({\varvec{\Sigma }}_k = \varvec{\Lambda } \varvec{\Psi }_k \varvec{\Lambda }\), with \(\varvec{\Psi }_k \) being a \(p \times p\) state-specific correlation matrix and \(\varvec{\Lambda }= \text{ diag }[\sigma _1,\dots , \sigma _p]\), with \(\sigma _j^2= \frac{2}{\tau _j (1 - \tau _j)}\), \(j=1,\dots , p\). Moreover, \({\tilde{m}}_{tk}= (\mathbf {y}_{t} - \varvec{\mu }_{tk})' (\mathbf{D}_k {\varvec{\Sigma }}_k \mathbf{D}_k)^{-1}(\mathbf {y}_{t} - \varvec{\mu }_{tk})\), \({\tilde{d}}_k =\mathbf {{\tilde{\xi }}}' {\varvec{\Sigma }}_k^{-1} {\varvec{\tilde{\xi }}}\), and \(K_{\nu }(\cdot )\) denotes the modified Bessel function of the third kind with index parameter \(\nu = (2-p)/2\).

One of the key benefits of the MAL distribution is that, using (4) and (5), and following Kotz et al. (2012), the \(\mathcal {MAL}_p (\varvec{\mu }, \mathbf {D} {\varvec{\tilde{\xi }}}, \mathbf {D} {\varvec{\Sigma }} \mathbf {D})\) can be written as a location-scale mixture with the following representation:

where \(\mathbf{Z}\sim {{{\mathcal {N}}}}_p(\mathbf{0}_p, \mathbf{I}_p)\) denotes a p-variate standard Normal distribution and \({\tilde{C}} \sim \text{ Exp }(1)\) has a standard exponential distribution, with \(\mathbf{Z}\) being independent of \({\tilde{C}}\). In particular, the constraints imposed on \( \tilde{{\varvec{\xi }}}\) and \(\varvec{\Lambda }\) guarantee that the j-th element of \(\varvec{\mu }_{tk}\), \(\mu _{tkj}\), is the \(\tau _j\)-th conditional quantile function of \(Y_{t}^{(j)}\) given \(S_{t} = k\), for \(k=1,\dots ,K\) and \(j = 1,\dots , p\), and represent necessary conditions for model identifiability for any fixed quantile level \(\tau _1, \dots , \tau _p\), as stated in the next proposition.

Proposition 1

Let \(\mathbf{Y}_t \mid \mathbf {X}_{t} = \mathbf {x}_{t}, S_{t} = k \sim \mathcal {MAL}_p (\varvec{\mu }_{tk}, \mathbf {D}_k {\varvec{\tilde{\xi }}}, \mathbf {D}_k {\varvec{\Sigma }}_k \mathbf {D}_k)\), for \(k=1,\dots ,K\), where K is a positive integer, \(\tilde{{\varvec{\xi }}}= (\tilde{\xi }_1,\dots ,{\tilde{\xi }}_p)'\) with \({\tilde{\xi }}_j= \frac{1- 2 \tau _j}{\tau _j(1 - \tau _j)}\) being known for any fixed value of \(\tau _j\). Furthermore, \({\varvec{\Sigma }}_k = \varvec{\Lambda } \varvec{\Psi }_k \varvec{\Lambda }\) is a \(p \times p\) positive definite matrix with \(\varvec{\Psi }_k\) being an unknown \(p \times p\) correlation matrix and \(\varvec{\Lambda }= \text{ diag }[\sigma _1,\dots , \sigma _p]\), with fixed element \(\sigma _j^2= \frac{2}{\tau _j (1 - \tau _j)}\), \(j=1,\dots , p\). Then, the model in (2)-(6) is identified.

Proof

See Proof of Proposition 1 in Appendix. \(\square \)

In comparison with other methods in the literature, the proposed modeling framework includes the homogeneous joint quantile regression approach of Petrella and Raponi (2019) when \(K=1\) and it reduces to the univariate hidden semi-Markov-switching quantile regression of Maruotti et al. (2021) when \(p=1\). Naturally, when a geometric SD is assumed for all latent states, we call our methodology the Quantile Hidden Markov Model (QHMM).

3 Maximum likelihood estimation and inference

In this section we introduce a ML approach to making inference on model parameters. As is usually done in the literature in the presence of latent variables, we propose a suitable likelihood-based EM algorithm (Dempster et al. 1977). To hedge against possibly biased inference from incorrect parametric assumptions on the SDs, we estimate the sojourn probabilities nonparametrically following Guédon (2003). In addition, we show that both the E- and M-step updates of the algorithm can be obtained in closed form by exploiting the hierarchical representation of the MAL distribution in (7) under the constraints on \(\mathbf {{\tilde{\xi }}}\) and \(\varvec{\Lambda }\), hence reducing the computational burden compared to direct maximization of the likelihood. We illustrate the EM algorithm to fit the more general QHSMM but it can also be employed for the QHMM by assuming a geometric SD. To ease the notation, unless specified otherwise, we omit the quantile levels vector \(\varvec{\tau }\), yet all model parameters are allowed to depend on it. All the proofs are collected in the Appendix.

Let us denote by \({\varvec{\Phi }}_{\varvec{\tau }} = (\varvec{\beta }_1,\dots ,\varvec{\beta }_K, \mathbf {D}_1,\dots , \mathbf {D}_K, \varvec{\Psi }_1, \dots , \varvec{\Psi }_K, \varvec{\pi }, \mathbf {Q}, d_1 (u), \dots , d_K (U_K))\) the set of model parameters. For any fixed \(\varvec{\tau }\), number of hidden states K and maximum sojourn times \(\mathbf{U}\), we use the MAL representation in (7) to express the complete-data likelihood as follows:

where \({\tilde{C}}\) is a latent variable that follows an exponential distribution with parameter 1, \(s^\star _r\) is the r-th visited state, \(u_r\) is the time spent in that state (i.e., the duration of the r-th visit) and \(R-1\) is the number of state changes up to time T. Following Guédon (2003), the survivor function \(D_k(u)\) for the sojourn time in state k is defined as:

The survivor function sums up the individual probability masses of all possible sojourns of length \(v \ge u\) and it has several advantages. Firstly, we do not have to assume that the process is leaving a state immediately after the upper endpoint T. Secondly, it provides a more accurate prediction of the last state visited, which is important when the data analysis wishes to estimate the most recently visited state, and improves parameter estimation (O’Connell and Højsgaard 2011).

3.1 The EM algorithm

The EM algorithm alternates between performing an expectation (E) step, which defines the expectation of the complete log-likelihood function evaluated using the current estimates of the parameters, and a maximization (M) step, which computes parameter estimates by maximizing the expected complete log-likelihood obtained in the E-step. The expected complete log-likelihood function and the optimal parameter updates are given in the following propositions.

Given the representation in (8), for the implementation of the algorithm we introduce the following quantities. We define the probability of being in state k at time t given the observed sequence as:

The probability the process left state j at time \(t-1\) and entered state k at time t given the observed sequence is:

Finally, let us denote by \(\eta _{k} (u)\) the expected number of times the process spends u consecutive time steps in state k as:

Then, the expected log-likelihood for the complete data is presented in the following proposition.

Proposition 2

For any fixed \(\varvec{\tau }=(\tau _1,\dots ,\tau _p)\), number of hidden states K and maximum sojourn times \(\mathbf{U}= (U_1, \dots , U_K)\), the expected complete log-likelihood function (up to additive constants) is:

where

with

Therefore, the EM algorithm can be implemented as follows:

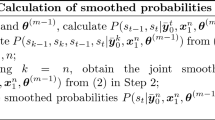

E-step: At the generic r-th iteration of the algorithm, let \(\hat{{\varvec{\Phi }}}^{(r-1)}_{\varvec{\tau }}\) denote the current parameter estimates. Then, conditionally on the observed data and \(\hat{{\varvec{\Phi }}}^{(r-1)}_\tau \), the quantities, \(\gamma _{tk}\) in (10) and \(v_{tjk}\) in (11), can be calculated via a dynamic programming method known as the forward-backward algorithm (see, e.g., Levinson et al. 1983), while \(\eta _{k} (u)\) in (12) can be computed using the efficient adaptation of the forward-backward algorithm provided by Guédon (2003). Similarly, the conditional expectations \({{\tilde{c}}}_{tk}\) and \({{\tilde{z}}}_{tk}\) in (14) are considered; see the Appendix. We denote such quantities as \(\hat{\gamma }^{(r)}_{tk}, {\hat{v}}^{(r)}_{tjk}, {\hat{\eta }}^{(r)}_{k} (u), \hat{{\tilde{c}}}^{(r)}_{tk}\) and \(\hat{{\tilde{z}}}^{(r)}_{tk}\).

M-step: Substitute them in (13) to maximize \({\mathcal {O}}({\varvec{\Phi }}_{\varvec{\tau }} \mid \hat{{\varvec{\Phi }}}^{(r-1)}_{\varvec{\tau }})\) with respect to \({\varvec{\Phi }}_{\varvec{\tau }}\), and obtain the updated parameter estimates. Because the expected complete-data log-likelihood in (13) decomposes into orthogonal subproblems, the maximization with respect to the regression coefficients, the parameters of the MAL distribution and the hidden process, can be performed separately. Thus, the initial probabilities \(\pi _j\) and transition probabilities \(\pi _{jk}\) are estimated by:

To update the state-specific SD, we follow the nonparametric approach of Guédon (2003). In particular, we set the latent state duration densities to be discrete nonparametric distributions with arbitrary point mass assigned to the feasible duration values, that is, the SD is estimated as follows:

Finally, the M-step updates of the parameters in the regression equation \(\{ \varvec{\beta }_j, \mathbf{D}_j, {\varvec{\Sigma }_j} \}_{j=1}^K\), are given in the following proposition.

Proposition 3

At the generic r-th iteration, the values of \(\varvec{\beta }_j, \mathbf{D}_j\) and \(\varvec{\Sigma }_j\) maximizing (13) are:

where \(\hat{\varvec{\mu }}^{(r)}_{tj} = \hat{\varvec{\beta }}^{(r)}_j {\mathbf {X}}_{t}\).

For the j-th state, the elements \(\delta _{jk}, k = 1,\dots ,p\), of the diagonal scale matrix \(\mathbf{D}_j\) are estimated by:

where \(\rho _\tau (\cdot )\) is the quantile check function of Koenker and Bassett (1978):

with \({\varvec{1}}(\cdot )\) being the indicator function and \(\hat{{\mu }}^{(r)}_{tjk}\) being the k-th element of the vector \(\hat{\varvec{\mu }}^{(r)}_{tj}\).

The E- and M-steps are alternated until convergence, that is when the observed likelihood between two consecutive iterations is smaller than a predetermined threshold. In this paper, we set this threshold criterion equal to \(10^{-5}\).

Following Maruotti et al. (2021), for fixed \(\varvec{\tau }\), K and \(\mathbf{U}\), we initialize the EM algorithm by providing the initial states partition, \(\{ S^{(0)}_t \}_{t=1}^T\), according to a Multinomial distribution with probabilities 1/K. From the generated partition, the off-diagonal elements of \(\hat{\mathbf{Q}}^{(0)}\) are computed as proportions of transition. We obtain \(\hat{ \varvec{\beta }}^{(0)}_k\) and \(\hat{ \mathbf{D}}^{(0)}_k\) by fitting univariate quantile regressions on observations within state k, while \(\hat{ \varvec{\Psi }}^{(0)}_k\) is set equal to the empirical correlation computed on observations in the k-th state. The initial SDs are estimated from \(\{ S^{(0)}_t \}_{t=1}^T\) assuming a geometric distribution as in HMMs. To avoid convergence to local maxima and better explore the parameter space, we fit the proposed QHSMM using a multiple random starts strategy with different starting partitions and retain the solution corresponding to the maximum likelihood value.

Once we computed the ML estimates of the model parameters \(\hat{{\varvec{\Phi }}}_{\varvec{\tau }}\), we calculate standard errors using a parametric bootstrap approach (Visser et al. 2000). That is, we refitted the model to H bootstrap samples and approximate the standard error of each model parameter with its corresponding standard deviation computed on bootstrap samples. Hence, standard error estimates for \(\hat{{\varvec{\Phi }}}_{\varvec{\tau }}\) are given by the diagonal elements of:

where \(\hat{{\varvec{\Phi }}}^{(h)}_{\varvec{\tau }}\) is the set of parameter estimates for the h-th bootstrap sample and \(\bar{{\varvec{\Phi }}}_{\varvec{\tau }}\) denote the sample mean of all \(\hat{{\varvec{\Phi }}}^{(h)}_{\varvec{\tau }}, h = 1,\dots , H\).

3.2 Model selection

In the EM algorithm discussed above, the number of hidden states K and maximum length of state durations \(\mathbf{U}= (U_1, \dots , U_K)\) are unknown. From an applied perspective, choosing an adequate number of states is a crucial aspect of the data analysis which shall take into account the data structure and research question at hand. In particular, K is typically selected using penalized-likelihood criteria or cross-validation methods, which can become demanding to fit computationally (Pohle et al. 2017). In HSMMs, not only the number of hidden states shall be selected, but also the maximum length of state durations. In practice, this amounts to fixing \(U_k = U\), \(k = 1,\dots ,K\), with U being large enough to capture the main support of the SD in each state (see Maruotti et al. 2021). The major disadvantages of this approach are the large number of parameters to be estimated and the fact that different states may require substantially different maximum sojourn times. For these reasons, herein we simultaneously select the optimal values of K and vector \(\mathbf{U}\) using penalized likelihood criteria, such as AIC (Akaike 1998), BIC (Schwarz 1978) and ICL (Biernacki et al. 2000) which penalizes the BIC for the estimated mean entropy and it is given by:

where \(BIC_{(K,\mathbf{U})}\) in (23) is defined as \(BIC_{(K,\mathbf{U})}= -2 \ell (\hat{{\varvec{\Phi }}}_{\varvec{\tau }}) + \log (T) \nu _f\), with \(\ell (\hat{{\varvec{\Phi }}}_{\varvec{\tau }}) = \log \sum _{s_1,\dots ,s_T} \sum _{u} L_c (\hat{{\varvec{\Phi }}}_{\varvec{\tau }})\) being the observed data log-likelihood in correspondence of the ML estimate of \({\varvec{\Phi }}_{\varvec{\tau }}\), T corresponds to the number of observations and \(\nu _f\) denotes the number of free model parameters in \({\varvec{\Phi }}_{\varvec{\tau }}\). Computing \(\ell (\hat{{\varvec{\Phi }}}_{\varvec{\tau }})\) may prove to be difficult to evaluate directly because it involves the sum on every possible state sequence of length T, \(\sum _{s_1,\dots ,s_T}\), and the sum on every supplementary duration from time \(T+1\) spent in the state occupied at time T, \(\sum _{u}\). Therefore, to compute \(\ell (\hat{{\varvec{\Phi }}}_{\varvec{\tau }})\) we use the variables in (10)-(12) required for the EM algorithm (please see Guédon 2003).

All criteria involve penalization terms depending on the number of parameters \(\nu _f\), which is given by the sum of:

-

the number of regression parameters in \(\{ \varvec{\beta }_1, \dots , \varvec{\beta }_K \}\): \(p \times m \times K\),

-

the number of scale parameters in \(\mathbf{D}_k\): \(p \times K\),

-

the number of correlation parameters in \(\varvec{\Psi }_k\): \(p \times K (K - 1) / 2\),

-

the number of independent transition probabilities in \(\mathbf{Q}\): \(K \times (K - 2)\),

-

the unconstrained sojourn distribution probabilities: \(\sum _{k=1}^K (U_k - 1).\)

To select the order of the hidden process, we first define a sequence of values of K and construct a K-dimensional grid of maximum sojourn distributions, \({\mathcal {U}} \subset {\mathbb {R}}_{\ge 0}^K\), and then fit the model using the EM algorithm described above for fixed \(\varvec{\tau }\), K and a vector \(\mathbf{U}\) in \({\mathcal {U}}\). Because a full search over \({\mathcal {U}}\) might be computationally infeasible, we employ the greedy search algorithm considered in Langrock et al. (2015) and Adam et al. (2019) and select the best combination of \((K, \mathbf{U})\) corresponding to the lowest value of the penalized likelihood criteria.

4 Simulation study

We conduct a simulation study to evaluate the finite sample properties of the proposed QHSMM. This simulation exercise addresses the following issues: (i) study the performance of the model under different distributional choices for the error term and SDs, when either a linear or nonlinear quantile regression function of \(\mathbf{Y}\) given \(\mathbf{X}\) is considered; (ii) assess the classification performance of the proposed model; (iii) evaluate the performance of penalized likelihood criteria in selecting the optimal number of hidden states K and maximum sojourn times \(\mathbf{U}\). Additional simulation studies are illustrated in the Supplementary Materials.

We consider \(T=1000\), a continuous response variable of dimension \(p=2\) and one explanatory variable \(X_{t} \sim {\mathcal {N}}(0,1)\). The observations are generated from a two state HSMM, i.e., \(K = 2\), using the following data generating process:

where \({\mathbf {X}}_{t} = (1, X_{t})'\) and the true values of the state-dependent parameters, \(\varvec{\beta }_1\) and \(\varvec{\beta }_2\), are given by:

We consider the following two distributions for the error terms \(\varvec{\epsilon }_{tk}\) in (24):

- (\({\mathcal {N}}\)):

-

: \(\varvec{\epsilon }_{tk}\) are generated from a bivariate Normal random variable with zero mean vector and variance-covariance matrix equal to \({\varvec{\tilde{\Omega }}}_k\), for \(k = 1,2\);

- (\({\mathcal {T}}\)):

-

: \(\varvec{\epsilon }_{tk}\) are generated from a bivariate Student t distribution with 5 degrees of freedom, zero mean and scale matrix equal to \({\varvec{\tilde{\Omega }}}_k\), for \(k = 1,2\).

The state-specific covariance matrices \({\varvec{\tilde{\Omega }}}_k, k = 1,2,\) are set equal to low (\({\varvec{\tilde{\Omega }}}_1 = \bigl ( {\begin{matrix}1 &{} 0.3\\ 0.3 &{} 1\end{matrix}}\bigr )\)) and high (\({\varvec{\tilde{\Omega }}}_2 = \bigl ( {\begin{matrix}1 &{} 0.8\\ 0.8 &{} 1\end{matrix}}\bigr )\)) correlation between the responses.

Similarly to Maruotti et al. (2021), for each scenario we further consider three SDs:

-

(SPO)

: a shifted-Poisson, i.e.:

$$\begin{aligned} d_k(u) = \exp (-\lambda _k) \frac{\lambda ^{u-1}_k}{(u-1)!}, \qquad u = 1,2,\dots , \end{aligned}$$(26)with \(\lambda _1 = 10\) and \(\lambda _2 = 5\);

-

(SNB)

: a shifted-negative binomial, i.e.:

$$\begin{aligned} d_k(u) = \frac{\Gamma (u + \lambda _k - 1)}{(u-1)! \Gamma (\lambda _k)} p^{\lambda _k}_k (1-p_k)^{u-1}, \, u = 1,2,\dots ,\nonumber \\ \end{aligned}$$(27)with \(\lambda _1 = 8, \lambda _2 = 4, p_1 = 0.5\) and \(p_2 = 0.6\);

-

(GEO)

: a geometric sojourn, i.e.:

$$\begin{aligned} d_k(u) = p_k (1-p_k)^{u-1}, \qquad u = 1,2,\dots , \end{aligned}$$(28)with \(p_1 = 0.2\) and \(p_2 = 0.3\).

We fit the proposed QHSMM for five quantile levels, i.e., \(\varvec{\tau }=(0.10, 0.10)\), \(\varvec{\tau }=(0.25, 0.25)\), \(\varvec{\tau }=(0.50,0.50)\), \(\varvec{\tau }=(0.75, 0.75)\) and \(\varvec{\tau }=(0.90, 0.90)\). For each model, we carry out \(B=1000\) Monte Carlo replications and report the following indicators. The Average Relative Bias (ARB), expressed as a percentage:

where \(\hat{{\theta }}^{(b)}_{\varvec{\tau }}\) is the estimated parameter at level \(\varvec{\tau }\) for the b-th replication and \({{\theta }}_{\varvec{\tau }}\) is the corresponding “true” value. Secondly, the Root Mean Square Error (RMSE) of model parameters averaged across the B simulations:

To assess the first and second queries of this simulation exercise, Tables 1 and 2 report the ARB and RMSE for the state-specific coefficients \(\varvec{\beta }_1\) and \(\varvec{\beta }_2\). As can be noted, the proposed model under the Normal and Student t error distributions is able to recover the true state-dependent parameters for both low and high degree of dependence and all three considered SDs. Not surprisingly, the bias effect is quite small when we analyze the median levels (see column 3). As the quantile levels become more extreme (see columns 1, 2, 4 and 5), the ARB slightly increases but it still remains reasonably small. Also, under the (\({\mathcal {T}}\)) scenario the heavier tails of the Student t contribute to higher ARB and RMSE especially at the 10-th and 90-th percentiles.

To evaluate the classification performance of the proposed model, we report the average Adjusted Rand Index (ARI) of Hubert and Arabie 1985 and the misclassification rate (MCR). Specifically, we compare the classification obtained by the QHSMM with the one obtained from a QHMM under the assumption of a geometric SD. The state partition provided by the fitted models is obtained by taking the maximum, \(\underset{k \in {\mathcal {S}}}{\max } \, \gamma _{tk}\), posteriori probability for every \(t=1,\dots ,T\). The results in Table 3 show that when the true SD of the data generating process is geometric, the QHMM provides a slightly better classification both in terms of ARI and MCR (please compare the GEO row of Panel A with that of Panel C and the GEO row of Panel B with that of Panel D). This is not surprising as the QHMM implicitly assumes geometrically distributed sojourn distributions. The QHSMM, on the contrary, outperforms the QHMM in all other cases, as it can approximate arbitrarily well any SD and does not rely on a distributional assumption for \(d_k(u)\) (compare the SPO and SNB rows of Panel A with those of Panel C, and the SPO and SNB rows of Panel B with those of Panel D), with very few exceptions at quantile levels \(\varvec{\tau }=(0.50,0.50)\) and \(\varvec{\tau }=(0.75, 0.75)\) where the two models give comparable results.

We further evaluate the QHSMM introduced when a nonlinear quantile regression function of \(\mathbf{Y}\) given \(\mathbf{X}\) is considered. Similarly to Geraci (2019), the observations are generated from a two state HSMM using the following nonlinear quantile regression models. In the first scenario, we simulated the data from the following logistic model:

where the explanatory variable is drawn from a continuous uniform distribution, \(X_{t} \sim {\mathcal {U}}(0,20)\), and where \({Y}^{(j)}_{t}\) and \({\epsilon }^{(j)}_{tk}\) denote the j-th component of \({\mathbf {Y}}_{t}\) and \(\varvec{\epsilon }_{tk}\), respectively. For each component j and hidden state k, the true values of the parameters, \(\varvec{\beta }_{jk}\), are given by \(\varvec{\beta }_{11} = (50, 12, 3)\), \(\varvec{\beta }_{21} = (10, 2 , -1)\), \(\varvec{\beta }_{12} = ( 30, 5, 2)\) and \(\varvec{\beta }_{22} = (20, 11, -3)\).

In the second scenario, following El Ghouch and Genton (2009) the data are generated according to the equation:

where \(X_{t} \sim {\mathcal {U}}(-1.1, 2.1)\) and where the parameter \(\gamma _j\) in (32) can be seen as a misspecification parameter that controls the deviation from the polynomial function. As \(\gamma _j\) increases, the data structure becomes more complicated, and approximating the true curve by a polynomial becomes increasingly difficult. In this study we chose \(\gamma _1 = 4\) and \(\gamma _2 = 8\). The true values of the parameters are given by \(\varvec{\beta }_{11} = (10, -6, 2.8)\), \(\varvec{\beta }_{21} = (3, 5, -0.5)\), \(\varvec{\beta }_{12} = (-3, 5, -2)\) and \(\varvec{\beta }_{22} = (8, 11, -3)\).

For the error terms \(\varvec{\epsilon }_{tk}\) in (31) and (32), and the SDs we considered the same distributions adopted for the linear case. Examples of the simulated data are shown in Figures 1 and 2 from the two scenarios, respectively.

Examples of data generated from the first scenario. Scatterplot of \(Y_1\) (first column) and \(Y_2\) (second column) under normal (first row) and Student t (second row) errors as a function of the included covariate, when using shifted-Poisson SDs. Red and blue data points distinguish the two latent states

Examples of data generated from the second scenario. Scatterplot of \(Y_1\) (first column) and \(Y_2\) (second column) under normal (first row) and Student t (second row) errors as a function of the included covariate, when using shifted-Poisson SDs. Red and blue data points distinguish the two latent states

We fit the proposed QHSMM for five quantile levels, i.e., \(\varvec{\tau }=(0.10, 0.10)\), \(\varvec{\tau }=(0.25, 0.25)\), \(\varvec{\tau }=(0.50,0.50)\), \(\varvec{\tau }=(0.75, 0.75)\) and \(\varvec{\tau }=(0.90, 0.90)\). For each model, we report the Proportion of Negative Residuals (PNR):

with \({\widehat{Q}}_{Y^{(j)}_{t} \mid X_t}\) being the fitted conditional quantile of \(Y^{(j)}_{t}\) at level \(\tau _j\), \(j=1,2\). If the model is correctly specified, the PNR should be approximately equal to \(\tau _j\) for each outcome. The results contained in Tables 4 and 5 are averaged over \(B=1000\) Monte Carlo replications.

By looking at the results, PNR rates are in general coherent with the selected quantile level. Further, one can observe that PNRs are typically closer to the nominal values \(\varvec{\tau }\) in the first scenario as opposed to the second one. This may be explained by the fact that the fitted model provides a better linear approximation of the true logistic quantile regression function than the more complex nonlinear model in the second scenario. Moreover, in this latter case, the PNRs obtained for the second component \(Y_2\) do worse than the corresponding ones for \(Y_1\), which is mainly due to the relatively larger variance associated to the misspecification parameter \(\gamma _j\) in (32). Overall, in both cases the PNRs are slightly, especially at the tails, below (at \(\varvec{\tau } = (0.10, 0.10)\)) or above (at \(\varvec{\tau } = (0.90, 0.90)\)) the expected proportions.

Finally, to assess the performance of penalized likelihood criteria (AIC, BIC and ICL) for selecting the number of hidden states and maximum sojourn times, we considered the same simulation experiment where the sojourns are generated from a beta-binomial distribution:

where \({\mathcal {B}}(\cdot , \cdot )\) is the beta function, \(\alpha _1 = 6, \alpha _2 = 0.7, \lambda _1 = 3\), \(\lambda _2 = 2\), \(U_1 = 20\) and \(U_2 = 10\). For each of the simulated \(B=100\) datasets, we fit the QHSMM with \(K = 2,3,4\) over the grid of state-dependent maximum sojourn times \((10, 15, 20) \times \dots \times (10, 15, 20)\), and select the best combination of \((K, \mathbf{U})\) associated to the lowest penalized likelihood criteria. Table 6 reports the number of times each criterion correctly identifies the number of latent states and maximum support points of the SDs (Panel A) and the absolute frequency distributions of the selected K for each of the three criteria (Panel B).

As one can see in Panel A, all three criteria work relatively well at \(\varvec{\tau } = (0.50, 0.50)\). By contrast, as we move towards the tails of the distribution of the responses, the ICL outperforms both the AIC and BIC and correctly identifies the pair \((K, \mathbf{U})\) that was used to generate the data more than 96% across all simulation scenarios. Moving onto Panel B, the AIC consistently performs worse than the BIC but both mostly overestimate the true number of states. These results suggest that regardless of the distribution on the error terms and SDs, the ICL yields superior performance and captures serial heterogeneity in the data in a more parsimonious manner compared to the other criteria, easing the interpretation of the latent states.

5 Application

In this section we apply the proposed methodology to air pollution data collected by the Lazio Regional Agency for Environmental Prevention and Protection (ARPA Lazio, https://www.arpalazio.it) in Italy. The ARPA Lazio provides information regarding the regional state of the environment and environmental trends, performing scientific, technical and research functions as well as assessment, monitoring, control and supporting local and health authorities. The time series used in this research are freely available from the ARPA Lazio website (https://www.arpalazio.net/main/aria/sci/basedati/chimici/chimici.php).

5.1 Data description

The data considered originate from a regional monitoring network system developed by the Lazio Region and have already been discussed in the work of Maruotti et al. (2017). This system has been organized in order to respond to the increasing demand for environmental information, but also for providing reliable data suitable for policy-related aspects. The network recorded concentrations of nine air pollutants on hourly basis at monitoring stations in the central area of the city of Rieti, Italy. The Rieti site has been chosen as it is classified as a traffic location, although it is located at a short distance from green areas and forests that facilitate the movement of air masses and removal of pollutants. In this work we consider three major air pollutants, i.e., Particulate Matter with aerodynamic diameter less than 2.5 \(\mu \)m (PM\(_{25}\)), Ozone (O\(_3\)) and Nitrogen Dioxide (NO\(_2\)) from January 01, 2019 to June 14, 2021. We averaged the pollution data to daily frequency and all concentrations are expressed in \(\mu \)g/m\(^3\).

Atmospheric variables also play a major role in determining the level of exposure to particular pollutants and capturing time dependence as proxies for seasonal variations and characteristics. Since pollution episodes are triggered by specific atmospheric factors, we include the following variables, namely the daily average wind speed, temperature, pressure and humidity. Table 7 presents the main descriptive statistics for the response variables and the set of included predictors. The asymmetry in the distributions of the pollutants is noted by examining the mean-median relationship and the five summary statistics indicate severe departures from the Gaussian assumptions, presenting high kurtosis and outlying values. In the same table we also report the empirical correlation coefficients between each response variable which clearly highlight a positive correlation between PM\(_{25}\) and NO\(_2\), and a negative association with O\(_3\). Therefore, the dependence structure among different pollutants, which cannot be detected by univariate methods, constitutes a crucial aspect of the analysis and should not be neglected.

From a graphical standpoint, Fig. 3 shows the normal QQ plots for the PM\(_{25}\), O\(_3\) and NO\(_2\) time series. These reveal the presence of potentially influential observations in the data, heavy tails and skewness for all three outcomes. This exploratory analysis and preliminary considerations motivate us to consider a joint quantile regression approach as investigative tool.

5.2 Results

We jointly model the concentrations of PM\(_{25}\), O\(_3\) and NO\(_2\) as a function of Wind Speed, Temperature, Pressure and Humidity at quantile levels \(\varvec{\tau } = (0.50,0.50,0.50)\), \(\varvec{\tau } = (0.75,0.75,0.75)\) and \(\varvec{\tau } = (0.90,0.90,0.90)\). Considering the 75-th and 90-th percentiles puts emphasis on alert thresholds for ambient air pollution associated with high levels of concentrations of chemicals. As a first step of the analysis, we fit the proposed QHSMM for a sequence of states K from 1 to 6 and a K-dimensional grid, \({\mathcal {U}} \subset {\mathbb {R}}_{\ge 0}^K\), of maximum sojourn times, \((10, 15,\dots , 60) \times \dots \times (10, 15,\dots , 60)\). To select the optimal combination of K and \(\mathbf{U}\), with \(\mathbf{U}\in {\mathcal {U}}\), and avoid the computational cost of a full grid search over \({\mathcal {U}}\), we employ the greedy search algorithm described in Sect. 3.2 with 200 starting points. Table 8 reports the log-likelihood, AIC, BIC and ICL values for the fitted models at the investigated quantile levels. The AIC selects \(K = 5\) states for all quantile levels meanwhile the BIC and ICL are more aligned in the choice of K as they identify 3, 5 and 4 states for \(\varvec{\tau } = (0.50,0.50,0.50)\), \(\varvec{\tau } = (0.75,0.75,0.75)\) and \(\varvec{\tau } = (0.90,0.90,0.90)\). This is not surprising since the AIC tends to overestimate the number of hidden states and, for this reason, we will not consider it hereafter. We can also see that the ICL values associated to \(K = 3\) and \(K = 5\) are extremely similar at \(\varvec{\tau } = (0.75,0.75,0.75)\). Following these considerations and looking at Table 8, we select the best fitted models with K equal to 3, 3 and 4 according to both the BIC and ICL criteria at \(\varvec{\tau } = (0.50,0.50,0.50)\), \(\varvec{\tau } = (0.75,0.75,0.75)\) and \(\varvec{\tau } = (0.90,0.90,0.90)\), respectively.

Figures 4, 5 and 6 report the classification results according to the selected models at the investigated quantile levels. Each plot shows the data points colored according to the estimated posterior probability of class membership, \({\widehat{\gamma }}_{tk}\), with the vertical lines separating blocks of four months. In our study, the latent components can be associated to specific exposure regimes characterized by seasonal weather conditions. Specifically, blue points (state 2) tend to cluster days in late autumn, winter, and early spring, while green ones (state 3) are generally inferred during late spring and summer. At \(\varvec{\tau } = (0.90,0.90,0.90)\) (see Figure 6), the four state QHSMM identifies a similar classification pattern with violet points (state 4) occurring mainly from spring until the end of summer, but also sporadically during the rest of the year. The overall results reflect the seasonal variation in atmospheric pollutants, with high concentrations of PM\(_{25}\) and NO\(_2\) in winter and high O\(_3\) concentrations in summer and warm months. It is also worth noting that the minimum values of particulate matter and nitrogen dioxide, and the maximum level of ozone were reached in state 1 (orange dots) during the period March-June 2020. These sudden changes may be possibly due to the implementation of lockdown measures to contain the COVID-19 outbreak in Italy (Bassani et al. 2021; Putaud et al. 2021). Indeed, in the first weeks of March, significant PM\(_{25}\) and NO\(_2\) declines were observed which led to O\(_3\) peaks resulting from reduced titration with nitrogen oxides. As restrictions were lifted in May-June, chemical concentrations settled again around the 2019 and early 2021 levels.

The estimated transition probability matrices of the latent semi-Markov chain (see Table 9) confirm that pollutant concentrations alternate between good and poor air conditions. The off-diagonal elements demonstrate that the hidden process stays in state 3 (low levels of particulate matter, nitrogen dioxide and moderate levels of ozone), and 4 in the case \(\varvec{\tau } = (0.90, 0.90, 0.90)\), with temporary changes towards more severe PM\(_{25}\) and NO\(_2\) concentrations (state 2) or higher ozone episodes (state 1). Meanwhile, direct transitions between states 1 and 2 are very unlikely at all three quantile levels.

Taking the effect of covariates into account, Table 10 shows the estimated state-specific regression parameters, \(\varvec{\beta }_k, k = 1,\dots , K\), at each quantile level, respectively. Standard errors are computed via parametric bootstrap using \(H=1000\) resamples as illustrated in Section 3 and point estimates are displayed in boldface when significant at the standard 5% level.

The estimated effects of the included covariates tend to be nonlinear, state-specific and are generally more pronounced in the upper end of the distribution of the responses. States 1 and 3 yield similar estimates as they are both associated with the best air conditions, especially when looking at effect of pressure and humidity. This is in sharp contrast with the point estimates in state 2 which is characterized by hazardous air quality. Among the four factors, wind speed and temperature exert the strongest influence on the considered pollutants in all seasons. In particular, PM\(_{25}\) and NO\(_2\) are negatively associated with both variables and such association increases during the coldest months of the year. Wind intensity, therefore, contributes considerably to the reduction of pollution, in particular in at-risk situations as identified by state 2. On the other hand, O\(_3\) concentration is positively associated with wind speed meanwhile the effect of temperature is positive in late-spring and hot weather, but negative throughout the rest of the year. Humidity can also help to decrease ozone pollution because the moisture in the air could enhance the condensation of water and slow down ozone production, but also reduce PM\(_{25}\) and NO\(_2\). Further, the concentrations of PM\(_{25}\) and NO\(_2\) are positively associated with atmospheric pressure and negatively associated with O\(_3\).

We conclude the analysis by reporting the estimated correlation matrices, \(\varvec{\Psi }_k, k = 1,\dots , K\), (see Table 11) in order to provide an indirect measure of tail dependence between the outcomes. Firstly, regardless of the state, the correlation coefficients between the air pollutants are generally significant, indicating that in this case fitting univariate quantile regressions separately would be inappropriate. Secondly, the correlation coefficients are increasing somewhat with \(\varvec{\tau }\). Finally, the estimates depict a data correlation structure that varies among the latent groups as the correlation between PM\(_{25}\), O\(_3\) and NO\(_2\) in the wintertime is substantially higher than that in spring and summer.

6 Conclusion

The study of pollution exposure is at the heart of policy attention for health and economics welfare analysis. Motivated the necessity to develop sound policies for controlling air contaminants emissions, this paper extends the joint quantile regression of Petrella and Raponi (2019) by introducing a hidden semi-Markov quantile regression for the analysis of multiple pollutants time series. The proposed model allows to capture quantile-specific effects across the entire distribution of several outcomes in one step and infer cluster-specific covariate effects at various quantile levels of interest. In order to avoid making biased inference related to incorrect distributional assumptions about the SDs, we adopt the approach of Guédon (2003) where the latent sojourn densities are approximated by using nonparametric discrete distributions and estimated directly from the data. Using simulation exercises, the proposed approach reveals promising results in reducing state misclassification rates with respect to HMMs and identifying the correct number of hidden states. In the empirical application, we employ our methodology to jointly model daily PM\(_{25}\), O\(_3\) and NO\(_2\) concentrations recorded in Rieti (Italy) as a function of wind speed, temperature, pressure and humidity. We find that seasonal changes in air pollutant concentrations are greatly affected by meteorological conditions, whose effects are generally amplified at the top end of the distribution of the responses. Moreover, the latent regimes capture seasonal variations in air pollution particles that characterize low and hazardous contamination levels.

This work could be extended further in the following directions. In particular, the method proposed could be positively applied to financial time series modeling. Indeed, financial returns often exhibit empirical characteristics, such as skewness, leptokurtosis, heteroscedasticity and clustering behavior over time, which are heavily influenced by hidden variables (e.g., the state of the market) during tranquil and crisis periods (Maruotti et al. 2021). In this context, time dependence can be further included in the regression model, allowing the quantile to vary over time according to an autoregressive process (Engle and Manganelli 2004).

The approach introduced might also be extended to other settings and data structures. Firstly, our QHSMM can be generalized to multivariate longitudinal data, extending the recent quantile mixed HMM in Merlo et al. (2022) by using more flexible sojourn distributions and, possibly, allowing the semi-Markov chain parameters to depend on observable covariates. Secondly, another extension would deal with the inclusion of spatial heterogeneity into the modeling approach to account for spatial-temporal variations of air pollutants from different monitoring sites. Lastly, while in the application we focused on daily concentrations of three air pollutants, in high-dimensional settings with a larger number of response variables and/or number of hidden states, the introduced QHSMM can be easily over-parameterized. This often occurs because of the large number of unique parameters in the covariance matrices to be estimated, implying a loss in terms of interpretability as well as numerically ill-conditioned estimators. In these cases, following Maruotti et al. (2017), we may consider a class of parsimonious HSMMs by imposing a factor decomposition on the state-specific covariance matrices. Not only would this modeling strategy provide information about the dependence between pollutants, but also a clear interpretation of the latent association structure among them.

7 Supplementary Materials

The Supplementary Materials include additional simulations that are used to support the results in the manuscript when the number of analyzed response variables p increases.

Notes

The pdf of a GIG(p, a, b) distribution is defined as \(f_{GIG}(x; p,a,b)= \frac{\left( \frac{a}{b}\right) ^{p/2}}{2K_{p}(\sqrt{ab})} x^{p-1} e^{-\frac{1}{2}\left( ax +bx^{-1} \right) }\), with \(a>0\), \(b>0\) and \(p \in {{{\mathcal {R}}}}\).

References

Adam, T., Langrock, R., Weiß, C.H.: Penalized estimation of flexible hidden Markov models for time series of counts. Metron 77(2), 87–104 (2019)

Akaike, H.: Information theory and an extension of the maximum likelihood principle, in ‘Selected papers of Hirotugu Akaike’, Springer, pp. 199–213, (1998)

Barbu, V. S. and Limnios, N.: Semi-Markov chains and hidden semi-Markov models toward applications: their use in reliability and DNA analysis, Vol. 191, Springer Science & Business Media (2009)

Bartolucci, F., Farcomeni, A. and Pennoni, F.: Latent Markov models for longitudinal data, CRC Press, (2012)

Bassani, C., Vichi, F., Esposito, G., Montagnoli, M., Giusto, M., Ianniello, A.: Nitrogen dioxide reductions from satellite and surface observations during COVID-19 mitigation in Rome (Italy). Environmental Science and Pollution Research 28(18), 22981–23004 (2021)

Bernardi, M., Gayraud, G., Petrella, L., et al.: Bayesian tail risk interdependence using quantile regression. Bayesian Analysis 10(3), 553–603 (2015)

Biernacki, C., Celeux, G., Govaert, G.: Assessing a mixture model for clustering with the integrated completed likelihood. IEEE Transactions on Pattern Analysis and Machine Intelligence 22(7), 719–725 (2000)

Browne, R.P., McNicholas, P.D.: A mixture of generalized hyperbolic distributions. Canadian Journal of Statistics 43(2), 176–198 (2015)

Bulla, J., Bulla, I.: Stylized facts of financial time series and hidden semi-Markov models. Computational Statistics & Data Analysis 51(4), 2192–2209 (2006)

Bulla, J., Bulla, I., Nenadić, O.: hsmm-an R package for analyzing hidden semi-Markov models. Computational Statistics & Data Analysis 54(3), 611–619 (2010)

Cappé, O., Moulines, E. and Rydén, T. (2006), Inference in hidden Markov models, Springer Science & Business Media

Charlier, I., Paindaveine, D., Saracco, J.: Multiple-output quantile regression through optimal quantization. Scandinavian Journal of Statistics 47(1), 250–278 (2020)

Chavas, J.-P.: On multivariate quantile regression analysis. Statistical Methods & Applications 27(3), 365–384 (2018)

Dannemann, J., Holzmann, H., Leister, A.: Semiparametric hidden Markov models: identifiability and estimation. Wiley Interdisciplinary Reviews: Computational Statistics 6(6), 418–425 (2014)

Dempster, A. P., Laird, NM and Rubin, DB.: ‘Maximum likelihood from incomplete data via the EM algorithm’, Journal of the Royal Statistical Society Series B (Methodological) pp. 1–38 (1977)

El Ghouch, A., Genton, M.G.: Local polynomial quantile regression with parametric features. J.Am. Stat. Assoc. 104(488), 1416–1429 (2009)

Engle, R.F., Manganelli, S.: CAViaR: conditional autoregressive value at risk by regression quantiles. J. Bus. Econ. Stat. 22(4), 367–381 (2004)

Ephraim, Y., Merhav, N.: Hidden Markov processes. IEEE Trans. Inf. Theor. 48(6), 1518–1569 (2002)

Farcomeni, A.: Quantile regression for longitudinal data based on latent Markov subject-specific parameters. Stat. Comput. 22(1), 141–152 (2012)

Geraci, M.: Modelling and estimation of nonlinear quantile regression with clustered data. Comput. Stat. Data Anal. 136, 30–46 (2019)

Guédon, Y.: Estimating hidden semi-Markov chains from discrete sequences. J. Comput. Graph. Stat. 12(3), 604–639 (2003)

Hamilton, J. D.:‘A new approach to the economic analysis of nonstationary time series and the business cycle’, Econometrica: Journal of the Econometric Society pp. 357–384, (1989)

Holzmann, H., Munk, A., Gneiting, T.: Identifiability of finite mixtures of elliptical distributions. Scandinav. J. Stat. 33(4), 753–763 (2006)

Hubert, L., Arabie, P.: Comparing partitions. J. Classif. 2(1), 193–218 (1985)

Koenker, R.: Quantile regression. Cambridge University Press (2005)

Koenker, R. and Bassett, G.:‘Regression Quantiles’, Econometrica: Journal of the Econometric Society 46(1), 33–50, (1978)

Koenker, R., Chernozhukov, V., He, X. and Peng, L.: Handbook of quantile regression, CRC press, (2017)

Kong, L. and Mizera, I.: ‘Quantile tomography: using quantiles with multivariate data’, Statistica Sinica pp. 1589–1610, (2012)

Kotz, S., Kozubowski, T. and Podgorski, K.:The Laplace distribution and generalizations: a revisit with applications to communications, economics, engineering, and finance, Springer Science & Business Media, (2012)

Langrock, R., Kneib, T., Sohn, A., DeRuiter, S.L.: Nonparametric inference in hidden Markov models using P-splines. Biometrics 71(2), 520–528 (2015)

Langrock, R., Zucchini, W.: Hidden Markov models with arbitrary state dwell-time distributions. Comput. Stat. Data Anal. 55(1), 715–724 (2011)

Leroux, B.G.: Maximum-likelihood estimation for hidden Markov models. Stochastic Process. Appl. 40(1), 127–143 (1992)

Levinson, S.E., Rabiner, L.R., Sondhi, M.M.: An introduction to the application of the theory of probabilistic functions of a Markov process to automatic speech recognition. Bell Syst. Tech. J. 62(4), 1035–1074 (1983)

Luo, Y., Lian, H., Tian, M.: Bayesian quantile regression for longitudinal data models. J. Stat. Comput. Simul. 82(11), 1635–1649 (2012)

MacDonald, I. L. and Zucchini, W.:Hidden Markov and other models for discrete-valued time series, Vol. 110, CRC Press (1997)

Marino, M.F., Tzavidis, N., Alfò, M.: Mixed hidden Markov quantile regression models for longitudinal data with possibly incomplete sequences. Stat. Methods Med. Res 27(7), 2231–2246 (2018)

Maruotti, A.: Mixed hidden Markov models for longitudinal data: an overview. Int. Stat. Rev. 79(3), 427–454 (2011)

Maruotti, A., Bulla, J., Lagona, F., Picone, M. and Martella, F.:‘Dynamic mixtures of factor analyzers to characterize multivariate air pollutant exposures’, The Annals of Applied Statistics pp. 1617–1648,(2017)

Maruotti, A., Petrella, L., Sposito, L.: Hidden semi-Markov-switching quantile regression for time series. Compu. Stati. Data Anal. 159, 107208 (2021)

Maruotti, A., Punzo, A.: Initialization of hidden Markov and semi-Markov models: a critical evaluation of several strategies. Int. Stat. Rev. 89(3), 447–480 (2021)

Maruotti, A., Punzo, A., Bagnato, L.: Hidden Markov and semi-Markov models with multivariate leptokurtic-normal components for robust modeling of daily returns series. J. Financ. Econom. 17(1), 91–117 (2019)

Merlo, L., Petrella, L., Raponi, V.: Forecasting VaR and ES using a joint quantile regression and its implications in portfolio allocation. J. Bank. Finan. 133, 106248 (2021)

Merlo, L., Petrella, L., Salvati, N., Tzavidis, N.: Marginal M-quantile regression for multivariate dependent data. Comput. Stat. Data Anal. 173, 107500 (2022)

Merlo, L., Petrella, L., Tzavidis, N.: ‘Quantile mixed hidden Markov models for multivariate longitudinal data: an application to children’s Strengths and Difficulties Questionnaire scores’, Journal of the Royal Statistical Society. Ser. C Appl. Stat. 71(2), 417–448 (2022)

O’Connell, J., Højsgaard, S., et al.: Hidden semi Markov models for multiple observation sequences: the mhsmm package for R. J. Stat. Softw. 39(4), 1–22 (2011)

Petrella, L., Raponi, V.: Joint estimation of conditional quantiles in multivariate linear regression models with an application to financial distress. J. Multivar. Anal. 173, 70–84 (2019)

Pohle, J., Adam, T., Beumer, L.T.: Flexible estimation of the state dwell-time distribution in hidden semi-Markov models. Comput. Stat. Data Anal. 172, 107479 (2022)

Pohle, J., Langrock, R., van Beest, F.M., Schmidt, N.M.: Selecting the number of states in hidden Markov models: pragmatic solutions illustrated using animal movement. J. Agric Biol. Environ. Stat. 22(3), 270–293 (2017)

Putaud, J.-P., Pozzoli, L., Pisoni, E., Martins Dos Santos, S., Lagler, F., Lanzani, G., Dal Santo, U., Colette, A.: Impacts of the COVID-19 lockdown on air pollution at regional and urban background sites in northern Italy. Atmosp. Chem. Phys. 21(10), 7597–7609 (2021)

Sansom, J. and Thomson, P. (2001), ‘Fitting hidden semi-Markov models to breakpoint rainfall data’, Journal of Applied Probability 38(A), 142–157

Schwarz, G., et al.: Estimating the dimension of a model. Anna. Stat. 6(2), 461–464 (1978)

Serfling, R.: Quantile functions for multivariate analysis: approaches and applications. Statist. Neerlandica 56(2), 214–232 (2002)

Stolfi, P., Bernardi, M. and Petrella, L.: ‘The sparse method of simulated quantiles: An application to portfolio optimization’, Statistica Neerlandica (2018),

Visser, I., Raijmakers, M.E., Molenaar, P.C.: Confidence intervals for hidden Markov model parameters. Br. J. Math. Statist. Psychol. 53(2), 317–327 (2000)

Ye, W., Zhu, Y., Wu, Y. and Miao, B.: ‘Markov regime-switching quantile regression models and financial contagion detection’, Insurance: Mathematics and Economics 67, 21–26,(2016)

Yu, K., Moyeed, R.A.: Bayesian quantile regression. Stat. Probab. Lett. 54(4), 437–447 (2001)

Yu, K., Zhang, J.: A three-parameter asymmetric Laplace distribution and its extension. Commun. Statist. Theory Methods 34(9–10), 1867–1879 (2005)

Yu, S.-Z.: Hidden Semi-Markov models: theory, algorithms and applications. Morgan Kaufmann (2015)

Zucchini, W., MacDonald, I. L. and Langrock, R.: Hidden Markov models for time series: an introduction using R, Chapman and Hall CRC (2016)

Acknowledgements

We would like to warmly thank the Associate Editor and two anonymous reviewers for their thoughtful comments and efforts towards improving our manuscript. This work was partially supported by the Finance Market Fund, Norway, project number 309218; “Statistical modelling and inference for (high-dimensional) financial data”.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Appendix

Appendix

Proof of Proposition 1

Firstly, we note that to ensure identifiability of the MAL density in (5) it suffices to apply Proposition 1 of Petrella and Raponi (2019). Secondly, for a general HSMM, identifiability has been proven up to label switching (Leroux 1992). Thus, to ensure identifiability, all one needs to prove is the identifiability of the marginal mixtures (Dannemann et al. 2014), which in our case are represented by the finite mixtures of MAL distributions. Based on the work of Holzmann et al. (2006), Browne and McNicholas (2015) prove identifiability of finite mixtures of multivariate generalized hyperbolic distributions. Since the MAL in (5) is a limiting case of the multivariate generalized hyperbolic distribution (see (3) and (4) in Browne and McNicholas (2015) with \(\lambda = 1, \psi = 2\) and \(\chi \rightarrow 0\)), model identifiability follows by applying Corollary 2 of Browne and McNicholas (2015).

Proof of Proposition 2

The E-step of the EM algorithm considers the conditional expectation of the complete log-likelihood function in (8) given the observed data and the current parameter estimates \(\hat{{\varvec{\Phi }}}^{(r-1)}_{\varvec{\tau }}\). At first, we recall that under the constraints imposed on \({\varvec{\tilde{\xi }}}\) and \(\varvec{\Lambda }\), the representation in (7) implies that:

This means that the joint density function of \(\mathbf{Y}\) and \({\tilde{C}}\) is:

By substituting (36) in (8) and taking the conditional expectation of the logarithm of (8), we obtain the expected complete log-likelihood function in (13).

To compute the conditional expectation of \({\tilde{c}}_{tk}\) and \({\tilde{z}}_{tk}\) in (13), \({\tilde{C}}\) is treated as an additional latent variable. Using the joint distribution of \(\mathbf{Y}\) and \({\tilde{C}}\) derived in (36) and the MAL density of \(\mathbf{Y}\) given in (5), we have that:

which corresponds to a Generalized Inverse Gaussian (GIG) distribution with parameters \(\nu , {2+{\tilde{d}}}, \tilde{m_i}\), i.e.Footnote 1

Then, it follows that

and

Denoting the two conditional expectations in (39) and (40) by \(\hat{{\tilde{c}}}\) and \(\hat{{\tilde{z}}}\) respectively, concludes the proof.

Proof of Proposition 3

Imposing the first order conditions on (13) with respect to each component of the set \({\varvec{\Phi }}_{\varvec{\tau }}\), gives the update estimates in (16), (17), (18) and (19). However, there is not closed formula solution to update the elements of the scale matrix \(\mathbf{D}_j\); hence, the M-step update requires using numerical optimization techniques to maximize (13). A considerable disadvantage of this procedure is the necessary high computational effort which could be very time-consuming. For this reason, we utilize a simpler estimator for the scale parameters \(\delta _{jk}, k = 1,\dots ,p\), which follows directly from the fact that all marginals of the MAL distribution are univariate AL distributions (see Yu and Zhang 2005):

where \(\hat{{\mu }}_{tjk}\) is the k-th element of the vector \(\hat{\varvec{\mu }}_{tj}\).

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Merlo, L., Maruotti, A., Petrella, L. et al. Quantile hidden semi-Markov models for multivariate time series. Stat Comput 32, 61 (2022). https://doi.org/10.1007/s11222-022-10130-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11222-022-10130-1