Abstract

In this paper, complex dynamical networks (CDNs) with dynamic connections are regarded as interconnected systems composed of an intercoupled links’ subsystem (LS) and nodes’ subsystem (NS). Unlike previous research on structural balance control of CDNs, the structural balance problem of directed CDNs is solved. Because the state of the links cannot be measured accurately in practice, we control the nodes’ state and force the weights of the links to satisfy the conditions of structural balance via effective coupling. To achieve this aim, a coupling strategy between a predetermined structural balance matrix and a reference tracking target of the NS is established by the correlative control method. Here, the controller in the NS is used to track the reference tracking target and, through the effective coupling, indirectly makes the LS track the predetermined matrix and reach structural balance for both directed and undirected networks. Finally, numerical simulations are presented to verify the theoretical results.

Introduction

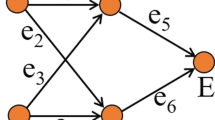

In the last 2 decades, CDNs have received considerable attention and been widely applied in many fields [1,2,3,4,5], such as social sciences [1], image processing [2] and so on. According to the graph theory, a complete CDN is represented by a set of nodes which are dynamically interacting and have links between them. In other words, NS and LS are the two subsystems of CDN, which are employed for better understanding and scrutinizing the dynamical process of a network as a whole. That is why some dynamic behaviors are expressed by nodes such as consensus [6, 7], synchronization [8, 9] and stabilization [10], while other dynamic processes are determined by links (weights of connections between nodes) such as structural balance [11,12,13].

The structural balance proposed by F. Heider [14] is a significant concept in social networks. It has been used to analyze connections (links) between individuals (nodes) and is determined by the edges of triads. Moreover, Cartwright and Harary not only extended this definition, but also proved that a network is structurally balanced if and only if its nodes can be divided into two factions such that there are only positive links within factions and only negative links between factions [15]. According to the existing research results of structural balance theory, the link models fall mainly into three cases: (i) in signed networks, the weights of links depend only on the integers 0 and 1 [16]; (ii) some time-varying models can be effectively characterized by a matrix differential equation [11, 12, 17,18,19] or a matrix difference equation [20], but they ignore the influence of the nodes’ internal state dynamics on the evolution of the links; (iii) the Riccati dynamical equation with a coupling matrix that depends on the internal state of the nodes is employed as the model [13, 21,22,23], which means that the dynamic changes of nodes and links affect each other through effective coupling. The results of these models show that the time evolution of the links converges to structural balance.

However, the structural balance control of networks is relatively unexplored. Reference [12] shows that if we change the value of the links between the nodes and a reference agent (node), the structural balance can be reached. References [21,22,23] provide a CDN model composed of intercoupling LS and NS. We can realize the structural balance of LS by controlling the states of nodes, because the nodes’ states with effective coupling can enforce the LS to reach structural balance. Unfortunately, the method in Refs. [12, 21,22,23] only works for undirected networks, but is not valid for directed networks.

Following the above analysis, this paper focuses mainly on structural balance control problems of directed networks, which also involves intercoupling LS and NS. Considering the weights of links cannot be measured accurately and the two subsystems are coupled, we can control the NS so that the effective coupling is conducive to LS tracking a structural balance matrix, which will cause the network to achieve structural balance. For such a control objective, the structural balance control can be regarded as the tracking control problem of an under-actuated system. We noticed that the dimension of control input is less than the freedom of state in under-actuated systems, which means that the state of an under-actuated system can be divided into a directly controllable state (state of NS) and an indirectly controllable state (state of LS). The control objective of the indirectly controllable state can be achieved via a certain coupling relationship between the two states, where the dynamic changes of directly controllable state are determined by the controller [24,25,26]. This means that an under-actuated system also consists of two subsystems, which are similar to the NS and the LS.

In fact, the coupling relationship between the tracking objectives of the NS and LS exists widely in practical systems, such as in the rotational speed control problem of a permanent magnet synchronous motor (PMSM) based on vector control theory [27]. In detail, if the direct axis current is not zero, then the PMSM can be regarded as an under-actuated system consisting of a current loop (directly controllable subsystem) and a speed loop (indirectly controllable subsystem), and there is a coupling relationship between the reference values of rotational speed and current. Thus, we can directly control the current loop to adjust the speed of the PMSM. Generally, the coupling relationship between the two reference targets can simplify the rotational speed control design of the PMSM. Therefore, this method can be extended to structural balance control in CDNs. Specifically, for a structural balance matrix (the tracking target of LS), we can get the reference tracking target of NS by using the coupling relationship. Thus, we can directly design a controller for the NS, which makes the state of NS track the corresponding reference target. Then, the effective coupling allows the LS to track the structural balance matrix indirectly, and the structural balance is achieved.

The main contributions of this work are listed as follows: (i) A novel model of directed CDNs composed of intercoupled NS and LS is proposed, which is rarely seen in the literature. (ii) The coupling mechanism by which dynamic nodes promote the emergence of structural balance in the links is discussed, rather than ignoring the influence of the nodes’ state on the links. (iii) A coupling relationship between the tracking objectives of the NS and LS is given, so that once the target matrix (structural balance matrix) of LS is given, the tracking target of NS is determined. Thus, a decentralized control strategy is used to force the NS to track its target, which implies that each node has a controller that uses only the state information of the local node. In this way, the interference of transmission can be effectively avoided, and the reliability of the CDNs can be greatly improved.

The rest of this paper is organized as follows. In the second section, we propose a mathematical model of CDNs and give some useful definitions and lemmas. The third section designs the decentralized controller to ensure the structural balance of the network. The fourth section gives the simulation results. Finally, the conclusions are discussed in the last section.

Notations: \(A^T \) represents the transpose of the matrix A. \(\left\| \cdot \right\| \) represents the Euclidean norm of a vector or a matrix. \(R^n\) stands for the n-dimensional Euclidean space. \(I_n\) is the n-dimensional identity matrix. \(R^{n \times n} \) is the set of \(n \times n\) real matrices. \({\text {diag}}\{ \cdots \} \) stands for a diagonal matrix. The symbol \(\otimes \) stands for the Kronecker product of matrices. \({\text {vec}}(\cdot )\) is an operator which maps a matrix onto a vector composed of its stacked columns.

Preliminaries and model description

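In this paper, we consider a class of CDNs composed of NS and LS. If each node in the NS is an n-dimensional continuous-time system, then the NS can be described as [9, 13]

\[ {\dot{x}}_i = A_i x_i + B_i f_i (x_i) + \rho \sum \nolimits _{j = 1}^N p_{ij}(t) H_j (x_j) + u_i ,\quad i = 1,2,\ldots ,N, \tag{1} \]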

where the vector \(x_i = [x_{i1},x_{i2}, \ldots ,x_{in}]^T \in R^n\) indicates the i-th node’s state; \(A_i\in {R^{n\times n}}\) and \(B_i\in {R^{n\times m}}\) are constant matrices; the continuous vector function \(f_i (x_i) = [f_{i1} (x_i),f_{i2} (x_i), \ldots ,f_{im} (x_i)]^T \in R^m\); the common weight strength of links \(\rho >0\), which is a given constant; the time-varying variable \(p_{ij}(t)\in {R}\) represents the weight of link from the node j to the node i. Especially, \(p_{ij}(t)=p_{ji}(t)\) is for undirected networks, and \(p_{ij} (t) \ne p_{ji} (t)\) is for directed networks in general. In addition, if \(i=j\), \(p_{ij} (t)\) indicates the strength of self-connection about the node i; the vector function \(H_j (x_j) = [H_{j1} (x_j),H_{j2} (x_j), \ldots ,H_{jn} (x_j)]^T \) is used to adjust internal coupling relationships between nodes; and the control input of the i-th node \(u_i \in R^n \).

Remark 1

(i) The mathematical model (1) is a typical CDN model for the nodes. In the existing results, the connection relationships \(p_{ij}(t)\) fall into the following three cases: \(p_{ij}(t)\) are known constants [8, 28, 29], or are time-varying, continuous and deterministic [7, 30, 31], or are unknown constants [32]. (ii) From (1), it is easy to see that the node dimension n is arbitrary. However, Ref. [21] requires the nodes to be two-dimensional. Therefore, the model of NS proposed in this paper is more general than that of Ref. [21]. (iii) Clearly, the matrix \(P(t) = [p_{ij}(t) ]_{N \times N}\) can be used to express the weights of the links between nodes. In this paper, \(P(t) = [p_{ij}(t) ]_{N \times N}\) is time-varying and described by a matrix differential equation for \({\dot{P}}(t)\). In particular, if \({\dot{P}}=0\), which means the connection relationships \(p_{ij}(t)\) are constants, then the static and weighted network is denoted by \(G=(V,L,P)\), consisting of the node set \(V=\{v_{1},v_{2},\ldots ,v_{N}\}\), the link set \(L\subseteq V\times V\) and the link weights, with \(p_{ij}\ne 0\) if \((v_{j},v_{i})\in L\) and \(p_{ij}=0\) otherwise, where \((v_{j},v_{i})\) denotes a directed link from node \(v_{j}\) to node \(v_{i}\) in G. Moreover, if \({\dot{P}}=0\) and \(p_{ii}=\sum \nolimits _{j = 1,j\ne i}^N p_{ij}\) hold, the system (2) is called a time-invariant dissipatively coupled CDN in Refs. [28, 29].

Let the state vector \(x=[x_1 ^T, x_2 ^T, \ldots , x_N ^T]^{T}\in R^{nN}\), the vector functions \(F(x)=[f_1 ^T (x_1),f_2 ^T (x_2),\ldots ,f_N ^T (x_N)]^{T}\), \(H(x)=[H_1 ^T (x_1),H_2 ^T (x_2),\ldots ,H_N ^T (x_N)]^{T}\), and the control input vector \(u=[u_1 ^T,u_2 ^T,\ldots ,u_N ^T]^{T}\). Then the NS (1) can be rewritten as

\[ {\dot{x}} = Ax + BF(x) + \rho \left( P(t) \otimes I_n \right) H(x) + u, \tag{2} \]

where the matrices \(A = {\text {diag}}\{ A_1 ,A_2 , \ldots ,A_N \}\) and \(B = {\text {diag}}\{ B_1 ,B_2 , \ldots ,B_N \}\).
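The stacking step can be checked numerically. The following is a minimal sketch, assuming that the coupling term of the stacked NS takes the Kronecker form \(\rho (P \otimes I_n)H(x)\), which is inferred from the notation of this paper; the function names and the random test data are illustrative assumptions only.

```python
import numpy as np

def coupling_per_node(P, H_nodes, rho):
    """Row i holds rho * sum_j p_ij * H_j(x_j); H_nodes has shape (N, n)."""
    return rho * (P @ H_nodes)

def coupling_stacked(P, H_nodes, rho):
    """Same term in stacked form: rho * (P kron I_n) @ H(x)."""
    N, n = H_nodes.shape
    H = H_nodes.reshape(N * n)  # H(x) = [H_1^T, ..., H_N^T]^T
    return rho * (np.kron(P, np.eye(n)) @ H)

rng = np.random.default_rng(0)
N, n, rho = 4, 3, 0.5
P = rng.uniform(-1.0, 1.0, (N, N))        # directed weights, p_ij != p_ji in general
H_nodes = rng.uniform(-1.0, 1.0, (N, n))  # stand-in values for H_j(x_j)

per_node = coupling_per_node(P, H_nodes, rho).reshape(N * n)
stacked = coupling_stacked(P, H_nodes, rho)
print(np.allclose(per_node, stacked))
```

Both routes give the same vector, so the node-wise sum and the Kronecker form can be used interchangeably in an implementation.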

Let \(X=[x_1,x_2,\ldots ,x_N]\in R^{n\times N}\). Considering the coupling between nodes and links, the LS is described by a matrix differential equation as follows [9]:

\[ {\dot{P}} = \Theta _{1} P + P\Theta _{2} + \Phi (X), \tag{3} \]

where \(\Theta _{1},\Theta _{2}\in R^{N\times N}\) are constant matrices, the coupling matrix satisfies \(\Phi (X)=\alpha \sum \nolimits _{j = 1}^s\Psi _{j}X\Omega _{j}+\Omega _{0}\), in which the constant \(\alpha \) is a known coupling coefficient and the constant matrices \(\Psi _{j}\in R^{N\times n}\), \(\Omega _{j},\Omega _{0}\in R^{N\times N}\), \(j=1,2,\ldots ,s\).

Remark 2

(i) In Refs. [9, 13, 21, 22], some matrix differential equations are used to describe the state changes of links in networks, however, the network composed of LS and NS in Refs. [13, 21, 22] is undirected (\(P(t)=P^T(t)\)), thus, only the structural balance of undirected networks is discussed, while the case of directed networks is ignored. It is worth noting that the LS (3) is similar to the links’ model in Ref. [9], and both of them can be used to describe the case of directed networks. Unfortunately, Ref. [9] only focuses on the synchronization of nodes, but does not consider the structural balance of the networks, which is determined by the states of links. In order to further enrich the research results, this paper mainly studies the structural balance of LS in directed networks. (ii) Generally, it is difficult to measure and obtain the weights of links accurately, fortunately, we find that the states of the nodes and links are coupled with each other from (1) and (3), which implies we can control the states of the nodes and indirectly force LS to achieve structural balance through the effective coupling \(\Phi (X)\). (iii) If LS (3) is rewritten as \({\dot{P}} = (\Theta _{1}+\Theta ^{T}_{2})P+P(\Theta ^{T}_{1}+\Theta _{2})+\Phi (X)+\Phi ^{T}(X)\), then we can obtain that \(P(t)=P^T(t)\) with initial value \(P(0)=P^T(0)\), which implies that the network becomes an undirected network. On the contrary, as long as the connection relationships \(p_{ij}(t)\) between nodes contain \(p_{ij}(t)\ne p_{ji}(t)\), the network becomes a directed network. It is not difficult to find that undirected network can be regarded as a special form of directed network.
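The symmetry claim in Remark 2 (iii) can be illustrated numerically. The sketch below implements the right-hand side of the symmetrized LS exactly as written in Remark 2 (iii) and integrates it with forward-Euler steps while holding X fixed; the step size, the dimensions and the random parameters are assumptions chosen only for illustration.

```python
import numpy as np

def phi(X, Psi, Omega, Omega0, alpha):
    """Coupling matrix Phi(X) = alpha * sum_j Psi_j X Omega_j + Omega_0."""
    return alpha * sum(Ps @ X @ Om for Ps, Om in zip(Psi, Omega)) + Omega0

def p_dot_undirected(P, X, Theta1, Theta2, Psi, Omega, Omega0, alpha):
    """Right-hand side of the symmetrized LS in Remark 2 (iii)."""
    Phi = phi(X, Psi, Omega, Omega0, alpha)
    return (Theta1 + Theta2.T) @ P + P @ (Theta1.T + Theta2) + Phi + Phi.T

rng = np.random.default_rng(1)
N, n, s, alpha = 4, 2, 3, 0.03
Theta1, Theta2, Omega0 = (rng.uniform(-1, 1, (N, N)) for _ in range(3))
Psi = [rng.uniform(-0.5, 0.5, (N, n)) for _ in range(s)]
Omega = [rng.uniform(-0.5, 0.5, (N, N)) for _ in range(s)]
X = rng.uniform(-1, 1, (n, N))

P = rng.uniform(-1, 1, (N, N))
P = 0.5 * (P + P.T)          # symmetric initial value, P(0) = P(0)^T
for _ in range(100):         # forward-Euler steps with X held fixed
    P = P + 1e-3 * p_dot_undirected(P, X, Theta1, Theta2, Psi, Omega, Omega0, alpha)
print(np.allclose(P, P.T))
```

Because the right-hand side is symmetric whenever P is symmetric, every Euler step keeps P symmetric up to rounding.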

Definition 1

[33]. A static and weighted network \(G=(V,L,P)\) is structurally balanced if and only if its nodes can be divided into two factions \(V_{1}\) and \(V_{2}\) via links, where \(V_{1}\cap V_{2}= \emptyset \), \(V_{1}\cup V_{2}=V\), and \(p_{ij}\ge 0\) for \(v_{i},v_{j}\in V_{z}(z=1,2)\), \(p_{ij}\le 0\) for \(v_{i}\in V_{z}\) and \(v_{j}\in V_{w}, z\ne w(z,w\in \{1,2\})\). Otherwise, the network is structurally unbalanced.
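Definition 1 translates directly into a sign check for a given split of the nodes. The following is a minimal sketch; the function name, the encoding of factions as labels 1 and 2, and the example matrix are illustrative assumptions.

```python
def is_balanced_partition(P, faction):
    """Check Definition 1 for a given split.

    P[i][j] is the weight of the link from node j to node i and
    faction[i] in {1, 2} is the faction label of node i.
    """
    N = len(P)
    for i in range(N):
        for j in range(N):
            same = faction[i] == faction[j]
            if same and P[i][j] < 0:        # negative link inside a faction
                return False
            if not same and P[i][j] > 0:    # positive link between factions
                return False
    return True

# Directed example: nodes 0 and 1 in one faction, node 2 in the other.
P_example = [[0, 2, -1],
             [3, 0, -2],
             [-1, -4, 0]]
print(is_balanced_partition(P_example, [1, 1, 2]))
print(is_balanced_partition(P_example, [1, 2, 2]))
```

The first split satisfies the sign conditions, while the second places a positive link between factions and is rejected.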

Lemma 1

[33]. For a static and weighted network \(G=(V,L,P)\), if one of the following conditions holds, then the network \(G=(V,L,P)\) is structurally balanced.

(1) The feature of \(G=(V,L,P)\) conforms to Definition 1;

(2) \(\exists \Upsilon \in \Delta \) such that each element of \(\Upsilon P \Upsilon \) is nonnegative, where \(\Delta = \{{\text {diag}}(\sigma )|\sigma =[\sigma _{1},\sigma _{2},\ldots ,\sigma _{N}], \sigma _{i}\in \{1,-1\}, i=1,2,\ldots ,N \}\) is a set of diagonal matrices.
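Condition (2) of Lemma 1 suggests a simple test for small networks: enumerate the sign vectors \(\sigma \) and check whether \(\Upsilon P \Upsilon \) is entrywise nonnegative. The sketch below is a brute-force illustration whose cost grows as \(2^{N-1}\); the function name and the example matrices are assumptions, and this is not presented as the method of Ref. [33].

```python
from itertools import product

def find_balance_signature(P):
    """Return sigma with sigma_i * p_ij * sigma_j >= 0 for all i, j, or None."""
    N = len(P)
    for tail in product((1, -1), repeat=N - 1):
        sigma = (1,) + tail  # sigma and -sigma give the same product, so fix sigma_1 = 1
        if all(sigma[i] * P[i][j] * sigma[j] >= 0
               for i in range(N) for j in range(N)):
            return sigma
    return None

P_balanced = [[0, 2, -1],
              [3, 0, -2],
              [-1, -4, 0]]
P_unbalanced = [[0, -1, -1],   # three mutually hostile nodes
                [-1, 0, -1],
                [-1, -1, 0]]
print(find_balance_signature(P_balanced))    # (1, 1, -1)
print(find_balance_signature(P_unbalanced))  # None
```

The entries of the returned \(\sigma \) with value 1 and -1 identify the two factions of Definition 1.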

Remark 3

Clearly, the structural balance of the network \(G=(V,L,P)\) is determined by the matrix P, and Lemma 1 is true for \(p_{ii}=0\) (that is, the self-loops of nodes are not considered). However, some papers have considered the self-loops of each node in G. For example, in Refs. [11, 13, 21, 22], the diagonal elements of the weighted matrix P are positive (that is, \(p_{ii}>0\)), which indicates the strength of individual self-confidence or self-identity in the sociological sense. It is worth noting that Lemma 1 is also true for \(p_{ii}>0\). Therefore, whether \(p_{ii}=0\) or \(p_{ii}>0\), if the weighted matrix P makes the network structurally balanced, then it is called a structural balance matrix.

In order to complete the control design, some appropriate assumptions need to be given as follows.

Assumption 1

[13, 22] The vector function H(x) in (2) is bounded, i.e., the inequality \(\left\| H(x) \right\| \le h\) holds, where h is a known positive constant.

Remark 4

(i) The constant h is used in the controller of NS (1); therefore, we need to find a way to get its value. (ii) If the vector functions \(H_j (x_j) = [H_{j1} (x_j),H_{j2} (x_j), \ldots ,H_{jn} (x_j)]^T, j=1,2,\ldots ,N\) are known, then we can get the upper bound \(h_{jk}\) of each function \(|H_{jk}(x_j)|, k=1,2,\ldots ,n\) using its monotonicity. Then, the constant h can be obtained by calculating \(\sqrt{\sum \nolimits _{j = 1}^N \sum \nolimits _{k = 1}^n h_{jk}^2}\). (iii) If the vector functions \(H_j (x_j) = [H_{j1} (x_j),H_{j2} (x_j), \ldots ,H_{jn} (x_j)]^T, j=1,2,\ldots ,N\) are unknown, suppose that they satisfy the Lipschitz condition [28, 32], that is, the inequality \(\Vert H_j (x_j)-H_j (0)\Vert \le \delta \Vert x_j\Vert \) holds, where \(H_i (0)\) is bounded and can be obtained by solving (1) with \(x_i(0)=0\) and \(p_{ij} = \begin{cases} 1, &amp; i=j \\ 0, &amp; i\ne j \end{cases}\) (only isolated nodes are considered). Thus, we can get \(h=\delta \sqrt{ N \max (\Vert x_i\Vert ^2,i=1,2,\ldots ,N)}+\sqrt{\sum \nolimits _{i = 1}^N \Vert H_i (0)\Vert ^2}\), where \(\delta \) can be determined by observation in the process of simulation.

Assumption 2

[21, 22] The matrix pair \((A_i,B_i)\) in (1) is completely controllable.
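Assumption 2 can be checked with the Kalman rank test. The sketch below applies it to the matrices \(A_i\) and \(B_i\) of the simulation example in this paper; the function name and the second, deliberately uncontrollable input matrix are illustrative assumptions.

```python
import numpy as np

def is_controllable(A, B):
    """Kalman rank test: rank [B, AB, ..., A^(n-1) B] == n."""
    n = A.shape[0]
    blocks = [B]
    for _ in range(n - 1):
        blocks.append(A @ blocks[-1])
    return bool(np.linalg.matrix_rank(np.hstack(blocks)) == n)

# Node matrices taken from the simulation example.
A_i = np.array([[-36.0, 36.0, 0.0],
                [0.0, 20.0, 0.0],
                [0.0, 0.0, -3.0]])
B_i = np.array([[0.0, 0.0],
                [1.0, 0.0],
                [0.0, 1.0]])
B_bad = np.array([[0.0], [0.0], [1.0]])  # hypothetical input acting on x_i3 only

print(is_controllable(A_i, B_i))
print(is_controllable(A_i, B_bad))
```

With the two-input matrix the pair passes the test, whereas the single hypothetical input cannot influence the first two states.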

If Assumption 2 is true, there must exist a matrix \(W_i\in R^{m\times n}\) such that the following Lyapunov equation has a unique solution \(K_i>0\) for any given matrix \(Q_i>0\):

\[ (A_i + B_i W_i)^{T} K_i + K_i (A_i + B_i W_i) = -Q_i . \tag{4} \]

Remark 5

Let \(W = {\text {diag}}\{ W_1 ,W_2 , \ldots ,W_N \}\), \(K = {\text {diag}}\{ K_1 ,K_2 , \ldots ,K_N \}\) and \(Q = {\text {diag}}\{ Q_1 ,Q_2 , \ldots ,Q_N \}\), thus the equation \((A+BW)^{T}K+K(A+BW)=-Q\) can be obtained from (2) and (4).
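For one node, the Lyapunov equation \((A_i+B_iW_i)^{T}K_i+K_i(A_i+B_iW_i)=-Q_i\) can be solved by vectorization. The following is a minimal sketch using the node matrices of the simulation example; the gain \(W_i\) below is a hypothetical choice that makes \(A_i+B_iW_i\) Hurwitz, and \(Q_i=I_3\) is likewise an assumption for illustration.

```python
import numpy as np

def solve_lyapunov(A_cl, Q):
    """Solve A_cl^T K + K A_cl = -Q using column-stacking vec and Kronecker products."""
    n = A_cl.shape[0]
    I = np.eye(n)
    # vec(A^T K + K A) = (I kron A^T + A^T kron I) vec(K)
    L = np.kron(I, A_cl.T) + np.kron(A_cl.T, I)
    k = np.linalg.solve(L, -Q.flatten(order="F"))
    K = k.reshape((n, n), order="F")
    return 0.5 * (K + K.T)  # remove rounding asymmetry

A_i = np.array([[-36.0, 36.0, 0.0],
                [0.0, 20.0, 0.0],
                [0.0, 0.0, -3.0]])
B_i = np.array([[0.0, 0.0],
                [1.0, 0.0],
                [0.0, 1.0]])
W_i = np.array([[0.0, -30.0, 0.0],   # hypothetical gain: moves the unstable
                [0.0, 0.0, 0.0]])    # eigenvalue 20 to -10
A_cl = A_i + B_i @ W_i
Q_i = np.eye(3)
K_i = solve_lyapunov(A_cl, Q_i)

print(np.linalg.eigvals(A_cl).real.max() < 0)  # closed loop is Hurwitz
print(np.linalg.eigvalsh(K_i).min() > 0)       # K_i is positive definite
```

The block-diagonal matrices W, K and Q of Remark 5 can then be assembled from the per-node results.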

Assumption 3

[21, 22] The matrices \(\Theta _{1}\) and \(\Theta _{2}\) in (3) are Hurwitz stable.

If Assumption 3 is true, then the matrix \(I_{N}\otimes \Theta _{1}+\Theta _{2}^{T}\otimes I_{N}\) is also Hurwitz stable. Hence, for any given matrix \({\widehat{Q}}>0\), there must exist a matrix \(U>0\) satisfying the following Lyapunov equation:

\[ {\widehat{A}}^{T} U + U{\widehat{A}} = -{\widehat{Q}}, \tag{5} \]

where \({\widehat{A}}=I_{N} \otimes \Theta _{1}+\Theta _{2}^{T}\otimes I_{N}\).
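The claim that \({\widehat{A}}\) inherits Hurwitz stability can be illustrated with a small example: the eigenvalues of \(I_{N}\otimes \Theta _{1}+\Theta _{2}^{T}\otimes I_{N}\) are the pairwise sums of the eigenvalues of \(\Theta _{1}\) and \(\Theta _{2}\). The sketch below assumes that the Lyapunov equation for U has the standard form \({\widehat{A}}^{T}U+U{\widehat{A}}=-{\widehat{Q}}\); the \(2\times 2\) matrices are hypothetical.

```python
import numpy as np

def kron_sum(Theta1, Theta2):
    """A_hat = I_N kron Theta1 + Theta2^T kron I_N."""
    I = np.eye(Theta1.shape[0])
    return np.kron(I, Theta1) + np.kron(Theta2.T, I)

Theta1 = np.array([[-2.0, 1.0], [0.0, -3.0]])   # eigenvalues -2, -3
Theta2 = np.array([[-1.0, 0.0], [0.5, -4.0]])   # eigenvalues -1, -4
A_hat = kron_sum(Theta1, Theta2)
eigs = np.sort(np.linalg.eigvals(A_hat).real)
print(eigs)  # pairwise sums: -7, -6, -4, -3

# Solve A_hat^T U + U A_hat = -Q_hat by vectorization.
m = A_hat.shape[0]
Q_hat = np.eye(m)
L = np.kron(np.eye(m), A_hat.T) + np.kron(A_hat.T, np.eye(m))
U = np.linalg.solve(L, -Q_hat.flatten(order="F")).reshape((m, m), order="F")
U = 0.5 * (U + U.T)
print(np.linalg.eigvalsh(U).min() > 0)
```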

Remark 6

If the network is undirected, and the LS is shown in (iii) Remark 2, then the Eq. (5) still holds, where \({\widehat{A}}=I_{N} \otimes (\Theta _{1}+\Theta _{2}^{T})+(\Theta ^{T}_{1}+\Theta _{2})\otimes I_{N}\).

Main result

Through \({\text {vec}}( \cdot )\) operator and Kronecker product, some basic properties can be obtained as follows [34]:

(1) \({\text {vec}}(C Z D )=(D^{T} \otimes C){\text {vec}}(Z)\);

(2) \({\text {vec}}(CZ+ZD)=(I_g\otimes C + D^{T}\otimes I_l){\text {vec}}(Z)\),

where the matrix \(Z \in R^{l\times g}\), and C and D are matrices of appropriate dimensions.
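Both properties can be verified numerically. The sketch below assumes that \({\text {vec}}(\cdot )\) stacks columns, which is the convention under which these identities hold and under which \(x^{*}={\text {vec}}(X^{*})\); the random matrices are illustrative.

```python
import numpy as np

def vec(M):
    """Stack the columns of M into one vector (column-major order)."""
    return M.flatten(order="F")

rng = np.random.default_rng(2)
l, g = 3, 4
Z = rng.standard_normal((l, g))
C = rng.standard_normal((l, l))
D = rng.standard_normal((g, g))

prop1 = np.allclose(vec(C @ Z @ D), np.kron(D.T, C) @ vec(Z))
prop2 = np.allclose(
    vec(C @ Z + Z @ D),
    (np.kron(np.eye(g), C) + np.kron(D.T, np.eye(l))) @ vec(Z),
)
# The same formula applied with row stacking does not hold in general.
row_stack = np.allclose(
    (C @ Z @ D).flatten(order="C"),
    np.kron(D.T, C) @ Z.flatten(order="C"),
)
print(prop1, prop2, row_stack)
```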

Control objectives For a structural balance matrix \(P^{*}=[p_{ij}^{*}]_{N\times N}\), which can be obtained via Lemma 1, the controller \(u_i\) in (1) is designed to make the state of each node track a suitable target \(x_{i}^{*}\in R^{n},i=1,2,\ldots ,N\), then, the LS (3) can asymptotically track \(P^{*}\) under the influence of the coupling matrix \(\Phi (X)\), finally, the structural balance of the network is realized. That is, the purposes of correlative control are \(x_{i}(t) - x_{i}^{*} \mathop \rightarrow \limits ^{t \rightarrow + \infty } 0\) and \(P(t) -P^{*} \mathop \rightarrow \limits ^{t \rightarrow + \infty } 0\).

Let \(x^{*}=[(x_{1}^{*})^{T},\ldots ,(x_{N}^{*})^{T}]^{T}\in R^{nN}\) and \(X^{*}=[x_{1}^{*},\ldots ,x_{N}^{*}]\in R^{n\times N}\), then we can get \(x^{*}={\text {vec}}(X^{*})\). For simplicity, both \({\bar{x}}=x-x^{*}\) and \({\bar{X}}=X-X^{*}\) are used to denote the tracking error of the state in NS (2), and \(x_{i}(t) - x_{i}^{*} \mathop \rightarrow \limits ^{t \rightarrow + \infty } 0\) means \({\bar{x}}(t) \mathop \rightarrow \limits ^{t \rightarrow + \infty } 0\) or \({\bar{X}}(t) \mathop \rightarrow \limits ^{t \rightarrow + \infty } 0\).

Assumption 4

For subsystem (3) and a structural balance matrix \(P^{*}\), the tracking target matrix \(X^{*}\) of the subsystem (2) satisfies the following equation:

\[ \Theta _{1} P^{*} + P^{*} \Theta _{2} + \Phi (X^{*}) = 0. \tag{6} \]

Remark 7

(i) If a static and weighted network \(G=(V,L,P^*)\) is known to be structurally balanced, where \(P^*\in R^{N\times N}\) can be obtained by Lemma 1, then, in order to achieve the structural balance of the connection relationships, we only need to make the state P(t) of LS (3) track the structural balance matrix \(P^{*}\). However, as the states of links are difficult to measure accurately, we cannot directly design a controller for LS (3). Fortunately, (1) and (3) are coupled to each other, which implies that the dynamical behaviors of LS (3) are related to the dynamical changes of NS (1) via the coupling matrix \(\Phi (X)\). Since the matrix \(P^{*}\) (tracking target of the subsystem (3)) is known, the tracking target \(X^{*}\) of the subsystem (2) can be obtained by solving Eq. (6). This means that the tracking targets of the two subsystems are coupled with each other, and this coupling relationship conforms to the practical requirements of some networks. For example, in a web winding system [35, 36], the tensions of the web (links) need to be given first; only then can we determine the proper rotational speed of the motors (nodes). (ii) Using the properties of the Kronecker product and the \({\text {vec}}(\cdot )\) operator, the matrix \(X^*\) in (6) can be obtained by solving the equation \(\alpha \left[ \sum \nolimits _{j = 1}^s \left( \Omega _j^T \otimes \Psi _j \right) \right] {\text {vec}}\left( X^{*} \right) = {\text {vec}}\left( - \Omega _0 - \Theta _1 P^*- P^* \Theta _2 \right) \). Let \(\Gamma = \alpha \left[ \sum \nolimits _{j = 1}^s \left( \Omega _j^T \otimes \Psi _j \right) \right] \in R^{N^2 \times Nn}\) and \( G = {\text {vec}}\left( - \Omega _0 - \Theta _1 P^* - P^* \Theta _2 \right) \in R^{N^2 }\); then the necessary and sufficient condition for the existence of solutions of the nonhomogeneous linear equation \(\Gamma x^* = G\) is \({\text {rank}}(\Gamma ) = {\text {rank}}([\Gamma ,G])\). When this condition holds, either the solution \(x^{*}\) is unique or there are infinitely many solutions. In the latter case, the solution with the smallest norm can be chosen, which corresponds to “minimum energy consumption”. For example, in the winding system, selecting the solution with the smallest norm means that the rotating roller (motor) tracks the minimum speed, thus the energy consumption of the motor is reduced.
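The computation of the node target described in Remark 7 (ii) can be sketched as follows: build \(\Gamma \) and G, test the rank condition, and take the minimum-norm solution through the pseudoinverse. The function names, the rank tolerance, the small dimensions and the random data are assumptions for illustration; \(\Omega _0\) is constructed here so that the system is solvable, in the spirit of Step (v) of the simulation rules.

```python
import numpy as np

def vec(M):
    return M.flatten(order="F")  # column stacking

def phi_linear(X, Psi, Omega, alpha):
    """alpha * sum_j Psi_j X Omega_j (Phi(X) without Omega_0)."""
    return alpha * sum(Ps @ X @ Om for Ps, Om in zip(Psi, Omega))

def node_target(P_star, Theta1, Theta2, Psi, Omega, Omega0, alpha, tol=1e-9):
    """Return the minimum-norm X* solving Gamma x* = G, or None if unsolvable."""
    N, n = Psi[0].shape
    Gamma = alpha * sum(np.kron(Om.T, Ps) for Ps, Om in zip(Psi, Omega))
    G = vec(-Omega0 - Theta1 @ P_star - P_star @ Theta2)
    rank_gamma = np.linalg.matrix_rank(Gamma, tol=tol)
    rank_aug = np.linalg.matrix_rank(np.column_stack([Gamma, G]), tol=tol)
    if rank_gamma != rank_aug:
        return None
    x_star = np.linalg.pinv(Gamma) @ G       # minimum-norm solution
    return x_star.reshape((n, N), order="F")

rng = np.random.default_rng(3)
N, n, s, alpha = 4, 2, 3, 1.0
Theta1 = -2.0 * np.eye(N) + 0.1 * rng.standard_normal((N, N))
Theta2 = -2.0 * np.eye(N) + 0.1 * rng.standard_normal((N, N))
Ups = np.diag([1, 1, -1, -1])
P_star = Ups @ rng.integers(0, 6, (N, N)) @ Ups   # a structural balance matrix
Psi = [rng.uniform(-0.5, 0.5, (N, n)) for _ in range(s)]
Omega = [rng.uniform(-0.5, 0.5, (N, N)) for _ in range(s)]
X0 = rng.standard_normal((n, N))
# Choose Omega_0 so that X0 is one solution, which guarantees solvability.
Omega0 = -(Theta1 @ P_star + P_star @ Theta2) - phi_linear(X0, Psi, Omega, alpha)

X_star = node_target(P_star, Theta1, Theta2, Psi, Omega, Omega0, alpha)
residual = Theta1 @ P_star + P_star @ Theta2 + phi_linear(X_star, Psi, Omega, alpha) + Omega0
print(np.abs(residual).max())
```

Since \(\Gamma \) has \(N^2\) rows and only \(Nn\) columns, a generic \(\Omega _0\) makes the system unsolvable, which is why \(\Omega _0\) has to be selected to satisfy the rank condition.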

Remark 8

For LS in undirected networks, which is shown in (iii) Remark 2, the control objectives can be achieved only if Assumption 4 is still true.

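If Assumption 4 holds, then, since \(x^{*}\) and \(P^{*}\) are constant, we can obtain the following error dynamical equations via (2) and (3):

\[ \dot{{\bar{x}}} = A{\bar{x}} + Ax^{*} + BF(x) + \rho \left( P(t) \otimes I_n \right) H(x) + u, \qquad \dot{{\bar{P}}} = \Theta _{1} {\bar{P}} + {\bar{P}}\Theta _{2} + \alpha \sum \nolimits _{j = 1}^s \Psi _{j} {\bar{X}}\Omega _{j} , \]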

where \({\bar{P}}=P(t)-P^{*}\).

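In order to achieve the above control objectives, the distributed control scheme for NS (1) is proposed as follows:

\[ u_i = -A_i x_i^{*} + B_i W_i {\bar{x}}_i - B_i f_i (x_i) - {\tilde{u}}_i ,\quad i = 1,2,\ldots ,N, \tag{9} \]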

where \({\tilde{u}}_i=\rho K_i^{-1}sgn({\bar{x}}_i)\left\| P^{*}\otimes I_{n} \right\| \left\| K \right\| h\) and \(sgn({\bar{x}}_i) = \left\{ \begin{array}{l} \frac{{{{\bar{x}}}_i}}{{\left\| {{{\bar{x}}}_i} \right\| }},\begin{array}{*{20}c} {} &{} {{{\bar{x}}}_i \ne 0} \\ \end{array} \\ 0,\begin{array}{*{20}c} {} &{} {{{\bar{x}}}_i = 0} \\ \end{array} \\ \end{array} \right. \).

Remark 9

Let \({\widehat{sgn}}({\bar{x}})=[(sgn({\bar{x}}_1))^T,(sgn({\bar{x}}_2))^T,\ldots ,(sgn({\bar{x}}_N))^T]^T\), then we can get \(u = -Ax^{*} + BW{\bar{x}} - BF(x) - {\tilde{u}}\) in Eq. (8), where \({\tilde{u}}=\rho K^{-1}{\widehat{sgn}}({\bar{x}})\left\| P^{*}\otimes I_{n} \right\| \left\| K \right\| h\).
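A per-node implementation of the control law can be sketched from the stacked form in Remark 9, using the block-diagonal structure of A, B, W and K. The function names and argument layout are assumptions; the two norms \(\left\| P^{*}\otimes I_{n} \right\| \) and \(\left\| K \right\| \) are passed in as precomputed scalars so that no particular matrix norm is imposed here.

```python
import numpy as np

def sgn(v):
    """Normalized direction v / ||v||, and the zero vector when v = 0."""
    norm = np.linalg.norm(v)
    return v / norm if norm > 0 else np.zeros_like(v)

def node_controller(x_i, x_star_i, A_i, B_i, W_i, K_i, f_i, rho, h, norm_P, norm_K):
    """u_i = -A_i x_i* + B_i W_i e_i - B_i f_i(x_i) - u_tilde_i, with e_i = x_i - x_i*."""
    e = x_i - x_star_i
    # u_tilde_i = rho * K_i^{-1} sgn(e_i) * ||P* kron I_n|| * ||K|| * h
    u_tilde = rho * norm_P * norm_K * h * np.linalg.solve(K_i, sgn(e))
    return -A_i @ x_star_i + B_i @ (W_i @ e) - B_i @ f_i(x_i) - u_tilde

# Illustrative call with the node matrices of the simulation example.
A_i = np.array([[-36.0, 36.0, 0.0], [0.0, 20.0, 0.0], [0.0, 0.0, -3.0]])
B_i = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
W_i = np.array([[0.0, -30.0, 0.0], [0.0, 0.0, 0.0]])   # hypothetical gain
K_i = np.eye(3)                                        # hypothetical K_i > 0
f_i = lambda x: np.array([-x[0] * x[2], x[0] * x[1]])
x_star_i = np.array([1.0, 2.0, 3.0])

u_at_target = node_controller(x_star_i, x_star_i, A_i, B_i, W_i, K_i, f_i,
                              rho=0.001, h=10.3, norm_P=5.0, norm_K=1.0)
print(u_at_target)
```

Only the local state \(x_i\) and the local target \(x_i^{*}\) enter the function, which reflects that each node uses its own state information only.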

Theorem 1

Consider the directed CDN composed of the NS (2) and LS (3), and let \(G=(V,L,P^{*})\) be a static and weighted network for which each element of \(\Upsilon P^{*} \Upsilon \) is nonnegative. If Assumptions 1, 2, 3 and 4 hold and the matrix \( \left[ {\begin{array}{*{20}c} { \lambda _{\min }({\widehat{Q}})} &{} {M} \\ { M } &{} { \lambda _{\min }(Q)} \\ \end{array}} \right] > 0\), where \(M=-|\alpha | \left\| U\sum \nolimits _{j = 1}^s (\Omega _{j}^{T}\otimes \Psi _{j}) \right\| - \rho h\sqrt{n}\left\| K \right\| \), then the above control objectives can be achieved via the distributed controller (9), i.e., \({\bar{x}} \mathop \rightarrow \limits ^{t \rightarrow + \infty } 0\) and \({\bar{P}} \mathop \rightarrow \limits ^{t \rightarrow + \infty } 0\).

Proof

Consider the following Lyapunov’s function:

Calculating the orbit derivative of V along (7) and (8) gives that

From (11), if the constant matrix \( \left[ {\begin{array}{*{20}c} { \lambda _{\min }({\widehat{Q}})} &{} {M} \\ { M } &{} { \lambda _{\min }(Q)} \\ \end{array}} \right] > 0\), then \(\dot{V} <0 \) can be obtained. Therefore, \( {{\bar{x}}}\) and \({{\bar{P}}}\) are bounded, and \({\bar{x}} \mathop \rightarrow \limits ^{t \rightarrow + \infty } 0\), \({\bar{P}} \mathop \rightarrow \limits ^{t \rightarrow + \infty } 0\). This means that the directed CDN composed of (2) and (3) asymptotically reaches structural balance. This completes the proof of Theorem 1. \(\square \)
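The matrix condition of Theorem 1 is a \(2\times 2\) positive definiteness test, which by Sylvester's criterion reduces to \(\lambda _{\min }({\widehat{Q}})>0\) and \(\lambda _{\min }({\widehat{Q}})\lambda _{\min }(Q)>M^{2}\). The sketch below evaluates M and the condition; the function names are assumptions, and the matrix norm is taken to be the spectral norm, which is one possible reading of the norm in the Notations.

```python
import numpy as np

def compute_M(alpha, U, Psi, Omega, rho, h, n, K):
    """M = -|alpha| * ||U sum_j (Omega_j^T kron Psi_j)|| - rho * h * sqrt(n) * ||K||."""
    S = sum(np.kron(Om.T, Ps) for Ps, Om in zip(Psi, Omega))
    return -abs(alpha) * np.linalg.norm(U @ S, 2) - rho * h * np.sqrt(n) * np.linalg.norm(K, 2)

def balance_condition_holds(Q_hat, Q, M):
    """Positive definiteness of [[lmin(Q_hat), M], [M, lmin(Q)]] via leading minors."""
    a = np.linalg.eigvalsh(Q_hat).min()
    b = np.linalg.eigvalsh(Q).min()
    return bool(a > 0 and a * b - M ** 2 > 0)

# Tiny hand-checkable case: N = 2, n = 1, s = 1.
M_small = compute_M(alpha=-0.5, U=np.eye(4), Psi=[np.ones((2, 1))],
                    Omega=[np.eye(2)], rho=0.1, h=3.0, n=1, K=2.0 * np.eye(2))
print(M_small)

print(balance_condition_holds(100.0 * np.eye(4), np.eye(2), -5.0))   # 100 * 1 > 25
print(balance_condition_holds(100.0 * np.eye(4), np.eye(2), -12.0))  # 100 * 1 < 144
```

Because only \(M^{2}\) enters the determinant, a larger \(\lambda _{\min }({\widehat{Q}})\) or a smaller coupling strength \(\rho \) makes the condition easier to satisfy.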

Theorem 2

Consider the undirected CDN composed of the NS (2) and the LS shown in Remark 2 (iii), and let \(G=(V,L,P^{*})\) be a static and weighted network for which \(P^{*}=(P^{*})^T\) and each element of \(\Upsilon P^{*} \Upsilon \) is nonnegative. If Assumptions 1, 2, 3 and 4 hold and the matrix \( \left[ {\begin{array}{*{20}c} { \lambda _{\min }({\widehat{Q}})} &{} {M} \\ { M } &{} { \lambda _{\min }(Q)} \\ \end{array}} \right] > 0\), where \(M=-2|\alpha | \left\| U\sum \nolimits _{j = 1}^s (\Omega _{j}^{T}\otimes \Psi _{j}) \right\| - \rho h \sqrt{n} \left\| K \right\| \), then the above control objectives can be achieved via the distributed controller (9), i.e., \({\bar{x}} \mathop \rightarrow \limits ^{t \rightarrow + \infty } 0\) and \({\bar{P}} \mathop \rightarrow \limits ^{t \rightarrow + \infty } 0\).

Remark 10

From the model of LS in (iii) Remark 2, we can obtain \({\dot{P}}=\Theta P+P \Theta ^T+{\widetilde{\Phi }}(X)\), where \(\Theta =\Theta _1+\Theta ^T_2\) and \({\widetilde{\Phi }}(X)=\Phi (X)+\Phi ^T(X)\), thus, we get \(P(t)=P^T(t)\) with \(P(0)=P^T(0)\), which means the network is undirected. It is worth noting that if the assumptions for directed networks are true, it is also true for undirected networks, such as Assumptions 3 and 4. Therefore, under the same assumptions, we can prove the structural balance of both directed networks and undirected networks, and the proof process is similar. Rather than repeating the process and wasting space, the specific proof process will be omitted.

Simulation example

We consider a CDN consisting of 10 nodes (\(N=10\)), in which each isolated node is Lü’s chaotic system [37]. Thus, the i-th isolated node system can be described as

\[ {\dot{x}}_i = A_i x_i + B_i f_i (x_i ), \]

where \(x_i = \left[ {x_{i1} ,x_{i2} ,x_{i3} } \right] ^T\) indicates the state vector, \( A_i = \left[ {\begin{array}{*{20}c} { - 36} &{} {36} &{} 0 \\ 0 &{} {20} &{} 0 \\ 0 &{} 0 &{} { - 3} \\ \end{array}} \right] \) and \( B_i = \left[ {\begin{array}{*{20}c} 0 &{} 0 \\ 1 &{} 0 \\ 0 &{} 1 \\ \end{array}} \right] \) are real matrices; and \(f_i (x_i ) = \left[ {\begin{array}{*{20}c} { - x_{i1} x_{i3} } \\ {x_{i1} x_{i2} } \\ \end{array}} \right] \) is continuous vector function.
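The decomposition into \(A_i\), \(B_i\) and \(f_i\) can be checked against the usual component form of the Lü system, \({\dot{x}}_1 = a(x_2 - x_1)\), \({\dot{x}}_2 = -x_1 x_3 + c x_2\), \({\dot{x}}_3 = x_1 x_2 - b x_3\) with \(a=36\), \(b=3\), \(c=20\). The sketch below assumes the isolated node has the form \({\dot{x}}_i = A_i x_i + B_i f_i(x_i)\); the function names are illustrative.

```python
import numpy as np

A_i = np.array([[-36.0, 36.0, 0.0],
                [0.0, 20.0, 0.0],
                [0.0, 0.0, -3.0]])
B_i = np.array([[0.0, 0.0],
                [1.0, 0.0],
                [0.0, 1.0]])

def f_i(x):
    """Nonlinear part f_i(x_i) = [-x_1 x_3, x_1 x_2]^T."""
    return np.array([-x[0] * x[2], x[0] * x[1]])

def isolated_node_rhs(x):
    """Matrix form A_i x + B_i f_i(x)."""
    return A_i @ x + B_i @ f_i(x)

def lu_rhs(x, a=36.0, b=3.0, c=20.0):
    """Component form of the Lu system."""
    return np.array([a * (x[1] - x[0]),
                     -x[0] * x[2] + c * x[1],
                     x[0] * x[1] - b * x[2]])

x = np.array([1.0, 2.0, 3.0])
print(isolated_node_rhs(x))  # [36. 37. -7.]
print(lu_rhs(x))             # [36. 37. -7.]
```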

If we choose \(H_j (x_j ) = \left[ 2\tau \sin (x_{j1}),\tau \sin (x_{j3})\cos (x_{j1} x_{j2}), \tau x_{j2} x_{j3} \right] ^T\), where \(\tau ={\text {randn}}(1)\), then the dynamic equations of the NS and LS are expressed via (2) and (3), respectively. Through simulation observation, we can choose \(h = 10.3\).

In addition, the other simulation parameters can be chosen as follows: the links’ common weight strength \(\rho = 0.001\), the coupling coefficient \(\alpha = 0.03\) and \(s = 3\). The matrices \(\Psi _j \in R^{10 \times 3}\), \(\Theta _1 ,\Theta _2 ,P^* ,\Omega _j ,\Omega _0 \in R^{10 \times 10}\), \(X^ * \in R^{3 \times 10}\), \(W \in R^{20 \times 30}\), \(K \in R^{30 \times 30}\), \(U \in R^{100 \times 100}\) and the initial values of states x(0) and P(0) can be generated by the following rules in Matlab:

(i) Each entry of the initial matrices \(\Theta _1^* \) and \(\Theta _2^*\) is a random number in the range \([ - 2,2]\).

(ii) If the initial matrices \(\Theta _1^* \) and \(\Theta _2^*\) are Hurwitz stable, go to the next step; otherwise, repeat Step (i).

(iii) Let \(\Theta _1 = \xi \cdot \Theta _1^* ,\Theta _2 = \xi \cdot \Theta _2^*\), where \(\xi \) is a positive constant used to adjust the tracking speed. In this paper, we choose \(\xi = 5\).

(iv) The structural balance matrix \(P^ * \in R^{10 \times 10}\) can be obtained by \(P^ * = \Upsilon ^{ - 1} P^ + \Upsilon ^{ - 1}\), where \(\Upsilon \in \Delta \) and each entry of \( P^ + \in R^{10 \times 10}\) is a randomly generated integer in the range [0, 5].

(v) The matrices \(\Psi _j = {\text {rand}}(10,3) - 0.5\) and \(\Omega _j = {\text {rand}}(10,10) - 0.5\), where \(j = 1,2,3\). Select the matrix \(\Omega _0\) so that the condition \({\text {rank}}(\Gamma ) = {\text {rank}}([\Gamma ,G])\) is satisfied. Then, we can obtain the matrix \(X^{*}\) by solving the matrix Eq. (6).

(vi) By solving the Lyapunov equation (4), we can get the matrices W and K. If we choose \({{\widehat{Q}}} = 100I_{100}\), then the matrix U can be obtained by solving the Lyapunov equation (5). Hence, put the corresponding matrices and parameters obtained above into the matrix \(\left[ {\begin{array}{*{20}c} {\lambda _{\min } \left( {{{\widehat{Q}}}} \right) } &{} M \\ M &{} {\lambda _{\min } \left( Q \right) } \\ \end{array}} \right] \), and check whether it is a positive definite matrix. If not, repeat Step (i).

-

(vii)

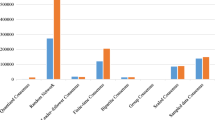

The initial values of states x(0) and P(0) are random numbers in the range of \(\left( { - 3,3} \right) \). By the effect of the controller (9) in NS (1), the simulation results are obtained and shown in Figs. 1, 2, 3 and 4 as following.
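Steps (i) to (iv) of the rules above can be sketched in Python rather than Matlab. The code below uses a smaller network (\(N=4\)) so that the rejection sampling of Hurwitz matrices finishes quickly; the function names, the seed and the retry limit are assumptions, and the sketch does not reproduce the random draws of the paper.

```python
import numpy as np

def random_hurwitz(N, rng, low=-2.0, high=2.0, max_tries=100_000):
    """Steps (i)-(ii): redraw until every eigenvalue has a negative real part."""
    for _ in range(max_tries):
        T = rng.uniform(low, high, (N, N))
        if np.linalg.eigvals(T).real.max() < 0:
            return T
    raise RuntimeError("no Hurwitz matrix found; increase max_tries")

def random_balance_matrix(N, rng):
    """Step (iv): P* = Upsilon P+ Upsilon with integer entries of P+ in [0, 5]."""
    Upsilon = np.diag(rng.choice([1, -1], size=N))
    P_plus = rng.integers(0, 6, size=(N, N))
    # Upsilon is its own inverse because its diagonal entries are +1 or -1.
    return Upsilon @ P_plus @ Upsilon, Upsilon

rng = np.random.default_rng(4)
N, xi = 4, 5.0
Theta1 = xi * random_hurwitz(N, rng)   # Step (iii): scaling by xi > 0 keeps stability
Theta2 = xi * random_hurwitz(N, rng)
P_star, Upsilon = random_balance_matrix(N, rng)

print(np.linalg.eigvals(Theta1).real.max() < 0)
print((Upsilon @ P_star @ Upsilon >= 0).all())
```

For \(N=10\) the fraction of random draws that are Hurwitz is much smaller, so the retry loop in Steps (i) and (ii) can take considerably longer.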

From Figs. 1, 2, 3 and 4, some conclusions can be obtained as follows:

(i) Figures 2 and 4 show that the states of NS (2) and LS (3) asymptotically track the targets \(x^ *\) and \(P^ *\), respectively. This means that structural balance emerges in the network.

(ii) The structural balance matrix \(P^ *\) and the tracking target \(x^ *\) of the NS are coupled to each other. This implies that the tracking target \(x^ *\) can be obtained once the structural balance matrix \(P^ *\) is given. From Figs. 1, 2, 3 and 4, we can see that the state of LS (3) asymptotically tracks the structural balance matrix \(P^ *\) via the coupling matrix \(\Phi (X)\), while the state of NS (2) tracks the target \(x^ *\) under the distributed controller (9). That is, the correlative control of the two subsystems is achieved in this paper.

(iii) Compared with some existing results [12, 13, 21, 22], the advantage of our result on structural balance control is that it is applicable to both directed and undirected networks. Meanwhile, it is worth noting that the concept of structural balance in this paper also applies to networks that are not fully connected. This is why there exist zero link weights in Fig. 3.

(iv) Figure 2 shows that the links are clearly split into two parts (positive and negative). This means that all nodes are asymptotically divided into two factions by the links, so that the links between nodes in the same faction are positive and those between different factions are negative. Similar results have been obtained in [11,12,13, 17,18,19, 21, 22]. However, some models contain clogged states that trap a social network before a configuration of two factions is reached [38], so they do not produce similar results.

(v) From Figs. 1, 2, 3 and 4, we know that the states of nodes and links are all stable, which means that the stability of the nodes contributes to the stability of the connection relationships between them, and vice versa. If this phenomenon is extended to sociology, it can be further used to explain that stable individual behavior is beneficial to social stability (structural balance).

Conclusion

This paper proposes a CDN model composed of NS and LS, and the two subsystems are coupled with each other. Different from most previous studies, we focus on the structural balance of both directed and undirected networks instead of merely focusing on that of undirected networks. We noticed that the structural balance is determined by the state of LS, and there usually exists a coupling relationship between the tracking targets of the two subsystems. That is to say, if the tracking target (a structural balance matrix) of LS is given, we can get the tracking target of NS. Meanwhile, since the states of LS are difficult to measure and distributed control is prevalent, we have designed a distributed controller for each node that tracks its own target. Through the effective coupling, the LS state asymptotically tracks the structural balance matrix, and finally realizes the structural balance. Moreover, this result can be also used as an explanation of “the stable individual behavior is beneficial to social stability” in the social sense. Therefore, the structural balance control method applied to the complex network in this paper can extend the study of the CDNs and enrich the structural balance theory in social networks.

References

Hassanibesheli F, Hedayatifar L, Gawronski P, Stojkow M, Zuchowska-Skiba D, Kulakowski K (2017) Gain and loss of esteem, direct reciprocity and Heider balance. Phys A 468:334–339

Chen CLP, Liu LC, Chen L, Tang YY, Zhou YC (2015) Weighted couple sparse representation with classified regularization for impulse noise removal. IEEE Trans Image Process 24(11):4014–4026

Wei YL, Park JH, Karimi HR, Tian YC, Jung H (2018) Improved stability and stabilization results for stochastic synchronization of continuous-time Semi-Markovian jump neural networks with time-varying delay. IEEE Trans Neural Netw Learn Syst 29(6):2488–2501

Kim HJ, Lee H, Ahn M, Kong HB, Lee I (2016) Joint subcarrier and power allocation methods in full duplex wireless powered communication networks for OFDM systems. IEEE Trans Wirel Commun 15(7):4745–4753

Sotiropoulos DN, Bilanakos C, Giaglis GM (2016) Opinion formation in social networks: a time-variant and non-linear model. Complex Intell Syst 2(4):269–284

Wang CM, Chen XY, Cao JD, Qiu JL, Liu Y, Luo YP (2021) Neural network-based distributed adaptive pre-assigned finite-time consensus of multiple TCP/AQM networks. IEEE Trans Circuits Syst I-Regul Pap 68(1):387–395

Wang YQ (2019) Privacy-preserving average consensus via state decomposition. IEEE Trans Autom Control 64(11):4711–4716

Liu HW, Chen XY, Qiu JL, Zhao F (2021) Finite-time synchronization of complex networks with hybrid-coupled time-varying delay via event-triggered aperiodically intermittent pinning control. Math Meth Appl Sci 2021:1–17. https://doi.org/10.1002/mma.7907

Wang YH, Wang WL, Zhang LL (2020) State synchronization of controlled nodes via the dynamics of links for complex dynamical networks. Neurocomputing 384:225–230

Liu YJ, Park JH, Fang F (2019) Global exponential stability of delayed neural networks based on a new integral inequality. IEEE Trans Syst Man Cybern -Syst 49(11):2318–2325

Marvel SA, Kleinberg J, Kleinberg RD, Strogatz SH (2011) Continuous-time model of structural balance. Proc Natl Acad Sci U S A 108(5):1771–1776

Wongkaew S, Caponigro M, Kulakowski K, Borzi A (2015) On the control of the Heider balance model. Eur Phys J-Spec Top 224:3325–3342

Gao ZL, Wang YH (2018) The structural balance analysis of complex dynamical networks based on nodes’ dynamical couplings. PLoS ONE 13(1):e0191941

Heider F (1946) Attitudes and cognitive organization. J Psychol 21(1):107–112

Cartwright D, Harary F (1956) Structural balance: a generalization of Heider’s theory. Psychol Rev 63(5):277–293

Antal T, Krapivsky PL, Redner S (2006) Social balance on networks: the dynamics of friendship and enmity. Phys D 224(1–2):130–136

Wongkaew S, Caponigro M, Borzi A (2015) On the control through leadership of the Hegselmann-Krause opinion formation model. Math Models Meth Appl Sci 25(3):565–585

Cisneros-Velarde PA, Friedkin NE, Proskurnikov AV, Bullo F (2021) Structural balance via gradient flows over signed graphs. IEEE Trans Autom Control 66(7):3169–3183

Shang YL (2020) On the structural balance dynamics under perceived sentiment. Bull Iran Math Soc 46:717–724

Mei WJ, Cisneros-Velarde P, Chen G, Friedkin NE, Bullo F (2019) Dynamic social balance and convergent appraisals via homophily and influence mechanisms. Automatica 110:108580

Gao ZL, Wang YH, Peng Y, Liu LZ, Chen HG (2020) Adaptive control of the structural balance for a class of complex dynamical networks. J Syst Sci Complex 33(3):725–742

Gao ZL, Wang YH, Zhang LL, Huang YY, Wang WL (2018) The dynamic behaviors of nodes driving the structural balance for complex dynamical networks via adaptive decentralized control. Int J Mod Phys B 32(24):1850267

Liu LZ, Wang YH, Chen HG, Gao ZL (2020) Structural balance for discrete-time complex dynamical network associated with the controlled nodes. Mod Phys Lett B 34(10):2050352

Tsiotras P, Luo JH (2000) Control of underactuated spacecraft with bounded inputs. Automatica 36(8):1153–1169

Gao TT, Huang JS, Zhou Y, Song YD (2017) Robust adaptive tracking control of an underactuated ship with guaranteed transient performance. Int J Syst Sci 48(2):272–279

Lu B, Fang YC, Sun N (2018) Continuous sliding mode control strategy for a class of nonlinear underactuated systems. IEEE Trans Autom Control 63(10):3471–3478

Stulrajter M, Hrabovcova V, Franko M (2007) Permanent magnets synchronous motor control theory. J Electr Eng 58(2):79–84

Zhang X, Zhou WN, Karimi HR, Sun YQ (2021) Finite- and fixed-time cluster synchronization of nonlinearly coupled delayed neural networks via pinning control. IEEE Trans Neural Netw Learn Syst 32(11):5222–5231

Shi L, Zhang CM, Zhong SM (2021) Synchronization of singular complex networks with time-varying delay via pinning control and linear feedback control. Chaos, Solitons Fractals 145:110805

Pei Y, Bohner M, Pi DC (2019) Impulsive synchronization of time-scales complex networks with time-varying topology. Commun Nonlinear Sci Numer Simulat 80:104981

Zhang LL, Wang YH, Huang YY, Chen XS (2015) Delay-dependent synchronization for non-diffusively coupled time-varying complex dynamical networks. Appl Math Comput 259:510–522

Xu YH, Zhou WN, Zhang JC, Sun W, Tong DB (2017) Topology identification of complex delayed dynamical networks with multiple response systems. Nonlinear Dyn 88(4):2969–2981

Altafini C (2013) Consensus problems on networks with antagonistic interactions. IEEE Trans Autom Control 58(4):935–946

Bahuguna D, Ujlayan A, Pandey DN (2007) Advanced type coupled matrix Riccati differential equation systems with Kronecker product. Appl Math Comput 194:46–53

Pagilla PR, Siraskar NB, Dwivedula RV (2006) Decentralized control of web processing lines. IEEE Trans Control Syst Technol 15(1):106–117

Abjadi NR, Soltani J, Askari J, Markadeh GRA (2009) Nonlinear sliding-mode control of a multi-motor web-winding system without tension control. IET Contr Theory Appl 3(4):419–427

Lv JH, Chen GR, Zhang SC (2002) Dynamical analysis of a new chaotic attractor. Int J Bifurcation Chaos 12(5):1001–1015

Marvel SA, Strogatz SH, Kleinberg JM (2009) Energy landscape of social balance. Phys Rev Lett 103(19):198701

Acknowledgements

This work was supported by the Chongqing Social Science Planning Project (2021BS038), National Science Foundation of China (61773082, 61673120, 61603098), Key Natural Science Foundation of Chongqing (cstc2017jcyjBX0018), National Key R&D Program of China (2018YFB1600502), Natural Science Foundation of Chongqing (cstc2021jcyj-bshX0035, cstc2018jcyjA2453), Humanities and Social Sciences Research Program of Chongqing Municipal Education Commission (22SKGH343), Key Project of CQUPT (A2018-02), and Key Laboratory of Chongqing Municipal Institutions of Higher Education ([2017]3).

Ethics declarations

Conflicts of interest

The work described has not been submitted elsewhere for publication, in whole or in part, and all the authors listed have approved the manuscript that is enclosed. Moreover, the authors declare that they have no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gao, Z., Li, Y., Wang, Y. et al. Distributed tracking control of structural balance for complex dynamical networks based on the coupling targets of nodes and links. Complex Intell. Syst. 9, 881–889 (2023). https://doi.org/10.1007/s40747-022-00840-4

DOI: https://doi.org/10.1007/s40747-022-00840-4