Abstract

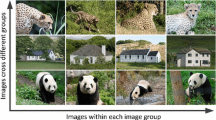

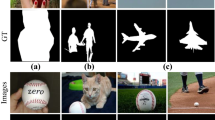

Co-salient object detection, a branch of salient object detection, aims to find the salient objects that are common to a group of images. This paper proposes a global-guided cross-reference network. Its cross-reference module enhances the multi-level features from two perspectives. From the spatial perspective, it highlights the locations of objects with similar appearances. From the channel perspective, it assigns more attention to channels that indicate the same object category. After spatial and channel cross-reference, the enhanced features carry a consensus representation of the image group. A global co-semantic guidance module then provides the hierarchical features with the location information of the co-salient objects. Compared with state-of-the-art co-salient object detection methods, the proposed method extracts collaborative information and obtains better co-saliency maps on several challenging co-saliency detection datasets.
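To make the two perspectives concrete, the following is a minimal sketch of channel-wise and spatial reweighting against a group consensus. It is not the authors' implementation: the abstract does not specify the operations, so the consensus (a group mean of globally pooled features), the sigmoid channel gate, the cosine-similarity spatial weight, and all function names are assumptions chosen for illustration. Each image feature is a C x P nested list (C channels, P flattened spatial positions).

```python
import math

def global_avg_pool(feat):
    """Collapse a C x P feature (list of channels) to a length-C descriptor."""
    return [sum(ch) / len(ch) for ch in feat]

def group_consensus(group):
    """Assumed consensus: mean of the pooled descriptors over the image group."""
    pooled = [global_avg_pool(f) for f in group]
    n = len(pooled)
    return [sum(p[c] for p in pooled) / n for c in range(len(pooled[0]))]

def channel_cross_reference(group):
    """Scale every channel by a sigmoid gate computed from the group consensus."""
    gates = [1.0 / (1.0 + math.exp(-v)) for v in group_consensus(group)]
    return [[[g * x for x in ch] for g, ch in zip(gates, feat)] for feat in group]

def spatial_cross_reference(group):
    """Weight every position by its cosine similarity to the group consensus."""
    consensus = group_consensus(group)
    c_norm = math.sqrt(sum(v * v for v in consensus)) or 1.0
    out = []
    for feat in group:
        weights = []
        for p in range(len(feat[0])):
            vec = [ch[p] for ch in feat]  # C-dim feature at position p
            v_norm = math.sqrt(sum(v * v for v in vec)) or 1.0
            cos = sum(a * b for a, b in zip(vec, consensus)) / (v_norm * c_norm)
            weights.append(max(cos, 0.0))  # negative similarity is suppressed
        out.append([[w * x for w, x in zip(weights, ch)] for ch in feat])
    return out

# Toy group: channel 0 fires in both images (the shared object),
# channel 1 fires only at position 1 of the first image (a distractor).
group = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[0.0, 2.0], [0.0, 0.0]],
]
by_channel = channel_cross_reference(group)
by_space = spatial_cross_reference(group)
```

In this toy group, both steps leave the response of the shared channel stronger than that of the distractor in the first image, which is the qualitative behavior the abstract describes. A real network would learn these weights with convolutional layers rather than use fixed pooling and cosine similarity.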

Acknowledgements

This work was supported by the Natural Science Foundation of Anhui Province and the National Natural Science Foundation of China.

Funding

Zhengyi Liu was supported by the Natural Science Foundation of Anhui Province (1908085MF182); Yun Xiao was supported by the National Natural Science Foundation of China (61602004).

Author information

Contributions

Zhengyi Liu contributed the main design ideas for the method. Hao Dong was responsible for coding and experimental testing. Zhili Zhang was responsible for the statistics and plotting of the experimental data. Yun Xiao was responsible for project administration.

Ethics declarations

Conflict of interest

The authors have no relevant financial or nonfinancial interests to disclose.

Code availability

The source code is available at https://github.com/liuzywen/CoSOD.

Ethics approval

This article does not contain any studies with human or animal participants.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Liu, Z., Dong, H., Zhang, Z. et al. Global-guided cross-reference network for co-salient object detection. Machine Vision and Applications 33, 73 (2022). https://doi.org/10.1007/s00138-022-01325-7

DOI: https://doi.org/10.1007/s00138-022-01325-7