Abstract

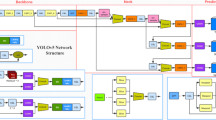

The accuracy of visibility detection strongly affects daily life and traffic safety. Existing visibility detection methods based on deep learning rely on massive numbers of haze images to train neural networks, and the resulting detection models are prone to overfitting when only small samples are available. To overcome this limitation, a large amount of measured data is used to train and optimize a convolutional neural network, and an improved DiracNet method is proposed to improve the accuracy of the algorithm. Building on this, a combined multi-mode algorithm is proposed to fit small samples and train an effective model in a short time. The improved DiracNet and the combined multi-mode algorithm are verified using measured atmospheric fine particle concentration data (PM1.0, PM2.5, PM10) and haze video data. The validation results demonstrate the effectiveness of the proposed algorithm.

References

Graves, N., & Newsam, S. (2011). Using visibility cameras to estimate atmospheric light extinction, Applications of Computer Vision (WACV). Kona: IEEE.

Varjo, S., Kaikkonen, V., Hannuksela, J., et al. (2015). All-in-focus image reconstruction from in-line holograms of snowflakes, 2015 IEEE International Instrumentation and Measurement Technology Conference (I2MTC) Proceedings, 2015. Pisa: IEEE.

Li, G., Wu, J. F., & Lei, Z. Y. (2014). Research progress on image smog grade evaluation and defogging technology. Laser Journal, 9(1), 1–6.

Cheng, X., Yang, B., Liu, G., et al. (2018a). A total bounded variation approach to Low visibility estimation on expressways. Sensors, 18(2), 392–392.

Zhou, J. (2017). Study of visibility detection algorithm based on traffic image. Techniques of Automation and Applications, 36(10), 100–103.

Chen, Z., Yan, Z., & Yao, Z. (2019). Visibility measurement using dark channel prior method based on adaptive fog concentration coefficient. Modern Electronics Technique, 42(9), 39–45.

Liu, J., & Liu, X. (2015). Visibility estimation algorithm for fog weather based on inflection point line. Journal of Computer Applications, 35(2), 528–530.

Chen, Z. (2016). PTZ visibility detection based on image luminance changing tendency. International Conference on Optoelectronics & Image Processing. IEEE.

Hautiere, N., Labayrade, R., & Aubert, D. (2006a). Real-Time Disparity Contrast Combination for Onboard Estimation of the Visibility Distance. IEEE Transactions on Intelligent Transportation Systems, 7(2), 201–212.

Babari, R., Hautiere, N., Dumont, £., et al. (2011). A model-driven approach to estimate atmospheric visibility with ordinary cameras. Atmospheric Environment, 45(30), 5316–5324.

Cheng, X., Yang, B., Liu, G., et al. (2018b). A variational approach to atmospheric visibility estimation in the weather of fog and haze. Sustainable Cities & Society, 39(1), 215–224.

Wang, T. (2019). Research on haze visibility detection based on spectrum analysis and improved inception. Nanjing University of Posts and Telecommunications.

Hongjun, L. V. (2019). Research on fog visibility detection algorithms based on deep learning. Nanjing University of Posts and Telecommunications.

Hautiére, N., Tarel, J. P., Lavenant, J., et al. (2006b). Automatic fog detection and estimation of visibility distance through use of an onboard camera. Machine Vision and Applications, 17(1), 8–20.

Koschmieder, H. (1924). Theorie der horizontalen Sichtweite: Kontrast und Sichtweite. Keim & Nemnich, pp. 171–181.

He, K., Zhang, X., Ren, S., et al. (2016). Deep Residual Learning for Image Recognition, 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Las Vegas: IEEE Computer Society.

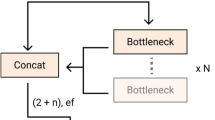

Zagoruyko, S., & Komodakis, N. (2018) DiracNets: Training Very Deep Neural Networks Without Skip-Connections, 2018 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018. Salt Lake: IEEE Computer Society.

Zamir, A., Sax, A., Shen, W., et al. (2018). Taskonomy: Disentangling Task Transfer Learning, 2018 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018. Salt Lake: IEEE Computer Society.

Acknowledgements

This work is supported by the Opening Fund of the State Key Laboratory of Severe Weather of the China Meteorological Administration (Grant No. 2021LASW-A07), the Universities Natural Science Research Project of Jiangsu Province (Grant No. 19KJB510048), the Opening Fund of the National Key Laboratory of Solid Microstructure Physics at Nanjing University (Grant No. M30006), and the Post-doctoral Fund of Jiangsu Province (Grant No. 1701132B).

Ethics declarations

Conflict of Interest

The authors have no competing interests to declare that are relevant to the content of this article.

About this article

Cite this article

Xiyu, M., Qi, X., Qiang, Z. et al. A Combined Multi-Mode Visibility Detection Algorithm Based on Convolutional Neural Network. J Sign Process Syst 95, 49–56 (2023). https://doi.org/10.1007/s11265-022-01792-1

DOI: https://doi.org/10.1007/s11265-022-01792-1