Abstract

Holographic three-dimensional display is an important display technique because it can provide all depth information of a real or virtual scene without any special eyewear. In recent years, with the development of computer and optoelectronic technology, computer-generated holograms have attracted extensive attention and developed as the most promising method to realize holographic display. However, some bottlenecks still restrict the development of computer-generated holograms, such as heavy computation burden, low image quality, and the complicated system of color holographic display. To overcome these problems, numerous algorithms have been investigated with the aim of color dynamic holographic three-dimensional display. In this review, we will explain the essence of various computer-generated hologram algorithms and provide some insights for future research.

Similar content being viewed by others

Introduction

Display technology is very important for humans to acquire information. However, traditional two-dimensional (2D) display technology can only display a 2D projection image from one side of the three-dimensional (3D) scene, which loses the depth information and affects the 3D spatial information acquisition. In recent years, 3D display technology has attracted more and more attention. Among all existing 3D display technologies, holographic 3D display is regarded as the most promising 3D display technology because it can reconstruct all information of a real or virtual scene and bring no visual fatigue1.

Holography was invented by Denis Gabor in 1947 to improve the resolution of electron microscopy2. Like his name representing the meaning of whole, holography is a technique to record and reconstruct all the physical information of a 3D scene based on interference and diffraction theory as shown in Fig. 1. In recording process, the object light O and the reference light R interfere in the hologram plane and the interference fringe I is recorded on the hologram as shown in Eq. (1). In reconstruction process, the hologram is illuminated by the reconstruction light Re. If the reconstruction light Re is chosen as the reference light R, the third term of the diffraction light U is, up to a multiplicative constant, an exact duplication of the original object light O as shown in Eq. (2), which indicates that the original object can be reconstructed successfully. Due to the invention of holography, Denis Gabor won the Nobel Prize in Physics in 1971. However, the application of holography was restricted in many years because on-axis holography has inability to solve the problem of the twin image. In 1962, Leith and Upatnieks separated the twin image by increasing the carrier frequency of the reference light, thereby greatly expanding the applicability of holography3,4,5. In the same year, Denisyuk invented the reflection hologram, which can be reconstructed by white light because of the high degree of selectivity to wavelength6,7. Afterwards, Benton invented the two-step rainbow hologram8. Rainbow hologram can be reconstructed by white light because it minimizes the blurring caused by the dispersion of the transmission hologram via abandoning the parallax information in the vertical direction.

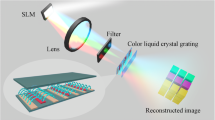

The traditional optical holography relies on the optical systems and photosensitive materials to complete recording and reconstruction process as shown in Fig. 2a and 2d. Optical holograms (OHs) are usually static holograms and have strict requirements on the stability of the optical systems, which restrict the application of optical holography in dynamic holographic 3D display. With the development of computer and optoelectronic technology, computer-generated holography has become an international research hotpot. In computer-generated holography, the recording process can be simulated by computers and the reconstruction can be realized by loading the computer-generated holograms (CGHs) on the spatial light modulators (SLMs) with coherent light illumination as shown in Fig. 2b and 2e. Compared with optical holography, computer-generated holography can record not only real objects but also virtual objects without complex optical systems and can realize dynamic holographic 3D display with the help of refreshable SLMs9,10,11,12,13. Because of these advantages, computer-generated holography is a future-oriented 3D display technology and can be used in many fields, such as education, entertainment, military and medical treatment14,15. In addition, digital holography, which records digital holograms (DHs) by sensors in optical systems and reconstructs optical wavefronts digitally as shown in Fig. 2c and 2f, has also been presented and applied in many fields, such as quantitative phase imaging and optical metrology16,17.

In 1960s, Kozma and Kelly combined computer and spatial frequency domain filtering technology to design matched spatial filters artificially18, which laid the foundation for computer-generated holography. In 1966, Lohmann and Brown devised detour phase method to encode the optical wavefronts into holograms by computer19. In 1967, Lohmann and Paris applied the fast Fourier transform (FFT) algorithm to calculate the Fourier transform CGH20, which greatly shortened the calculation time. In 1974, Lesem et al. proposed kinoform, which has high diffraction efficiency and holds a very important status in current CGH technology21. Since 1980s, various CGH algorithms have emerged in succession22,23,24,25,26,27,28. Meanwhile, novel modulation devices, such as acousto-optic modulators29, digital micromirror devices30, and liquid crystal displays31, were applied in computer-generated holography. In the last decade, with the rapid development of computer and material, computer-generated holography has made great progress and is expected to be commercialized in a near future32.

At present, the difficulties faced in computer-generated holography mainly focus on the following aspects: Firstly, the current calculation algorithms are not fast enough; secondly, the existing algorithms have the problem of limited reconstruction quality; thirdly, the traditional color holographic display system is complicated; finally, the unwanted terms and modulation accuracy of the SLM hinder the holographic display effect. In this paper, we provide a review of various CGH algorithms which aim to overcome the above difficulties. Organization of the paper is given as follows. Following the introduction, we review three main fast calculation algorithms in Section 2. In Section 3, we discuss various CGH optimized algorithms which aim to enhance the image quality and suppress the speckle noise. In Sections 4, we introduce some color-multiplexing coding (CMC) methods. Then we report some technologies to eliminate the unwanted terms and achieve accurate modulation in Section 5. Finally, conclusions and suggestions for future work are provided in Section 6.

Fast calculation

In CGH calculation, the 3D object is always decomposed into numerous primitives and the hologram is obtained by superposing the fringe patterns (FPs) of all primitives in the hologram plane. Therefore, the computation involved in CGH generation is huge and it is still a great challenge to realize dynamic holographic 3D display, especially when the 3D object is complicated and the size of the hologram is large. In the last decades, numerous fast computation algorithms have been proposed to accelerate CGH generation. From the perspective of the computational primitive, the algorithms can be divided into three main categories: (a) point-based method33, (b) polygon-based method34 and (c) layer-based method as shown in Fig. 3.

Point-based method

Point-based method, where the 3D object is represented by millions of points, is a simple and widely used method to calculate the CGHs. In calculation, each object point is regarded as a self-luminous point source and emits spherical wave irradiating the hologram plane. The complex amplitude distribution in the hologram plane can be obtained by superposing the FPs of all object points. From above, it can be easily seen that the main calculation involved in point-based method is the generation of the FPs. Hence, the computation burden can be reduced dramatically if the FPs of all possible object points can be calculated in advance and stored in the computer. Inspired by this idea, Lucente proposed look-up table (LUT) method22, which consists of off-line computation and on-line computation. In off-line computation, the FPs of all possible object points are pre-calculated and stored in a table. In on-line computation, the CGHs are generated by reading and superposing the pre-stored FP of each object point after being multiplied by its amplitude. The LUT method speeds up the hologram generation compared with point-based method and opens a door in terms of trading memory usage for computation speed. However, the required memory usage to store the pre-calculated FPs in LUT method is too large.

In order to reduce the memory usage of LUT, novel-LUT (N-LUT) method35 was proposed. In N-LUT method, a 3D object is divided into multiple 2D sliced planes along the axial direction, and only the FP of the center object point in each sliced plane, called principal fringe pattern (PFP), is pre-calculated and stored in a table. The FPs of other object points can be calculated by shifting the PFP in the same sliced plane according to the relative position relation in space coordinates, and the CGHs are obtained by summing the shifted PFPs of all object points after being multiplied by the corresponding amplitude. Although the memory requirement of N-LUT method reduces dramatically compared with LUT method, it is still large and affects the speed of data acquisition seriously. For some desired 3D objects, the neighboring object points have the same intensity and depth value. Based on this phenomenon, the N-point PFPs are generated by accumulating the FPs of the neighboring object points in off-line calculation instead of on-line calculation36. In this way, the amount of on-line calculation is reduced in comparison with N-LUT method. Although this method is effective to accelerate CGH calculation, it is not a common method for all desired 3D objects. Therefore, scholars implemented further research to obtain a better balance among calculation speed, memory usage, and precision. The non-uniform sampling is an effective way to reduce the storage amount without extra on-line computation37,38. Nevertheless, the stored data for a single depth plane is still a 2D matrix.

The PFP is a function of the radius and independent of the azimuth. Based on this phenomenon, scholars proposed retrieving the PFP of a single depth plane by its radial line, which is a one-dimensional (1D) vector39,40,41. However, this method may lead the missing of some pixels in the PFP. Along this way, split-LUT (S-LUT) method42 was proposed, where a smart approximation is used and the FP of each object point is split by the horizontal and vertical modulation factors. In on-line computation, the FPs can be calculated by multiplying the horizontal and vertical modulation factors according to the coordinate indexes. The S-LUT method brings a trivial approximate but achieves less memory usage and faster computational speed at the same time. According to the above statement, the memory usage of a 3D object in S-LUT method depends on the number of the depth planes and will be huge if the number of the depth planes is large. In order to solve this problem, compressed-LUT (C-LUT) method43 was developed. In C-LUT method, the horizontal and vertical modulator factors are separated from the depth information. Hence, only the horizontal and vertical modulation factors of the first depth plane need to be pre-calculated and the FPs of different depth planes can be generated by multiplying the corresponding longitudinal modulation factors. C-LUT method is based on an approximation where the depth of the 3D object is considerably smaller than the distance between the object and the hologram. As a result, there is distortion in the reconstructed images and the distortion increases with the increment of the object depth. Later, accurate C-LUT method44, accurate high C-LUT method45 was proposed in succession to alleviate the distortion by executing additional exponential computation.

Another way to speed up the calculation in point-based method is to reduce the calculation region of each object point. Inspired by this idea, Shimobaba et al. developed wavefront recording plane (WRP)46, which is a virtual plane and placed between the 3D object and the hologram, to accelerate the computation. This method consists of two steps. In the first step, the complex amplitude distribution of a 3D object on the WRP is recorded by point-based method. In the second step, the complex amplitude distribution on the CGH is calculated by executing Fresnel diffraction of convolution form between the WRP and CGH. Since WRP has the amplitude and phase information of a 3D object, the diffraction calculation from the WRP to the CGH is equivalent to calculate the complex amplitude distribution on the CGH from a 3D object directly. If the WRP is placed to the 3D object closely and the thickness of the 3D object is small, each object point will emit a wavefront covering only a small region on the WRP due to the restriction of the maximum diffraction angle47. In this way, the computation burden can be reduced dramatically because the size of the FPs on the WRP is much smaller than that of the CGH48. In order to obtain further acceleration, LUT method can be used in the first step49,50. From above, it is obvious that WRP method is very efficient in dealing with the 3D objects within small depth range because the size of the FPs on the WRP is proportional to the distance between the object point and the WRP. In other words, the computation efficiency will decrease dramatically if the depth range of the 3D object is large. In order to address this problem, double WRP51 and multiple WRP52 methods were proposed. In these methods, the 3D object is partitioned into two or more regions with small depth range along the axial direction. Then WRPs are placed near each region to record the wavefront of the corresponding region and the CGHs are finally generated by summing the contributions of all WRPs. In addition, tilted WRP method was also proposed to generate CGHs of a deep structure object scene53. Furthermore, the wavelet transform was also employed to reduce the amount of computation in WRP method54,55.

Polygon-based method

Although numerous fast calculation methods based on point-based method have been proposed, it is still time-consuming due to the huge amount of object points. In order to accelerate the calculation, researchers developed polygon-based method, which regards the 3D object as thousands of polygons rather than millions of points. In this way, the amount of the computational unit is significantly decreased. In polygon-based method, each polygon is regarded as a polygonal aperture, and the CGHs are obtained by adding the diffraction patterns of all polygonal apertures. In addition, combining with the rendering algorithms of computer graphics, polygon-based method can easily add texture and shade to the 3D scene. Usually, a 3D object is divided into thousands of tilted polygons which are not parallel to the hologram plane in polygon-based method. Hence, the core issue in polygon-based method is the diffraction calculation between the tilted plane and the hologram plane. Currently, polygon-based method can be divided into four categories, including traditional polygon-based method, full analytical polygon-based method, spatial approximate polygon-based method, and 3D affine transformation polygon-based method.

In polygon-based method, a 3D object is always investigated in two coordinate systems. One is the local coordinate system; the other is the global coordinate system. In implementation, traditional polygon-based method56,57,58,59 depicts the tilted polygon in the local coordinate system, computes the spectrum by FFT and the new frequency from the 3D rotational transformation matrix, rotates the spectrum to the global coordinate system by interpolation. After the spectrum propagates to the hologram plane in the global coordinate system, the diffraction pattern can be calculated by inverse FFT. For each polygon, traditional polygon-based method must conduct one polygon depiction, one FFT, and one 2D linear interpolation, which are time-consuming. Nevertheless, traditional polygon-based method can add texture for each independent facet and reconstruct realistic 3D scene.

In order to alleviate the heavy computation of traditional polygon-based method, full analytical polygon-based method was proposed, which aims to establish a connection between the spectrum of the primitive polygon and that of the arbitrary polygon60,61. Full analytical polygon-based method defines a primitive polygon and computes the analytical spectrum firstly. Then it implicitly uses the Fourier analysis of the 2D affine transformation, the 3D rotational transformation, and the angular spectrum propagation to compute the diffraction pattern in the hologram plane from the analytical spectrum. Although full analytical polygon-based method avoids polygon depiction and FFT for each polygon, it needs extra diffusion and texture adding computation to reconstruct realistic 3D objects, which are both time-consuming.

Spatial approximate polygon-based method uses the precomputed diffraction pattern of the primitive polygon62 to calculate the diffraction pattern of the tilted polygon approximately, which has the translational, rotational, and scaling relation with the primitive polygon. This method avoids FFT because all the computations are in the spatial domain and can add diffusive information without extra computation. However, the reconstruction distance is constrained and the texture cannot be added for each independent polygon.

Traditional 3D affine transformation polygon-based method63 defines a primitive polygon with an amplitude and a phase function in the local coordinate system and computes its spectrum firstly. Then the pseudo inversion matrix is used to calculate the core parameters in the 3D affine transformation matrix from the vertex vectors of the primitive polygon and the tilted polygon. Finally, the CGHs can be calculated in one step using the core parameters in the 3D affine transformation matrix because the 3D affine transformation contains the translational, rotational, and scaling transformation in 3D space. This method saves the time in polygon depiction and FFT, needs no extra diffusion computation and has no depth limitation. To speed up the computation, full analytical 3D affine transformation polygon-based method64,65 was also proposed, where one primitive triangle is defined and its analytical spectrum is explicitly expressed. The global angular spectrum of an arbitrary polygon in the hologram plane is calculated by using the analytical spectrum of the primitive triangle and 3D affine transformation matrix. Moreover, LUT method was also applied in polygon-based method to speed up the calculation by pre-calculating and storing the analytical spectrum in a table66.

In conclusion, polygon-based method has less computational unit compared with point-based method. However, the computation in each unit is more complex, such as depiction and interpolation. Although several improved polygon-based methods have been proposed to avoid above complex computation, they need extra diffusion and texture adding computation, which are also time-consuming.

Layer-based method

Point-based method and polygon-based method can provide precise geometrical information of a 3D scene, whereas the amount of the computational unit is extremely large. In recent years, layer-based method was developed to reduce the computational unit and accelerate the computation67. In layer-based method, the 3D object is divided into several layers parallel to the hologram plane and each layer is regard as an independent computational unit. Then Fresnel diffraction is used to calculate the sub-hologram of each layer and the CGHs are obtained by the superimposition of all sub-holograms. Considering the limited accommodation resolution of human eyes, the computational unit in layer-based method is small compared with point-based method and polygon-based method. Afterwards, the angular-spectrum method was also used in layer-based method to avoid the paraxial approximation and calculate accurate diffracted field68. From above, it is obvious that 2D FFT is the basic mathematics tool in layer-based method and limits the calculation speed no matter what kind of diffraction method is used. Accordingly, some improved methods were proposed to speed up the calculation, such as non-uniform 2D FFT method69, sub-sparse 2D FFT method70 and so on71,72.

Image quality enhancement and speckle suppression

In holographic display, the CGHs are always loaded on the SLM to acquire the target wavefront, so the feature of the SLM has great influence on holographic display effect. At present, most commercial SLMs can hardly modulate amplitude and phase simultaneously and independently. In other words, there is information loss in CGH encoding process, which degrades the reconstruction quality. The reconstructed image is composed of spots and each spot has an area known as the Airy disk. Hence, the actual intensity of the reconstructed image is determined by not only the amplitude distribution but also the phase distribution due to the interference in the overlapping spot areas. It is well known that random phase (RAP) is widely utilized to diffuse the object information in the hologram plane in CGH calculation. As a result, there are unwanted interferences between the adjacent pixels due to the phase differences, which are known as the speckle noise. In the last decades, numerous optimized algorithms have been developed to enhance the reconstruction quality and suppress the speckle noise. From the principle, the algorithms can be divided into phase optimization method, complex amplitude modulation (CAM) method and other methods.

Phase optimization method

The traditional phase optimization method is iterative algorithm. Gerchberg-Saxton (GS) algorithm is a widely used iterative algorithm to optimize the phase distribution73 as shown in Fig. 4. To begin with, the RAP φ0 is used to multiply the target image as the initial input complex amplitude A0 = | At | exp(jφ0) in the image plane, where |At | is the amplitude distribution of the target image. In each iteration, there are four steps as shown in Eq. (3): (1) the complex amplitude field Hk = |Hk | exp(jΦk) in the hologram plane is calculated by inverse Fourier transform (IFT) of the complex amplitude field Ak = |At | exp(jφk) in the image plane, where k represents the kth iteration; (2) the phase-only hologram (POH) H'k = exp(jΦ'k) is generated from the complex amplitude field Hk after phase extraction and amplitude normalization; (3) the reconstructed complex amplitude field A'k = |A'k | exp(jφ'k) in the image plane is calculated by Fourier transform (FT) of the POH H'k; (4) the input complex amplitude Ak+1 for the next iteration is generated after the reconstructed amplitude |A'k | is replaced by the target amplitude |At | . In implementation, GS algorithm reduces the errors within the first few iterations rapidly, whereas the convergence slows down or even stagnates in subsequent iterations.

In order to speed up convergence, Fienup algorithm was developed. The first three steps of Fienup algorithm are the same as GS algorithm. In the fourth step, the linear combination of the target amplitude and the reconstructed amplitude is used as the input amplitude for the next iteration instead of imposing the target amplitude directly74 as shown in Eq. (4). Afterwards, the nonlinear combination of the target amplitude and the reconstructed amplitude was also presented to improve the computation efficiency75.

where α is set for enhancing the convergence.

Although GS algorithm and Fienup algorithm can achieve effective convergence, they always stagnate into the local minimum. Instead of imposing amplitude constraint in the entire image plane, Fidoc algorithm has introduced amplitude freedom in the image plane to improve the reconstruction quality76. In Fidoc algorithm, the image plane is partitioned into signal region and freedom region. In each iteration, the amplitude distribution in the signal region is constrained, whereas the constraint in the freedom region is relaxed as shown in Eq. (5). Along this way, the noise suppression parameter77 and weighted constraint iterative algorithm78 were developed in succession to suppress the noise in the freedom region and improve the diffraction efficiency.

where α is set for enhancing the convergence, γ is set for suppressing the noise and M is a 2D matrix, the value in the signal region is 1 and the value in the freedom region is 0.

Iterative algorithms can optimize the phase distribution effectively by repeating forward and backward diffraction calculation between the image plane and the hologram plane. However, they are time-consuming and not suitable to realize dynamic display. Hence, some non-iterative algorithms have also been proposed to improve the reconstruction quality. As we know, the initial RAP with 2π range causes excessive diffusion of the object information, especially for high frequency region. In other words, it does not need RAP with 2π range as the initial phase to be added on the target image. Inspired by this idea, limited random phase method79, where RAP with 1.2π range is provided for the gray image and RAP with 1.5π range is added on the binary image, and gradient-limited random phase80,81, where RAP with different ranges is provided for the low frequency region and the high frequency region of the target image, were proposed successively. Furthermore, a frequency-based optimized random phase method82 was also developed, where RAP with different frequency ranges and maximal values is added on different frequency regions of the target image with the help of the Butterworth filte. Although above methods can enhance the reconstruction quality, the speckle noise could not be suppressed completely due to the presence of the RAP. Recently, patterned-POH method was proposed, where a tiled random phase mask is added to the desired image83. The tiled random phase mask acts as a diffuser and smooths the magnitude of the diffracted waves to a near uniform distribution. In addition, several RAP-free methods were also proposed to suppress the speckle noise. In RAP-free methods, virtual convergence light84,85 and quadratic phase86,87 with continuous distributed spectrum were utilized as the initial phase distribution of the desired image to generate Fresnel and Fourier POHs, respectively. Owing to the avoidance of the RAP, RAP-free methods can suppress the speckle noise effectively.

Moreover, optimized random phase (ORAP) method88, which combined iterative algorithms and non-iterative algorithms, was also developed to implement the generation of POHs for arbitrary target images. This method consists of off-line calculation and on-line calculation as shown in Fig. 5, where the red dashed line represents off-line calculation and the green dashed line represents on-line calculation. In off-line calculation, a target window |A0 | corresponding to the size of the target image is multiplied by the random phase φ0 as the initial input complex amplitude A0 = | A0 | exp(jφ0) in the image plane and GS algorithm is performed to optimize the phase of the reconstructed target window. Once the GS algorithm is completed, the phase of the reconstructed target window φ'k (ORAP) can be taken as the initial phase distribution φ of arbitrary target images |At | with the same size of the target window to generate POHs Φ as shown in Eq. (6). ORAP method avoids the on-line iterative calculation and is suitable for dynamic holographic display. Thereafter, ORAP method was extended to Fresnel region with the help of iterative Fresnel transform algorithm89. Moreover, tiled random phase mask90 and quadratic phase91 were also combined with ORAP method to suppress the speckle noise and achieve high-quality reconstruction.

where angle represents phase extraction operation.

CAM method

As mentioned above, there is information loss when the CGHs are loaded on the SLM. To avoid the information loss and realize CAM, both device method and encoding method have been proposed. So far, device method can be mainly classified into two categories according to the number of SLMs in the optical system. One is device method based on multiple SLMs; the other is device method based on single SLM. In 1984, Bartelt used two phase-only transmissive elements as spatial filters and auxiliary optical system to form a complex amplitude spatial filter92. Subsequently, several cascaded methods for different types of liquid crystal displays were proposed and demonstrated93,94,95,96. Afterwards, inspired by the superposition of light waves in the interferometer, researchers proposed interference method97,98 to achieve CAM, where the complex hologram is decomposed into two amplitude-only holograms (AOHs) or POHs based on analytic formula as shown in Eq. (7) and the decomposed two holograms are uploaded on two SLMs which are placed in the interferometer as shown in Fig. 6a. Thereafter, iteration and phase compensation method99 was also proposed. In this method, the POH uploaded on the first SLM is calculated by iterative algorithm according to the target amplitude and the second SLM is used to compensate the phase reconstructed by the first SLM. Moreover, a system which can control the amplitude and phase arbitrarily by changing the phase distribution loaded on two polarization-dependent SLMs with the assistance of a polarizer and a half-wave plate100 was also designed. As mentioned above, it is obvious that the optical structure of device method based on multiple SLMs is complicated and the alignment is hard to be achieved no matter what kind of system is used.

where Aexp(jφ) is the complex hologram, Acosφ and Asinφ are the decomposed two AOHs, exp(jθ1) = exp{j[φ + cos−1(A/2)]} and exp(jθ2) = exp{j[φ − cos−1(A/2)]} are the decomposed two POHs.

In order to simplify the optical system and avoid alignment, several device methods based on single SLM were designed to achieve CAM in the last decade. In 2011, Liu et al. uploaded the decomposed two AOHs in different regions of the SLM and utilized an amplitude grating filter as a frequency filter to couple them to a complex hologram in the output plane of the 4 f system101. Afterwards, the combination of two POHs and an amplitude grating or a phase grating102,103 was also utilized to generate a complex hologram as shown in Fig. 6b. Besides, grating filter can also synthesize two POHs arranged in a checkerboard pattern to a complex hologram with the help of a microprism array104. Although grating filter is widely used to generate the complex hologram, it introduces extra orders and reduces the efficiency for luminous energy utilization. In addition to grating filter, the combination of a structured half-wave plate, a birefringent crystal and a polarizer105 was used to control the amplitude and phase accurately and independently. However, the polarizer also reduces the efficiency for luminous energy utilization. Moreover, the iteration and phase compensation method was optimized by using a single SLM with the assistance of a reflective concave mirror106. Although this scheme simplifies the optical system, the iterative calculation is still time-consuming.

Compared with device method, encoding method is more convenient and has been widely applied in holographic display field. A well-known encoding method to generate complex amplitude field is superpixel method107,108,109,110. A superpixel contains several actual pixels of the SLM and represents a pixel of the target complex amplitude. In implementation, the actual value loaded on each pixel of the SLM is a part of the target complex amplitude which meets the modulation characteristics of the SLM. According to the sampling theorem, the light wave is resampled after passing through a low-pass filter and the CAM can be achieved successfully by controlling the aperture size of the filter. Working along this direction, double-phase hologram (DPH) method based on single-pixel modulation111,112,113 was also proposed to achieve compact arrangement of each decomposed part and high utilization efficiency of the spatial bandwidth product (SBP). In this method, the target complex amplitude is decomposed into two POHs and complementary 2D binary gratings (checkerboard patterns) are utilized to sample the POHs and combine them into a DPH as shown in Fig. 7a and Eq. (8). Compared with superpixel method, this method ensures that the number of sampling points between the target image and the hologram is always consistent and has high computational efficiency. However, due to the complementary sampling, the reconstructed results are affected by the approximation of the adjacent pixels and the reconstruction accuracy is slightly lower than that of superpixel method. No matter superpixel method or single-pixel method, the spatial shifting noise cannot be eliminated completely by filtering system due to the distribution characteristics of the envelope function. Hence, the weight factor114 and band-limiting function115 were proposed successively to suppress the spatial shifting noise.

where exp(jθ1) = exp{j[φ + cos−1(A/2)]} and exp(jθ2) = exp{j[φ − cos−1(A/2)]} are decomposed two POHs, Aexp(jφ) is the target complex amplitude, M1 and M2 are complementary 2D binary gratings (checkerboard patterns), and exp(iφ') is the DPH.

Another kind of encoding method for CAM is hologram bleaching method116,117,118. In this method, the amplitude information is encoded as a part of the phase information after the target complex amplitude interferes with an off-axis reference plane wave as shown in Fig. 7b. According to the property of Bessel function, the −1 order of the expansion is the desired complex amplitude as shown in Eq. (9), which can be picked up by a band-pass filter in reconstruction. Working along this direction, the band-limited zone plates and intensity modulation coefficient were introduced to modulate the bandwidth and intensity of the target complex amplitude119.

where H is the encoded hologram, H-1 is the −1 order of the expansion, Jm(*) is the m order of the Bessel function of the first kind. Aexp(jφ) is the target complex amplitude, exp(-jφr) is the off-axis reference plane wave.

In addition to the above two encoding methods, double-constraint iterative method was also introduced to modulate amplitude and phase simultaneously. Compared with Fidoc algorithm, the amplitude and phase in the signal domain are both constrained and replaced by the desired values120,121 as shown in Eq. (10). In this way, the optimized POHs can reconstruct the desired complex amplitude distribution approximatively. The optical system of double-constraint iterative method is simple. However, iterative calculation is time-consuming and can only generate an approximate result rather than an accurate one.

where M is a 2D matrix, the value in the signal region is 1 and the value in the freedom region is 0. |At | is the desired amplitude distribution, φt is the desired phase distribution, which is usually chosen as uniform phase or quadratic phase. α, β and γ are set for enhancing the intensity and contrast in the signal domain.

Other methods

In addition to phase optimization method and CAM method, there are also some other methods for speckle noise suppression. As mentioned above, the speckle noise exists due to the unwanted interferences between the adjacent object points caused by RAP in reconstruction process. Because of the independence and randomness of the RAP, the unwanted interferences are independent and random. Inspired by this idea, researchers proposed time-average method122,123,124,125,126 to suppress the speckle noise by summating multiple images generated by different RAP. Although this method is successful in reducing the speckle noise, it requires a SLM with high frame rate and is difficult to realize dynamic holographic display.

Another solution for speckle noise suppression is to avoid the interferences between the adjacent object points. Based on this idea, pixel separation method127,128,129 was proposed, where the CGHs of the sparse images are generated at different time and combined to form the complete image. However, the combination of multiple sparse images is also time-consuming and not suitable for dynamic holographic display. Afterwards, a sampled POH method130, which down-samples the desired image with a uniform grid-cross lattice prior to the generation of the POH, was also presented to preserve good visual quality on the reconstructed image. Working along this direction, the edge dependent down-sampled lattice131, the complementary down-sampled lattice132 and an optimal sampled phase-only hologram method133 were presented sequentially to improve the reconstruction quality. Although the above methods can suppress the speckle noise effectively, the down-sampling causes the loss of information.

Moreover, partially coherent light (PCL) illumination is also a feasible way to suppress the speckle noise by reducing the coherence of the light source134,135. In the last decade, many scholars have studied the influence of PCL illumination on holographic reconstruction136,137,138 and designed various CGH algorithms139,140. However, PCL illumination will result to the problem of image blurring, which is fatal for display. Hence, coherent light is still the main illumination source for holographic display due to the high brightness and contrast.

Color display

Since holography is based on interference and diffraction theory, red (R), green (G), blue (B) holograms of the color object need to be generated and reconstructed separately. Currently, the most widely used method to realize color holographic display is spatial-multiplexing method as shown in Fig. 8a, where three SLMs are required to load RGB hologram, respectively141,142,143. In reconstruction, RGB lasers illuminate on the corresponding SLMs and the color object is formed by combining RGB objects using BSs. The system based on spatial-multiplexing method fully utilizes the SBP of the SLM and has high optical efficiency. However, the display system is too bulky in size and too high in cost because the optical elements used in spatial-multiplexing method trebles that of monochromatic holographic display. Moreover, the system has the problem of precise alignment for RGB reconstructed objects. To reduce the number of SLMs required in color holographic display, time-multiplexing method was proposed144,145,146 as shown in Fig. 8b. In time-multiplexing method, RGB lasers illuminate on a single SLM time-sequentially and the corresponding holograms are loaded on the SLM synchronously. When the frequency of switching RGB lasers and the corresponding holograms is high enough, the color objects can be observed by the persistence effect of human eyes. This system requires a SLM with very high frame rate. Besides, it also needs the accurate synchronization of the RGB laser illumination and the corresponding hologram loading. In addition, an improved spatial-multiplexing method147,148 was proposed to reduce the system size, where one SLM is divided into three regions for RGB holograms and illuminated by spatially separated RGB lasers. However, it is obvious that the decrease of effective resolution for RGB holograms deteriorates the reconstruction quality.

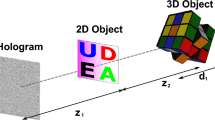

In the last decade, various CMC methods have been developed as an alternative to spatial-multiplexing method and time-multiplexing method to realize color holographic display. In these methods, RGB holograms are synthesized into a color-multiplexing hologram (CMH) by multiplexing different physical parameters. In 2008, depth-division multiplexing (DDM) method149,150 was developed to reconstruct color 2D images as shown in Fig. 9a, b. In DDM method, the distance from the CMH to RGB images is predetermined and an iterative multi-plane optimization algorithm is applied to improve the final reconstruction quality. When the CMH is illuminated by RGB lasers simultaneously, the color image can be reconstructed at the predetermined position. DDM achieves color holographic display by a single SLM successfully. However, it can only reconstruct color 2D images rather than 3D objects and is difficult to realize dynamic display because of the iterative computation. Afterwards, space-division multiplexing (SDM) method151 was presented to reconstruct color 3D objects as shown in Fig. 9c, d. SDM divides the color 3D object into transversely distributed RGB components and utilizes Fresnel diffraction or Shifted-Fresnel diffraction to calculate RGB holograms, respectively. In reconstruction, the color 3D object can be obtained by illuminating RGB lasers to the CMH at the predetermined angle simultaneously. Due to the transverse distribution of RGB components, the unwanted images are separated from the desired object in space domain. It is noted that RGB holograms are optimized individually by GS algorithm for improvement of the reconstruction quality in SDM method, which indicates that it is also not suitable for dynamic holographic display.

a Recording and b reconstruction processes of DDM; c Recording and d reconstruction processes of SDM, where z is the diffraction distance, d is the distance between each transverse component and θ is the incident angle of the reconstruction light. Reprinted with permission from ref. 151. ©The Optical Society.

In 2014, another multiplexing method152 was also proposed to achieve color holographic display by using off-axis CMHs as shown in Fig. 10a. In this method, RGB diffraction patterns interfere with the corresponding tilted plane reference lights along x axis to generate RGB off-axis holograms. Then RGB off-axis holograms are synthesized and encoded as a phase-only CMH based on analytic formula after interfering with titled plane reference lights along y axis. In reconstruction, RGB lasers are separated to accommodate the illumination angle of off-axis RGB holograms and the desired images can be picked up by a band-pass filter in the frequency plane. This method can be easily applied for color dynamic holographic display because there is no iterative calculation. However, the system is complex and unstable due to the arrangement of the illumination source. Subsequently, a specially designed optical element, which consists of two prisms, a transparent glass and RGB color filters, was used to filter and refract the white light source into RGB colors with different directions153. The designed optical element greatly simplifies the complexity of the system based on off-axis CMH.

a The method in ref. 152, where c = r, g, b corresponds to RGB channel, Ac is the target image, Uc is the diffraction pattern of Ac, Rc = exp(jkcxsinθc) is the tilted plane reference light along x axis, Hc = Uc × Rc is the off-axis hologram, R'c = exp(jkcysinθ) is the titled plane reference light along y axis, and H is the complex CMH; b The method in ref. 157, where A'c is the target image after bandwidth limitation, Uc is the diffraction pattern of A'c, Rc = exp(jkcysinθ) is the titled plane reference light along y axis, and H is the complex rainbow hologram.

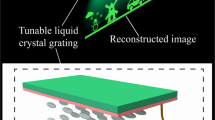

In recent years, several frequency-division methods (FDMs) were successively developed to realize color holographic display154,155,156. FDM divides the frequency spectrum of RGB components at distinct location in the Fourier plane of a CMH. In this way, the color object can be reconstructed successfully with the assistant of a filter in the frequency plane when a multi-wavelength light source is used as the illumination source. FDM simplifies the display system because of the on-line illumination. Another holographic display technology with white light illumination is rainbow holography. Recently, a rainbow hologram alike method157,158 based on frequency domain multiplexing has also been proposed for color holographic display as shown in Fig. 10b. In implementation, RGB diffraction patterns are calculated after the bandwidth of RGB components is limited by a slit filter in the vertical direction. Then RGB diffraction patterns are synthesized as a rainbow hologram after interfering with the plane reference lights with an off-axis angle θ along y axis. In reconstruction, the rainbow hologram is illuminated by white light with an off-axis angle -θ along y axis and a slit filter is used to extract the desired RGB frequency components to achieve color display. It is noted that the color of the reconstructed object can be changed by changing the position of the slit filter as well as the width of the slit filter Fig. 10.

Unwanted terms and modulation accuracy

Unwanted terms

In holographic display, there is always a zero-order beam in the center of the reconstructed image due to the pixelated structure of the SLM159. Specifically, the incident light is are modulated by not only the active areas but also the inactive areas between the adjacent pixels160 and the zero-order beam is determined by the fill factor, the amplitude and the phase of the inactive areas161. In order to eliminate the zero-order beam, two kinds of methods have been proposed. One is to translate the reconstructed image from the zero-order beam by superimposing a linear phase and a divergent spherical phase161, or an additional phase checkerboard function162 to the CGHs, the other is to produce a cancellation beam which destructively interferes with the zero-order beam163,164,165. Apart from the zero-order beam, the conjugate image always appears in the AOH due to the coding process from the complex hologram to the nonnegative AOH. Off-axis recording could separate the conjugate image from the desired image in the spatial frequency domain by adding a carrier frequency on the CGHs. In addition, single-sideband method can also eliminate the conjugate image by filtering out half of the spatial frequency in both recording and reconstruction process166,167. Because of the pixelated structure of the SLM, the diffraction pattern is always replicated laterally, which is known as the high-order diffraction terms. In order to eliminate the high-order diffraction terms, a 4 f system with a filter in the frequency plane can be used in optical experiments168. Moreover, the diffraction pattern is modulated by an envelope function caused by the finite pixel size. In order to improve the visual effect, digital pre-filtering method was presented to compensate the distortion169.

Modulation accuracy

Although the SLM can modulate the amplitude or phase of the incident light, the accurate wavefront manipulation is still hindered by some errors. In other words, in order to achieve high-precision holographic display, the modulation errors caused by the SLM cannot be ignored. Generally, the modulation accuracy of the SLM is mainly influenced by two aspects: the static error and the dynamic error. The static error is introduced by the flatness of the cover glass, while the dynamic error is caused by the nonuniform and nonlinear gamma curve. In practical applications, a compensation matrix for the panel of the SLM or a remapping LUT for the gamma curve is built after the modulation capability of the SLM is measured and then the calibration information is added to the CGH to achieve precise encoding. Until now, several approaches have been proposed to compensate the static error. Xun et al. utilized the four-step phase-shift interference algorithm to measure and compensate the nonuniform flatness of the backplane curvature170. Otón et al. developed a multipoint calibration method to improve the compensation performance and calibrated the error by Michelson interference and Ronchi grating method171. To improve the modulation accuracy, researchers have also made several attempts to overcome the influence from the dynamic error. Reichelt introduced a spatially resolved phase response method to measure and compensate the spatial nonuniformity172. Yang et al. employed digital holographic microscopy to characterize the nonlinear dynamic phase response of the SLM and utilized gamma curve calibration to optimize the distortion173. Zhao et al. divided the entire panel of the SLM into several local regions and proposed a multi-region calibration method to minimize the nonlinear response and static distortion of each local region174. Apart from manual calibration, some automatic calibration software has also been developed for the fast measurement and the automatic compensation. In short, the calibration method has gradually become more precise and faster.

Conclusion

In this paper, we review numerous CGH algorithms with the aim of color dynamic holographic 3D display. For computation speed, we report various fast calculation algorithms based on point-based method, polygon-based method, and layer-based method. Although many effective algorithms have been developed, they are still isolated and not fast enough. The combination of different primitive methods into an optimized algorithm is a good solution to accelerate CGH computation. In addition, with the development of computer, the combination of fast calculation algorithms and high-performance computing equipment is also an effective means to speed up the calculation175,176. Furthermore, deep learning has also been used to CGH generation in recent years177,178,179,180, which shows great potential for both high-speed and high-quality reconstruction. For image quality enhancement and speckle suppression, we report phase optimization method, CAM method and some other methods. Traditional iterative phase optimization method is time-consuming, whereas non-iterative phase optimization method will cause other problems, such as ringing artifacts or a periodic patterning effect. In recent years, the appearance of ORAP, which combines iterative and non-iterative phase optimization methods, opens a door to realize high-quality dynamic holographic display by optimizing phase distribution. CAM is realized with the reduction of effective resolution because the amount of information of a complex hologram doubles that of an AOH or a POH. In other words, the essence of CAM, no matter how to realize, is sacrificing the SBP to achieve the expression of the complex amplitude information. In order to remit the limitation of finite SBP, some approaches have been proposed181,182,183,184. At the same time, the development of new optical materials, such as metamaterials, lights up a passage for complex amplitude modulators185,186. Temporal method, pixel separation method, and PCL illumination can suppress the speckle noise efficiently, whereas, there is lack of further optimization direction. For color display, we review various CMC methods which aim to realize color holographic display. However, limited by the SBP of existing SLMs, the display effect cannot meet the requirements of humans and needs to be improved further. Despite the CMC method is facing the restriction of finite SBP, it is meaningful to introduce it into color holographic display because it avoids not only the complex structure but also the high cost. With the development of SLMs towards higher resolution, smaller pixel size and more accurate modulation, it is believed that color dynamic holographic 3D display will make a breakthrough in the future187. In summary, holographic display is regarded as one of the most promising 3D display technologies because it can reconstruct all the depth cues of a 3D scene. Meanwhile, there is still a great potential for the further development of color dynamic holographic 3D display. With the development of not only the CGH algorithms but also the devices and systems188,189, it is expected that holographic display will come into the market and daily life in a near future.

References

Tsang, P. W. M. & Poon, T. C. Review on the state-of-the-art technologies for acquisition and display of digital holograms. IEEE Trans. Ind. Inform. 12, 886–901 (2016).

Gabor, D. A new microscopic principle. Nature 161, 777–778 (1948).

Leith, E. N. & Upatnieks, J. Reconstructed wavefronts and communication theory. J. Optical Soc. Am. 52, 1123–1130 (1962).

Leith, E. N. & Upatnieks, J. Wavefront reconstruction with continuous-tone objects. J. Optical Soc. Am. 53, 1377–1381 (1963).

Leith, E. N. & Upatnieks, J. Wavefront reconstruction with diffused illumination and three-dimensional objects. J. Optical Soc. Am. 54, 1295–1301 (1964).

Denisyuk, Y. N. Photographic reconstruction of the optical properties of an object in its own scattered radiation field. Sov. Phys. Dokl. 7, 543 (1962).

Denisyuk, Y. N. The reflection of optical properties of an object in the wave field of its scattered radiation. Opt. I Spektroskopiya 15, 522–532 (1963).

Tsuruta, T. Holography using an extended spatially incoherent source. J. Optical Soc. Am. 60, 44–48 (1970).

Agour, M. & Kreis, T. Experimental Investigation of Holographic 3D-TV Approach. 2009 3DTV Conference: The True Vision—Capture, Transmission and Display of 3D Video. Potsdam (EEE, 2009).

Paturzo, M. et al. Synthesis and display of dynamic holographic 3D scenes with real-world objects. Opt. Express 18, 8806–8815 (2010).

Kozacki, T. et al. Extended viewing angle holographic display system with tilted SLMs in a circular configuration. Appl. Opt. 51, 1771–1780 (2012).

Zhang, H. et al. Fully computed holographic stereogram based algorithm for computer-generated holograms with accurate depth cues. Opt. Express 23, 3901–3913 (2015).

Zhang, H., Cao, L. C. & Jin, G. F. Three-dimensional computer-generated hologram with Fourier domain segmentation. Opt. Express 27, 11689–11697 (2019).

Chu, J. C. H. et al. Application of holographic display in radiotherapy treatment planning II: a multi-institutional study. J. Appl. Clin. Med. Phys. 10, 115–124 (2009).

Abdelazeem, R. M. et al. Three-dimensional visualization of brain tumor progression based accurate segmentation via comparative holographic projection. PLoS One 15, e0236835 (2020).

Bianco, V. et al. Quasi noise-free digital holography. Light.: Sci. Appl. 5, e16142 (2016).

Bianco, V. et al. Strategies for reducing speckle noise in digital holography. Light.: Sci. Appl. 7, 48 (2018).

Kozma, A. & Kelly, D. L. Spatial filtering for detection of signals submerged in noise. Appl. Opt. 4, 387–392 (1965).

Brown, B. R. & Lohmann, A. W. Complex spatial filtering with binary masks. Appl. Opt. 5, 967–969 (1966).

Lohmann, A. W. & Paris, D. P. Binary fraunhofer holograms, generated by computer. Appl. Opt. 6, 1739–1748 (1967).

Lesem, L. B., Hirsch, P. M. & Jordan, J. A. The kinoform: a new wavefront reconstruction device. IBM J. Res. Dev. 13, 150–155 (1969).

Lucente, M. E. Interactive computation of holograms using a look-up table. J. Electron. Imaging 2, 28–34 (1993).

Yamaguchi, M. et al. High-quality recording of a full-parallax holographic stereogram with a digital diffuser. Opt. Lett. 19, 135–137 (1994).

Matsushima, K., Schimmel, H. & Wyrowski, F. Fast calculation method for optical diffraction on tilted planes by use of the angular spectrum of plane waves. J. Optical Soc. Am. A 20, 1755–1762 (2003).

Nishi, S. et al. Fast calculation of computer-generated Fresnel hologram utilizing distributed parallel processing and array operation. Optical Rev. 12, 287–292 (2005).

Ahrenberg, L. et al. Computer generated holography using parallel commodity graphics hardware. Opt. Express 14, 7636–7641 (2006).

Pi, D. P. et al. Accelerating calculation method for curved computer-generated hologram using look-up table in holographic display. Opt. Commun. 485, 126750 (2021).

Pi, D. P., Liu, J. & Yu, S. Two-step acceleration calculation method to generate curved holograms using the intermediate plane in a three-dimensional holographic display. Appl. Opt. 60, 7640–7647 (2021).

Hilaire, P. S., Benton, S. A. & Lucente, M. Synthetic aperture holography: a novel approach to three-dimensional displays. J. Optical Soc. Am. A 9, 1969–1977 (1992).

Kreis, T. M., Aswendt, P. & Hoefling, R. Hologram reconstruction using a digital micromirror device. Optical Eng. 40, 926–933 (2001).

Haist, T., Schönleber, M. & Tiziani, H. J. Computer-generated holograms from 3D-objects written on twisted-nematic liquid crystal displays. Opt. Commun. 140, 299–308 (1997).

Blanche, P. A. Holography, and the future of 3D display. Light.: Adv. Manuf. 2, 28 (2021).

Tsang, P. W. M., Poon, T. C. & Wu, Y. M. Review of fast methods for point-based computer-generated holography [Invited]. Photonics Res. 6, 837–846 (2018).

Zhang, Y. P. et al. Polygon-based computer-generated holography: a review of fundamentals and recent progress [Invited]. Appl. Opt. 61, B363–B374 (2022).

Kim, S. C. & Kim, E. S. Effective generation of digital holograms of three-dimensional objects using a novel look-up table method. Appl. Opt. 47, D55–D62 (2008).

Kim, S. C. & Kim, E. S. Fast computation of hologram patterns of a 3D object using run-length encoding and novel look-up table methods. Appl. Opt. 48, 1030–1041 (2009).

Zhang, Z. et al. Tunable nonuniform sampling method for fast calculation and intensity modulation in 3D dynamic holographic display. Opt. Lett. 38, 2676–2679 (2013).

Pi, D. P. et al. Simple and effective calculation method for computer-generated hologram based on non-uniform sampling using look-up-table. Opt. Express 27, 37337–37348 (2019).

Yang, Z. et al. A new method for producing computer generated holograms. J. Opt. 14, 095702 (2012).

Nishitsuji, T. et al. Fast calculation of computer-generated hologram using the circular symmetry of zone plates. Opt. Express 20, 27496–27502 (2012).

Jiao, S. M., Zhuang, Z. Y. & Zou, W. B. Fast computer generated hologram calculation with a mini look-up table incorporated with radial symmetric interpolation. Opt. Express 25, 112–123 (2017).

Pan, Y. C. et al. Fast CGH computation using S-LUT on GPU. Opt. Express 17, 18543–18555 (2009).

Jia, J. et al. Reducing the memory usage for effective computer-generated hologram calculation using compressed look-up table in full-color holographic display. Appl. Opt. 52, 1404–1412 (2013).

Gao, C. et al. Accurate compressed look up table method for CGH in 3D holographic display. Opt. Express 23, 33194–33204 (2015).

Pi, D. P. et al. Reducing the memory usage of computer-generated hologram calculation using accurate high-compressed look-up-table method in color 3D holographic display. Opt. Express 27, 28410–28422 (2019).

Shimobaba, T., Masuda, N. & Ito, T. Simple and fast calculation algorithm for computer-generated hologram with wavefront recording plane. Opt. Lett. 34, 3133–3135 (2009).

Yoshikawa, H., Yamaguchi, T. & Kitayama, R. Real-time generation of full color image hologram with compact distance look-up table. Adv. Imaging https://doi.org/10.1364/DH.2009.DWC4 (2009).

Tsang, P. W. M. & Poon, T. C. Review on theory and applications of wavefront recording plane framework in generation and processing of digital holograms. Chin. Opt. Lett. 11, 010902 (2013).

Shimobaba, T. et al. Rapid calculation algorithm of Fresnel computer-generated-hologram using look-up table and wavefront-recording plane methods for three-dimensional display. Opt. Express 18, 19504–19509 (2010).

Pi, D. P. et al. Acceleration of computer-generated hologram using wavefront-recording plane and look-up table in three-dimensional holographic display. Opt. Express 28, 9833–9841 (2020).

Phan, A. H. et al. Generation speed and reconstructed image quality enhancement of a long-depth object using double wavefront recording planes and a GPU. Appl. Opt. 53, 4817–4824 (2014).

Hasegawa, N. et al. Acceleration of hologram generation by optimizing the arrangement of wavefront recording planes. Appl. Opt. 56, A97–A103 (2017).

Arai, D. et al. Acceleration of computer-generated holograms using tilted wavefront recording plane method. Opt. Express 23, 1740–1747 (2015).

Shimobaba, T. & Ito, T. Fast generation of computer-generated holograms using wavelet shrinkage. Opt. Express 25, 77–87 (2017).

Arai, D. et al. An accelerated hologram calculation using the wavefront recording plane method and wavelet transform. Opt. Commun. 393, 107–112 (2017).

Pan, Y. J. et al. Analytical brightness compensation algorithm for traditional polygon-based method in computer-generated holography. Appl. Opt. 52, 4391–4399 (2013).

Nishi, H., Matsushima, K. & Nakahara, S. Rendering of specular surfaces in polygon-based computer-generated holograms. Appl. Opt. 50, H245–H252 (2011).

Matsushima, K. Formulation of the rotational transformation of wave fields and their application to digital holography. Appl. Opt. 47, D110–D116 (2008).

Matsushima, K. Computer-generated holograms for three-dimensional surface objects with shade and texture. Appl. Opt. 44, 4607–4614 (2005).

Kim, H., Hahn, J. & Lee, B. Mathematical modeling of triangle-mesh-modeled three-dimensional surface objects for digital holography. Appl. Opt. 47, D117–D127 (2008).

Ahrenberg, L. et al. Computer generated holograms from three dimensional meshes using an analytic light transport model. Appl. Opt. 47, 1567–1574 (2008).

Sakata, H. & Sakamoto, Y. Fast computation method for a Fresnel hologram using three-dimensional affine transformations in real space. Appl. Opt. 48, H212–H221 (2009).

Pan, Y. J. et al. Fast polygon-based method for calculating computer-generated holograms in three-dimensional display. Appl. Opt. 52, A290–A299 (2013).

Pan, Y. J. et al. Improved full analytical polygon-based method using Fourier analysis of the three-dimensional affine transformation. Appl. Opt. 53, 1354–1362 (2014).

Zhang, Y. P. et al. Fast generation of full analytical polygon-based computer-generated holograms. Opt. Express 26, 19206–19224 (2018).

Wang, F. et al. Acceleration of polygon-based computer-generated holograms using look-up tables and reduction of the table size via principal component analysis. Opt. Express 29, 35442–35455 (2021).

Bayraktar, M. & Özcan, M. Method to calculate the far field of three-dimensional objects for computer-generated holography. Appl. Opt. 49, 4647–4654 (2010).

Zhao, Y. et al. Accurate calculation of computer-generated holograms using angular-spectrum layer-oriented method. Opt. Express 23, 25440–25449 (2015).

Chang, C. L. et al. Simple calculation of a computer-generated hologram for lensless holographic 3D projection using a nonuniform sampled wavefront recording plane. Appl. Opt. 55, 7988–7996 (2016).

Jia, J., Si, J. & Chu, D. P. Fast two-step layer-based method for computer generated hologram using sub-sparse 2D fast Fourier transform. Opt. Express 26, 17487–17497 (2018).

Kim, H. G., Jeong, H. & Ro, Y. M. Acceleration of the calculation speed of computer-generated holograms using the sparsity of the holographic fringe pattern for a 3D object. Opt. Express 24, 25317–25328 (2016).

Kim, H. G. & Ro, Y. M. Ultrafast layer based computer-generated hologram calculation with sparse template holographic fringe pattern for 3-D object. Opt. Express 25, 30418–30427 (2017).

Gerchberg, R. W. & Saxton, W. O. A practical algorithm for the determination of phase from image and diffraction plane pictures. Optik 35, 237–246 (1972).

Fienup, J. R. Iterative method applied to image reconstruction and to computer-generated holograms. Optical Eng. 19, 297–305 (1980).

Kuzmenko, A. V. Weighting iterative Fourier transform algorithm of the kinoform synthesis. Opt. Lett. 33, 1147–1149 (2008).

Akahori, H. Spectrum leveling by an iterative algorithm with a dummy area for synthesizing the kinoform. Appl. Opt. 25, 802–811 (1986).

Georgiou, A. et al. Aspects of hologram calculation for video frames. J. Opt. A: Pure Appl. Opt. 10, 035302 (2008).

Chen, L. Z. et al. Weighted constraint iterative algorithm for phase hologram generation. Appl. Sci. 10, 3652 (2020).

Ma, H. et al. Influence of limited random-phase of objects on the image quality of 3D holographic display. Opt. Commun. 385, 153–159 (2017).

Pi, D. P. et al. Design methods to generate a computer hologram for improving image quality. Appl. Opt. 57, 2720–2726 (2018).

Zhao, T. et al. Image quality enhancement via gradient-limited random phase addition in holographic display. Opt. Commun. 442, 84–89 (2019).

He, Z. H. et al. Frequency-based optimized random phase for computer-generated holographic display. Appl. Opt. 60, A145–A154 (2021).

Tsang, P. W. M., Chow, Y. T. & Poon, T. C. Generation of patterned-phase-only holograms (PPOHs). Opt. Express 25, 9088–9093 (2017).

Shimobaba, T. & Ito, T. Random phase-free computer-generated hologram. Opt. Express 23, 9549–9554 (2015).

Shimobaba, T. et al. Random phase-free kinoform for large objects. Opt. Express 23, 17269–17274 (2015).

Chen, C. et al. Fast method for ringing artifacts reduction in random phase-free kinoforms. Appl. Opt. 58, A13–A20 (2019).

Nagahama, Y. et al. Image quality improvement of random phase-free holograms by addressing the cause of ringing artifacts. Appl. Opt. 58, 2146–2151 (2019).

Zea, A. V., Ramirez, J. F. B. & Torroba, R. Optimized random phase only holograms. Opt. Lett. 43, 731–734 (2018).

Velez-Zea, A. et al. Generation and experimental reconstruction of optimized Fresnel random phase-only holograms. J. Opt. 23, 055602 (2021).

Velez-Zea, A. & Torroba, R. Optimized random phase tiles for non-iterative hologram generation. Appl. Opt. 58, 9013–9019 (2019).

Chen, L. Z. et al. Non-iterative phase hologram generation with optimized phase modulation. Opt. Express 28, 11380–11392 (2020).

Bartelt, H. Computer-generated holographic component with optimum light efficiency. Appl. Opt. 23, 1499–1502 (1984).

Gregory, D. A., Kirsch, J. C. & Tam, E. C. Full complex modulation using liquid-crystal televisions. Appl. Opt. 31, 163–165 (1992).

Amako, J., Miura, H. & Sonehara, T. Wave-front control using liquid-crystal devices. Appl. Opt. 32, 4323–4329 (1993).

Neto, L. G., Roberge, D. & Sheng, Y. L. Full-range, continuous, complex modulation by the use of two coupled-mode liquid-crystal televisions. Appl. Opt. 35, 4567–4576 (1996).

Hsieh, M. L., Chen, M. L. & Cheng, C. J. Improvement of the complex modulated characteristic of cascaded liquid crystal spatial light modulators by using a novel amplitude compensated technique. Optical Eng. 46, 070501 (2007).

Zhu, N. et al. Holographic projection based on interference and analytical algorithm. Opt. Commun. 283, 4969–4971 (2010).

Gao, Q. K. et al. Monocular 3D see-through head-mounted display via complex amplitude modulation. Opt. Express 24, 17372–17383 (2016).

Siemion, A. et al. Diffuserless holographic projection working on twin spatial light modulators. Opt. Lett. 37, 5064–5066 (2012).

Zhu, L. & Wang, J. Arbitrary manipulation of spatial amplitude and phase using phase-only spatial light modulators. Sci. Rep. 4, 7441 (2014).

Liu, J. P. et al. Complex Fresnel hologram display using a single SLM. Appl. Opt. 50, H128–H135 (2011).

Song, H. et al. Optimal synthesis of double-phase computer generated holograms using a phase-only spatial light modulator with grating filter. Opt. Express 20, 29844–29853 (2012).

Gao, Q. K. et al. Compact see-through 3D head-mounted display based on wavefront modulation with holographic grating filter. Opt. Express 25, 8412–8424 (2017).

Choi, S. et al. Modulation efficiency of double-phase hologram complex light modulation macro-pixels. Opt. Express 22, 21460–21470 (2014).

Reichelt, S. et al. Full-range, complex spatial light modulator for real-time holography. Opt. Lett. 37, 1955–1957 (2012).

Jesacher, A. et al. Near-perfect hologram reconstruction with a spatial light modulator. Opt. Express 16, 2597–2603 (2008).

Goorden, S. A., Bertolotti, J. & Mosk, A. P. Superpixel-based spatial amplitude and phase modulation using a digital micromirror device. Opt. Express 22, 17999–18009 (2014).

Bagnoud, V. & Zuegel, J. D. Independent phase and amplitude control of a laser beam by use of a single-phase-only spatial light modulator. Opt. Lett. 29, 295–297 (2004).

Arrizón, V. Complex modulation with a twisted-nematic liquid-crystal spatial light modulator: double-pixel approach. Opt. Lett. 28, 1359–1361 (2003).

Arrizón, V. & Sánchez-De-La-Llave, D. Double-phase holograms implemented with phase-only spatial light modulators: performance evaluation and improvement. Appl. Opt. 41, 3436–3447 (2002).

Mendoza-Yero, O., Mínguez-Vega, G. & Lancis, J. Encoding complex fields by using a phase-only optical element. Opt. Lett. 39, 1740–1743 (2014).

Qi, Y., Chang, C. L. & Xia, J. Speckleless holographic display by complex modulation based on double-phase method. Opt. Express 24, 30368–30378 (2016).

Chang, C. et al. Speckle reduced lensless holographic projection from phase-only computer-generated hologram. Opt. Express 25, 6568–6580 (2017).

Kim, Y. K., Lee, J. S. & Won, Y. H. Low-noise high-efficiency double-phase hologram by multiplying a weight factor. Opt. Lett. 44, 3649–3652 (2019).

Sui, X. M. et al. Band-limited double-phase method for enhancing image sharpness in complex modulated computer-generated holograms. Opt. Express 29, 2597–2612 (2021).

Li, X. et al. 3D dynamic holographic display by modulating complex amplitude experimentally. Opt. Express 21, 20577–20587 (2013).

Li, X. et al. Color dynamic holographic display with wide viewing angle by improved complex amplitude modulation. Opt. Express 26, 2349–2358 (2018).

Pi, D. P., Liu, J. & Yu, S. Speckleless color dynamic three-dimensional holographic display based on complex amplitude modulation. Appl. Opt. 60, 7844–7848 (2021).

Chen, Y. et al. Holographic near-eye display based on complex amplitude modulation with band-limited zone plates. Opt. Express 29, 22749–22760 (2021).

Chang, C. L. et al. Speckle-suppressed phase-only holographic three-dimensional display based on double-constraint Gerchberg-Saxton algorithm. Appl. Opt. 54, 6994–7001 (2015).

Pang, H. et al. High-accuracy method for holographic image projection with suppressed speckle noise. Opt. Express 24, 22766–22776 (2016).

Liu, S. J., Wang, D. & Wang, Q. H. Speckle noise suppression method in holographic display using time multiplexing technique. Opt. Commun. 436, 253–257 (2019).

Takaki, Y. & Yokouchi, M. Speckle-free and grayscale hologram reconstruction using time-multiplexing technique. Opt. Express 19, 7567–7579 (2011).

Hsu, W. F. & Yeh, C. F. Speckle suppression in holographic projection displays using temporal integration of speckle images from diffractive optical elements. Appl. Opt. 50, H50–H55 (2011).

Amako, J., Miura, H. & Sonehara, T. Speckle-noise reduction on kinoform reconstruction using a phase-only spatial light modulator. Appl. Opt. 34, 3165–3171 (1995).

Liu, J. P. et al. Performance estimation of intensity accumulation display by computer-generated holograms. Appl. Sci. 11, 7729 (2021).

Makowski, M. Minimized speckle noise in lens-less holographic projection by pixel separation. Opt. Express 21, 29205–29216 (2013).

Mori, Y., Fukuoka, T. & Nomura, T. Speckle reduction in holographic projection by random pixel separation with time multiplexing. Appl. Opt. 53, 8182–8188 (2014).

Ducin, I. et al. Holographic projection of images with step-less zoom and noise suppression by pixel separation. Opt. Commun. 340, 131–135 (2015).

Tsang, P. W. M., Chow, Y. T. & Poon, T. C. Generation of phase-only Fresnel hologram based on down-sampling. Opt. Express 22, 25208–25214 (2014).

Tsang, P. W. M., Chow, Y. T. & Poon, T. C. Enhancement on the generation of sampled phase-only holograms. Chin. Opt. Lett. 13, 060901 (2015).

Tsang, P. W. M., Chow, Y. T. & Poon, T. C. Generation of complementary sampled phase-only holograms. Opt. Express 24, 23390–23395 (2016).

Tsang, P. W. M. et al. Optimal sampled phase-only hologram (OSPOH). Opt. Express 29, 25488–25498 (2021).

Liu, J. P. et al. Nonlinearity compensation and complex-to-phase conversion of complex incoherent digital holograms for optical reconstruction. Opt. Express 24, 14582–14588 (2016).

Liu, J. P. et al. Incoherent digital holography: a review. Appl. Sci. 8, 143 (2018).

Pang, X. N. et al. Image quality improvement of polygon computer generated holography. Opt. Express 23, 19066–19073 (2015).

Deng, Y. B. & Chu, D. P. Coherence properties of different light sources and their effect on the image sharpness and speckle of holographic displays. Sci. Rep. 7, 5893 (2017).

Zhao, Z. J., Duan, J. Y. & Liu, J. Speckle reduction in holographic display with partially spatial coherent illumination. Opt. Commun. 507, 127604 (2022).

Duan, J. Y. et al. Formulas of partially spatial coherent light and design algorithm for computer-generated holograms. Opt. Express 26, 22284–22295 (2018).

Duan, X. H. et al. Novel computer-generated hologram encoding method based on partially temporal coherent light. Opt. Express 27, 6851–6862 (2019).

Yaraş, F., Kang, H. & Onural, L. Real-time phase-only color holographic video display system using LED illumination. Appl. Opt. 48, H48–H53 (2009).

Shiraki, A. et al. Simplified electroholographic color reconstruction system using graphics processing unit and liquid crystal display projector. Opt. Express 17, 16038–16045 (2009).

Nakayama, H. et al. Real-time color electroholography using multiple graphics processing units and multiple high-definition liquid-crystal display panels. Appl. Opt. 49, 5993–5996 (2010).

Shimobaba, T. & Ito, T. A color holographic reconstruction system by time division multiplexing with reference lights of laser. Optical Rev. 10, 339–341 (2003).

Shimobaba, T. et al. An electroholographic colour reconstruction by time division switching of reference lights. J. Opt. A: Pure Appl. Opt. 9, 757–760 (2007).

Oikawa, M. et al. Time-division color electroholography using one-chip RGB LED and synchronizing controller. Opt. Express 19, 12008–12013 (2011).

Makowski, M. et al. Color image projection based on Fourier holograms. Opt. Lett. 35, 1227–1229 (2010).

Makowski, M. et al. Simple holographic projection in color. Opt. Express 20, 25130–25136 (2012).

Makowski, M., Sypek, M. & Kolodziejczyk, A. Colorful reconstructions from a thin multi-plane phase hologram. Opt. Express 16, 11618–11623 (2008).

Makowski, M. et al. Experimental evaluation of a full-color compact lensless holographic display. Opt. Express 17, 20840–20846 (2009).

Shimobaba, T. et al. Numerical study of color holographic projection using space-division method. Opt. Express 19, 10287–10292 (2011).

Xue, G. L. et al. Multiplexing encoding method for full-color dynamic 3D holographic display. Opt. Express 22, 18473–18482 (2014).

Lin, S. F. et al. Full-color holographic 3D display system using off-axis color-multiplexed-hologram on single SLM. Opt. Lasers Eng. 126, 105895 (2019).

Kozacki, T. & Chlipala, M. Color holographic display with white light LED source and single phase only SLM. Opt. Express 24, 2189–2199 (2016).

Lin, S. F. & Kim, E. S. Single SLM full-color holographic 3-D display based on sampling and selective frequency-filtering methods. Opt. Express 25, 11389–11404 (2017).

Lin, S. F., Cao, H. K. & Kim, E. S. Single SLM full-color holographic three-dimensional video display based on image and frequency-shift multiplexing. Opt. Express 27, 15926–15942 (2019).

Yang, X. et al. Full-color computer-generated holographic near-eye display based on white light illumination. Opt. Express 27, 38236–38249 (2019).

Yang, X. et al. Phase-only color rainbow holographic near-eye display. Opt. Lett. 46, 5445–5448 (2021).

Arrizón, V., Carreón, E. & Testorf, M. Implementation of Fourier array illuminators using pixelated SLM: Efficiency limitations. Opt. Commun. 160, 207–213 (1999).

Palima, D. & Daria, V. R. Effect of spurious diffraction orders in arbitrary multifoci patterns produced via phase-only holograms. Appl. Opt. 45, 6689–6693 (2006).

Zhang, H. et al. Elimination of a zero-order beam induced by a pixelated spatial light modulator for holographic projection. Appl. Opt. 48, 5834–5841 (2009).

Wong, D. W. K. & Chen, G. Redistribution of the zero order by the use of a phase checkerboard pattern in computer generated holograms. Appl. Opt. 47, 602–610 (2008).

Milewski, G., Engström, D. & Bengtsson, J. Diffractive optical elements designed for highly precise far-field generation in the presence of artifacts typical for pixelated spatial light modulators. Appl. Opt. 46, 95–105 (2007).

Palima, D. & Daria, V. R. Holographic projection of arbitrary light patterns with a suppressed zero-order beam. Appl. Opt. 46, 4197–4201 (2007).

Liang, J. Y. et al. Suppression of the zero-order diffracted beam from a pixelated spatial light modulator by phase compression. Appl. Opt. 51, 3294–3304 (2012).

Takaki, Y. & Tanemoto, Y. Band-limited zone plates for single-sideband holography. Appl. Opt. 48, H64–H70 (2009).

Wang, X. Y. et al. Generalized single-sideband three-dimensional computer-generated holography. Opt. Express 27, 2612–2620 (2019).

Agour, M. et al. Suppression of higher diffraction orders and intensity improvement of optically reconstructed holograms from a spatial light modulator. J. Opt. A: Pure Appl. Opt. 11, 105405 (2009).

Agour, M., Falldorf, C. & Von Kopylow, C. Digital pre-filtering approach to improve optically reconstructed wavefields in opto-electronic holography. J. Opt. 12, 055401 (2010).

Xun, X. D. & Cohn, R. W. Phase calibration of spatially nonuniform spatial light modulators. Appl. Opt. 43, 6400–6406 (2004).

Otón, J. et al. Multipoint phase calibration for improved compensation of inherent wavefront distortion in parallel aligned liquid crystal on silicon displays. Appl. Opt. 46, 5667–5679 (2007).

Reichelt, S. Spatially resolved phase-response calibration of liquid-crystal-based spatial light modulators. Appl. Opt. 52, 2610–2618 (2013).

Yang, L. et al. Nonlinear dynamic phase response calibration by digital holographic microscopy. Appl. Opt. 54, 7799–7806 (2015).

Zhao, T. et al. Multi-region phase calibration of liquid crystal SLM for holographic display. Appl. Opt. 56, 6168–6174 (2017).