Abstract

It is frequently assumed that learner characteristics (e.g., reading skill, self-perceptions, optimism) account for overestimations of text comprehension, which threaten learning success. However, previous findings are heterogeneous. To circumvent a key problem of previous research, we considered cognitive, metacognitive, motivational, and personality characteristics of learners (N = 255) simultaneously with regard to their impact on the judgment biases in prediction and postdiction judgments about factual and inference questions. The main results for the factual questions showed that male gender, lower reading skill, working memory capacity, and topic knowledge, yet higher self-perceptions of cognitive and metacognitive capacities yielded stronger overestimations for prediction judgments. For inference questions, a lower reading skill, higher self-perceptions of metacognitive capacities, and a higher self-efficacy were related to stronger overestimations for prediction and postdiction judgments. A higher openness was a risk factor for stronger overestimations when making predictions for the inference questions. The findings demonstrate that learner characteristics are a relevant source of judgment bias, which should be incorporated explicitly in theories of judgment accuracy. At the same time, fewer learner characteristics were actually relevant than previous research suggests. Moreover, which learner characteristics impact judgment bias also depends on task requirements, such as factual versus inference questions.

Learners are usually poor at accurately monitoring and judging their text comprehension (Maki & McGuire, 2002; Prinz et al., 2020a; Thiede et al., 2009). This poor accuracy, often reflected in overestimations but also in underestimations of comprehension, hampers learning (Dunlosky & Rawson, 2012). Therefore, knowledge of what causes poor metacomprehension accuracy is of major importance. In this context, characteristics of the learners (e.g., reading skill, self-beliefs, optimism) have repeatedly been the focus of attention, which seems intuitively appealing. However, previous findings are heterogeneous and not overly robust (cf. Lin & Zabrucky, 1998). Methodological issues probably contributed to this lack of clarity. For example, studies have mostly been restricted to the examination of one or two learner characteristics simultaneously. Hence, interdependencies between (psychologically similar) learner characteristics across studies have mainly been disregarded. To circumvent these shortcomings and to gain reliable insights, the present study included, based on a literature review, multiple potentially relevant learner characteristics and determined their impact on metacomprehension accuracy when analyzed simultaneously. As the accuracy measure, we investigated judgment bias (overestimation/underestimation) because individual differences are most likely to manifest in this measure (cf. Maki et al., 2005).

Metacomprehension Accuracy and Its Relevance for Learning

Successful learning from text is based on text comprehension and metacomprehension accuracy. Text comprehension is a complex process of constructing and mentally representing the meaning of a text. To this end, learners are required to extract the main idea units of the text, build connections (i.e., inferences) between text information within and across sentences and between text information and prior knowledge (van den Broek et al., 2002). Moreover, learners need to accurately monitor these cognitive processes and judge their level of comprehension, which is known as metacomprehension accuracy (Maki & McGuire, 2002). This metacognitive process is secondary to the cognitive processes. Thus, the less effectively and routinely learners carry out the cognitive processes during reading, the less cognitive capacity is available for metacomprehension (Nelson & Narens, 1990). Hence, factors that hinder the cognitive processes also hamper metacomprehension accuracy. These factors include a learner’s skills and resources (e.g., reading skill, prior knowledge, reading motivation), text characteristics (e.g., text difficulty), and the reading situation (e.g., reading for comprehension vs. memory of facts; Zwaan & Rapp, 2006; van den Broek et al., 2002).

Metacomprehension accuracy is particularly relevant for learning because a higher compared to a lower accuracy enables a more effective regulation (e.g., rereading) and, hence, a higher text comprehension (Thiede et al., 2009). For example, overestimation entails that learners likely overlook underdeveloped aspects of their text representation, and hence, their efforts in regulating their understanding will very likely be insufficient (e.g., skip rereading, miss relevant parts during rereading). Accordingly, poor accuracy and overestimation in particular, which are a widespread phenomenon (Lin & Zabrucky, 1998; Maki & McGuire, 2002; Prinz et al., 2020a; Thiede et al., 2009), have been shown to result in underachievement (Dunlosky & Rawson, 2012).

The Role of Learner Characteristics for Metacomprehension Accuracy

According to the cue-utilization framework (Koriat, 1997), metacomprehension accuracy depends on the cues that learners use to infer their level of comprehension because cues differ in their validity in indicating actual understanding. For metacomprehension, representation-based and heuristic cues are differentiated for prediction judgments (i.e., judgments made after reading but before answering comprehension questions; cf. Griffin et al., 2009). Representation-based cues emerge from monitoring the text processing (e.g., the coherence of text representation, ease of explanation, memorability of information). They are, hence, closely related to and rather valid indicators of a text representation. Yet, monitoring becomes less likely, the less effectively and routinely learners process a text at the cognitive level. Thus, regarding learner characteristics, poor cognitive skills and resources (i.e., reading skill, working memory capacity, prior knowledge), and reading-related motivational factors (e.g., self-efficacy) can be assumed to hinder metacomprehension accuracy.

In contrast, heuristic cues are available to learners whether or not a text has been read. These are factors that learners can access and believe to be relevant for judgments, including, with regard to learner characteristics, self-perceptions of cognitive or metacognitive capacities and motivational beliefs (e.g., self-perceived reading skill, self-assessed prior knowledge, self-efficacy). Learners can draw on these cues directly to make a judgment. For example, a high self-perceived reading skill might induce a learner to give a high judgment of comprehension, even though it might be an overestimation for the actual text. Therefore, heuristic cues are usually less valid than representation-based cues (Griffin et al., 2009).

Compared to prediction judgments, postdictions are judgments that learners make after having answered comprehension questions. This test experience provides additional judgment cues (e.g., feeling of confidence during answering, number and similarity of response alternatives). Apart from that, postdictions can theoretically be influenced by the same learner characteristics and cues as predictions.

Some of the learner characteristics investigated already, namely personality facets such as extraversion or optimism and gender (e.g., Agler et al., 2019; Buratti et al., 2013; Dahl et al., 2010; de Bruin et al., 2017), hardly influence text comprehension or appear to be less accessible to learners. These characteristics are, thus, conceptually distinct from representation-based and heuristic cues. Yet, they might involve bias-inducing cognitive styles, which are, nevertheless, compatible with the cue-utilization framework.

Findings on the Impact of Learner Characteristics on Metacomprehension Accuracy

As various learner characteristics may impact judgment accuracy, the focus in research has been broad: Prior studies addressed cognitive and metacognitive skills and resources, motivation, personality, and gender. In these studies, accuracy was determined as judgment bias (i.e., the signed difference between judgments and performance; overestimation/underestimation), absolute accuracy (i.e., the absolute deviation of judgments and performance), and/or relative accuracy (i.e., the differentiation between well and less well understood texts).
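The three accuracy measures distinguished above can be made concrete with a minimal Python sketch. It is an illustration under stated assumptions, not the scoring procedure of any reviewed study: the function names and the four-text data set are hypothetical, and relative accuracy is operationalized here as an intra-individual Goodman-Kruskal gamma, which is one common choice that the text above does not prescribe.

```python
from itertools import combinations


def judgment_bias(judgments, scores):
    """Mean signed difference (judgment - performance); > 0 indicates overestimation."""
    return sum(j - s for j, s in zip(judgments, scores)) / len(judgments)


def absolute_accuracy(judgments, scores):
    """Mean absolute deviation of judgments from performance; 0 is perfect."""
    return sum(abs(j - s) for j, s in zip(judgments, scores)) / len(judgments)


def relative_accuracy(judgments, scores):
    """Goodman-Kruskal gamma across texts (assumed operationalization).

    Counts text pairs ordered the same way by judgment and performance
    (concordant) versus the opposite way (discordant); tied pairs are ignored.
    """
    concordant = discordant = 0
    for (j1, s1), (j2, s2) in combinations(zip(judgments, scores), 2):
        product = (j1 - j2) * (s1 - s2)
        if product > 0:
            concordant += 1
        elif product < 0:
            discordant += 1
    if concordant + discordant == 0:
        return None  # gamma is undefined when every pair is tied
    return (concordant - discordant) / (concordant + discordant)


# Hypothetical learner: predicted vs. actual number of correct answers on four texts.
predicted = [8, 7, 9, 4]
actual = [5, 6, 7, 6]

print(judgment_bias(predicted, actual))      # signed: over- and underestimation cancel
print(absolute_accuracy(predicted, actual))  # unsigned: deviations accumulate
print(relative_accuracy(predicted, actual))  # ordering of texts, independent of level
```

In this hypothetical case the bias is smaller than the absolute deviation because one underestimated text partly offsets three overestimated ones, which is why the measures are not interchangeable.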

In the present study, we investigated judgment bias because it shows a good stability across tasks and over time (Kelemen et al., 2000) as opposed to relative accuracy. Hence, stable effects of individual differences are more likely to be detected for judgment bias (cf. Maki et al., 2005). The number of studies in this field is, however, limited. Thus, findings on absolute and relative accuracy can nevertheless provide helpful insights, although generalizations of effects across the different measures have to be drawn with caution. In the following overview of previous research, we therefore focused on findings about learner characteristics’ effects on judgment bias, but also included some findings on absolute and relative accuracy. We also refer to studies that did not strictly examine judgment accuracy for texts (i.e., metacomprehension accuracy) but for some other kind of written material (e.g., problem tasks) or knowledge tests. A summary of the reviewed previous findings is provided in Appendix 1 Table 5.

When interpreting effects of judgment bias, it needs to be considered that bias as a derived score can depend on differences in judgment magnitude and performance. This implies in its extreme that differences in bias between, for example, two groups of learners can completely result from performance differences (i.e., same judgment, yet higher/lower performance). In this case, it can be questioned whether the effect of bias results from metacognitive effects (Griffin et al., 2013, p. 26). Hence, effects of learner characteristics on bias should be interpreted alongside their effects on judgment magnitude and performance. This detailed information is often missing in previous studies though (see Appendix 1 Table 5).
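The extreme case described above can be illustrated with a short worked example in Python. The two groups and all of their values are hypothetical and chosen only so that judgments are identical while performance differs; the helper name `decompose_bias` is likewise an assumption made for this sketch.

```python
def mean(values):
    return sum(values) / len(values)


def decompose_bias(judgments, scores):
    """Report judgment magnitude, performance, and their difference (bias)."""
    magnitude = mean(judgments)
    performance = mean(scores)
    return {
        "judgment": magnitude,
        "performance": performance,
        "bias": magnitude - performance,
    }


# Hypothetical groups: identical judgments, different test performance.
group_a = decompose_bias(judgments=[7, 8, 6, 7], scores=[6, 7, 6, 5])
group_b = decompose_bias(judgments=[7, 8, 6, 7], scores=[4, 5, 3, 4])

# Group differences in each component (B minus A).
bias_gap = group_b["bias"] - group_a["bias"]
judgment_gap = group_b["judgment"] - group_a["judgment"]
performance_gap = group_b["performance"] - group_a["performance"]

print(group_a)
print(group_b)
print(bias_gap, judgment_gap, performance_gap)
```

Here group B overestimates more strongly than group A, yet the judgment gap is zero, so the entire difference in bias stems from the performance gap. Reporting all three components makes this pattern visible.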

Cognitive and Metacognitive Skills and Resources

For adults, reading skill mainly manifests in the proficiency in automatically conducting and strategically regulating the multiple cognitive processes involved in reading at the text level. Thus, a lower compared to a higher reading skill requires more working memory capacity for cognitive processes and therefore hampers monitoring (van den Broek et al., 2002; Veenman, 2016). Accordingly, Maki et al. (2005) and Golke and Wittwer (2017) showed, for texts with an age-appropriate text difficulty, that a lower reading skill leads to more overestimation of comprehension when making predictions, whereas higher reading skill results in less overestimation or rather accurate predictions. For postdictions, which are usually lower than predictions (Pierce & Smith, 2001), the extent of overestimation for lower reading skill decreased, while the high-skilled learners shifted toward underestimation. However, the negative impact of a low reading skill on judgment bias might be mitigated by rereading texts, as findings from Griffin et al. (2008) suggest.

Due to the resource-demanding processes of text comprehension, constraints in working memory capacity (WMC) can also account for poor monitoring. Accordingly, a lower compared to a higher WMC has been shown to hamper metacomprehension accuracy regarding relative accuracy for predictions after one-trial reading of a text (Griffin et al., 2008; Ikeda & Kitagami, 2012). Komori (2016) showed, though for word recall, that a lower compared to a higher WMC was associated with poorer absolute accuracy for postdictions, while WMC was irrelevant for judgment bias. Moreover, a lower compared to a higher WMC is linked to less attention control (Unsworth & McMillan, 2013), which contributes to poor monitoring. However, rereading a text can alleviate or, as Griffin et al. (2008) showed, eliminate the disadvantage of a low WMC.

Since monitoring as a metacognitive skill is a higher-order process, it has also been connected with fluid intelligence (Ohtani & Hisasaka, 2018; van der Stel & Veenman, 2014; Veenman et al., 2005). A higher compared to a lower fluid intelligence helps to process information more rapidly and carefully and to develop strategies prior to task completion (Sternberg, 1986). However, findings on its relationship with monitoring (not strictly metacomprehension) accuracy are very heterogeneous. Ohtani and Hisasaka’s (2018) meta-analysis yielded a small average effect. As it is based on effects aggregated across domains, accuracy measures, and predictions and postdictions, it has to be interpreted cautiously. Besides, reasoning ability is less relevant for text comprehension (Corso et al., 2016). It is, hence, unclear whether fluid intelligence has a substantial effect on metacomprehension accuracy, in particular when the conceptually related WMC (cf. Oberauer et al., 2005) is also considered.

Another potential impact on metacomprehension accuracy, directly or via its influence on text comprehension, is prior knowledge. It includes topic knowledge that enables cognitive processes of text comprehension (e.g., drawing inferences; Kendeou & O’Brien, 2016) and metacognitive knowledge about, for instance, reading strategies, comprehension standards, or monitoring activities (Veenman, 2016). Prior knowledge supports text comprehension (Griffin et al., 2009; Kendeou & O’Brien, 2016; Zabrucky et al., 2015), and it can partially compensate for lower WMC, reading skill, and general cognitive abilities (e.g., Hambrick & Engle, 2002; Miller et al., 2006). Accordingly, a lower compared to a higher topic knowledge has been linked to less accurate predictions in terms of absolute accuracy (Griffin et al., 2009) and relative accuracy (de Bruin et al., 2007). Griffin et al. also showed that it led to stronger underestimation but decreased and arrived at rather accurate judgments for higher topic knowledge. However, participants in Griffin et al.’s study, on average, produced underestimation, not the commonly found overestimation, which might be due to specifics of the study’s material (e.g., detailed baseball knowledge for a psychology student sample). For materials that provoke overestimation, theoretically, lower prior knowledge might lead to stronger overestimations as it impairs text comprehension.

For postdiction accuracy, it is not topic knowledge that seems relevant (Schraw, 1997) but metacognitive knowledge (cf. Lin & Zabrucky, 1998). More specifically, Schraw (1997) unveiled that lower knowledge about monitoring strategies for taking tests was related to underestimation of the correctness of one’s responses while higher knowledge was linked to rather accurate judgments. Contrarily, metacognitive knowledge in its broad sense (i.e., declarative/procedural/conditional knowledge of cognition and knowledge about regulation) and with regard to learning situations in general instead of test taking situations was unrelated to judgment bias for postdictions (Schraw & Dennison, 1994). For prediction accuracy, evidence for the impact of metacognitive knowledge is scanty (Lin & Zabrucky, 1998).

In addition to the objective amount of prior (topic) knowledge, learners have been found to draw on their self-perceived topic knowledge, which reflects the familiarity with a domain or topic, when making predictions and postdictions (Ehrlinger & Dunning, 2003, Study 3; Glenberg et al., 1987; Griffin et al., 2009). As it is only a distal indicator of topic knowledge and not necessarily related to the actual text understanding, a higher self-perceived topic knowledge can theoretically be assumed to provoke overestimation. Findings from Ehrlinger and Dunning support this view. Contrarily, Griffin et al. observed that higher self-perceived topic knowledge resulted in less underestimating, rather accurate judgments, but the overall underestimation in their study limits the transferability of this finding. Generally, learners seem to make only limited use of domain/topic familiarity as judgment cue for text comprehension though (cf. Lin & Zabrucky, 1998).

Moreover, epistemological beliefs as a specific component of metacognitive knowledge (Winne & Hadwin, 1998) have been a focus of interest. They are implicit beliefs about the nature of knowledge and the process of knowing. More sophisticated beliefs (i.e., knowledge is complex, uncertain, justified by evidence, and gained by rational inquiry) are related to more adaptive processing and hence a higher text comprehension than less sophisticated beliefs (i.e., knowledge is simple, certain, reflecting reality, and transmitted by authorities; Bråten et al., 2016). In line with this notion, prior studies found that more sophisticated epistemological beliefs resulted in better strategy selection during learning, which has been interpreted as an indicator of enhanced monitoring (e.g., Mason et al., 2010; Stahl et al., 2006). Whether these findings allow the conclusion that epistemological beliefs impact metacomprehension accuracy is up for debate though (Griffin et al., 2013). Thus, epistemological beliefs might theoretically impact metacomprehension accuracy in the way that more sophisticated beliefs support text comprehension and, hence, the generation of more representation-based cues as opposed to less sophisticated beliefs.

Taken together, empirical findings support the general view that low reading skill, WMC, and prior knowledge are related to poor metacomprehension accuracy. Specifically, a lower reading skill has led to stronger overestimations (for predictions and, to a smaller extent, for postdictions). Lower metacognitive knowledge about monitoring has been linked to underestimations for postdictions. Lower topic knowledge has been related to stronger underestimation for predictions, yet due to specifics of the prior research, it is unclear whether underestimation (or overestimation instead) would also emerge for other material. The impact of WMC and fluid intelligence (reasoning) on judgment bias for text comprehension is understudied. Theoretically, a lower WMC might more likely lead to overestimation, but the effect might be absent after rereading of texts. Lower fluid intelligence might weakly contribute to overestimations, if at all, especially when the other cognitive factors are considered simultaneously.

Motivation

Motivational characteristics can impact metacomprehension accuracy for two reasons. First, as text comprehension is effortful, especially for longer and difficult texts, it depends on learners’ motivation (Guthrie & Klauda, 2016). It influences the constructed level of text representation that provides more or less representation-based cues. Second, motivation in terms of generalized self-perceptions of ability is available as heuristic cues. It is argued that learners draw on these self-representations because they are rather stable across texts and, hence, enable a less effortful and resource-demanding judgment as opposed to a situation-specific integration of text-, learner-, test-, and environment-related cues (Moore et al., 2005). Prior research on metacomprehension accuracy addressed self-perceptions of reading skill and monitoring ability, self-efficacy beliefs, and goal orientation.

Regarding self-perceived reading skill, Kwon and Linderholm (2014) found that, under control of actual reading skill, learners with a higher self-perceived reading skill gave higher predictions and postdictions of text comprehension than those with a lower self-perceived reading skill. This finding suggests, due to the general tendency toward overestimation, that a higher compared to a lower self-perceived reading skill bears a higher risk to overestimate one’s text comprehension.

Additionally, the self-perceived metacomprehension ability, which is positively and moderately related to actual metacognitive knowledge (Schraw & Dennison, 1994), can also be relevant for the accuracy. Learners who ascribed themselves a low general metacomprehension ability showed stronger overestimations (Schraw & Dennison, 1994, Study 2) and poorer absolute accuracy (Schraw, 1994) for item-level postdictions than those with a high self-perceived metacomprehension ability.

A special role among the learner characteristics might be seen in self-efficacy. It refers to learners’ beliefs about how capable they are to perform a certain upcoming task. Thus, comprehension/performance judgments and their accuracy have been construed as one dimension of self-efficacy (Zimmerman & Moylan, 2009). In line with this notion, Vössing and Stamov-Roßnagel (2016) found that lower self-efficacy beliefs for learning tasks (e.g., understanding ideas taught in a course/presented in a text) were related to lower predictions of comprehension than higher self-efficacy beliefs. Bouffard-Bouchard (1990) also observed lower item-level postdictions for problem solving tasks in a low self-efficacy group compared to a high self-efficacy group. Their data furthermore showed that judgments were mostly overconfident in the high self-efficacy group and rather equally often overconfident and underconfident in the low self-efficacy group.

Goal orientation, that is, the disposition to pursue a specific purpose when engaging with a task and to apply certain standards for evaluating task performance, has been assumed to extend to judgment accuracy (Ikeda et al., 2016; Kroll & Ford, 1992; Zhou, 2013). More specifically, performance orientation entails a learner’s focus on their achievements relative to others. This can be expressed in displaying their abilities to project a positive self-image (i.e., performance-approach), which is believed to invite overestimations, or in hiding potential failures and deficits (i.e., performance-avoidance), which is assumed to result in less overestimation or, as self-defense, in underestimation. In contrast, mastery orientation is to strive for increasing one’s knowledge and abilities and to use an intraindividual evaluation of one’s achievements. This should lead to more accurate judgments. Prior studies provide some support for these views. Learners with performance approach have been shown to give higher predictions than mastery-approach learners, while their performance on a text comprehension test was equal (Ikeda et al., 2016, Exp. 3). Moreover, stronger overestimations on predictions have been found for higher performance-approach and performance-avoidance orientations (Kroll & Ford, 1992; Zhou, 2013), which might, however, have mainly been driven by performance differences in Kroll and Ford’s study (no details available in Zhou’s study). For mastery orientation in general, no association with accuracy was evident (Kroll & Ford, 1992; Zhou, 2013), albeit Zhou who also distinguished the less commonly used mastery-avoidance orientation found that it yielded more overestimation. No significant relationships were found between goal orientations and postdiction accuracy (Zhou, 2013).

In conclusion, low self-perceived metacomprehension ability has been linked to stronger overestimations for item-level postdictions; research for predictions is missing. For self-efficacy, findings suggest that higher compared to lower self-efficacy might be related to stronger overestimations, but evidence is still limited. Furthermore, for self-perceived reading skill, it can be speculated that, when reading skill is equal, a higher compared to a lower self-perceived reading skill yields stronger overestimation. With respect to goal orientations, a higher performance orientation has been linked to stronger overestimation for predictions; for postdictions, no relationship has been found.

Personality

Although not explicitly discussed in theoretical models on metacomprehension accuracy, personality traits might function as bias-inducing cognitive styles. Previous research investigated the Big-Five personality facets, optimism, and narcissism. The latter is, however, not considered in the present study as nonclinical samples show rather low values of narcissism (i.e., possible floor effects).

Personality is widely defined by the interrelated Big-Five facets extraversion, openness to experience, neuroticism, conscientiousness, and agreeableness (Rammstedt & John, 2005). For metacomprehension, Agler et al. (2019) investigated relative accuracy and found that only a higher openness was linked to poorer accuracy of predictions. This effect became nonsignificant, however, when accuracy was regressed on all Big-Five facets simultaneously. No reliable relationships occurred for postdictions.

In addition, when judgment accuracy for postdictions in knowledge and ability tests (not text comprehension) was examined, a higher extraversion was rather consistently related to stronger overestimations (Buratti et al., 2013; Dahl et al., 2010; Pallier et al., 2002; Schaefer et al., 2004), but a relationship was not always evident (Händel et al., 2020). Furthermore, a higher openness was also found to be related to stronger overestimations in Buratti et al.’s study, but not in others (Dahl et al., 2010; Händel et al., 2020; Schaefer et al., 2004). Given that extraversion, which is associated with openness, overlaps with a more positive self-esteem and higher optimism (Dahl et al., 2010; Sharpe et al., 2011), these characteristics combined might result in overly confident judgments. Moreover, a higher conscientiousness has been related to more overestimation in some studies (Dahl et al., 2010; Händel et al., 2020, Study 1, for psychology exam), but not in others (Händel et al., 2020, Study 1, for math exam; Schaefer et al., 2004). The reported relationships were mostly small to moderate. Regarding their relationships with other learner characteristics, the Big-Five are most likely linked to self-efficacy and goal orientation (Judge & Ilies, 2002; Zweig & Webster, 2004), not to text comprehension (Agler et al., 2019).

Dispositional optimism is the tendency to believe in positive outcomes in life (Sharpe et al., 2011), which becomes unrealistic when one expects more positive outcomes than could possibly be true (Shepperd et al., 2013). Thus, learners with a higher dispositional optimism might be particularly prone to overestimate their understanding (de Bruin et al., 2017; Kleitman et al., 2019). Händel et al. (2020) observed this type of relationship for performance judgments in a math test but not in a psychology test. De Bruin et al. (2017) also found no reliable connection to overestimation when learners predicted their exam score. Whether optimism impacts the more complex process of metacomprehension is unclear. Regarding its relationship with other learner characteristics, optimism appears to be unrelated to academic performance in general (Rand et al., 2011), positively linked to self-efficacy (Alarcon et al., 2013; Chemers et al., 2001), and presumably also to mastery and performance-approach goal orientation (Carver & Scheier, 2014).

Another factor that might contribute to overestimations is an individual’s tendency to present themselves in an overly positive light, as assessed via the tendency to overclaim knowledge (cf. Bensch et al., 2019; Bing et al., 2011). Overclaiming has been linked to overconfidence in the correctness of one’s responses to a knowledge test (Bensch et al., 2019), yet its relationship with metacomprehension accuracy is unexplored. It is conceivable that a tendency toward a positive self-presentation explains some amount of overestimation which, interestingly, would not be attributable to poor metacomprehension during reading but to a subsequent (more or less conscious) adjustment when making the comprehension judgments.

In conclusion, evidence for an impact of personality (i.e., the Big-Five and optimism) on judgment accuracy is very mixed. For metacomprehension accuracy, no reliable effects of the Big-Five have been shown yet and studies on optimism are missing. As a currently unexplored facet, overclaiming as a self-enhancement tendency might further explain why some learners are more prone to overestimations than others.

Gender

Although males are observed to be more overconfident than females in various domains (Lundeberg & Mohan, 2009), findings in the context of learning are mixed. Some findings support a weak gender effect (e.g., Buratti et al., 2013; Händel et al., 2020) and others discourage it (e.g., Ariel et al., 2018; Dahl et al., 2010; de Bruin et al., 2017; Händel et al., 2020; see Appendix 1 Table 5 for details). For metacomprehension accuracy, Stankov and Lee (2008) observed a small effect as males more likely overestimated the correctness of their responses (i.e., item-level postdictions) to text comprehension questions than females.

Importantly, although men might slightly outperform women in text inferencing, no effect of gender was found for text memory, knowledge integration, knowledge access (Hannon, 2014), and composite measures of text comprehension (Hannon, 2014; Hyde & McKinley, 1997). Moreover, males compared with females report being less extraverted, open, agreeable, and neurotic (Moutafi et al., 2006); adopting a weaker mastery but stronger performance goal orientation (D’Lima et al., 2014); and having a lower self-perceived reading skill even as young adults (Marsh, 1989) and less sophisticated epistemological beliefs (Bendixen et al., 1998; King & Magun-Jackson, 2009).

Limitations of Previous Research and Open Questions

Which learner characteristics actually contribute to metacomprehension accuracy in terms of overestimation and underestimation of text comprehension cannot yet be answered clearly. Reasons are a scarcity of research for many of the learner characteristics and methodological discrepancies between the studies. More importantly though, most studies investigated only one, sometimes two learner characteristics simultaneously. This neglects the relationships between the various potentially relevant learner characteristics. Hence, findings from previous studies are insufficient to comprehensively explain the actual impact of learner characteristics on judgment bias. For example, a learner characteristic’s relationship to bias might be substantial in terms of a bivariate correlation, but can become irrelevant when other characteristics are considered concurrently, or the impact of a learner characteristic on bias might be obscured (in bivariate data) until other learner characteristics are included. Both have been observed in Händel et al.’s (2020) study, which is so far an exception in previous research because it investigated the impact of multiple characteristics (gender, performance, motivation, and personality) on the judgment accuracy for knowledge tests in math and psychology. Yet, their findings are not fully transferable to metacomprehension accuracy as it is based on other cognitive and metacognitive processes and cues specific to text comprehension. To circumvent these shortcomings and to gain more reliable insights into the role of learner characteristics for metacomprehension accuracy, studies are needed that include several learner characteristics simultaneously.
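The first scenario described above, a substantial bivariate correlation that vanishes once a related characteristic is considered concurrently, can be reproduced with a small Python sketch. Everything in it is a constructed assumption for illustration: the data are hypothetical, bias is made to depend on reading skill alone, and `second_trait` stands for any characteristic that merely covaries with reading skill. It is not a reanalysis of any reviewed study.

```python
from math import sqrt


def centered(values):
    m = sum(values) / len(values)
    return [v - m for v in values]


def cross(a, b):
    """Sum of cross-products of two equally long sequences."""
    return sum(x * y for x, y in zip(a, b))


def pearson(x, y):
    """Bivariate Pearson correlation."""
    cx, cy = centered(x), centered(y)
    return cross(cx, cy) / sqrt(cross(cx, cx) * cross(cy, cy))


def two_predictor_slopes(x1, x2, y):
    """Least-squares slopes of y on x1 and x2 (with intercept), via the normal equations."""
    c1, c2, cy = centered(x1), centered(x2), centered(y)
    s11, s22, s12 = cross(c1, c1), cross(c2, c2), cross(c1, c2)
    s1y, s2y = cross(c1, cy), cross(c2, cy)
    det = s11 * s22 - s12 ** 2
    b1 = (s22 * s1y - s12 * s2y) / det
    b2 = (s11 * s2y - s12 * s1y) / det
    return b1, b2


# Hypothetical data: bias depends on reading skill only.
reading_skill = [1, 2, 3, 4, 5, 6, 7, 8]
offsets = [1, -1, -1, 1, 1, -1, -1, 1]  # makes second_trait similar, not identical, to skill
second_trait = [r + o for r, o in zip(reading_skill, offsets)]
bias = [10 - r for r in reading_skill]

bivariate_r = pearson(second_trait, bias)
slope_skill, slope_trait = two_predictor_slopes(reading_skill, second_trait, bias)

print(round(bivariate_r, 3))     # strongly negative on its own
print(slope_skill, slope_trait)  # the second trait's slope is zero once skill is included
```

Analyzed alone, the second characteristic appears to be a strong predictor of bias. Entered together with reading skill, it contributes nothing, because its apparent effect was carried entirely by its overlap with reading skill.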

Present Study

To address a major shortcoming of previous research, we identified, based on the literature review, multiple potentially relevant learner characteristics and examined their impact on metacomprehension accuracy in terms of judgment bias (overestimations/underestimations) of text comprehension. To do so, we used expository texts with highly similar features such as text difficulty because text features can also add to judgment bias (cf. Lin & Zabrucky, 1998), which was, however, not in the scope of the present study. We assessed accuracy with respect to two central comprehension processes: extracting main idea units (i.e., factual questions) and building semantic connections between and across sentences (i.e., inference questions). We did not assume differential effects of the learner characteristics on judgment bias for predictions versus postdictions (unless specified otherwise) and for factual versus inference questions as neither theory nor previous findings currently support this view.

We made the following assumptions about the impact of learner characteristics on judgment bias. For the cognitive factors, we expected in line with previous research (Golke & Wittwer, 2017; Maki et al., 2005; for texts with age-appropriate text difficulty) that a lower reading skill would be associated with stronger overestimation. A lower WMC should either be related to stronger overestimation (cf. Griffin et al., 2008; Ikeda & Kitagami, 2012; Komori, 2016) or be irrelevant due to the rereading of texts (cf. Griffin et al., 2008), which was allowed in the present study. Moreover, a lower fluid intelligence might be related to stronger overestimation. However, previous findings are heterogeneous (Ohtani & Hisasaka, 2018), and an effect of fluid intelligence might be absent when the conceptually related WMC is considered as well. Regarding the knowledge-related learner characteristics, (a) we made no directed hypothesis about the impact of topic knowledge. Theoretical considerations suggested that a lower prior knowledge would result in stronger overestimations. In contrast, Griffin et al. (2009) found stronger underestimation for lower topic knowledge, yet the specifics of their study limited the transferability of their finding to the present study. This inconclusive situation was basically the same for (b) self-perceived topic knowledge (topic familiarity). Due to Ehrlinger and Dunning’s (2003, Study 3) support for the theoretically consistent assumption that a higher familiarity results in stronger overestimations, we adopted it for the present study. For (c) metacognitive knowledge about monitoring strategies, lower levels might result in stronger underestimation (Schraw, 1997) but probably only for postdiction judgments.

For the motivational factors, the few findings suggested that stronger overestimation can be expected for a higher self-perceived reading skill (Kwon & Linderholm, 2014), a lower self-perceived metacomprehension ability (Schraw, 1994), and, albeit for prediction judgments only, for a higher performance-approach and performance-avoidance goal orientation (Ikeda et al., 2016, Exp. 3; Kroll & Ford, 1992; Zhou, 2013). Moreover, we assumed that a higher self-efficacy for text comprehension would be associated with stronger overestimations while lower self-efficacy would be linked to less overestimation or to underestimation (Bouffard-Bouchard, 1990; Vössing & Stamov-Roßnagel, 2016).

Furthermore, we expected that the impact of personality on metacomprehension accuracy would be weak or negligible when the various personality facets are considered simultaneously (Agler et al., 2019). If, nevertheless, an effect occurred, it should be primarily a higher extraversion and/or openness that resulted in stronger overestimation (Buratti et al., 2013; Dahl et al., 2010; Händel et al., 2020, for psychology exam; Pallier et al., 2002; Schaefer et al., 2004). With respect to dispositional optimism, previous findings are ambivalent (de Bruin et al., 2017; Händel et al., 2020). Hence, if at all, we expected that a higher dispositional optimism would be related to stronger overestimation. We also assumed that a higher overclaiming is linked to a stronger overestimation (Bensch et al., 2019). Concerning gender, we expected that men would show a somewhat stronger overestimation than women (Stankov & Lee, 2008).

It is noteworthy that the assumptions mainly reflect findings on bivariate relationships. We did not expect that every effect should be present when all learner characteristics were considered concurrently. On the contrary, given the relationships between various learner characteristics within and across cognitive, motivational, and personality categories, including gender, we assumed that some of the hypothesized effects reflecting bivariate relationships would be diminished or mitigated then. Moreover, it has been shown that learners use indeed representation-based cues instead of solely drawing on heuristic cues (Lin & Zabrucky, 1998). Therefore, those learner characteristics that can directly impact text comprehension and hence representation-based cues might be more likely to play a role for judgment bias than motivational and personality factors which are more distal to text comprehension.

Method

Sample

In total, 270 students from various study programs (mainly educational science, teacher education, psychology, linguistics, business administration, law studies, natural sciences, and social sciences) participated in the first of two sessions. As 15 students missed the second session, the final sample was N = 255. Their mean age was 22.26 (SD = 3.65, Mdn = 21.00) years, 173 participants (68%) were female, and 233 participants (91%) were native speakers. Nonnative speakers were reasonably proficient in German due to the entry requirements of their study programs. Moreover, 109 participants (43%) were first-semester students (Mdn = 2 semesters). Students participated voluntarily and received money or course credit for their participation.

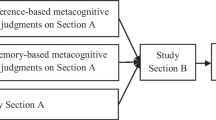

Procedure

Participants attended two sessions (1 week apart) in groups of six or less. In session 1 (approx. 90 min), they were informed about the goal and procedure of the study before they gave their informed consent. Then, they completed the two computerized tests (i.e., reading skill and WMC test). After that, they provided sociodemographic data and continued with the paper–pencil reasoning test (8-min limit). After a short break, they filled in the paper–pencil questionnaires (in the order of their presentation in the “Instruments” section). In session 2 (approx. 70 min), participants assessed their topic knowledge before they completed the topic knowledge test. Afterwards, they were informed about the number and nature of the to-be-read texts. They were instructed to read for understanding as their text comprehension would be tested afterwards. Then, participants read the first text (10-min limit). Reading time was fixed to control time-on-task and participants could not proceed before the time limit had expired. After text reading, participants received a description of the number and nature of the text comprehension questions. Then, they made their prediction judgments, answered the factual and inference questions, and provided their postdiction judgments. The procedure was repeated for the two other texts. Finally, participants received their course credit or money.

Materials

Texts

We used three expository texts (topics: the social-cognitive theory, the situated learning theory, and the attribution theory). They were comparable in length (630, 661, and 601 words, respectively) and readability (Flesch Reading Ease score 26, 31, and 38, respectively). The readability scores reflect rather difficult to read texts typical for higher education. The text order was identical for all participants as we were interested in evaluating individual differences in learner characteristics across tasks (cf. Carlson & Moses, 2001).

Text Comprehension Test

For each text, we used six factual questions and six inference questions. The factual questions asked for information explicitly stated in the texts. The inference questions referred to text information that had to be inferred from the given text information during reading. Both question types addressed central information of a text, yet the inference questions tested a higher level of understanding. Each question had a single-choice format with four response alternatives. As the questions addressed different parts of text information, as recommended for the assessment of metacomprehension (Wiley et al., 2005), internal consistency was low for the factual questions (Cronbach’s α = 0.64) and the inference questions (0.32).
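The internal consistency values reported throughout this section are Cronbach's α coefficients. As a minimal sketch of how such a coefficient can be obtained from item-level scores, the standard formula can be written as follows. The function name and the data layout (one row per participant, one column per item) are assumptions made for illustration; the sketch does not reproduce the software routine used in the study.

```python
from statistics import pvariance

def cronbach_alpha(scores):
    """Cronbach's alpha for a participants x items score matrix.

    scores: list of rows, one row per participant, one entry per item
    (hypothetical layout chosen for this sketch).
    """
    k = len(scores[0])  # number of items
    # Variance of each item across participants.
    item_vars = [pvariance([row[i] for row in scores]) for i in range(k)]
    # Variance of the participants' sum scores.
    total_var = pvariance([sum(row) for row in scores])
    # alpha = k/(k-1) * (1 - sum of item variances / variance of sum score)
    return k / (k - 1) * (1 - sum(item_vars) / total_var)
```

Because the formula only uses a ratio of variances, it does not matter whether population or sample variances are used, as long as the same kind is used in numerator and denominator. Items that tap different parts of a text covary weakly, which lowers the variance of the sum score relative to the item variances and therefore lowers α.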

Instruments

Reading Skill

The subtest “text comprehension” of the computerized German reading comprehension test for adults ELVES (Richter & van Holt, 2005) contained two expository texts with 16 verification statements that focused on higher-order comprehension processes. The test score was an integrated value of performance correct and response latency (average Cronbach’s α of 0.70, cf. Richter & van Holt, 2005).

Working Memory Capacity

In the computerized Reading-Span Task (Oswald et al., 2015; German version: Rummel et al., 2017, Cronbach’s α = 0.85), participants had to judge sentences as to whether or not they were sensible, each sentence followed by a letter to be recalled. Afterwards, the letters had to be identified in the correct order. The reading span score was, as recommended by Conway et al. (2005), the sum of letters recalled in the correct position. In line with common procedure, we filtered out scores of participants who misjudged 15% of all sentences or more, indicating that they, contrary to instruction, focused on letter memorization. Thus, the scores of 17 participants were omitted and treated as missing values.
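The scoring rule and the exclusion criterion described above can be illustrated with a short sketch. The data representation (strings of presented and recalled letters per trial, a list of booleans for the sentence judgments) and the function names are hypothetical; only the rule itself (sum of letters recalled in the correct position, exclusion at 15% or more misjudged sentences) is taken from the text.

```python
def reading_span_score(trials):
    """Sum of letters recalled in the correct serial position.

    trials: list of (presented, recalled) string pairs, one per trial
    (hypothetical representation for this sketch).
    """
    return sum(
        1
        for presented, recalled in trials
        for p, r in zip(presented, recalled)
        if p == r
    )

def usable_wmc_score(trials, judgments_correct, max_error_pct=15):
    """Return the span score, or None if the score is treated as missing.

    judgments_correct: list of booleans, one per sentence judgment.
    A participant who misjudged max_error_pct percent of the sentences
    or more is excluded (score treated as a missing value).
    """
    errors = judgments_correct.count(False)
    # Integer comparison avoids floating-point issues at the 15% boundary.
    if errors * 100 >= max_error_pct * len(judgments_correct):
        return None
    return reading_span_score(trials)
```

For example, a participant who recalled "FKL" correctly but reported "QTR" for the presented sequence "QRT" would receive 3 + 1 = 4 points, provided that fewer than 15% of the sentence judgments were wrong.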

Fluid Intelligence (Reasoning)

The nonverbal matrices subtest of the Intelligence Structure Test 2000 R (Amthauer et al., 2001; Cronbach’s α = 0.71) comprised 20 items and a time limit of 8 min. Each item presented five figures, of which one completed the logical structure of a given set of figures. Items were scored as correct or incorrect (1/0 points). A participant’s sum of correct responses was converted into the age-dependent standard value.

Goal Orientation

The questionnaire SELLMO-St (Spinath et al., 2002) with 31 items and a 5-point scale (1 = disagree completely, 5 = totally agree) assessed goal orientation for university students in terms of mastery (8 items, Cronbach’s α = 0.78), performance-approach (7 items, α = 0.81), performance-avoidance (8 items, α = 0.87), and work-avoidance goal (8 items; not analyzed).

Optimism

The Revised Life Orientation Test by Scheier et al. (1994, Study 2) measured dispositional optimism using six items about optimism (Cronbach’s α = 0.80) and four filler items, all on a 5-point scale (1 = disagree completely, 5 = totally agree).

Personality

The BFI-K, a short scale of the Big-Five Inventory in German (Rammstedt & John, 2005), included 21 items to assess extraversion (4 items; Cronbach’s α = 0.82), agreeableness (4 items; not analyzed), conscientiousness (4 items; α = 0.72), neuroticism (4 items; α = 0.79), and openness (5 items; α = 0.74) on a 5-point scale (1 = disagree completely, 5 = totally agree).

Overclaiming

The short form of the Overclaiming Questionnaire (Bing et al., 2011) measured self-enhancement as the tendency to inflate the amount of one’s knowledge. It had 25 items and a 5-point scale (0 = not familiar at all, 4 = very familiar), of which 17 items were real terms (e.g., myth) and eight items were nonexistent terms (e.g., meta-toxins, Cronbach’s α = 0.72).

Self-Perceived Reading Skill

Based on Schraw (1994), we asked participants to assess their level of reading skill with a visual analogue (100 mm) item response format from 1 (very low) to 100 (very high). The term reading skill was explained, and the poles of the scale were labeled in the instruction. A participant’s score was the mm value of their mark on the scale.

Self-Perceived Metacomprehension Ability

Following Schraw (1994), we asked participants to evaluate their ability to accurately monitor and assess their text comprehension using a visual analogue (100 mm) item response format from 1 (very poor) to 100 (excellent). The term metacomprehension ability was described, and the poles of the scale were labeled in the instruction. The position of the mark on the scale (in mm) represented the score.

General Monitoring Strategies

The General Monitoring Strategies Checklist (Schraw, 1997) included 10 items and a 5-point scale (1 = disagree completely, 5 = totally agree) to collect self-reported monitoring knowledge and strategies (e.g., I stop and reread when I get confused) when one has to demonstrate one’s understanding or knowledge on a test. Cronbach’s alpha was initially 0.63, but increased to 0.72 after excluding three items with a discrimination power below 0.30.

Self-Efficacy for Text Comprehension

We used six items of the Motivated Strategies for Learning Questionnaire (subscale self-efficacy for learning and performance; Pintrich et al., 1991) with a 5-point scale (1 = disagree completely, 5 = totally agree). We changed their wording by referring to text comprehension instead of “learning in class”. We instructed participants to answer the items with regard to reading an expository text about a rather new psychological topic about which they would have to answer comprehension questions. Cronbach’s α was 0.81.

Epistemological Beliefs

The Epistemological Belief Questionnaire (Schommer, 1990; translated into German by Klopp, 2014) contained 63 items for five subscales (i.e., simple knowledge, omniscient authority, certain knowledge, innate ability, quick learning) and a 5-point response scale (1 = disagree completely, 5 = totally agree). Internal consistency (Cronbach’s alpha) was very poor for all subscales, Mdn = 0.32, M = 0.31, Min = − 0.10, Max = 0.59. An alternative factor structure proposed by Klopp showed the same problem. Therefore, epistemological beliefs were excluded from data analyses.

Self-Perceived Topic Knowledge

Participants indicated the amount of their prior knowledge of the topics of the three to-be-read texts (i.e., the social-cognitive theory, the situated learning theory, and the attribution theory; 3 items) on a 6-point response scale (1 = nothing, 6 = a lot). Cronbach’s α was 0.86.

Topic Knowledge

Pre-existing knowledge about the three text topics was assessed with 12 questions, which had a single-choice format with four response alternatives and were scored as correct versus incorrect (1/0 points). Their content did not overlap, either verbatim or in paraphrase, with the questions of the text comprehension tests. Instead, the topic knowledge questions addressed general knowledge that would be helpful to understand the content of the texts. The questions tapped heterogeneous aspects of the topics, which is reflected in a low internal consistency: The initial Cronbach’s α was 0.39; it increased to 0.51 after two items with a discrimination power near zero were excluded.

Metacognitive Judgments and Metacomprehension Accuracy

After reading a text, participants predicted how many of the comprehension test questions (values 0–6) they would answer correctly (i.e., prediction). After the test, they indicated how many questions (values 0–6) they thought they had answered correctly (i.e., postdiction). Judgments were given separately for factual and inference questions.

Metacomprehension accuracy was calculated as judgment bias, which is the signed difference between a participant’s judgment and their actual performance (negative values = underestimation, positive values = overestimation). Four outcome measures were calculated: the prediction bias and the postdiction bias of factual and inference questions, respectively.
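To make the bias measure concrete, the following minimal sketch computes the signed difference for a single text and question type, and then averages it over texts. The averaging step and the function names are assumptions made for illustration, since the aggregation across the three texts is not spelled out above.

```python
def judgment_bias(judged_correct, actual_correct):
    """Signed bias: judgment minus performance (both on the 0-6 scale).

    Positive values indicate overestimation, negative values
    underestimation, and zero indicates an accurate judgment.
    """
    return judged_correct - actual_correct

def mean_bias(pairs):
    """Average bias over texts (assumed aggregation, for illustration).

    pairs: list of (judged_correct, actual_correct) tuples, one per text.
    """
    return sum(judgment_bias(j, a) for j, a in pairs) / len(pairs)
```

A participant who predicts five correct answers but answers only three correctly has a bias of +2 (overestimation). Because the measure is signed, overestimation on one text and underestimation on another can cancel out in the average.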

Data Handling and Analysis

We used IBM SPSS Statistics version 27.0. Values of 2 SD above/below a variable’s group mean were handled as outliers and replaced with this cutoff value. Missing data occurred in less than 1%. More specifically, (a) no more than 2% of data were missing on any questionnaire, (b) seven scores of the reading skill test were unavailable due to technical issues, and (c) the WMC score was missing once due to technical issues and was omitted for 17 participants due to violations of instruction (see the “Instruments” section). Missing data were replaced using the SPSS Multiple Imputation procedure.
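The outlier rule described above (values beyond 2 SD from the mean are replaced with the cutoff value) can be sketched as follows. This is an illustration only, not the SPSS procedure itself; the function name is hypothetical, and the use of the sample standard deviation is an assumption.

```python
from statistics import mean, stdev

def clamp_outliers(values, n_sd=2.0):
    """Replace values beyond mean +/- n_sd * SD with the cutoff value.

    Uses the sample standard deviation (assumption for this sketch).
    """
    m = mean(values)
    sd = stdev(values)
    lower, upper = m - n_sd * sd, m + n_sd * sd
    # Values inside the interval are unchanged; others are set to the bound.
    return [min(max(v, lower), upper) for v in values]
```

Note that the cutoffs are computed from the original data, including the outliers themselves, so a single extreme value widens the interval it is compared against.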

To test the impact of the learner characteristics on metacomprehension accuracy, we conducted a hierarchical regression analysis (method: forward) for each of the four outcome measures (i.e., judgment bias of predictions/postdictions of factual/inference questions). Based on our assumption about the impact of learner characteristics when considered simultaneously (see the “Present Study” section) and similar to the analytical approach in Händel et al. (2020), we included the learner characteristics in three steps as predictors into the regression models: (1) gender because it has been shown to be related to several other cognitive, motivational and personality characteristics (see “Introduction”); (2) the objective measures of cognitive learner characteristics (i.e., reading skill, reasoning, WMC, and prior knowledge) as they can directly impact text comprehension and, hence, representation-based cues; (3) the subjective measures of self-perceptions of abilities and motivational and personality factors, whose impact on accuracy is mostly less substantiated by previous research (see “Introduction”). The statistical assumptions for the regression analyses were met (i.e., normal distribution of the outcome variables and residuals, linear relationships between predictors and outcome variables, homoscedasticity, and no multicollinearity).
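The logic of entering predictors in successive blocks can be illustrated with a small, self-contained sketch that reports R² and its increment after each block. It is a simplification under stated assumptions: it fits ordinary least squares via the normal equations, all function names are hypothetical, and it omits the forward selection of predictors within a block as well as any significance testing.

```python
def r_squared(X, y):
    """R^2 of an OLS fit of y on the columns of X plus an intercept."""
    n = len(y)
    rows = [[1.0] + [float(v) for v in row] for row in X]
    p = len(rows[0])
    # Normal equations A b = c with A = X'X and c = X'y.
    A = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    c = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    # Gauss-Jordan elimination with partial pivoting on [A | c].
    M = [A[i] + [c[i]] for i in range(p)]
    for col in range(p):
        pivot = max(range(col, p), key=lambda k: abs(M[k][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for k in range(p):
            if k != col:
                f = M[k][col] / M[col][col]
                M[k] = [a - f * b for a, b in zip(M[k], M[col])]
    beta = [M[i][p] / M[i][i] for i in range(p)]
    fitted = [sum(b * v for b, v in zip(beta, r)) for r in rows]
    y_bar = sum(y) / n
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    return 1 - ss_res / ss_tot

def hierarchical_r2(blocks, y):
    """Enter predictor blocks cumulatively; return (R^2, delta R^2) per step.

    blocks: list of blocks, each block a list of predictor columns.
    """
    results, columns, previous = [], [], 0.0
    for block in blocks:
        columns.extend(block)
        X = list(zip(*columns))  # columns -> one row per participant
        r2 = r_squared(X, y)
        results.append((r2, r2 - previous))
        previous = r2
    return results
```

With blocks ordered as in the analysis (gender first, then the objective cognitive measures, then the subjective measures), the increment in R² at each step indicates how much additional variance in judgment bias the newly entered block explains beyond the blocks entered before it.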

To facilitate the interpretation of bias effects, we plotted the effect of each statistically significant learner characteristic on judgment bias showing whether high and low levels of the learner characteristic resulted in overestimation or underestimation (Fig. 1). Furthermore, we plotted the effects of each significant learner characteristic on judgment magnitude and performance separately to inspect whether its impact on bias was driven by influences on the one or the other (Figs. 2 and 3).

Results

Descriptive Statistics

The descriptive statistics for the outcome measures (see Table 1) show that the mean performance on both question types was on a medium level (i.e., no floor or ceiling effect). Thus, the mean judgment bias can be interpreted meaningfully. Moreover, judgment bias showed a substantial range in the extent of overestimation and underestimation with mostly a tendency toward overestimation. Table 2 presents the descriptive statistics for the learner characteristics and their bivariate correlations. Table 3 contains the bivariate correlations between the learner characteristics and the outcome measures (i.e., judgment magnitudes, performance, and judgment bias). It can be seen that a lot of the learner characteristics were significantly related to judgment magnitude, and a smaller number of them were (also) associated with performance. Those learner characteristics that were not related to the same extent to judgments and performance yielded a significant correlation with the bias measure. Overall, there were a few learner characteristics that showed a significant bivariate correlation with judgment bias. However, not all of them remained statistically significant for judgment bias when considered together in the regression analyses.

Regression Analyses

The regression analyses (see Table 4) revealed that the statistically significant learner characteristics explained a moderate amount (cf. Cohen, 1988) of 17% and 14% of variance in the measures of prediction bias and a small amount of 11% and 12% of variance in the postdiction bias measures. Hence, learner characteristics were slightly more relevant for the prediction bias than for the postdiction bias. Which learner characteristics were significant for judgment bias differed between the factual and the inference questions.

The regression analysis for the prediction bias of factual questions revealed (see Table 4) that gender, WMC, topic knowledge, self-perceived topic knowledge, and self-perceived metacomprehension ability were statistically significant predictors. The impact of topic knowledge and reading skill was less straightforward, however: When initially included in step 2 of the analysis (see model 2 in Table 4), reading skill was statistically significant for the prediction bias, while topic knowledge was not. However, when self-perceived topic knowledge was included as significant predictor in the last step of the analysis, the weight of topic knowledge increased considerably (probably because some of its shared variance with self-perceived topic knowledge got cleared), revealing it as statistically significant, whereas the weight of reading skill decreased slightly falling below the level of significance.

Regarding the question whether high and low levels of the significant learner characteristics resulted in overestimation or underestimation, Fig. 1 shows that, in line with expectations, males, lower WMC, and lower topic knowledge, and, contrary to expectations, higher self-perceived topic knowledge and self-perceived metacomprehension ability were associated with stronger overestimations while their counterparts showed hardly any overestimation. Furthermore, to unravel how the identified learner characteristics impacted judgment bias, Fig. 2 presents their relationship with judgment magnitude and performance for the factual questions. It can be seen that gender and self-perceived metacomprehension ability mainly influenced the magnitude of the prediction judgments, but hardly performance. In contrast, WMC, actual topic knowledge, and self-perceived topic knowledge had a significant effect on performance and a slight (albeit not statistically significant) influence on judgment magnitude.

For the postdiction bias of the factual questions, which slightly tended toward underestimation on the group level (see Table 1), underestimation was prevalent for females, higher reading skill, lower self-perceived topic knowledge, and lower self-perceived metacomprehension ability (see Fig. 1). Conversely, males, participants with lower reading skill, and higher self-perceptions of topic knowledge and of metacomprehension ability were hardly overestimating anymore. As Fig. 2 shows in greater detail, the effects of gender and self-perceived metacomprehension ability on postdiction bias resulted mainly from differences in judgment magnitude, not in performance. Self-perceived topic knowledge was related to inverse effects on both judgment magnitude and performance, that is, participants with higher self-perceived topic knowledge provided higher judgments but lower performance than participants with lower self-perceived knowledge, although the spread between judgment and performance was greater for the latter. Furthermore, the bias-inducing effect of reading skill was mainly due to the fact that participants with higher reading skill outperformed those with lower reading skill, while their judgments were almost equal.

Regarding the inference questions, the results for the prediction bias and postdiction bias were similar. As expected, a lower reading skill was associated with stronger overestimations than higher reading skill (see Fig. 1). A higher amount of self-reported general monitoring strategies was related to stronger overestimations compared to lower levels of this learner characteristic, which, against our expectation, still showed some overestimation. Likewise, in line with expectations, a higher compared with a lower self-efficacy and, although only for the prediction bias, a higher compared to a lower openness contributed to stronger overestimations. As Fig. 3 breaks down, the effect of openness on the prediction bias was mainly based on differences in judgment magnitude, not performance. However, the effects of reading skill, self-efficacy, and self-reported general monitoring strategies on the prediction bias and postdiction bias were driven by divergent effects on both judgment magnitude and performance, albeit statistically significant differences in judgments and performance, respectively, occurred only for self-efficacy. It is also noteworthy that gender, although it was a significant predictor in the first step of the regression analyses, became nonsignificant after motivational and personality factors were included in the analyses, indicating that the latter factors carry the true relationship between gender and judgment bias for inference questions.

Discussion

We examined which learner characteristics contribute to overestimations and underestimations of text comprehension. Based on a literature review and theory, we investigated multiple learner characteristics concurrently to consider interdependencies between them. In doing so, we addressed a major gap in previous research, and the present study is the first of its kind in the field of metacomprehension. Since our texts resulted, on average, in medium performance, overestimations and underestimations are not a mere by-product and, hence, not a statistical artifact of very hard or very easy tasks (cf. Juslin et al., 2000). Thus, the judgment bias and the impact of learner characteristics on it can be interpreted meaningfully.

Who Is at Risk to Overestimate or Underestimate Their Text Comprehension?

We found a general tendency toward overestimation, except for the postdictions of the factual questions. Which learner characteristics substantially contributed to overestimation, or underestimation, differed mainly between factual and inference questions. For factual questions, we found that men provided considerably higher prediction and postdiction judgments than women, while their performance was equal. Consequently, men overestimated their comprehension for predictions of factual questions more strongly than women who were rather accurate. When making postdictions, men hardly overestimated and women underestimated their comprehension. These findings do not necessarily mean that men have a better metacomprehension accuracy in this situation because men and women have lowered their postdictions to an equal extent. It is common that learners drop their judgments from predictions to postdictions as a reaction to the test experience (Pierce & Smith, 2001). Hence, this finding may also reflect a general metacognitive heuristic rather than a gender-specific difference. For the inference questions, a gender effect disappeared after self-efficacy and the self-assessed amount of monitoring strategies were considered. Hence, the latter two factors accounted for men’s stronger overestimations for inference questions. Thus, our findings add to previous studies that either did (e.g., Buratti et al., 2013; Stankov & Lee, 2008) or did not find a gender effect (e.g., Ariel et al., 2018; de Bruin et al., 2017), which altogether underline the relevance of contextual influences such as subject matter for the effect to occur.

A rather consistent finding was that a low reading skill contributed to overestimations, in particular for inference questions. For factual questions, a lower compared to a higher reading skill was also, albeit only marginally significantly, related to stronger overestimations for predictions. For the postdiction bias of factual questions, that, in general, tended toward underestimation, a higher reading skill resulted in underestimation and a lower reading skill in rather accurate judgments. This was, however, the result of differences in performance, not in judgment magnitude. Contrarily, for the inference questions, the stronger overestimations occurred because participants with a lower reading skill provided slightly higher judgments and lower performance (both not statistically significant though) than those with higher reading skill. In all, these findings are compatible with prior studies using texts with age-appropriate text difficulty (Golke & Wittwer, 2017; Maki et al., 2005) and with theories on text comprehension and the cue-utilization framework on metacomprehension (cf. Griffin et al., 2009). Concretely, deficits in reading skill entail that learners construct poorer mental text representations (i.e., fewer, more incorrect, and less integrated information) for which they also have to invest more cognitive resources. Hence, they have fewer resources available for metacomprehension compared to learners with a higher reading skill. This includes less awareness of representation-based cues which therefore cannot be used for valid comprehension judgments and, relevant for postdictions, a poorer mental representation also hampers evaluating the correctness of one’s responses to comprehension questions (Griffin et al., 2009; Koriat, 1997). In this case, learners with a lower reading skill are seduced to overestimate rather than to underestimate their comprehension.

Importantly, the detrimental effect of lower reading skill (in particular for judgment bias of inference questions) occurred although participants could reread each text. Rereading normally improves comprehension as it alleviates constraints in WMC which enhanced metacomprehension in terms of relative accuracy in Griffin et al.’s (2008) study. However, in the present study, either rereading as a regulation strategy was insufficient to alleviate poorer reading skills for the text materials or the less-skilled participants did not reread (they were not obliged to do so as opposed to Griffin et al.’s study). The latter interpretation is supported by the fact that learners with lower reading skills often hold erroneous beliefs about what text comprehension involves and when comprehension is attained (i.e., standards of coherence, cf. van den Broek et al., 2011). Furthermore, it is worth noting that participants in the present study were university students. Thus, even among learners with a comparatively high level of reading skill, differences in this ability affect judgment bias noticeably. For learners with poorer reading skills, this negative effect should be even more pronounced.

Moreover, a lower topic knowledge contributed to stronger overestimations for the prediction of factual questions. Similar to the role of reading skill, having little topic knowledge impedes the construction of a mental text representation (cf. Kendeou & O’Brien, 2016; van den Broek et al., 2002). Accordingly, we found that lower compared to higher topic knowledge was related to a substantially lower performance on the factual questions. Its positive effect on the magnitude of the prediction judgments was, however, only slight (and statistically nonsignificant). Hence, learners with low topic knowledge might have had some sense of their lower understanding, but they failed to adjust their prediction judgments properly compared to learners with high topic knowledge. The same pattern was found for the prediction judgments of the inference questions, although the impact of topic knowledge on the prediction bias did not prevail over that of other learner characteristics. For the postdictions, however, learners with lower and higher topic knowledge were better able to match their judgments to their lower and higher performance, respectively; hence, no effect on postdiction bias occurred, neither for the factual nor the inference questions.

Theoretically, a low WMC should contribute to overestimations due to its limiting effect on comprehension (cf. van den Broek et al., 2002) and, hence, metacomprehension. For measures other than judgment bias, a detrimental effect has already been shown (Griffin et al., 2008; Ikeda & Kitagami, 2012; Unsworth & McMillan, 2013). Rereading of texts, however, can alleviate this effect (Griffin et al., 2008). Although rereading was allowed in the present study, we found that lower WMC did result in stronger overestimations, but only for the prediction bias of factual questions. This suggests, first, that lower-WMC participants did not necessarily reread the texts; hence, lower WMC took its toll. Second, the factual questions asked for explicit text information. Hence, participants with a higher compared with a lower WMC could retain more of the facts which they believed to have gathered when making the prediction judgment and use them to answer the questions. The bias-reducing effect of a higher WMC was mainly driven by a higher performance, but also by slightly (though statistically nonsignificant) higher predictions. Hence, the deviation between prediction judgments and performance was smaller for higher compared to lower WMC. However, for the inference questions, a better memorization of facts due to a higher WMC was not sufficient on its own as they asked for information that had to be inferred. For these questions, reading skill is more predictive. Likewise, the postdiction bias for both question types depended on reading skill rather than WMC alone. The absent effect of WMC on the postdiction bias measures is in line with Komori’s (2016) findings on item-specific postdictions for a word recall task.

Over and above gender and the cognitive factors, a higher self-perceived topic knowledge contributed to overestimations for the predictions of factual questions, while a lower level was related to rather accurate judgments. This effect occurred because a higher compared to a lower self-perceived topic knowledge resulted in slightly higher judgments and considerably lower performance. As the postdiction judgments decreased, participants with a higher self-perceived topic knowledge were rather accurate while those with a lower level were underconfident. These findings correspond with prior research: A higher domain/topic familiarity gives learners a heightened sense of confidence because familiarity concerns the knowledge about a general topic (which is vague), while test questions address a certain text (Lin & Zabrucky, 1998). Our finding of the positive relationship between familiarity and judgment magnitude is in line with previous studies (Ehrlinger & Dunning, 2003, Exp. 3; Glenberg et al., 1987, Exp. 3 + 4; Griffin et al., 2009). It differs, however, with respect to performance: In these previous studies, higher familiarity did not result in lower performance, but in higher performance or no differences at all. It can be speculated that the self-perceived knowledge was generally very low in the present study. Hence, knowing that one has no or hardly any knowledge about a topic might be less harmful for text comprehension than believing that one has some knowledge about it.

Furthermore, a higher compared to a lower self-perceived metacomprehension ability contributed to stronger overestimations on the predictions of the factual questions due to higher judgments, yet equal performance. This finding indicates that learners have an unrealistically high self-perception of their monitoring ability or they have underdeveloped beliefs about what accurate monitoring entails. This interpretation is supported by the fact that self-perceived metacomprehension ability was statistically unrelated to actual reading skill. When making postdictions for the factual questions, the participants with a higher self-perceived metacomprehension ability were rather accurate while the self-described lower-ability participants were underconfident. This might again be simply a by-product of the general tendency to lower one’s postdiction judgments. For the inference questions, the self-perceived metacomprehension ability was ultimately not predictive of the bias, although the bivariate correlations suggested that it would be. Instead, the self-reported amount of general monitoring strategies and knowledge, which seems to be more specific to inference questions than metacomprehension ability in general, became relevant. Interestingly, in Schraw and Dennison’s (1994) study, a higher self-perceived metacomprehension ability was also associated with higher judgments (in their case, item-specific postdictions), but with an even higher performance on text comprehension questions as well. This resulted in stronger overestimations for lower self-perceived metacomprehension ability in their study. Differences in text materials might explain these contradictory findings for performance, yet due to missing details in the description of their materials, this remains an open question.

With respect to the question of which learner characteristics impacted judgment bias for the inference questions, reading skill was already discussed as one source of overestimation. Moreover, a higher self-reported amount of general monitoring strategies and knowledge was associated with stronger overestimations in both prediction and postdiction judgments for these questions, as the self-ascribed more knowledgeable learners tended toward higher judgments and lower performance. Since Schraw (1997) found a reverse effect (lower knowledge, more underestimation), this pattern suggests that, in the present study, the questionnaire assessed an inflated sense of monitoring skills and knowledge, which is a common threat in assessing strategy knowledge (cf. Veenman, 2016). Its medium correlation with self-perceived metacomprehension ability, which contributed to overestimations of the factual questions, supports this explanation. Hence, future research should consider more objective (e.g., behavioral) measures of monitoring knowledge and strategies.

Furthermore, we found for the inference questions that a higher self-efficacy for text comprehension resulted in higher performance and even more so in higher judgments. Consequently, higher self-efficacy contributed to stronger overestimations for predictions and postdictions than lower self-efficacy, which is in line with our expectation and prior research (Bouffard-Bouchard, 1990). This bias-inducing effect might seem counter-intuitive given that a higher self-efficacy promotes performance (Zimmerman & Moylan, 2009) and should, hence, decrease overestimations. However, as Zimmerman and Moylan state, self-efficacy as a learner’s beliefs about how capable they are to perform a certain task implies performance judgments and their accuracy. Given that learners usually tend toward overestimation (e.g., Lin & Zabrucky, 1998), (higher) self-efficacy beliefs might in turn be generally overoptimistic. Since overestimations are, however, likely to produce underachievement in later testing situations (Dunlosky & Rawson, 2012), future research should disentangle the pros and cons of a higher self-efficacy in the various phases of self-regulated learning.

Moreover, learners with a higher openness arrived at higher predictions, yet lower performance on inference questions, hence stronger overestimation, than those with lower openness. This finding corresponds to Agler et al.’s (2019) finding on relative metacomprehension accuracy, although in their study the effect disappeared when all Big-Five facets were considered concurrently. It might be speculated that openness is particularly predictive for bias of higher-order comprehension questions such as inference questions (Agler et al. did not differentiate between factual/inference questions): Inference questions address higher-order processes (e.g., causal relations between/across sentences) and are, therefore, usually more complex and difficult than factual questions. Correspondingly, learners in the present study gave lower judgments and performed more poorly on the inference questions. The complexity and difficulty of these questions might heighten learners’ uncertainty about their performance on them. In this unclear situation, participants with a higher openness, who have also been found to experience less self-doubt (Buratti et al., 2013), showed more overestimation for predictions (not postdictions) than less open participants.

In sum, learners with a low reading skill and, when controlled for cognitive capabilities, higher self-perceptions/beliefs about their topic knowledge and capabilities in understanding (self-efficacy) and monitoring texts (self-perceived metacomprehension ability) are particularly at risk of overestimating their comprehension of expository texts, especially when these risk factors coexist. A low WMC, a low topic knowledge, a higher openness, and being male can also contribute to overestimations, depending on the task. Thus, relatively few of the various learner characteristics that have been studied (mostly separately) in prior work emerged as significant predictors of judgment bias when considered simultaneously. This finding corresponds with Händel et al.’s (2020) study on judgment accuracy on knowledge tests.

Which Learner Characteristics Have No Substantial Effect on Judgment Bias?

Various learner characteristics turned out to be negligible for judgment bias of text comprehension. The absent effects of goal orientations, in particular the performance-approach and performance-avoidance goal orientation, contradict previous findings by Kroll and Ford (1992) and Zhou (2013). Our finding might, thus, suggest that previously found effects of goal orientation are rather attributable to other learner characteristics which are more closely related to text comprehension, for instance, self-efficacy.

The findings on the other learner characteristics that were nonsignificant for judgment bias are less surprising since evidence from prior research has been only weak or limited. This includes reasoning ability (cf. Ohtani & Hisasaka, 2018). Thus, the intellectual capability of drawing logical inferences per se does not help the accuracy of comprehension judgments, at least for the average or above-average levels of reasoning found among university students. Instead, reading skill as the ability underlying text comprehension is essential.

Furthermore, the self-perceived reading skill had no significant impact on judgment bias, although Kwon and Linderholm (2014) suggested so. We found, just as Kwon and Linderholm did, a moderate relationship between self-perceived and actual reading skill. Yet, the present findings suggest that self-efficacy as the task-specific beliefs about an upcoming text comprehension task and self-perceived metacomprehension ability are more relevant to judgment bias than the generalized beliefs about one’s reading skill.

Moreover, our findings showed that a learner’s tendency to present themselves in an overly positive light (as measured via overclaiming knowledge) is irrelevant or, compared to other learner characteristics, negligible for bias. This finding underlines the accumulated evidence that poor metacomprehension accuracy mainly results from a poor choice of judgment cues (cf. Thiede et al., 2009), not from self-elevation, at least in low-stakes test situations.

In addition, we found that dispositional optimism as the belief in positive outcomes in life did not significantly help to explain poor metacomprehension accuracy. This finding corresponds with previous research that found no reliable relationship between bias measures and optimism (de Bruin et al., 2017; Händel et al., 2020 regarding the psychology test), except for Händel et al.’s study on a math test. Thus, learners in general are not strongly inclined to be misled by their optimistic disposition.

With regard to the Big-Five personality facets, we found that, beyond openness, the other examined facets (i.e., extraversion, neuroticism, and conscientiousness) had no substantial impact on the judgment bias of text comprehension. This finding mainly replicates previous studies that analyzed personality facets simultaneously and found either no effect (Agler et al., 2019; Händel et al., 2020 for math test) or an effect of a single facet (extraversion in Schaefer et al., 2004; conscientiousness in Händel et al., 2020 for psychology test). Our finding also does not conflict with previous studies (e.g., Buratti et al., 2013; Dahl et al., 2010; Pallier et al., 2002) that reported an effect of one or another Big-Five facet on bias but relied on bivariate correlations only. Thus, the impact of personality facets on judgment bias (for text comprehension) seems to be weak. However, it is conceivable that the impact of personality facets (e.g., neuroticism) depends on affordances of the learning situation (e.g., high-/low-stakes tests, text difficulty, domain).

Theoretical and Practical Implications

The cue-utilization framework (Griffin et al., 2009; Koriat, 1997) acknowledges a potential impact of learner characteristics on accuracy. Yet, given the empirical evidence, it needs to be extended with explicit assumptions about which learner characteristics impact accuracy. These should include the role of cognitive, motivational, and personality factors and whether they impact the emergence of representation-based cues, act as heuristic cues, or function as a bias-inducing cognitive style. Since task requirements such as task complexity (e.g., factual vs. inference questions) seem to trigger the influence of different learner characteristics, the theoretical framework should also elaborate on the conditions under which learner characteristics influence accuracy.

Most of the learner characteristics we found to contribute to overestimations are modifiable through instruction or training. These include reading skill (also beliefs about what different levels of text understanding mean) and self-perceptions of one’s abilities or knowledge. Such instructional support is naturally part of the education of younger students, but, as the present study showed, university students would also benefit from it. Learner characteristics are, however, not the only influence on poor metacomprehension accuracy. Text and task characteristics and unsystematic influences (such as guessing) are also relevant. Hence, students should be provided with interventions such as delayed keyword generation or summarization that have been shown to be effective for de-biasing metacognitive judgments (e.g., Prinz et al., 2020b; Thiede et al., 2009).

Limitations and Future Research

As metacomprehension accuracy depends on the learner, the text, and the test material, the present findings might not generalize to other text genres or types of tasks. As discussed, the type of task (factual vs. inference questions) was relevant in the present study, and future research might focus on other material as well. Moreover, the present findings might not generalize to samples with, for example, clinically relevant high levels of neuroticism or narcissism, or to learners with severely low levels of cognitive capacities or self-perceptions.