Abstract

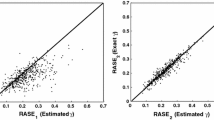

In this paper, we study a constrained estimation problem in a multivariate measurement error regression model. In particular, we derive the joint asymptotic normality of the unrestricted estimator (UE) and the restricted estimators (REs) of the matrix of regression coefficients. The derived result holds under the hypothesized restriction as well as under a sequence of alternative restrictions. In addition, we derive the asymptotic distributional risk (ADR) of the UE and the REs and compare their relative performance. We establish that the REs perform better than the UE near the restriction, but worse than the UE far away from the restriction. Further, we explore by simulation the performance of the shrinkage estimators (SEs). The numerical findings corroborate the established theoretical results about the relative risk dominance between the REs and the UE. The findings also show that, near the restriction, the REs dominate the SEs, whereas far away from the restriction the REs perform poorly while the SEs always dominate the UE.

Change history

23 July 2022

A Correction to this paper has been published: https://doi.org/10.1007/s00362-022-01339-3

References

Ahmed SE, Hussein A, Nkurunziza S (2010) Robust inference strategy in the presence of measurements error. Stat Probab Lett 80:726–732

Bertsch W, Chang RC, Zlatkis A (1974) The determination of organic volatiles in air pollution studies: characterization of profiles. J Chromatogr Sci 12(4):175–182

Chen F, Nkurunziza S (2015) Optimal method in multiple regression with structural changes. Bernoulli 21(4):2217–2241

Chen F, Nkurunziza S (2016) A class of Stein-rules in multivariate regression model with structural changes. Scand J Stat 43:83–102

Chen F, Nkurunziza S (2017) On estimation of the change-points in multivariate regression models with structural changes. Commun Stat-Theory Methods 46(14):7157–7173

Dolby GR (1976) The ultrastructural relation: a synthesis of the functional and structural relations. Biometrika 63(1):39–50

Fuller WA (2009) Measurement error models. Wiley, New York

Izenman AJ (2008) Modern multivariate statistical techniques: regression, classification and manifold learning. Springer, New York

Jain K, Singh S, Sharma S (2011) Restricted estimation in multivariate measurement error regression model. J Multivar Anal 102(2):264–280

Mardia KV, Kent JT, Bibby JM (1980) Multivariate analysis, 1st edn. Academic press, New York

McArdle BH (1988) The structural relationship: regression in biology. Can J Zool 66(11):2329–2339

Nkurunziza S (2012) Constrained estimation and some useful results in several multivariate models. Stat Methodol 9(3):353–363

Nkurunziza S (2012) Shrinkage strategies in some multiple multi-factor dynamical systems. ESAIM: Probab Stat 16:139–150

Nkurunziza S, Yu Y (2017) On the joint asymptotic distribution of the restricted estimators in multivariate regression model. Technical report, arXiv:1706.06632

Saleh AKMdE (2006) Theory of preliminary test and Stein-type estimation with applications, vol 517. Wiley, New York

Stevens JP (2012) Applied multivariate statistics for the social sciences. Routledge, Milton Park

Yu Y (2016) Constrained estimation in multivariate measurement error models. M.Sc. Major Paper, Department of Mathematics and Statistics, University of Windsor

Acknowledgements

We would like to thank the referees and Associate Editor for helpful comments. Further, Dr. S. Nkurunziza would like to acknowledge the financial support received from the Natural Sciences and Engineering Research Council of Canada (NSERC).

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this article was revised: The sentence “From the above class of objective function, one obtains a class of restricted estimators \(\{{\tilde{B}}(\hat{{\varvec{\Sigma }}}): \hat{{\varvec{\Sigma }}} \in {\mathcal {P}mulations for the case whe}_{p\times p}\}\) which satisfies ...” was corrected to “From the above class of objective function, one obtains a class of restricted estimators \(\{{\tilde{B}}(\hat{{\varvec{\Sigma }}}): \hat{{\varvec{\Sigma }}} \in {\mathcal {P}}_{p\times p}\}\) which satisfies ...”.

Appendix: Some technical results

In this appendix, we give the technical results and proofs underlying the established results. The following lemmas are useful in establishing the asymptotic distributions. Let

Lemma A.1

Let \({\varvec{w}}_{1},{\varvec{w}}_{2},\dots ,{\varvec{w}}_{n}\) be iid \(p\)-column random vectors with \(\text {E}\left( {\varvec{w}}_{1}\right) ={\varvec{0}}\) and \({\text {Cov}}\left( {\varvec{w}}_{1}\right) ={\varvec{\Omega }}_{0}\), and let \(\left\{ {\varvec{U}}_{n}\right\} _{n=1}^{\infty }\) be a sequence of \(p_{0}\times p\) nonrandom matrices such that \(\displaystyle {\lim _{n\rightarrow \infty }}{\varvec{U}}_{n}\) exists and is nonzero. Then \(\displaystyle n^{-1/2}\sum _{i=1}^{n}{\varvec{U}}_{i}{\varvec{w}}_{i}\xrightarrow [n\rightarrow \infty ]{d}{\varvec{Z}}_{0}\sim {\mathcal {N}}_{p_{0}}\left( {{\varvec{0}}},{\varvec{\Omega }}\right) \), where \(\displaystyle {\varvec{\Omega }}=\lim _{n\rightarrow \infty }n^{-1}\sum _{i=1}^{n}{\varvec{U}}_{i}{\varvec{\Omega }}_{0}{\varvec{U}}^{\prime }_{i}\).

Proof

Let \({\varvec{\alpha }}\) be a nonzero \(p_{0}\)-column vector and let \(s_{n}^{2}{=}\displaystyle \sum _{i=1}^{n}{{\text {Cov}}}\left[ {\varvec{\alpha }}^{\prime }n^{-1/2}{\varvec{U}}_{i}{\varvec{w}}_{i}\right] \). This gives \(s_{n}^{2} =n^{-1}\displaystyle \sum _{i=1}^{n}{\varvec{\alpha }}^{\prime }{\varvec{U}}_{i}{\varvec{\Omega }}_{0}{\varvec{U}}^{\prime }_{i}{\varvec{\alpha }}\). By the Cesàro mean theorem, we conclude that \(\displaystyle {\lim _{n\rightarrow \infty }} {\text {Cov}}\left[ \left( n^{-1/2}\sum _{i=1}^{n}{\varvec{U}}_{i}{\varvec{w}}_{i}\right) \right] \) exists. Letting \({\varvec{\Omega }}\) denote this limit, we get \(\displaystyle {\lim _{n\rightarrow \infty }}s_{n}^{2}={\varvec{\alpha }}^{\prime }{\varvec{\Omega }}{\varvec{\alpha }}\) and

for all \({\varvec{\alpha }}\in {\mathbb {R}}^{p_{0}}\setminus \{0\}\). Hence, by the Lindeberg central limit theorem, we get \(\displaystyle s_{n}^{-1}n^{-1/2}{\varvec{\alpha }}^{\prime }\sum _{i=1}^{n}{\varvec{U}}_{i}{\varvec{w}}_{i}\xrightarrow [n\rightarrow \infty ]{d}Z_{0}^{*}\), where \(Z_{0}^{*}\sim {\mathcal {N}}\left( 0,\,1\right) \). Then, by Slutsky’s theorem, \(n^{-1/2}{\varvec{\alpha }}^{\prime }\displaystyle {\sum \nolimits _{i=1}^{n}}{\varvec{U}}_{i}{\varvec{w}}_{i}\xrightarrow [n\rightarrow \infty ]{d} \left( {\varvec{\alpha }}^{\prime }{\varvec{\Omega }}{\varvec{\alpha }}\right) ^{1/2}Z_{0}^{*}\). Note that \(\left( {\varvec{\alpha }}^{\prime }{\varvec{\Omega }}{\varvec{\alpha }}\right) ^{1/2}Z_{0}^{*}\sim {\mathcal {N}}\left( 0,{\varvec{\alpha }}^{\prime }{\varvec{\Omega }}{\varvec{\alpha }}\right) \), which is the distribution of \({\varvec{\alpha }}^{\prime }{\varvec{Z}}_{0}\), where \({\varvec{Z}}_{0}\sim {\mathcal {N}}_{p_{0}}\left( {\varvec{0}},{\varvec{\Omega }}\right) \). Since this holds for every nonzero \({\varvec{\alpha }}\), the Cramér–Wold device completes the proof. \(\square \)
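The statement of Lemma A.1 can be illustrated numerically. The following is a minimal Monte Carlo sketch, not part of the paper: the matrices `U_lim` and `Omega0`, the weight sequence \({\varvec{U}}_{i}=(1+1/i)\,{\varvec{U}}\) and the uniform distribution of the \({\varvec{w}}_{i}\) are all hypothetical choices made only for illustration. It compares the empirical covariance of \(n^{-1/2}\sum _{i}{\varvec{U}}_{i}{\varvec{w}}_{i}\) with the limit \({\varvec{U}}{\varvec{\Omega }}_{0}{\varvec{U}}^{\prime }\) that the Cesàro argument in the proof gives for this particular sequence.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical inputs (not from the paper): p = 3, p0 = 2.
U_lim = np.array([[1.0, 0.0, 1.0],
                  [0.0, 2.0, -1.0]])      # limit of the sequence U_n
Omega0 = np.array([[1.0, 0.3, 0.0],
                   [0.3, 1.0, 0.2],
                   [0.0, 0.2, 1.0]])      # Cov(w_1)
Omega = U_lim @ Omega0 @ U_lim.T          # limiting covariance for this sequence

n, reps = 1000, 3000
scale = 1.0 + 1.0 / np.arange(1, n + 1)   # U_i = scale_i * U_lim, so U_i -> U_lim
L = np.linalg.cholesky(Omega0)

draws = np.empty((reps, U_lim.shape[0]))
for r in range(reps):
    # iid, mean-zero, non-Gaussian w_i with covariance Omega0:
    # uniform(-sqrt(3), sqrt(3)) coordinates have unit variance.
    u = rng.uniform(-np.sqrt(3.0), np.sqrt(3.0), size=(n, 3))
    w = u @ L.T
    # n^{-1/2} * sum_i U_i w_i for this replication
    draws[r] = (scale[:, None] * (w @ U_lim.T)).sum(axis=0) / np.sqrt(n)

emp_cov = np.cov(draws, rowvar=False)     # empirical covariance over replications
print(np.round(emp_cov, 2))
print(Omega)
```

With these choices the empirical covariance should be close to `Omega`, up to Monte Carlo error and a small finite-\(n\) bias coming from the factor \((1+1/i)^{2}\).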

Note that, for the special case where \(p_{0}=p\) and \({\varvec{U}}_{i}={\varvec{I}}_{p_{0}}\), Lemma A.1 reduces to the classical multivariate central limit theorem. From Lemma A.1, we establish the following lemma, which is useful in establishing Theorem 3.2. Let \({\varvec{\Sigma }}_{E}\) be the variance-covariance matrix of each row of \({\varvec{E}}\), i.e., \({\varvec{\Sigma }}_{E}={\text {Cov}}\left( {\varvec{e}}_{(i)}\right) \) with \({\varvec{E}}=\left( {{\varvec{e}}}_{(1)},{{\varvec{e}}}_{(2)},\dots ,{{\varvec{e}}}_{(n)}\right) ^{\prime }\), let \({\varvec{\Lambda }}_{\psi }=\text {E}\left( ({{\varvec{\psi }}}_{(1)}{{\varvec{\psi }}}^{\prime }_{(1)})\otimes ({{\varvec{\psi }}}_{(1)} {{\varvec{\psi }}}^{\prime }_{(1)})\right) \) and let \({\varvec{\Lambda }}_{\delta }=\text {E}\left( ({{\varvec{\delta }}}_{(1)}{{\varvec{\delta }}}^{\prime }_{(1)})\otimes ({{\varvec{\delta }}}_{(1)}{{\varvec{\delta }}}^{\prime }_{(1)})\right) \).

Lemma A.2

Suppose that Assumption 1 holds. Then,

Proof

Parts (1) and (2): We have

\(n^{-\frac{1}{2}}\left( {\varvec{\Psi }}^{\prime }{\varvec{\Psi }}-n\sigma _\psi ^2{\varvec{I}}_{p}\right) = n^{-\frac{1}{2}}\sum \limits _{i=1}^n({{\varvec{\psi }}}_{(i)}{{\varvec{\psi }}^{\prime }}_{(i)} -\sigma _\psi ^2{\varvec{I}}_{p})\) with \(\text {E}\left( {{\varvec{\psi }}}_{(1)}{{\varvec{\psi }}}^{\prime }_{(1)}-\sigma _\psi ^2{\varvec{I}}_{p} \right) ={\varvec{0}}\) and

where \({\varvec{\Lambda }}_{\psi }=\text {E}\left( ({{\varvec{\psi }}}_{(1)}{{\varvec{\psi }}}^{\prime }_{(1)}) \otimes ({{\varvec{\psi }}}_{(1)}{{\varvec{\psi }}}^{\prime }_{(1)})\right) \). Then, Part (1) follows from Lemma A.1. The proof of Part (2) is similar.

Parts (3)–(5): We have \(n^{-\frac{1}{2}}{\varvec{M}}^{\prime }{\varvec{E}}=n^{-\frac{1}{2}}\sum \limits _{i=1}^n{{\varvec{m}}}_{(i)} {\varvec{e}}^{\prime }_{(i)}\) where \({\varvec{E}}=\left[ {{\varvec{e}}}_{(1)}, {{\varvec{e}}}_{(2)}, \cdots , {{\varvec{e}}}_{(n)}\right] ^{\prime }\) with \({{\varvec{e}}}_{(i)}=(\epsilon _{i1},\epsilon _{i2},\cdots ,\epsilon _{iq})^{\prime }\). Then, Part (3) follows by combining conditions \(({\mathcal {A}}_{1})\) and \(({\mathcal {A}}_{4})\) of Assumption 1 with Lemma A.1. Parts (4)–(5) are established in a similar way.

Parts (6)-(8): We have \(n^{-\frac{1}{2}}{\varvec{\Psi }}^{\prime }{\varvec{\Delta }}=n^{-\frac{1}{2}}\sum \limits _{i=1}^n{{\varvec{\psi }}}_{(i)}{{\varvec{\delta }}^{\prime }}_{(i)}\) where \({{\varvec{\psi }}}_{(1)}{{\varvec{\delta }}}^{\prime }_{(1)}\), \({{\varvec{\psi }}}_{(2)}{{\varvec{\delta }}}^{\prime }_{(2)}\), ...,\({{\varvec{\psi }}}_{(n)}{{\varvec{\delta }}}^{\prime }_{(n)}\) are iid with \(\text {E}\left( {{\varvec{\psi }}}_{(1)}{{\varvec{\delta }}}^{\prime }_{(1)}\right) ={\varvec{0}}\) and \({\text {Cov}}\left( {{\varvec{\psi }}}_{(1)}{{\varvec{\delta }}}^{\prime }_{(1)}\right) =\text {E}\left[ {\text {vec}}\left( {{\varvec{\psi }}}_{(1)}{{\varvec{\delta }}}^{\prime }_{(1)} \right) \left( {\text {vec}}\left( {{\varvec{\psi }}}_{(1)}{{\varvec{\delta }}}^{\prime }_{(1)}\right) \right) ^{\prime } \right] \). Then

Then, since \({{\varvec{\delta }}}_{(1)}\) and \({{\varvec{\psi }}}_{(1)}\) are independent, we get

and then, Part (6) follows from Lemma A.1. Parts (7) and (8) are established in a similar way. \(\square \)

Lemma A.3

Let \({\varvec{Y}}\) be a \(p\times q\) random matrix with \({\varvec{Y}}\sim \mathcal {MN}_{p\times q}({\varvec{0}}_{\scriptscriptstyle p\times q},{\varvec{\Lambda }})\), where \({\varvec{\Lambda }}\) is a \(pq\times pq\) matrix. For \(j=1,2, \dots , m\), let \(\kappa _{j}\) and \(\alpha _{j}\) be \(q\times q\) nonrandom matrices, let \(\iota _{j}\) and \(\beta _{j}\) be \(p\times p\) nonrandom matrices, and let \(\varrho _{j}\) be \(p\times q\) nonrandom matrices. Then

\( \left( \begin{array}{ccc} \iota _{1}{\varvec{Y}}\kappa _{1}+\beta _1{\varvec{Y}}\alpha _1+\varrho _1\\ \iota _{2}{\varvec{Y}}\kappa _{2}+\beta _2{\varvec{Y}}\alpha _2+\varrho _2\\ \vdots \\ \iota _{m}{\varvec{Y}}\kappa _{m}+\beta _m {\varvec{Y}}\alpha _m+\varrho _m \end{array} \right) \) \(\sim \mathcal {MN}_{mp\times q}\) \( \left( \left( \begin{array}{c} \varrho _1 \\ \varrho _2 \\ \vdots \\ \varrho _{m} \end{array} \right) , \left( \begin{array}{cccc} {\varvec{A}}_{11} &{} {\varvec{A}}_{12}&{}\cdots &{} {\varvec{A}}_{1m} \\ {\varvec{A}}_{21} &{} {\varvec{A}}_{22}&{} \cdots &{} {\varvec{A}}_{2m}\\ \vdots &{} \cdots &{} \cdots &{} \vdots \\ {\varvec{A}}_{m1} &{} {\varvec{A}}_{m2}&{} \cdots &{} {\varvec{A}}_{mm} \end{array} \right) \right) ,\) where \( \quad { } {\varvec{A}}_{ji}=({\varvec{A}}_{ij})^{\prime }, i,j=1,2,\dots ,m,\) and

Proof

We have \({\text {vec}} \left( \left( \begin{array}{ccc} \iota _{1}{\varvec{Y}}\kappa _{1}+\beta _1{\varvec{Y}}\alpha _1+\varrho _1\\ \iota _{2}{\varvec{Y}}\kappa _{2}+\beta _2{\varvec{Y}}\alpha _2+\varrho _2\\ \vdots \\ \iota _{m}{\varvec{Y}}\kappa _{m}+\beta _m {\varvec{Y}}\alpha _m+\varrho _m \end{array} \right) \right) {=} \left( \begin{array}{c} \kappa ^{\prime }_{1}\otimes \iota _{1}+\alpha ^{\prime }_1\otimes \beta _1\\ \kappa ^{\prime }_{2}\otimes \iota _{2}+\alpha ^{\prime }_2\otimes \beta _2\\ \vdots \\ \kappa ^{\prime }_{m}\otimes \iota _{m}+\alpha ^{\prime }_m\otimes \beta _m \end{array} \right) {\text {vec}}({\varvec{Y}}) +\left( \begin{array}{c} {\text {vec}}(\varrho _1)\\ {\text {vec}}(\varrho _2)\\ \vdots \\ {\text {vec}}(\varrho _m) \end{array} \right) \). The rest of the proof then follows from the properties of normal random vectors along with some algebraic computations. \(\square \)
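The vectorization step used in this proof rests on the standard identity \({\text {vec}}({\varvec{A}}{\varvec{X}}{\varvec{C}})=({\varvec{C}}^{\prime }\otimes {\varvec{A}}){\text {vec}}({\varvec{X}})\) for the column-stacking vec operator. The short sketch below checks it numerically for a single block \(\iota {\varvec{Y}}\kappa +\beta {\varvec{Y}}\alpha +\varrho \). All matrices are randomly generated placeholders, and the helper `vec` is introduced here only for illustration.

```python
import numpy as np

def vec(A):
    """Stack the columns of A into one long vector (column-major order)."""
    return A.reshape(-1, order="F")

rng = np.random.default_rng(0)
p, q = 3, 2

# Random placeholders with the dimensions required by Lemma A.3.
Y = rng.normal(size=(p, q))
iota, beta = rng.normal(size=(p, p)), rng.normal(size=(p, p))
kappa, alpha = rng.normal(size=(q, q)), rng.normal(size=(q, q))
rho = rng.normal(size=(p, q))

# Left-hand side: vec of one block of the stacked matrix.
lhs = vec(iota @ Y @ kappa + beta @ Y @ alpha + rho)

# Right-hand side: Kronecker form acting on vec(Y).
T = np.kron(kappa.T, iota) + np.kron(alpha.T, beta)
rhs = T @ vec(Y) + vec(rho)

print(np.max(np.abs(lhs - rhs)))  # numerically zero
```

For one block, this linear representation is what allows the covariance to be read off: if \({\text {vec}}({\varvec{Y}})\) has covariance \({\varvec{\Lambda }}\), then \({\varvec{T}}{\text {vec}}({\varvec{Y}})\) has covariance \({\varvec{T}}{\varvec{\Lambda }}{\varvec{T}}^{\prime }\), by the usual rule for linear maps of random vectors.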

Note that this result is more general than Corollary A.2 in Chen and Nkurunziza (2016). By using this lemma, we establish the following lemma, which is more general than Proposition A.10 and Corollary A.2 in Chen and Nkurunziza (2016). The established lemma is particularly useful in deriving the joint asymptotic normality of \(\hat{{\varvec{B}}}_1\), \(\hat{{\varvec{B}}}_2\), \(\hat{{\varvec{B}}}_3\) and \(\hat{{\varvec{B}}}_4\).

Lemma A.4

For \(j=1,2,\dots ,m\), let \(\{\kappa _{jn}\}_{n=1}^{\infty }\), \(\{\iota _{jn}\}_{n=1}^{\infty }\), \(\{\alpha _{jn}\}_{n=1}^{\infty }\), \(\{\beta _{jn}\}_{n=1}^{\infty }\) and \(\{\varrho _{jn}\}_{n=1}^{\infty }\) be sequences of random matrices such that \(\kappa _{jn}\xrightarrow [n\rightarrow \infty ]{P}\kappa _{j}\), \(\iota _{jn}\xrightarrow [n\rightarrow \infty ]{P}\iota _{j}\), \(\alpha _{jn}\xrightarrow [n\rightarrow \infty ]{P}\alpha _j\), \(\beta _{jn}\xrightarrow [n\rightarrow \infty ]{P}\beta _j\), \(\varrho _{jn}\xrightarrow [n\rightarrow \infty ]{P}\varrho _j\), where, for \(j=1,2,\dots , m\), \(\kappa _j\), \(\alpha _j\), \(\iota _j\), \(\beta _j\) and \(\varrho _j\) are non-random matrices as defined in Lemma A.3. If a sequence of \(p\times q\) random matrices \(\{{\varvec{Y}}_n\}_{n=1}^{\infty }\) is such that \({\varvec{Y}}_n\xrightarrow [n\rightarrow \infty ]{d}{\varvec{Y}}\sim \mathcal {MN}_{p\times q}({\varvec{0}}_{\scriptscriptstyle p\times q},{\varvec{\Lambda }})\), where \({\varvec{\Lambda }}\) is a \(pq\times pq\) matrix, then

\( \left( \begin{array}{ccc} \iota _{1n} {\varvec{Y}}_n\kappa _{1n}+\beta _{1n}{\varvec{Y}}_n\alpha _{1n}+\varrho _{1n}\\ \iota _{2n}{\varvec{Y}}_n\kappa _{2n}+\beta _{2n}{\varvec{Y}}_n\alpha _{2n}+\varrho _{2n}\\ \vdots \\ \iota _{mn}{\varvec{Y}}_n\kappa _{mn}+\beta _{mn}{\varvec{Y}}_n\alpha _{mn}+\varrho _{mn} \end{array} \right) \xrightarrow [n\rightarrow \infty ]{d} {\varvec{U}}\sim \mathcal {MN}_{mp\times q} \left( {\varvec{\varrho }},\,{\varvec{A}} \right) \)

with \({\varvec{\varrho }}= \left( \begin{array}{c} \varrho _1 \\ \varrho _2 \\ \vdots \\ \varrho _m \end{array} \right) ,\) \({\varvec{A}}= \left( \begin{array}{c c c c} {\varvec{A}}_{11} &{} {\varvec{A}}_{12}&{} \cdots &{} {\varvec{A}}_{1m}\\ {\varvec{A}}_{21} &{} {\varvec{A}}_{22}&{} \cdots &{} {\varvec{A}}_{2m}\\ \vdots &{} \cdots &{} \cdots &{} \vdots \\ {\varvec{A}}_{m1} &{} {\varvec{A}}_{m2}&{} \cdots &{} {\varvec{A}}_{mm} \end{array} \right) \),

where \({\varvec{A}}_{ij}\), \(i=1,2, \dots , m; j=1,2, \dots , m\) are as defined in Lemma A.3.

Proof

We have

where \({\text {vec}}({\varvec{Y}}_n)\xrightarrow [n\rightarrow \infty ]{d}{\text {vec}}({\varvec{Y}})\sim {\mathcal {N}}_{pq}(0,{\varvec{\Lambda }})\), \(\left( \begin{array}{c} {\text {vec}}(\varrho _{1n})\\ {\text {vec}}(\varrho _{2n})\\ \vdots \\ {\text {vec}}(\varrho _{mn}) \end{array} \right) \xrightarrow [n\rightarrow \infty ]{P}\left( \begin{array}{c} {\text {vec}}(\varrho _{1})\\ {\text {vec}}(\varrho _2)\\ \vdots \\ {\text {vec}}(\varrho _{m}) \end{array} \right) , \)

and \(\left( \begin{array}{c} \kappa ^{\prime }_{1n}\otimes \iota _{1n}+\alpha ^{\prime }_{1n}\otimes \beta _{1n}\\ \kappa ^{\prime }_{2n}\otimes \iota _{2n}+\alpha ^{\prime }_{2n}\otimes \beta _{2n}\\ \vdots \\ \kappa ^{\prime }_{mn}\otimes \iota _{mn}+\alpha ^{\prime }_{mn}\otimes \beta _{mn} \end{array} \right) \xrightarrow [n\rightarrow \infty ]{P} \left( \begin{array}{c} \kappa ^{\prime }_{1}\otimes \iota _{1}+\alpha ^{\prime }_1\otimes \beta _1\\ \kappa ^{\prime }_{2}\otimes \iota _{2}+\alpha ^{\prime }_2\otimes \beta _2\\ \vdots \\ \kappa ^{\prime }_{m}\otimes \iota _{m}+\alpha ^{\prime }_m\otimes \beta _m \end{array} \right) .\)

Then, by using Slutsky’s theorem, we have

and then

Then, the proof follows directly from Lemma A.3. \(\square \)

From this lemma, we establish the following corollary.

Corollary A.1

Suppose that the conditions of Lemma A.4 hold. Then \(({\varvec{Y}}^{\prime }_n,({\varvec{Y}}_n+\beta _{2n}{\varvec{Y}}_n\alpha _{2n}+\varrho _{2n})^{\prime })^{\prime }\xrightarrow [n\rightarrow \infty ]{d}({\varvec{Y}}^{\prime },({\varvec{Y}}+\beta _{2} {\varvec{Y}}\alpha _{2}+\varrho _{2})^{\prime })^{\prime }\), with

where \({\varvec{V}}_{11}={\varvec{\Lambda }}\); \({\varvec{V}}_{12}={\varvec{\Lambda }}+{\varvec{\Lambda }} (\alpha ^{\prime }_{2}\otimes \beta _{2})\); \({\varvec{V}}_{21}=({\varvec{V}}_{12})^{\prime }\);

\({\varvec{V}}_{22}=({\varvec{I}}_{pq}+\alpha ^{\prime }_{2}\otimes \beta _{2}){\varvec{\Lambda }}({\varvec{I}}_{pq}+\alpha ^{\prime }_{2}\otimes \beta _{2}).\)

The proof follows directly from Lemma A.4 by taking \(m=2\), \(\kappa _{jn}={\varvec{I}}_{q}\), \(\iota _{jn}={\varvec{I}}_{p}\), \(\alpha _{1n}={\varvec{0}}\), \(\beta _{1n}={\varvec{0}}\) and \(\varrho _{1n}={\varvec{0}}\).

Proof of Theorem 3.2

We have

with \({\varvec{G}}_{2n}({\hat{{\varvec{\Sigma }}}})=\hat{{\varvec{\Sigma }}}^{-1}{{\varvec{R}}^{\prime }_1}[{\varvec{R}}_{1} \hat{{\varvec{\Sigma }}}^{-1}{{\varvec{R}}^{\prime }_1}]^{-1}\) and \({\varvec{P}}_{n}=({{\varvec{R}}^{\prime }_{2}}{\varvec{R}}_{2})^{-1}{{\varvec{R}}^{\prime }_{2}}\). Then, since

\({\varvec{R}}_{1}{\varvec{B}}{\varvec{R}}_2={\varvec{\theta }}+{\varvec{\theta }}_{0}\big /\sqrt{n}\), this last relation gives

Hence,

Note that

with

Further, let \({\varvec{\beta }}_{2}={\varvec{G}}_2({\varvec{Q}}_{0}){\varvec{R}}_{1}\), let \({\varvec{\alpha }}_{2}={\varvec{R}}_{2}{\varvec{P}}\) and let \({\varvec{\varrho }}={\varvec{G}}_{2}({\varvec{Q}}_{0}){\varvec{\theta }}_{0}{\varvec{P}}\). By using Corollary A.1, we have

this completes the proof. \(\square \)

Proof of Theorem 3.1

The proof is similar to that of Theorem 3.2 by taking \({\varvec{\mu }}\left( {\varvec{Q}}_{0}\right) ={\varvec{0}}\). For the convenience of the reader, we also write the main steps here. We have

Hence,

Hence, by combining Corollary A.1 with relations (A.1) and (A.2), we get

where \({\varvec{\beta }}_{2}={\varvec{G}}_2({\varvec{Q}}_{0}){\varvec{R}}_{1}\), \({\varvec{\alpha }}_{2}={\varvec{R}}_{2}{\varvec{P}}\) and \({\varvec{\varrho }}={\varvec{0}}\). Then, we have

which is the desired result. \(\square \)

Proof of Theorem 3.3

From (2.7), (2.8) and (2.9), we have

with \({\varvec{G}}_{2n}{=}({\varvec{S}} {\varvec{K}}_{X})^{-1}{{\varvec{R}}^{\prime }_1}[{\varvec{R}}_{1}({\varvec{S}} {\varvec{K}}_{X})^{-1} {{\varvec{R}}^{\prime }_1}]^{-1}\), \({\varvec{S}}{=}{\varvec{X}}^{\prime }{\varvec{X}}\), \({\varvec{G}}_{3n}{=}{\varvec{S}}^{-1} {{\varvec{R}}^{\prime }_1}[{\varvec{R}}_{1}{\varvec{S}}^{-1}{{\varvec{R}}^{\prime }_1}]^{-1}\),

\({\varvec{G}}_{4n}={{\varvec{R}}^{\prime }_1}[{\varvec{R}}_{1}{{\varvec{R}}^{\prime }_1}]^{-1}\), \({\varvec{P}}_{n}=({\varvec{R}}_{2}^{\prime }{\varvec{R}}_{2})^{-1}{\varvec{R}}_{2}^{\prime }\). Then, since \({\varvec{R}}_{1}{\varvec{B}}{\varvec{R}}_2={\varvec{\theta }}+{\varvec{\theta }}_{0}/\sqrt{n}\), we have

Therefore,

with \(n^{\frac{1}{2}}(\hat{{\varvec{B}}}_1-{\varvec{B}}) \xrightarrow [n\rightarrow \infty ]{d}\eta _1\sim \mathcal {MN}_{p \times q}\left( {\varvec{O}}_{\scriptscriptstyle p\times q}, {\varvec{\Sigma }}_{11}\right) \),

\({\varvec{G}}_{2n} \xrightarrow [n\rightarrow \infty ]{P}{\varvec{G}}_{2}=({\varvec{\Sigma }} {\varvec{K}})^{-1}{\varvec{R}}^{\prime }_1({\varvec{R}}_{1}({\varvec{\Sigma }} {\varvec{K}})^{-1}{\varvec{R}}^{\prime }_1)^{-1}\), \({\varvec{G}}_{3n} \xrightarrow [n\rightarrow \infty ]{P}{\varvec{G}}_{3}={\varvec{\Sigma }}^{-1} {\varvec{R}}^{\prime }_1({\varvec{R}}_{1} {\varvec{\Sigma }}^{-1}{\varvec{R}}^{\prime }_1)^{-1}\), \({\varvec{G}}_{4n} \xrightarrow [n\rightarrow \infty ]{P}{\varvec{G}}_{4}={\varvec{R}}^{\prime }_1({\varvec{R}}_{1} {\varvec{R}}^{\prime }_1)^{-1}\), \({\varvec{P}}_{n} \xrightarrow [n\rightarrow \infty ]{P}{\varvec{P}}=({\varvec{R}}_{2}^{\prime }{\varvec{R}}_{2})^{-1} {\varvec{R}}_{2}^{\prime }\). Therefore, by using Lemma A.4, we get the statement of the theorem. \(\square \)

Proof of Theorem 3.4

The first statement follows from Theorem 3.2. Further, we have,

This gives

Further, one can verify that \({\text {vec}}({\varvec{J}}_1({\varvec{Q}}_{0})\otimes {\varvec{J}})={\text {vec}}(({\varvec{\Sigma }} {\varvec{K}})^{-1}\otimes {\varvec{I}}_q)\). Then, the rest of the proof follows from some algebraic computations. \(\square \)

Proof of Theorem 3.5

From Theorem 3.4, we have

Note that \(f_1({\varvec{Q}}_{0})\ge 0\) and, if \(f_1({\varvec{Q}}_{0})=0\), then ADR\((\tilde{{\varvec{B}}}(\hat{{\varvec{\Sigma }}}), {\varvec{B}}; {\varvec{W}})>\)ADR\((\hat{{\varvec{B}}}_1, {\varvec{B}};{\varvec{W}})\) provided that \(({\text {vec}}({\varvec{\theta }}_0))^{\prime }{\varvec{F}}_1({\varvec{Q}}_{0}){\text {vec}} ({\varvec{\theta }}_0)>0\). Thus, we only consider the case where \(f_1({\varvec{Q}}_{0})>0\). From (A.3), ADR\((\tilde{{\varvec{B}}}(\hat{{\varvec{\Sigma }}}), {\varvec{B}}; {\varvec{W}})>\)ADR\((\hat{{\varvec{B}}}_1, {\varvec{B}};{\varvec{W}})\) if and only if

\(-f_1({\varvec{Q}}_{0})+({\text {vec}}({\varvec{\theta }}_0))^{\prime }{\varvec{F}}_1({\varvec{Q}}_{0}) {\text {vec}}({\varvec{\theta }}_0)>0\). If \(f_1({\varvec{Q}}_{0})<({\text {vec}}({\varvec{\theta }}_0))^{\prime }{\varvec{F}}_1({\varvec{Q}}_{0}) {\text {vec}}({\varvec{\theta }}_0),\) then

Further, since \(f_1({\varvec{Q}}_{0})>0\), we have

Further, by the Courant theorem, we have

Therefore, for the inequality in (A.4) to hold, it suffices to have

That is, if \(||{\varvec{\theta }}_0||^2>\displaystyle \frac{f_1({\varvec{Q}}_{0})}{\text {ch}_{min}({\varvec{F}}_1 ({\varvec{Q}}_{0}))}\), then \(\text {ADR}(\tilde{{\varvec{B}}}(\hat{{\varvec{\Sigma }}}),{\varvec{B}};{\varvec{W}})>\text {ADR}(\hat{{\varvec{B}}}_1, {\varvec{B}};{\varvec{W}}).\) Further, if \(f_1({\varvec{Q}}_{0})>({\text {vec}}({\varvec{\theta }}_0))^{\prime }{\varvec{F}}_1({\varvec{Q}}_{0}) {\text {vec}}({\varvec{\theta }}_0)\), then, by using (A.5), we obtain that if \(||{\varvec{\theta }}_0||^2<\displaystyle \frac{f_1({\varvec{Q}}_{0})}{\text {ch}_{max}({\varvec{F}}_1 ({\varvec{Q}}_{0}))}\), then \(\text {ADR}(\tilde{{\varvec{B}}}(\hat{{\varvec{\Sigma }}}),{\varvec{B}};{\varvec{W}})<\text {ADR} (\hat{{\varvec{B}}}_1,{\varvec{B}};{\varvec{W}}),\) as stated. \(\square \)
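The two sufficient conditions in this proof follow from bounding the quadratic form \(({\text {vec}}({\varvec{\theta }}_0))^{\prime }{\varvec{F}}_1({\varvec{Q}}_{0}){\text {vec}}({\varvec{\theta }}_0)\) between \(\text {ch}_{min}\) and \(\text {ch}_{max}\) times \(||{\varvec{\theta }}_0||^2\). The sketch below checks both thresholds numerically. The matrix `F` and the scalar `f` are hypothetical stand-ins for \({\varvec{F}}_1({\varvec{Q}}_{0})\) and \(f_1({\varvec{Q}}_{0})\), and `theta` plays the role of \({\text {vec}}({\varvec{\theta }}_0)\), so that \(||{\varvec{\theta }}_0||^2\) is the squared Euclidean norm of that vector.

```python
import numpy as np

rng = np.random.default_rng(1)
k = 4

# Hypothetical stand-ins (not from the paper).
A = rng.normal(size=(k, k))
F = A @ A.T + 0.5 * np.eye(k)    # symmetric positive definite, plays F_1(Q_0)
f = 2.0                          # positive scalar, plays f_1(Q_0)

eig = np.linalg.eigvalsh(F)      # eigenvalues in ascending order
ch_min, ch_max = eig[0], eig[-1]

def risk_gap(theta):
    """-f + theta' F theta; positive means the RE has the larger ADR."""
    return -f + theta @ F @ theta

# Random unit directions, rescaled to sit just beyond each threshold.
dirs = rng.normal(size=(1000, k))
dirs /= np.linalg.norm(dirs, axis=1, keepdims=True)
far = dirs * np.sqrt(1.01 * f / ch_min)    # ||theta||^2 > f / ch_min
near = dirs * np.sqrt(0.99 * f / ch_max)   # ||theta||^2 < f / ch_max

far_gaps = np.array([risk_gap(t) for t in far])
near_gaps = np.array([risk_gap(t) for t in near])
print(far_gaps.min() > 0, near_gaps.max() < 0)
```

Between the two thresholds the sign of the gap depends on the direction of \({\varvec{\theta }}_0\), which is why the proof only gives sufficient conditions there.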

Proposition A.1

Under Assumption 1, we have

Proof

We have \({\varvec{M}}^{\prime }{\varvec{M}}=\displaystyle {\sum \nolimits _{i=1}^{n}}{{\varvec{m}}}_{(i)}{{\varvec{m}}}^{\prime }_{(i)}\). Then, from the condition \(({\mathcal {A}}_{5})\) and Cesàro’s mean theorem, we get \(\displaystyle {\lim \limits _{n\rightarrow \infty }}n^{-1}{\varvec{M}}^{\prime }{\varvec{M}}={\varvec{\sigma }}_{\scriptscriptstyle M}{\varvec{\sigma }}^{\prime }_{\scriptscriptstyle M}\). Further, from Lemma A.2, we have

and \(n^{-1/2}\left[ {\varvec{M}}^{\prime }{\varvec{\Psi }}\vdots {\varvec{M}}^{\prime }{\varvec{\Delta }} \vdots {\varvec{\Psi }}^{\prime }{\varvec{\Delta }} \right] =O_{p}(1)\). Then, together with Slutsky’s theorem, we get the stated results. \(\square \)

From Proposition A.1, we establish the following corollary, which plays an important role in deriving the joint asymptotic normality of the UE and the RE. In the sequel, let

\(K_{\scriptscriptstyle 0,n}=\left( n^{-1}{\varvec{M}}^{\prime }{\varvec{M}}+\sigma ^{2}_{\psi }{\varvec{I}}_{p}+\sigma ^{2}_{\delta }{\varvec{I}}_{p}\right) ^{-1} \left( n^{-1}{\varvec{M}}^{\prime }{\varvec{M}}+\sigma ^{2}_{\psi }{\varvec{I}}_{p}\right) \).

Corollary A.2

Suppose that Assumption 1 holds. Then, \(\hat{{\varvec{\Sigma }}}_X \xrightarrow [n\rightarrow \infty ]{P}{\varvec{\Sigma }}\), \(K_{\scriptscriptstyle 0,n}\xrightarrow [n\rightarrow \infty ]{P} {\varvec{K}},\)

\(\hat{{\varvec{\Sigma }}}_D \xrightarrow [n\rightarrow \infty ]{P} {\varvec{\sigma }}_{\scriptscriptstyle M}{\varvec{\sigma }}^{\prime }_{\scriptscriptstyle M}+\sigma _\psi ^2{\varvec{I}}_{p}\), \({\varvec{K}}_{X}\xrightarrow [n\rightarrow \infty ]{P} {\varvec{K}}\), \(n^{-1}({\varvec{X}}^{\prime }{\varvec{Z}})\xrightarrow [n\rightarrow \infty ]{P} \left( {\varvec{\sigma }}_{\scriptscriptstyle M}{\varvec{\sigma }}^{\prime }_{\scriptscriptstyle M}+\sigma _\psi ^2{\varvec{I}}_{p}\right) {\varvec{B}}\).

Proof

Since \({\varvec{X}}={\varvec{M}}+{\varvec{\Psi }}+{\varvec{\Delta }}\), then \(\hat{{\varvec{\Sigma }}}_X=n^{-1}({\varvec{M}}+{\varvec{\Psi }}+{\varvec{\Delta }})^{\prime }({\varvec{M}}+{\varvec{\Psi }} +{\varvec{\Delta }})\) and then

Then, by using Proposition A.1, we have \(n^{-1}\left( {\varvec{M}}^{\prime }{\varvec{\Psi }}+{\varvec{M}}^{\prime }{\varvec{\Delta }}+{\varvec{\Psi }}^{\prime }{\varvec{M}}+{\varvec{\Delta }}^{\prime }{\varvec{M}} +{\varvec{\Psi }}^{\prime }{\varvec{\Delta }}+{\varvec{\Delta }}^{\prime }{\varvec{\Psi }}\right) \xrightarrow [n\rightarrow \infty ]{P}{\varvec{0}}\),

\(K_{\scriptscriptstyle 0,n}\xrightarrow [n\rightarrow \infty ]{P} {\varvec{K}}\), and \(n^{-1}({\varvec{M}}^{\prime }{\varvec{M}}+{\varvec{\Psi }}^{\prime }{\varvec{\Psi }}+ {\varvec{\Delta }}^{\prime }{\varvec{\Delta }})\xrightarrow [n\rightarrow \infty ]{P} {\varvec{\sigma }}_{\scriptscriptstyle M}{\varvec{\sigma }}^{\prime }_{\scriptscriptstyle M}+\sigma _\psi ^2{\varvec{I}}_{p}+\sigma _\delta ^2{\varvec{I}}_{p}\). Hence,

\(\hat{{\varvec{\Sigma }}}_X\xrightarrow [n\rightarrow \infty ]{P}{\varvec{\sigma }}_{\scriptscriptstyle M}{\varvec{\sigma }}^{\prime }_{\scriptscriptstyle M}+\sigma _\psi ^2{\varvec{I}}_{p}+\sigma _\delta ^2{\varvec{I}}_{p}={\varvec{\Sigma }}\). Since \(\hat{{\varvec{\Sigma }}}_D=\hat{{\varvec{\Sigma }}}_X-{\hat{\sigma }}_\delta ^2{\varvec{I}}_{p}\), where \({\hat{\sigma }}_\delta ^2\) is a consistent estimator of \(\sigma _\delta ^2\), we have \(\hat{{\varvec{\Sigma }}}_D\xrightarrow [n\rightarrow \infty ]{P}{\varvec{\Sigma }} -\sigma _\delta ^2{\varvec{I}}_{p}={\varvec{\sigma }}_{\scriptscriptstyle M}{\varvec{\sigma }}^{\prime }_{\scriptscriptstyle M}+\sigma _\psi ^2{\varvec{I}}_{p}\). Thus,

\({\varvec{K}}_{X}=\hat{{\varvec{\Sigma }}}_X^{-1}\hat{{\varvec{\Sigma }}}_D\xrightarrow [n\rightarrow \infty ]{P} {\varvec{\Sigma }}^{-1}\left( {\varvec{\sigma }}_{\scriptscriptstyle M}{\varvec{\sigma }}^{\prime }_{\scriptscriptstyle M}+\sigma _\psi ^2{\varvec{I}}_{p}\right) ={\varvec{K}}\). Further,

\(\square \)

By using Corollary A.2, we establish the following proposition, which shows that the UE is a consistent estimator.

Proposition A.2

If Assumption 1 holds, then \(\hat{{\varvec{B}}}_1 \xrightarrow [n\rightarrow \infty ]{P}{\varvec{B}}\).

Proof

We have \(\hat{{\varvec{B}}}_1 ={\varvec{K}}_{X}^{-1}\hat{{\varvec{\Sigma }}}_{X}^{-1} \left( \frac{{\varvec{X}}^{\prime }{\varvec{Z}}}{n}\right) \). Further, from Corollary A.2,

This proves that \(\hat{{\varvec{B}}}_1 \xrightarrow [n\rightarrow \infty ]{P}{\varvec{B}}\), as desired. \(\square \)
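The consistency of the UE can be illustrated by a small simulation. This is a rough sketch under assumptions that are not stated in this appendix: we assume the model \({\varvec{Z}}=({\varvec{M}}+{\varvec{\Psi }}){\varvec{B}}+{\varvec{E}}\) with \({\varvec{X}}={\varvec{M}}+{\varvec{\Psi }}+{\varvec{\Delta }}\), Gaussian errors, a known \(\sigma _\delta ^2\), and rows \({{\varvec{m}}}_{(i)}\) all equal to a fixed vector, so that \(n^{-1}{\varvec{M}}^{\prime }{\varvec{M}}={\varvec{\sigma }}_{\scriptscriptstyle M}{\varvec{\sigma }}^{\prime }_{\scriptscriptstyle M}\) exactly. The function name `simulate_ue` and all parameter values are hypothetical. The UE is computed as \(\hat{{\varvec{\Sigma }}}_D^{-1}({\varvec{X}}^{\prime }{\varvec{Z}}/n)\), which equals \({\varvec{K}}_{X}^{-1}\hat{{\varvec{\Sigma }}}_{X}^{-1}({\varvec{X}}^{\prime }{\varvec{Z}}/n)\) because \({\varvec{K}}_{X}=\hat{{\varvec{\Sigma }}}_X^{-1}\hat{{\varvec{\Sigma }}}_D\).

```python
import numpy as np

def simulate_ue(n, B, sigma_M, s_psi, s_delta, rng):
    """Return (UE, naive least squares) for one simulated data set.

    Assumed model (an illustration, not the paper's exact setup):
    Z = (M + Psi) B + E and X = M + Psi + Delta, with s_delta known.
    """
    p, q = B.shape
    M = np.tile(sigma_M, (n, 1))                 # every row m_(i) equals sigma_M
    Psi = s_psi * rng.normal(size=(n, p))        # Cov(psi_(i)) = s_psi^2 I_p
    Delta = s_delta * rng.normal(size=(n, p))    # measurement error in X
    E = rng.normal(size=(n, q))                  # regression error
    Z = (M + Psi) @ B + E
    X = M + Psi + Delta

    Sigma_X = X.T @ X / n
    Sigma_D = Sigma_X - s_delta**2 * np.eye(p)   # corrected moment matrix
    XZ = X.T @ Z / n
    B_ue = np.linalg.solve(Sigma_D, XZ)          # = K_X^{-1} Sigma_X^{-1} X'Z/n
    B_naive = np.linalg.solve(Sigma_X, XZ)       # ignores the measurement error
    return B_ue, B_naive

rng = np.random.default_rng(2)
B = np.array([[1.0, 2.0],
              [-1.0, 0.5]])
B_ue, B_naive = simulate_ue(200_000, B, np.array([1.0, -0.5]), 1.0, 0.8, rng)

err_ue = np.max(np.abs(B_ue - B))
err_naive = np.max(np.abs(B_naive - B))
print(err_ue, err_naive)
```

In this setup the naive least squares estimator converges to \({\varvec{K}}{\varvec{B}}\) rather than \({\varvec{B}}\), so its error does not vanish as \(n\) grows, whereas the error of the UE shrinks at the usual \(n^{-1/2}\) rate.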

By combining Corollary A.2, Lemma A.1 and Lemma A.2, we prove Theorem 2.1.

Proof of Theorem 2.1

We have

Hence \(n^{\frac{1}{2}}(\hat{{\varvec{B}}}_1-{\varvec{B}})=\hat{{\varvec{\Sigma }}}_D^{-1}[{\varvec{T}}_{n} +{\varvec{H}}_{n}{\varvec{K}}{\varvec{B}}]+{O_p}(n^{-\frac{1}{2}})\) and then,

Applying the vec operator to both sides, we obtain

Further, we have \({\text {vec}}({\varvec{T}}^{\prime }_{n}) =n^{-\frac{1}{2}}\sum \limits _{i=1}^n {\varvec{U}}_{1i}{\varvec{w}}_{1i}\) where

\({\varvec{U}}_{1i}= [{{\varvec{m}}}_{(i)}\otimes {{\varvec{I}}}_q,{{\varvec{I}}}_{pq},{{\varvec{I}}}_{pq},-{{\varvec{m}}}_{(i)}\otimes {\varvec{B}}^{\prime }, -{{\varvec{I}}}_p\otimes {\varvec{B}}^{\prime },-{{\varvec{I}}}_p\otimes {\varvec{B}}^{\prime }]\)

are \(qp\times (2qp+2p^2+p+q)\) non-random matrices and

are iid \((2qp+2p^2+p+q)\)-column random vectors. Similarly, one can verify that

\({\text {vec}}\left( {\varvec{B}}^{\prime }{{{\varvec{K}}}}{{\varvec{H}}}_{n}\right) =n^{-\frac{1}{2}}\displaystyle \sum \limits _{i=1}^n {\varvec{U}}_{2i}{\varvec{w}}_{2i}\) where

\({\varvec{U}}_{2i}= (I_p\otimes {\varvec{B}}^{\prime }{\varvec{K}}) \left[ ({{\varvec{I}}}_p\otimes {{\varvec{m}}}_{(i)}),({{\varvec{m}}}_{(i)}\otimes {{\varvec{I}}}_p), {{\varvec{I}}}_{p^2},{{\varvec{I}}}_{p^2},{{\varvec{I}}}_{p^2}\right] \)

are \(pq\times (3p^2+2p)\) non-random matrices and

are \((3p^2+2p)\)-column random vectors. Therefore, we have

\(\left[ {\text {vec}}({\varvec{T}}^{\prime }_{n})+{\text {vec}}\left( {\varvec{B}}^{\prime }{{{\varvec{K}}}}{{\varvec{H}}}_{n}\right) \right] =n^{-1/2}\sum \limits _{i=1}^n[{\varvec{U}}_{1i},\ {\varvec{U}}_{2i}][{\varvec{w}}^{\prime }_{1i},\ {\varvec{w}}^{\prime }_{2i}]^{\prime } =n^{-1/2}\sum \limits _{i=1}^n {\varvec{U}}_{i}{\varvec{w}}_{i}\).
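The dimensions claimed for \({\varvec{U}}_{1i}\) and \({\varvec{U}}_{2i}\) can be checked mechanically by assembling the block matrices from Kronecker products. In the sketch below, `m_i`, `B` and `K` are random placeholders with the right shapes (\(p\times 1\), \(p\times q\) and \(p\times p\)), and the values of \(p\) and \(q\) are arbitrary; only the shapes matter.

```python
import numpy as np

p, q = 3, 2
rng = np.random.default_rng(3)

# Random placeholders; only their shapes are relevant here.
m_i = rng.normal(size=(p, 1))    # m_(i) as a p-column vector
B = rng.normal(size=(p, q))      # p x q coefficient matrix
K = rng.normal(size=(p, p))      # p x p placeholder for K

I = np.eye

# U_1i = [m ⊗ I_q, I_pq, I_pq, -m ⊗ B', -I_p ⊗ B', -I_p ⊗ B']
U1 = np.hstack([
    np.kron(m_i, I(q)),
    I(p * q),
    I(p * q),
    -np.kron(m_i, B.T),
    -np.kron(I(p), B.T),
    -np.kron(I(p), B.T),
])

# U_2i = (I_p ⊗ B'K) [I_p ⊗ m, m ⊗ I_p, I_{p^2}, I_{p^2}, I_{p^2}]
U2 = np.kron(I(p), B.T @ K) @ np.hstack([
    np.kron(I(p), m_i),
    np.kron(m_i, I(p)),
    I(p * p),
    I(p * p),
    I(p * p),
])

U = np.hstack([U1, U2])          # U_i = [U_1i, U_2i]
print(U1.shape, U2.shape, U.shape)
```

Both blocks have \(pq\) rows, which is what makes the horizontal concatenation \([{\varvec{U}}_{1i},\ {\varvec{U}}_{2i}]\) well defined.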

We also have \(\mathrm {E}\left( {\text {vec}}({\varvec{T}}^{\prime }_{n})+{\text {vec}} \left( {\varvec{B}}^{\prime }{{{\varvec{K}}}}{{\varvec{H}}}_{n}\right) \right) =0\). Further, let

\({{\varvec{\Lambda }}} =\lim \limits _{n\rightarrow \infty }n^{-1}\mathrm {E}\left[ \left( \sum \limits _{i=1}^n {\varvec{U}}_{i}{\varvec{w}}_{i}\right) \left( \sum \limits _{i=1}^n {\varvec{U}}_{i}{\varvec{w}}_{i}\right) ^{\prime }\right] \).

Then, by Lemma A.1, we get

\(\left[ {\text {vec}}({\varvec{T}}^{\prime }_{n})+{\text {vec}}\left( {\varvec{B}}^{\prime }{{{\varvec{K}}}}{{\varvec{H}}}_{n}\right) \right] \xrightarrow [n\rightarrow \infty ]{d}{\varvec{\varsigma }}_{0} \sim {\mathcal {N}}_{pq}({{\varvec{0}}},{{\varvec{\Lambda }}})\).

Therefore, together with (A.6), Corollary A.2 and Slutsky’s theorem,

this completes the proof. \(\square \)

Proof of Proposition 2.1

From (2.3), adding and subtracting \({\varvec{X}}\hat{{\varvec{B}}}_{1}\), we get

Further

By combining the relations (A.7) and (A.8), we get

The stated result follows from the fact that \({\varvec{Z}}^{\prime }{\varvec{X}}-\hat{{\varvec{B}}}^{\prime }_{1}n\hat{{\varvec{\Sigma }}}_{X}{\varvec{K}}_{X}={\varvec{0}}\). \(\square \)

About this article

Cite this article

Nkurunziza, S. On efficiency of some restricted estimators in a multivariate regression model. Stat Papers 64, 617–642 (2023). https://doi.org/10.1007/s00362-022-01324-w

DOI: https://doi.org/10.1007/s00362-022-01324-w