Abstract

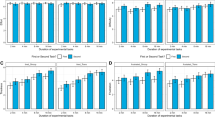

In three separate experiments, we examined the reliability of, and relationships between, self-report measures and behavioral response time measures of reward sensitivity. Using a rewarded Stroop task, we showed that reward-associated but task-irrelevant information interfered with task performance (MIRA) in all three experiments. However, individual differences in MIRA were unreliable both within-session and over a period of approximately 4 weeks, providing clear evidence that it is not a good individual differences measure. In contrast, when the task-relevant information was rewarded, individual differences in performance benefits were remarkably reliable, even when performance was examined one year later with a different version of the rewarded Stroop task. Despite the high reliability of the behavioral measure of reward responsiveness, it was not associated with self-reported reward responsiveness on validated questionnaires, but it was associated with greater self-reported self-control. Results are discussed in terms of what is actually being measured in the rewarded Stroop task.

Notes

Only two of the conditions are included in the calculation of MIRA, and the smaller number of trials per participant might have artificially reduced the reliability of MIRA relative to the reward responsiveness measure, which contains many more trials. To test this, separate correlations were conducted with a modified reward responsiveness measure that included average RTs from only two conditions: RTs on no-reward trials where the Stroop condition was incongruent and the word was not associated with reward, minus RTs on potential-reward trials where the Stroop condition was incongruent and the word was not associated with reward. This equated the number of trials in the reward responsiveness and MIRA measures. In all experiments, reward responsiveness reliabilities remained remarkably high and consistent despite the reduced number of trials. Therefore, the lower reliability of the MIRA measure relative to the reward responsiveness measure is not simply a result of the smaller number of trials per participant.
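The modified reward responsiveness score described in this note is a simple difference of two condition means, and a minimal sketch may make the computation concrete. The trial format, the field names (`rt`, `potential_reward`, `incongruent`, `word_reward_related`), the function names, and the example RTs below are all hypothetical and are not taken from the study's materials or analysis scripts.

```python
from statistics import mean


def condition_mean_rt(trials, potential_reward, incongruent, word_reward_related):
    """Mean RT (ms) across one participant's trials in a single design cell."""
    rts = [
        t["rt"]
        for t in trials
        if t["potential_reward"] == potential_reward
        and t["incongruent"] == incongruent
        and t["word_reward_related"] == word_reward_related
    ]
    if not rts:
        raise ValueError("no trials in the requested condition")
    return mean(rts)


def modified_reward_responsiveness(trials):
    """No-reward minus potential-reward mean RT, restricted to incongruent
    trials whose word is not associated with reward (two cells only)."""
    no_reward = condition_mean_rt(trials, False, True, False)
    potential_reward = condition_mean_rt(trials, True, True, False)
    return no_reward - potential_reward


# Hypothetical trials for one participant (RTs in ms, invented for illustration).
example_trials = [
    {"rt": 700, "potential_reward": False, "incongruent": True, "word_reward_related": False},
    {"rt": 720, "potential_reward": False, "incongruent": True, "word_reward_related": False},
    {"rt": 640, "potential_reward": True, "incongruent": True, "word_reward_related": False},
    {"rt": 660, "potential_reward": True, "incongruent": True, "word_reward_related": False},
    # Trials from other cells are ignored by the modified measure.
    {"rt": 500, "potential_reward": True, "incongruent": False, "word_reward_related": False},
    {"rt": 690, "potential_reward": False, "incongruent": True, "word_reward_related": True},
]

score = modified_reward_responsiveness(example_trials)  # 710 - 650 = 60 ms
print(score)
```

Under this sign convention, a positive score means the participant responded faster on potential-reward trials than on no-reward trials.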

One alternative to calculating difference scores is the residualized measure approach. To calculate the residualized MIRA measure, the same conditions were used: average RTs when the word was reward-unrelated were regressed on average RTs when the word was reward-related, and the standardized residuals were saved for further analyses. Correlations between the original and residualized MIRA measures were very high (r’s > .87), and reliabilities for the residualized MIRA measure remained poor in all experiments.
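As a rough illustration of the residualized approach, the sketch below fits an ordinary least-squares regression across participants and returns standardized residuals. It follows the regression direction stated in this note (reward-unrelated RTs regressed on reward-related RTs). Standardizing by the residual standard error with n - 2 degrees of freedom is an assumption, since the note does not say which convention was used. The function name and the example means are hypothetical.

```python
from math import sqrt
from statistics import mean


def standardized_residuals(outcome, predictor):
    """Regress outcome on predictor (simple OLS across participants) and
    return residuals divided by the residual standard error (df = n - 2).
    The choice of standardization is an assumption, not the authors' stated method."""
    n = len(outcome)
    if n != len(predictor) or n < 3:
        raise ValueError("need paired values for at least three participants")
    mx, my = mean(predictor), mean(outcome)
    sxx = sum((x - mx) ** 2 for x in predictor)
    if sxx == 0:
        raise ValueError("predictor has no variance")
    slope = sum((x - mx) * (y - my) for x, y in zip(predictor, outcome)) / sxx
    intercept = my - slope * mx
    residuals = [y - (intercept + slope * x) for x, y in zip(predictor, outcome)]
    std_error = sqrt(sum(r * r for r in residuals) / (n - 2))
    if std_error == 0:
        raise ValueError("perfect fit: residuals cannot be standardized")
    return [r / std_error for r in residuals]


# Hypothetical per-participant mean RTs (ms), invented for illustration.
rt_word_unrelated = [640.0, 702.0, 588.0, 731.0, 665.0, 610.0]
rt_word_related = [655.0, 720.0, 590.0, 760.0, 672.0, 640.0]

# One residualized score per participant: reward-unrelated RT not predicted
# by reward-related RT.
residualized_mira = standardized_residuals(rt_word_unrelated, rt_word_related)
print([round(z, 2) for z in residualized_mira])
```

Unlike a raw difference score, each residual is uncorrelated with the predictor by construction, which is the usual motivation for the residualized approach.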

Acknowledgements

The work was supported by a Canadian Graduate Scholarship from the Natural Sciences and Engineering Research Council of Canada (NSERC) to the first author, and by a grant from NSERC to the second author. We thank Carly Lundale, Daniella Zambito and Lauren Kremble for their assistance with data collection.

Funding

The Natural Sciences and Engineering Research Council (NSERC) provided Discovery grant funding to KA and scholarship funding to BP.

Ethics declarations

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Ethical approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Informed consent

Informed consent was obtained from all individual participants included in the study.

About this article

Cite this article

Pitchford, B., Arnell, K.M. Evaluating individual differences in rewarded Stroop performance: reliability and associations with self-report measures. Psychological Research 87, 686–703 (2023). https://doi.org/10.1007/s00426-022-01689-5

DOI: https://doi.org/10.1007/s00426-022-01689-5