Abstract

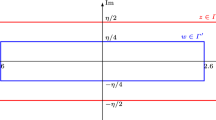

Graph matching aims at finding the vertex correspondence between two unlabeled graphs that maximizes the total edge weight correlation. This amounts to solving a computationally intractable quadratic assignment problem. In this paper, we propose a new spectral method, graph matching by pairwise eigen-alignments (GRAMPA). Departing from prior spectral approaches that only compare top eigenvectors, or eigenvectors of the same order, GRAMPA first constructs a similarity matrix as a weighted sum of outer products between all pairs of eigenvectors of the two graphs, with weights given by a Cauchy kernel applied to the separation of the corresponding eigenvalues, then outputs a matching by a simple rounding procedure. The similarity matrix can also be interpreted as the solution to a regularized quadratic programming relaxation of the quadratic assignment problem. For the Gaussian Wigner model in which two complete graphs on n vertices have Gaussian edge weights with correlation coefficient \(1-\sigma ^2\), we show that GRAMPA exactly recovers the correct vertex correspondence with high probability when \(\sigma = O(\frac{1}{\log n})\). This matches the state of the art of polynomial-time algorithms and significantly improves over existing spectral methods which require \(\sigma \) to be polynomially small in n. The superiority of GRAMPA is also demonstrated on a variety of synthetic and real datasets, in terms of both statistical accuracy and computational efficiency. Universality results, including similar guarantees for dense and sparse Erdős–Rényi graphs, are deferred to a companion paper.
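The construction described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation. We assume the similarity matrix has the form \(\widehat{X}=\sum_{i,j} w(\lambda_i,\mu_j)\,u_iu_i^\top J v_jv_j^\top\), with \(J\) the all-ones matrix and Cauchy weights \(w(x,y)=1/((x-y)^2+\eta^2)\). We also replace the rounding step with a hypothetical row-wise argmax, which returns a permutation only when the row maxima fall in distinct columns. The helper names (`grampa_similarity`, `round_rowwise`, `gaussian_wigner_pair`) and the additive-noise pair \(B=\Pi(A+\sigma Z)\Pi^\top\) are illustrative choices.

```python
import numpy as np


def grampa_similarity(A, B, eta):
    """Assumed form: sum_{i,j} w(lam_i, mu_j) u_i u_i^T J v_j v_j^T.

    Uses Cauchy weights w(x, y) = 1 / ((x - y)^2 + eta^2); J is the all-ones matrix.
    """
    lam, U = np.linalg.eigh(A)  # columns of U: eigenvectors u_i of A
    mu, V = np.linalg.eigh(B)   # columns of V: eigenvectors v_j of B
    W = 1.0 / ((lam[:, None] - mu[None, :]) ** 2 + eta ** 2)
    a = U.sum(axis=0)           # a_i = u_i^T 1
    b = V.sum(axis=0)           # b_j = v_j^T 1
    # u_i^T J v_j = a_i * b_j, so the sum over all n^2 pairs equals U (W * a b^T) V^T.
    return U @ (W * np.outer(a, b)) @ V.T


def round_rowwise(X):
    """Hypothetical simplified rounding: match row i to the column of its largest entry.

    Unlike a linear-assignment rounding, this need not return a permutation.
    """
    return X.argmax(axis=1)


def gaussian_wigner_pair(n, sigma, rng):
    """Illustrative correlated pair: B is a relabelled copy of A + sigma * Z."""
    def goe():
        G = rng.standard_normal((n, n))
        return (G + G.T) / np.sqrt(2 * n)  # off-diagonal entries have variance 1/n

    A = goe()
    perm = rng.permutation(n)
    B = (A + sigma * goe())[np.ix_(perm, perm)]  # B[p, q] = (A + sigma Z)[perm[p], perm[q]]
    truth = np.argsort(perm)  # vertex i of A corresponds to vertex truth[i] of B
    return A, B, truth


rng = np.random.default_rng(0)
A, B, truth = gaussian_wigner_pair(100, 0.01, rng)
X = grampa_similarity(A, B, eta=0.05)
match = round_rowwise(X)
print("fraction of vertices matched correctly:", np.mean(match == truth))
```

Because \(u_i^\top J v_j=(u_i^\top\mathbf{1})(\mathbf{1}^\top v_j)\), the double sum over all \(n^2\) eigenvector pairs reduces to two eigendecompositions and a few dense matrix products, so the cost is dominated by \(O(n^3)\) linear algebra.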


Notes

This is in fact not needed for computing the similarity matrix (3).

We implement the rank-2 version of LowRankAlign here because a higher rank does not appear to improve its performance in the experiments.

This experiment is not run on larger graphs because IsoRank and EigenAlign involve taking Kronecker products of graphs and are thus not as scalable as the other methods.

Since a preferential attachment graph is connected by convention, we may repeat this step until the new vertex is connected to at least one existing vertex.

References

Y. Aflalo, A. Bronstein, and R. Kimmel. On convex relaxation of graph isomorphism. Proceedings of the National Academy of Sciences, 112(10):2942–2947, 2015.

H. Almohamad and S. O. Duffuaa. A linear programming approach for the weighted graph matching problem. IEEE Transactions on Pattern Analysis and Machine Intelligence, 15(5):522–525, 1993.

G. W. Anderson, A. Guionnet, and O. Zeitouni. An introduction to random matrices, volume 118. Cambridge University Press, 2010.

L. Babai, D. Y. Grigoryev, and D. M. Mount. Isomorphism of graphs with bounded eigenvalue multiplicity. In Proceedings of the fourteenth annual ACM symposium on Theory of computing, pages 310–324. ACM, 1982.

A.-L. Barabási and R. Albert. Emergence of scaling in random networks. Science, 286(5439):509–512, 1999.

B. Barak, C.-N. Chou, Z. Lei, T. Schramm, and Y. Sheng. (Nearly) efficient algorithms for the graph matching problem on correlated random graphs. arXiv preprint arXiv:1805.02349, 2018.

M. Bayati, D. F. Gleich, A. Saberi, and Y. Wang. Message-passing algorithms for sparse network alignment. ACM Transactions on Knowledge Discovery from Data (TKDD), 7(1):1–31, 2013.

L. Benigni. Eigenvectors distribution and quantum unique ergodicity for deformed Wigner matrices. arXiv preprint arXiv:1711.07103, 2017.

B. Bollobás. Distinguishing vertices of random graphs. North-Holland Mathematics Studies, 62:33–49, 1982.

P. Bourgade and H.-T. Yau. The eigenvector moment flow and local quantum unique ergodicity. Communications in Mathematical Physics, 350(1):231–278, 2017.

R. E. Burkard, E. Cela, P. M. Pardalos, and L. S. Pitsoulis. The quadratic assignment problem. In Handbook of combinatorial optimization, pages 1713–1809. Springer, 1998.

S. Chatterjee. Superconcentration and related topics, volume 15. Springer, 2014.

D. Conte, P. Foggia, C. Sansone, and M. Vento. Thirty years of graph matching in pattern recognition. International Journal of Pattern Recognition and Artificial Intelligence, 18(03):265–298, 2004.

D. Cullina and N. Kiyavash. Improved achievability and converse bounds for Erdös-Rényi graph matching. In Proceedings of the 2016 ACM SIGMETRICS International Conference on Measurement and Modeling of Computer Science, pages 63–72. ACM, 2016.

D. Cullina and N. Kiyavash. Exact alignment recovery for correlated Erdös-Rényi graphs. arXiv preprint arXiv:1711.06783, 2017.

D. Cullina, N. Kiyavash, P. Mittal, and H. V. Poor. Partial recovery of Erdős-Rényi graph alignment via \( k \)-core alignment. arXiv preprint arXiv:1809.03553, Nov. 2018.

T. Czajka and G. Pandurangan. Improved random graph isomorphism. Journal of Discrete Algorithms, 6(1):85–92, 2008.

O. E. Dai, D. Cullina, and N. Kiyavash. Database alignment with Gaussian features. arXiv preprint arXiv:1903.01422, 2019.

O. E. Dai, D. Cullina, N. Kiyavash, and M. Grossglauser. On the performance of a canonical labeling for matching correlated Erdős-Rényi graphs. arXiv preprint arXiv:1804.09758, 2018.

J. Ding, Z. Ma, Y. Wu, and J. Xu. Efficient random graph matching via degree profiles. Probability Theory and Related Fields, pages 1–87, Sep 2020.

N. Dym, H. Maron, and Y. Lipman. DS++: a flexible, scalable and provably tight relaxation for matching problems. ACM Transactions on Graphics (TOG), 36(6):184, 2017.

F. Emmert-Streib, M. Dehmer, and Y. Shi. Fifty years of graph matching, network alignment and network comparison. Information Sciences, 346:180–197, 2016.

P. Erdös and A. Rényi. On the evolution of random graphs. Publ. Math. Inst. Hungar. Acad. Sci, 5:17–61, 1960.

Z. Fan, C. Mao, Y. Wu, and J. Xu. Spectral graph matching and regularized quadratic relaxations II: Erdős-Rényi graphs and universality. Foundations of Computational Mathematics, 2022. https://doi.org/10.1007/s10208-022-09575-7.

S. Feizi, G. Quon, M. Recamonde-Mendoza, M. Medard, M. Kellis, and A. Jadbabaie. Spectral alignment of graphs. IEEE Transactions on Network Science and Engineering, 7(3):1182–1197, 2019.

G. Finke, R. E. Burkard, and F. Rendl. Quadratic assignment problems. In North-Holland Mathematics Studies, volume 132, pages 61–82. Elsevier, 1987.

F. Fogel, R. Jenatton, F. Bach, and A. d’Aspremont. Convex relaxations for permutation problems. In Advances in Neural Information Processing Systems, pages 1016–1024, 2013.

L. Ganassali. Sharp threshold for alignment of graph databases with Gaussian weights. arXiv preprint arXiv:2010.16295, 2020.

L. Ganassali, M. Lelarge, and L. Massoulié. Spectral alignment of correlated Gaussian matrices. Advances in Applied Probability, pages 1–32.

L. Ganassali and L. Massoulié. From tree matching to sparse graph alignment. In Conference on Learning Theory, pages 1633–1665. PMLR, 2020.

L. Ganassali, L. Massoulié, and M. Lelarge. Impossibility of partial recovery in the graph alignment problem. In Conference on Learning Theory, pages 2080–2102. PMLR, 2021.

F. Götze and A. Tikhomirov. On the rate of convergence to the Marchenko–Pastur distribution. arXiv preprint arXiv:1110.1284, 2011.

F. Götze and A. Tikhomirov. On the rate of convergence to the semi-circular law. In High Dimensional Probability VI, pages 139–165, Basel, 2013. Springer Basel.

P. W. Holland, K. B. Laskey, and S. Leinhardt. Stochastic blockmodels: First steps. Social Networks, 5(2):109–137, 1983.

T. Kato. Continuity of the map \(s\mapsto |s|\) for linear operators. Proceedings of the Japan Academy, 49(3):157–160, 1973.

E. Kazemi and M. Grossglauser. On the structure and efficient computation of IsoRank node similarities. arXiv preprint arXiv:1602.00668, 2016.

E. Kazemi, H. Hassani, M. Grossglauser, and H. P. Modarres. PROPER: global protein interaction network alignment through percolation matching. BMC Bioinformatics, 17(1):527, 2016.

E. Kazemi, S. H. Hassani, and M. Grossglauser. Growing a graph matching from a handful of seeds. Proceedings of the VLDB Endowment, 8(10):1010–1021, 2015.

N. Korula and S. Lattanzi. An efficient reconciliation algorithm for social networks. Proceedings of the VLDB Endowment, 7(5):377–388, 2014.

H. W. Kuhn. The Hungarian method for the assignment problem. Naval Research Logistics Quarterly, 2(1-2):83–97, 1955.

J. Leskovec, J. Kleinberg, and C. Faloutsos. Graphs over time: densification laws, shrinking diameters and possible explanations. In Proceedings of the eleventh ACM SIGKDD international conference on Knowledge discovery in data mining, pages 177–187. ACM, 2005.

J. Leskovec and A. Krevl. SNAP Datasets: Stanford large network dataset collection. http://snap.stanford.edu/data, June 2014.

L. Livi and A. Rizzi. The graph matching problem. Pattern Analysis and Applications, 16(3):253–283, 2013.

J. Lubars and R. Srikant. Correcting the output of approximate graph matching algorithms. In IEEE INFOCOM 2018-IEEE Conference on Computer Communications, pages 1745–1753. IEEE, 2018.

V. Lyzinski, D. Fishkind, M. Fiori, J. Vogelstein, C. Priebe, and G. Sapiro. Graph matching: Relax at your own risk. IEEE Transactions on Pattern Analysis & Machine Intelligence, 38(1):60–73, 2016.

V. Lyzinski, D. E. Fishkind, and C. E. Priebe. Seeded graph matching for correlated Erdös-Rényi graphs. Journal of Machine Learning Research, 15(1):3513–3540, 2014.

K. Makarychev, R. Manokaran, and M. Sviridenko. Maximum quadratic assignment problem: Reduction from maximum label cover and LP-based approximation algorithm. Automata, Languages and Programming, pages 594–604, 2010.

C. Mao, M. Rudelson, and K. Tikhomirov. Exact matching of random graphs with constant correlation. arXiv preprint arXiv:2110.05000, 2021.

C. Mao, M. Rudelson, and K. Tikhomirov. Random graph matching with improved noise robustness. In Conference on Learning Theory, pages 3296–3329. PMLR, 2021.

E. Mossel and J. Xu. Seeded graph matching via large neighborhood statistics. In Proceedings of the Thirtieth Annual ACM-SIAM Symposium on Discrete Algorithms, pages 1005–1014. SIAM, 2019.

A. Narayanan and V. Shmatikov. Robust de-anonymization of large sparse datasets. In Security and Privacy, 2008. SP 2008. IEEE Symposium on, pages 111–125. IEEE, 2008.

A. Narayanan and V. Shmatikov. De-anonymizing social networks. In Security and Privacy, 2009 30th IEEE Symposium on, pages 173–187. IEEE, 2009.

P. M. Pardalos, F. Rendl, and H. Wolkowicz. The quadratic assignment problem: A survey and recent developments. In Proceedings of the DIMACS Workshop on Quadratic Assignment Problems, volume 16 of DIMACS Series in Discrete Mathematics and Theoretical Computer Science, pages 1–42. American Mathematical Society, 1994.

P. Pedarsani, D. R. Figueiredo, and M. Grossglauser. A bayesian method for matching two similar graphs without seeds. In 2013 51st Annual Allerton Conference on Communication, Control, and Computing (Allerton), pages 1598–1607. IEEE, 2013.

P. Pedarsani and M. Grossglauser. On the privacy of anonymized networks. In Proceedings of the 17th ACM SIGKDD international conference on Knowledge discovery and data mining, pages 1235–1243. ACM, 2011.

P. Pedarsani and M. Grossglauser. On the privacy of anonymized networks. In ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pages 1235–1243, 2011.

G. Piccioli, G. Semerjian, G. Sicuro, and L. Zdeborová. Aligning random graphs with a sub-tree similarity message-passing algorithm. arXiv preprint arXiv:2112.13079, 2021.

M. Racz and A. Sridhar. Correlated stochastic block models: Exact graph matching with applications to recovering communities. Advances in Neural Information Processing Systems, 34, 2021.

M. Rudelson and R. Vershynin. Hanson-Wright inequality and sub-Gaussian concentration. Electronic Communications in Probability, 18(82):1–9, 2013.

C. Schellewald and C. Schnörr. Probabilistic subgraph matching based on convex relaxation. In International Workshop on Energy Minimization Methods in Computer Vision and Pattern Recognition, pages 171–186. Springer, 2005.

W. Schudy and M. Sviridenko. Concentration and moment inequalities for polynomials of independent random variables. In Proceedings of the Twenty-Third Annual ACM-SIAM Symposium on Discrete Algorithms, pages 437–446. ACM, New York, 2012.

F. Shirani, S. Garg, and E. Erkip. Seeded graph matching: Efficient algorithms and theoretical guarantees. In 2017 51st Asilomar Conference on Signals, Systems, and Computers, pages 253–257. IEEE, 2017.

R. Singh, J. Xu, and B. Berger. Global alignment of multiple protein interaction networks with application to functional orthology detection. Proceedings of the National Academy of Sciences, 105(35):12763–12768, 2008.

S. Umeyama. An eigendecomposition approach to weighted graph matching problems. IEEE Transactions on Pattern Analysis and Machine Intelligence, 10(5):695–703, 1988.

University of Oregon Route Views Project. Autonomous Systems Peering Networks. Online data and reports, http://www.routeviews.org/.

R. Vershynin. High-Dimensional Probability: An Introduction with Applications in Data Science. Cambridge Series in Statistical and Probabilistic Mathematics. Cambridge University Press, 2018.

J. T. Vogelstein, J. M. Conroy, V. Lyzinski, L. J. Podrazik, S. G. Kratzer, E. T. Harley, D. E. Fishkind, R. J. Vogelstein, and C. E. Priebe. Fast approximate quadratic programming for graph matching. PLOS ONE, 10(4):e0121002, 2015.

Y. Wu, J. Xu, and S. H. Yu. Settling the sharp reconstruction thresholds of random graph matching. In 2021 IEEE International Symposium on Information Theory (ISIT), pages 2714–2719. IEEE, 2021.

L. Xu and I. King. A PCA approach for fast retrieval of structural patterns in attributed graphs. IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics), 31(5):812–817, 2001.

L. Yartseva and M. Grossglauser. On the performance of percolation graph matching. In Proceedings of the first ACM conference on Online social networks, pages 119–130. ACM, 2013.

M. Zaslavskiy, F. Bach, and J.-P. Vert. A path following algorithm for the graph matching problem. IEEE Transactions on Pattern Analysis and Machine Intelligence, 31(12):2227–2242, 2008.

Acknowledgements

Y. Wu and J. Xu are deeply indebted to Zongming Ma for many fruitful discussions on the QP relaxation (14) in the early stage of the project. Y. Wu and J. Xu thank Yuxin Chen for suggesting the gradient descent dynamics which led to the initial version of the proof. Y. Wu is grateful to Daniel Sussman for pointing out [45] and Joel Tropp for [1].

Additional information

Communicated by Francis Bach.

Z. Fan is supported in part by NSF Grant DMS-1916198. C. Mao is supported in part by NSF Grant DMS-2053333. Y. Wu is supported in part by the NSF Grants CCF-1527105, CCF-1900507, an NSF CAREER award CCF-1651588, and an Alfred Sloan fellowship. J. Xu is supported by the NSF Grants IIS-1838124, CCF-1850743, and CCF-1856424.

Appendices

Concentration Inequalities for Gaussians

We collect auxiliary results on concentration of polynomials of Gaussian variables.

Lemma 13

Let z be a standard Gaussian vector in \(\mathbb {R}^n\). For any fixed \(v \in \mathbb {R}^n\) and \(\delta > 0\), it holds with probability at least \(1 - \delta \) that
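A bound of the kind stated in Lemma 13 follows directly from the Gaussian tail, since \(v^\top z \sim N(0,\Vert v\Vert_2^2)\). One admissible version, given here only as an illustration (the constants in the lemma as stated may differ), is:

```latex
\mathbb{P}\big(|v^\top z| > t\big) \le 2\exp\!\Big(-\frac{t^2}{2\Vert v\Vert_2^2}\Big)
\quad\Longrightarrow\quad
|v^\top z| \le \Vert v\Vert_2\sqrt{2\log(2/\delta)}
\ \text{ with probability at least } 1-\delta,
```

obtained by choosing \(t=\Vert v\Vert_2\sqrt{2\log(2/\delta)}\), so that the right-hand side of the tail bound equals \(\delta\).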

Lemma 14

(Hanson–Wright inequality) Let z be a sub-Gaussian vector in \(\mathbb {R}^n\), and let M be a fixed matrix in \({\mathbb {C}}^{n \times n}\). Then, we have with probability at least \(1 - \delta \) that

where C is a universal constant and \(\Vert z\Vert _{\psi _2}\) is the sub-Gaussian norm of z.

See [59, Section 3.1] for the complex-valued version of the Hanson–Wright inequality. The following lemma is a direct consequence of (75), by taking M to be a diagonal matrix.
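To see how the diagonal case specializes, take \(M=\mathrm{diag}(v)\) with \(v\) entrywise nonnegative and z standard Gaussian. Assuming, as in the standard Hanson–Wright statement, that (75) controls the deviation of \(z^\top Mz\) through \(\Vert M\Vert_F\) and the operator norm \(\Vert M\Vert\), the relevant quantities are:

```latex
z^\top M z=\sum_{i=1}^n v_i z_i^2,\qquad
\mathbb{E}[z^\top M z]=\sum_{i=1}^n v_i=\Vert v\Vert_1,\qquad
\Vert M\Vert_F=\Vert v\Vert_2,\qquad
\Vert M\Vert=\Vert v\Vert_\infty .
```

For a standard Gaussian vector, \(\Vert z\Vert_{\psi_2}\) is bounded by a universal constant, so under this assumption the resulting bound on \(\sum_i v_i(z_i^2-1)\) depends on v only through \(\Vert v\Vert_2\) and \(\Vert v\Vert_\infty\).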

Lemma 15

Let z be a standard Gaussian vector in \(\mathbb {R}^n\). For an entrywise nonnegative vector \(v \in \mathbb {R}^n\), it holds with probability at least \(1 - \delta \) that

In particular, it holds with probability at least \(1 - \delta \) that

Theorem 4

(Hypercontractivity concentration [61, Theorem 1.9]) Let z be a standard Gaussian vector in \(\mathbb {R}^n\), and let \(f(z_1, \dots , z_n)\) be a degree-d polynomial of z. Then, it holds that

where \(\mathsf {Var}[ f(z) ]\) denotes the variance of f(z) and \(C > 0\) is a universal constant.
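For orientation, a commonly quoted form of this hypercontractivity bound, with the same universal constant C, reads as follows (the precise constants in [61] may differ):

```latex
\mathbb{P}\big(|f(z)-\mathbb{E}f(z)|\ge t\big)
\le e^{2}\exp\!\left(-\Big(\frac{t^2}{C\,\mathsf{Var}[f(z)]}\Big)^{1/d}\right),
\qquad t>0 .
```

For \(d=1\) this is a Gaussian tail in \(t/\sqrt{\mathsf{Var}[f(z)]}\); larger degrees d give heavier, \(\exp(-t^{2/d})\)-type, tails.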

Finally, the following result gives a concentration inequality for functions that are Lipschitz only on a subset of \({\mathbb {R}}^n\). It follows from the usual Gaussian concentration of measure combined with a Lipschitz extension argument.

Lemma 16

Let \(B\subset {\mathbb {R}}^n\) be an arbitrary measurable subset. Let \(F: {\mathbb {R}}^n \rightarrow {\mathbb {R}}\) be such that F is L-Lipschitz on B. Let \(X \sim N(0,{\mathbf {I}}_n)\). Then, for any \(t>0\),

where c is a universal constant, \(\epsilon = \mathbb {P}(X \notin B)\), and \(\delta = 2 \sqrt{\epsilon ( nL^2+F(0)^2 + \mathbb {E}[F(X)^2] )}\).

Proof

Let \(\widetilde{F}: {\mathbb {R}}^n\rightarrow {\mathbb {R}}\) be an L-Lipschitz extension of F, e.g., \(\widetilde{F}(x) = \inf _{y\in B} \{F(y)+L\Vert x-y\Vert \}\). Then, by the Gaussian concentration inequality (see, e.g., [66, Theorem 5.2.2]), we have

It remains to show that \(|\mathbb {E}F(X)-\mathbb {E}\widetilde{F}(X)| \le \delta \). Since F and \(\widetilde{F}\) agree on B, the Cauchy–Schwarz inequality gives \(|\mathbb {E}F(X)-\mathbb {E}\widetilde{F}(X)| \le \mathbb {E}\big [|F(X)-\widetilde{F}(X)|\,{\mathbf {1}}_{\{X \notin B\}}\big ] \le \sqrt{\epsilon \,\mathbb {E}[|F(X)-\widetilde{F}(X)|^2]}\). Finally, \(|\widetilde{F}(X)| \le |F(0)|+L\Vert X\Vert _2\) and \(\mathbb {E}\Vert X\Vert _2^2 =n\), so \(\mathbb {E}[|F(X)-\widetilde{F}(X)|^2] \le 2\mathbb {E}[F(X)^2]+4F(0)^2+4nL^2 \le 4( nL^2+F(0)^2 + \mathbb {E}[F(X)^2])\), which completes the proof. \(\square \)
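The extension \(\widetilde F(x)=\inf_{y\in B}\{F(y)+L\Vert x-y\Vert\}\) used in the proof is the McShane extension. The following is a small numerical sketch under illustrative assumptions: B is replaced by a finite sample of the unit ball in \(\mathbb{R}^3\), and \(F(x)=\Vert x\Vert_2^2\), which is 2-Lipschitz on the unit ball but not globally Lipschitz. The code checks that \(\widetilde F\) agrees with F on the sample and is L-Lipschitz at points far outside it. The names `F` and `mcshane_extension` and the sample sizes are hypothetical.

```python
import numpy as np


def F(x):
    # F(x) = ||x||_2^2 is 2-Lipschitz on the unit ball, but not on all of R^n.
    return np.sum(x ** 2, axis=-1)


def mcshane_extension(x, B_pts, L):
    """Evaluate min over y in B_pts of F(y) + L * ||x - y||.

    x has shape (m, n) and B_pts has shape (k, n); returns shape (m,).
    """
    dists = np.linalg.norm(x[:, None, :] - B_pts[None, :, :], axis=-1)  # (m, k)
    return np.min(F(B_pts)[None, :] + L * dists, axis=1)


rng = np.random.default_rng(1)
n, L = 3, 2.0
# Uniform sample from the unit ball (direction on the sphere, radius U^(1/n)).
g = rng.standard_normal((500, n))
B_pts = g / np.linalg.norm(g, axis=1, keepdims=True) * rng.random((500, 1)) ** (1 / n)

# 1) The extension agrees with F on the sampled set B.
on_B = mcshane_extension(B_pts, B_pts, L)
# 2) It is L-Lipschitz between arbitrary points, including points far outside B.
x = 3 * rng.standard_normal((200, n))
y = 3 * rng.standard_normal((200, n))
diff = np.abs(mcshane_extension(x, B_pts, L) - mcshane_extension(y, B_pts, L))
ratio = diff / np.linalg.norm(x - y, axis=1)
print("max |F_ext - F| on B:", np.max(np.abs(on_B - F(B_pts))))
print("max Lipschitz ratio:", ratio.max())
```

Agreement on B uses exactly the hypothesis that F is L-Lipschitz on B, and the Lipschitz property off B holds because a pointwise infimum of L-Lipschitz functions is L-Lipschitz.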

Kronecker Gymnastics

Given \(A,B \in {\mathbb {C}}^{n\times n}\), the Kronecker product \(A \otimes B \in {\mathbb {C}}^{n^2\times n^2}\) is defined as \(\begin{bmatrix} a_{11} B & \cdots & a_{1n} B\\ \vdots & \ddots & \vdots \\ a_{n1} B & \cdots & a_{nn} B \end{bmatrix}\). The vectorized form of \(A=[a_1,\ldots ,a_n]\) is \(\textsf {vec} (A)=[a_1^\top ,\ldots ,a_n^\top ]^\top \in {\mathbb {C}}^{n^2}\). It is convenient to identify \([n^2]\) with \([n]^2\) ordered as \(\{(1,1),\ldots ,(1,n),\ldots ,(n,n)\}\), in which case we have \((A\otimes B)_{ij,k\ell }=A_{ik}B_{j\ell }\) and \(\textsf {vec} (A)_{ij}=A_{ij}\).
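The index conventions above can be checked numerically. `np.kron` uses the same block layout, and row-major flattening `M.reshape(-1)` enumerates index pairs in the order \((1,1),\ldots,(1,n),\ldots,(n,n)\), so it realizes \(\textsf{vec}(M)_{ij}=M_{ij}\) under this identification. With these conventions, \([(A\otimes B)\,\textsf{vec}(X)]_{ij}=\sum_{k,\ell}A_{ik}B_{j\ell}X_{k\ell}=(AXB^\top)_{ij}\). The sketch below, which uses 0-based indices and random test matrices of our choosing, verifies the entry formula, this vectorization identity, and the mixed-product rule \((A\otimes B)(C\otimes D)=AC\otimes BD\).

```python
import numpy as np

rng = np.random.default_rng(2)
n = 3
A, B, C, D, X = (rng.standard_normal((n, n)) for _ in range(5))

K = np.kron(A, B)  # n^2 x n^2; block (i, k) equals A[i, k] * B


def vec(M):
    # Row-major flattening: vec(M)[i * n + j] = M[i, j].
    return M.reshape(-1)


# (A kron B)_{(i,j),(k,l)} = A_ik B_jl, with the pair (i, j) stored at index i * n + j.
entries_ok = all(
    np.isclose(K[i * n + j, k * n + l], A[i, k] * B[j, l])
    for i in range(n) for j in range(n) for k in range(n) for l in range(n)
)

# (A kron B) vec(X) = vec(A X B^T) under this identification.
vec_ok = np.allclose(K @ vec(X), vec(A @ X @ B.T))

# Mixed-product rule: (A kron B)(C kron D) = (AC) kron (BD).
mixed_ok = np.allclose(K @ np.kron(C, D), np.kron(A @ C, B @ D))

print(entries_ok, vec_ok, mixed_ok)  # True True True
```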

We collect some identities for Kronecker products and vectorizations of matrices used throughout this paper:

The third equality implies that

and hence

Applying the third equality to column vector z and noting that \(\,\textsf {vec} (z^\top )=\,\textsf {vec} (z)=z\), we have

In particular, it holds that

Signal-to-Noise Heuristics

We justify the choice of the Cauchy weight kernel in (4) by a heuristic signal-to-noise calculation for \({\widehat{X}}\). We assume without loss of generality that \(\pi ^*\) is the identity, so that diagonal entries of \({\widehat{X}}\) indicate similarity between matching vertices of A and B. Then, for the rounding procedure in (5), we may interpret \(n^{-1}{\text {Tr}}{\widehat{X}}\) and \((n^{-2}\sum _{i,j:\,i \ne j} {\widehat{X}}_{ij}^2)^{1/2} \approx n^{-1}\Vert {\widehat{X}}\Vert _F\) as the average signal strength and noise level in \({\widehat{X}}\). Let us define a corresponding signal-to-noise ratio as

and compute this quantity in the Gaussian Wigner model.
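To make the definition concrete, the sketch below evaluates the ratio of average signal to noise level on a simulated pair with \(\pi^*\) the identity. We take \(\textsf{SNR}={\text{Tr}}\widehat X/\Vert\widehat X\Vert_F\), an assumption read off from the interpretation above. It compares the Cauchy weights with constant weights \(w\equiv 1\). The latter give \(\widehat X=(UU^\top)J(VV^\top)=J\) exactly, hence \(\textsf{SNR}=1\). It also checks the trace identity \({\text{Tr}}\widehat X=\sum_{i,j}w_{ij}(u_i^\top\mathbf 1)(\mathbf 1^\top v_j)(v_j^\top u_i)\), which follows from cyclicity of the trace. The assumed form of (3), the additive-noise model \(B=A+\sigma Z\), the values of n, \(\sigma\), \(\eta\), and the helper names are all illustrative.

```python
import numpy as np


def similarity(A, B, weight):
    """X = sum_{i,j} weight(lam_i, mu_j) u_i u_i^T J v_j v_j^T (assumed form of (3)).

    Also returns Tr X computed through the double sum
    sum_{i,j} w_ij (u_i^T 1)(1^T v_j)(v_j^T u_i), as a consistency check.
    """
    lam, U = np.linalg.eigh(A)
    mu, V = np.linalg.eigh(B)
    W = weight(lam[:, None], mu[None, :])
    M = W * np.outer(U.sum(axis=0), V.sum(axis=0))  # w_ij (u_i^T 1)(1^T v_j)
    X = U @ M @ V.T
    trace_via_sum = np.sum(M * (U.T @ V))            # (U^T V)_ij = u_i^T v_j
    return X, trace_via_sum


def snr(X):
    """Average diagonal entry (signal) over n^{-1} ||X||_F (noise level)."""
    return np.trace(X) / np.linalg.norm(X, "fro")


def goe(n, rng):
    G = rng.standard_normal((n, n))
    return (G + G.T) / np.sqrt(2 * n)


rng = np.random.default_rng(3)
n, sigma, eta = 200, 0.1, 0.1
A = goe(n, rng)
B = A + sigma * goe(n, rng)  # pi* is the identity


def cauchy(x, y):
    return 1.0 / ((x - y) ** 2 + eta ** 2)


def flat(x, y):
    return np.ones_like(x - y)


X_c, trace_c = similarity(A, B, cauchy)
X_f, _ = similarity(A, B, flat)
print("SNR (Cauchy weights):", snr(X_c))
print("SNR (constant weights):", snr(X_f))  # equals 1 up to rounding, since X_f = J
```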

We abbreviate the spectral weights \(w(\lambda _i,\mu _j)\) as \(w_{ij}\). For \({\widehat{X}}\) defined by (3) with any weight kernel w(x, y), we have

Using the fact that (A, B) is equal in law to \((OAO^\top ,OBO^\top )\) for a rotation O such that \(O{\mathbf {1}}=\sqrt{n}{\mathbf {e}}_k\), we obtain for every k that

Then, averaging over \(k=1,\ldots ,n\) and applying \(\sum _k {\mathbf {e}}_k {\mathbf {e}}_k^\top ={\mathbf {I}}\) yield that

For the noise, we have

Applying the equality in law of (A, B) and \((OAO^\top ,OBO^\top )\) for a uniform random orthogonal matrix O, and writing \(r=O{\mathbf {1}}/\sqrt{n}\), we get

Here, \(r=(r_1,\ldots ,r_n)\) is a uniform random vector on the unit sphere, independent of (A, B). For any deterministic unit vectors u, v with \(u^\top v=\alpha \), we may rotate to \(u={\mathbf {e}}_1\) and \(v=\alpha {\mathbf {e}}_1+\sqrt{1-\alpha ^2}{\mathbf {e}}_2\) to get

where the last equality follows from an elementary computation. Using \(1+2\alpha ^2 \in [1,3]\) and applying this bound conditionally on (A, B), we obtain

for some value \(c \in [1,3]\).
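The elementary computation can be made explicit. For r uniform on the unit sphere, \(\mathbb{E}r_1^4=3/(n(n+2))\), \(\mathbb{E}r_1^2r_2^2=1/(n(n+2))\), and \(\mathbb{E}r_1^3r_2=0\). Writing \(\beta=\sqrt{1-\alpha^2}\), this gives \(\mathbb{E}[(u^\top r)^2(v^\top r)^2]=\mathbb{E}[r_1^2(\alpha r_1+\beta r_2)^2]=(1+2\alpha^2)/(n(n+2))\), consistent with the factor \(1+2\alpha^2\) above. We assume this fourth-moment identity is the computation referred to. The Monte Carlo sketch below checks it for illustrative values of n and \(\alpha\); the function names are hypothetical.

```python
import numpy as np


def sphere_fourth_moment_mc(n, alpha, samples, rng):
    """Monte Carlo estimate of E[(u^T r)^2 (v^T r)^2] for r uniform on S^{n-1}."""
    g = rng.standard_normal((samples, n))
    r = g / np.linalg.norm(g, axis=1, keepdims=True)  # uniform on the unit sphere
    # Rotated coordinates: u = e_1 and v = alpha e_1 + sqrt(1 - alpha^2) e_2.
    ur = r[:, 0]
    vr = alpha * r[:, 0] + np.sqrt(1 - alpha ** 2) * r[:, 1]
    return np.mean(ur ** 2 * vr ** 2)


def sphere_fourth_moment_exact(n, alpha):
    return (1 + 2 * alpha ** 2) / (n * (n + 2))


rng = np.random.default_rng(4)
n, alpha = 6, 0.6
mc = sphere_fourth_moment_mc(n, alpha, 400_000, rng)
exact = sphere_fourth_moment_exact(n, alpha)
print(f"Monte Carlo: {mc:.5f}   exact: {exact:.5f}")
```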

To summarize,

The choice of weights which maximizes this SNR would satisfy \(w(\lambda _i,\mu _j) \propto (u_i^\top v_j)^2\). Recall that for \(n^{-1+\varepsilon } \ll \sigma ^2 \ll n^{-\varepsilon }\) and i, j in the bulk of the spectrum, we have the approximation (11). Thus, this optimal choice of weights takes a Cauchy form, which motivates our choice in (4).

We note that this discussion is only heuristic, and maximizing this SNR does not automatically imply any rigorous guarantee for exact recovery of \(\pi ^*\). Our proposal in (4) is a bit simpler than the optimal choice suggested by (11): the constant C in (11) depends on the semicircle density near \(\lambda _i\), but we do not incorporate this dependence in our definition. Also, while (11) depends on the noise level \(\sigma \), our main result in Theorem 1 shows that \(\eta \) need not be set based on \(\sigma \), which is usually unknown in practice. Instead, the simpler choice \(\eta =c/\log n\) is sufficient for exact recovery of \(\pi ^*\) over a range of noise levels \(\sigma \lesssim \eta \).

About this article

Cite this article

Fan, Z., Mao, C., Wu, Y. et al. Spectral Graph Matching and Regularized Quadratic Relaxations I: Algorithm and Gaussian Analysis. Found Comput Math 23, 1511–1565 (2023). https://doi.org/10.1007/s10208-022-09570-y

DOI: https://doi.org/10.1007/s10208-022-09570-y

Keywords

- Graph matching

- Quadratic assignment problem

- Spectral methods

- Convex relaxations

- Quadratic programming

- Random matrix theory