Abstract

Cars are increasingly capable of providing drivers with warnings and advice. However, whether drivers should be provided with ipsilateral warnings (signaling the direction to steer towards) or contralateral warnings (signaling the direction to avoid) is inconclusive. Furthermore, how auditory warnings and visual information from the driving environment together contribute to drivers’ responses is relatively unexplored. In this study, 34 participants were presented with animated video clips of traffic situations on a three-lane road, while their eye movements were recorded with an eye-tracker. The videos ended with a near collision in front after 1, 3, or 6 s, while either the left or the right lane was safe to swerve into. Participants were instructed to make safe lane-change decisions by pressing the left or right arrow key. Upon the start of each video, participants heard a warning: Go Left/Right (ipsilateral), Danger Left/Right (contralateral), and nondirectional beeps (Baseline), emitted from the spatially corresponding left and right speakers. The results showed no significant differences in response times and accuracy between ipsilateral and contralateral warnings, although participants rated ipsilateral warnings as more satisfactory. Ipsilateral and contralateral warnings both improved response times in situations in which the left/right hazard was not yet manifest or was poorly visible. Participants fixated on salient and relevant vehicles as quickly as 220 ms after the trial started, with no significant differences between the audio types. In conclusion, directional warnings can aid in making a correct left/right evasive decision while not affecting the visual attention distribution.

1 Introduction

Cars are more and more equipped with sensors that can detect other road users in the vicinity. Although it could be many decades before fully automated driving enters the public domain, cars are increasingly able to perceive the environment and provide warnings and advice. Existing systems that do so are blind-spot warning systems and lane departure warning systems, amongst others (e.g., [1, 2]).

1.1 Auditory warnings

Warnings may be provided to drivers in the visual, auditory, and vibrotactile modalities. The present study is concerned with auditory warnings. The auditory modality has several noteworthy qualities. First, the auditory modality “requires no directional search” [3, p. 6], i.e., sounds can be perceived when the driver is visually inattentive [4]. Second, humans have some capacity to perceive the location from which a sound is coming [5, 6]. This quality makes spatial auditory cues promising for enhancing situation awareness [7, 8] and for improving performance in tasks that require a spatial response, such as orientation, visual search, and target localization tasks (for a review, see [9]). Of note, Verbist et al. [10] and Bazilinskyy et al. [11] demonstrated that it is possible to drive a car on a curvy road without any visual feedback by using spatial left/right auditory feedback. Third, the auditory modality can convey semantic information via speech. The potential of (spatialized) speech-based warnings has been recognized in aviation for many decades, where traffic collision avoidance systems (TCAS) and ground proximity warning systems (GPWS) provide speech-based resolution advisories [12,13,14].

1.2 Spatial and directional warnings in driving

In driving, spatial and directional auditory warnings have previously been studied for collision warning systems (e.g., [15,16,17,18]) and lane departure warning systems [19, 20].

Ho and Spence [15] performed several experiments using videos of potential collisions from the front or back, as seen through the windshield and rear-view mirror. Auditory warnings were emitted spatially (i.e., from the front or back) and nonspatially, and in the form of directional verbal warnings (“front” and “back”) or as a car horn. Participants had to respond by pressing the brake pedal or accelerator, depending on the location of the hazard. The results showed that directional-spatial warnings led to the fastest responses. Similarly, in Zhang et al. [21], participants showed shorter braking reaction times when receiving a directional verbal message (“watch out for the vehicle running the red light on your right”) than a nondirectional one (“please watch out for the vehicle running the red light”). In a driving simulator study with an automated vehicle, Sanghavi et al. [17] provided participants with audiovisual take-over requests. The auditory warning was a tone coming from the front (i.e., nonspatial) or from the direction of the free lane. The authors reported faster response times for the spatial than the nonspatial warnings. Liu and Jhuang [16] compared visual, auditory (verbal), and audiovisual warnings, with the audio being either spatial or nonspatial, for a variety of situations (e.g., a motorcycle approaching from the side, an obstacle in front, etc.) and found that spatial audiovisual warnings led to the fastest response times among the tested warning types. Finally, Roßmeier et al. [19] provided drowsy participants in a driving simulator with the left/right spatialized sound of a wheel driving over a rumble strip. The time gap between opening the eyes and the steering response was found to be below 0.19 s in some cases—too fast to be a visually-driven action. The authors concluded that spatial warnings not only caused drivers to focus on the driving scene but could also evoke a steering action directly. 
In summary, a good body of evidence shows that spatial and directional warnings hold promise in driving.

At the same time, some studies found no effect of spatial warnings compared to nonspatial ones. In a driving simulator study by Suzuki and Jansson [20], the car’s yaw angle was smoothly changed while participants were occupied with a secondary visual task. Beeps were presented from both sides or from the departure side only. The two warning types led to equivalent steering reaction times, and the authors argued that auditory warnings are used to grab the attention of the driver, whereas the steering decision is made based on visual information of the driving scene. The results further showed significantly faster steering reactions and smaller lateral deviations from the lane edge when participants were instructed about the meaning of the warnings compared to when uninstructed. More recently, Petermeijer et al. [22] investigated the effects of spatial auditory (beeps) and tactile (vibrating seat) take-over requests in situations where a stationary car was stranded in the middle lane, and evading to the left and right were viable options. Results showed that most participants overtook the stationary car on the left, consistent with national traffic rules. In a post-experiment questionnaire, the majority of the participants admitted that they could/did not perceive that the sound or vibrations came from different directions. These findings suggest that drivers need to be trained in recognizing and interpreting spatial warnings or that the spatial cues should convey higher levels of semantics (e.g., speech instead of beeps).

1.3 Ipsilateral versus contralateral warnings

One of the topics of discussion in spatial warning systems is whether warnings should be ipsilateral (i.e., at the escape direction, towards which the driver should steer, as in the abovementioned [17]) or contralateral (i.e., at the hazard direction, away from which the driver should steer, as in [19, 20]). Only a few studies have examined this topic directly. In Wang et al. [23], participants were asked to respond to an auditory tone by steering a table-mounted steering wheel. One group was informed that the tone was a warning signaling the escape direction, whereas another group was informed that the tone was a warning signaling the direction of the hazard. No visual information about the driving scene was available. Results showed that response times were faster for the tone signaling the escape direction, i.e., the ipsilateral mapping. However, in a later driving simulator study where visual information about the driving scene was available, Wang et al. [18] found that response times were faster for contralateral than ipsilateral warnings. The authors concluded that drivers use visual information to make a steering decision and that contralateral warnings help drivers direct attention to the hazard location before they would ordinarily perceive it, thus decreasing response time. Then again, a study using vibrotactile displays performed by Cohen-Lazry et al. [24] showed that ipsilateral warnings yielded faster and more accurate steering responses than contralateral warnings.

Studies have further shown that the available time to respond (time budget) might be a moderator variable of the effect of spatial warnings. Straughn et al. [25] tested a pedestrian collision warning system. Participants drove a simulator in foggy weather and were provided with spatial collision warnings early (time budget of 4 s) or late (time budget of 2 s). The results showed that for the long time budget, contralateral warnings led to faster responses than ipsilateral warnings, whereas the opposite was found for the short time budget. The authors argued that there is no time to assess the situation visually in late warnings, so the auditory warning is necessarily used to generate a motor response. When a warning is provided early, on the other hand, a contralateral warning will lead to a faster response because it attracts visual attention towards the hazard (see also [18]). More recently, Chen et al. [26] investigated spatial pedestrian collision warnings for time budgets between 2 and 4 s and found that participants responded faster to contralateral than ipsilateral warnings for all time budgets. No advantage of ipsilateral warnings was found for short time budgets, which is inconsistent with Straughn et al.’s findings. Chen et al. argued that the difference might be related to the different driving modes used (manual in Straughn et al. vs. semi-autonomous in Chen et al.). It should also be noted that in Straughn et al., participants drove in a driving simulator while visual feedback was degraded by fog. In contrast, in Chen et al., participants viewed videos on a computer screen.

1.4 Knowledge gap and study aims

The above literature showed mixed results regarding the effects of spatial auditory warnings in driving. Although the benefits of ipsilateral mapping (spatial stimulus–response compatibility) are well established in basic orienting and response tasks [9], whether these benefits generalize to driving can be debated because, in driving, the hazard is to be avoided rather than steered towards.

Several studies indicate that in driving, the effects of ipsilateral versus contralateral warnings are opposite to those found in basic studies. That is, in driving, contralateral warnings have been claimed to be more effective than ipsilateral ones [18, 26]. However, whether the beneficial effects of contralateral warnings become manifest may depend on whether there is enough time for the driver to visually detect the hazard [25]. It is also possible that drivers make their steering decision solely based on visual information rather than on the directional information in the sounds, or that they misunderstand the meaning of the spatial warnings unless instructed [20, 22].

Despite the crucial role of vision, studies on spatial auditory warnings so far have not measured drivers’ visual attention. The present study used an eye-tracker to gain insight into participants’ visual behavior while receiving auditory warnings. Videos of near-collision scenarios were presented, and participants were asked to avert the forward collision by pressing the appropriate left or right key. Participants were provided with directional speech-based advisory warnings (ipsilateral: Go Left/Right, contralateral: Danger Left/Right) and nondirectional beeps. It was decided to use speech-based warnings because of their semantic richness compared to beeps/tones and positive findings in several previous studies [15, 21] as reviewed above (see also [27,28,29], for studies that tested speech-based warnings in driving).

Consistent with Straughn et al. [25], the hypothesis was that ipsilateral auditory warnings would lead to the fastest responses when there was little time to respond. Additionally, it was hypothesized that contralateral warnings would help drivers visually detect the left/right hazard because the phrase ‘Danger Left’ or ‘Danger Right’ pinpoints the location of the hazard.

2 Methods

2.1 Participants

Thirty-four people (29 males, 5 females), aged between 18 and 29 years (M = 23.3, SD = 2.5) participated. Thirty participants had a driver's license; 4 participants did not. On a scale of 1 (almost never), 2 (less than once a month), 3 (less than once a week), 4 (1–3 times a week), and 5 (almost every day), the mean answer to “How much do you drive?” was 3.38 (SD = 1.41). All participants were recruited from the university community. The study was approved by the university’s human research ethics committee. All participants provided written informed consent.

2.2 Hardware

An SR Research EyeLink 1000 Plus eye-tracker with head support was used to track the right eye. The videos were presented on a 24-inch BenQ XL2420T-B monitor with a resolution of 1920 × 1080 pixels. The monitor was placed at a distance of about 80 cm from the head support, providing a horizontal field of view of about 37 deg. Sounds were presented using two Logitech speakers at a distance of 70 cm and a 45-deg angle from the ears. Participants sat on a height-adjustable office chair. Figure 1 shows a photo of the setup.
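The reported horizontal field of view can be checked from the monitor geometry. The sketch below is a rough check only: it assumes that the 24-inch figure is the viewable diagonal, that pixels are square, and that the eye is centered in front of a flat screen; the function name is hypothetical.

```python
import math

def horizontal_fov_deg(diagonal_in, pixels_w, pixels_h, distance_cm):
    """Horizontal visual angle of a flat screen viewed head-on from its center."""
    diagonal_cm = diagonal_in * 2.54
    # With square pixels, the width is the diagonal scaled by the pixel aspect ratio.
    width_cm = diagonal_cm * pixels_w / math.hypot(pixels_w, pixels_h)
    return math.degrees(2 * math.atan((width_cm / 2) / distance_cm))

# 24-inch, 1920 x 1080 monitor viewed from about 80 cm
fov = horizontal_fov_deg(24, 1920, 1080, 80)
print(f"Horizontal field of view: {fov:.1f} deg")
```

Under these assumptions, the result is close to the 37 deg stated above.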

2.3 Videos

Animated video clips were programmed using PreScan 7.0 [30]. The videos were previously used by Lu et al. [31] in a study on drivers’ take-over performance and situation awareness.

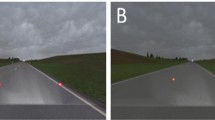

The videos depicted traffic scenarios on a three-lane highway. The ego-car was driving in the middle lane at a constant speed of 100 km/h. In the videos, there were five surrounding cars, one of which was standing still in the middle lane, and one was overtaking the ego-car on the left or right side so that only one escape direction was possible. The other cars drove at a safe distance relative to the ego-car during the entire video. Videos had a length of 1, 3, or 6 s.

The videos ended when the center-to-center distance between the ego-car and the stationary car in front was 19–22 m. At a speed of 100 km/h, and after subtracting a car length of 4.5 m, this would correspond to a time-to-collision of 0.5–0.6 s. At the end of the video, the overtaking car blocked the left or right lane, and the other lane was free to maneuver into. All five surrounding cars were continuously visible, drove at a constant speed in the center of their lanes, and did not change lanes. Figure 2 shows a screenshot of one of the videos. The areas of interest, which are used for the eye-tracking analysis, are shown as well.
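The time-to-collision figure follows from simple arithmetic, sketched here under the assumptions stated in the text: the lead car is stationary, so the closing speed equals the ego-car speed, and one full car length of 4.5 m is subtracted from the center-to-center distance. The function name is illustrative.

```python
def time_to_collision_s(center_distance_m, car_length_m, speed_kmh):
    """Time until bumper contact with a stationary lead car."""
    gap_m = center_distance_m - car_length_m   # bumper-to-bumper gap
    speed_ms = speed_kmh / 3.6                 # km/h to m/s
    return gap_m / speed_ms

ttc_near = time_to_collision_s(19, 4.5, 100)
ttc_far = time_to_collision_s(22, 4.5, 100)
print(f"TTC range: {ttc_near:.2f}-{ttc_far:.2f} s")
```

The two ends of the 19–22 m range give roughly 0.52 s and 0.63 s, in line with the rounded 0.5–0.6 s reported.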

In Fig. 3, the start of each of the six videos is shown. It can be seen that three mirrors were available. The left window was visible, whereas the right window was not. The Escape Left and Escape Right situations were mirrored versions of each other. However, the driver was seated in the left seat and therefore had a somewhat different view of the road for the left versus right situations.

2.4 Audio samples

Ipsilateral (Go Left, Go Right) and contralateral (Danger Left, Danger Right) audio files were created using a speech generator [32] (voice ‘English (UK) – Selene’ at a speed of 1). The sounds were post-processed using Garageband [33] to equalize the maximum volume peak. Audio level peaks were 66–68 dB, measured with a decibel meter from the head support, and the duration of the speech-based samples was about 600 ms. A fifth sample with beeps was generated based on a Tesla warning sound. The beeping sound was made using an electric piano in Garageband [33], producing four beeps of 1148 Hz, one beep every 115 ms.

Besides providing directional semantic information, the warnings were also spatialized. More specifically, Go Left and Danger Left were presented from the left speaker, whereas Go Right and Danger Right were presented from the right speaker. Hence, for the Go warnings, the sound was ipsilateral (i.e., the direction to steer to), and for the Danger warnings, the sound was contralateral (i.e., the direction to steer away from). Figure 4 shows the sound waves of the five samples used in the experiment.

2.5 Experimental design

The following independent variables were used:

- Audio Type (3 levels): Danger (contralateral), Go (ipsilateral), and Baseline (beeps).

- Escape Direction (2 levels): In each video, there was one direction of escape (left or right).

- Time Budget (3 levels): The video duration was 1, 3, or 6 s. The videos ended with a near-collision with the car in front.

A within-subject design was used, with three blocks presented in a Latin-square counterbalanced order. In each block, one of three audio types was provided. Each block consisted of 15 trials in randomized order. For the Go and Danger blocks, three invalid audio samples were included, i.e., warnings opposite to the video situation (left instead of right, and vice versa). The invalid samples were included to ensure that participants could not rely solely on the auditory warnings. Thus, in 80% (12 of 15) of the trials, valid warnings were given, a percentage that has previously been shown to be effective in shortening response times in a similar stimulus–response study [15]. The beeps were coming from both speakers and were always valid. Table 1 provides an overview of the number of trials per condition.

In all trials, the video and audio samples were programmed to start simultaneously. However, based on later video recordings at a high frame rate, we established that the onset of the audio samples, as shown in Fig. 4, was about 70 ms later than the onset of the first video frame.
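As an illustration of the counterbalancing, the sketch below builds a cyclic 3 × 3 Latin square over the audio types and derives the proportion of valid warnings in a directional block. The cyclic construction and the assignment of rows by participant index are assumptions made for the example; the text states only that a Latin-square counterbalanced order was used.

```python
AUDIO_TYPES = ["Danger", "Go", "Baseline"]

def cyclic_latin_square(conditions):
    """Each condition appears exactly once in every row and every column."""
    n = len(conditions)
    return [[conditions[(row + col) % n] for col in range(n)] for row in range(n)]

def block_order(participant_index, conditions=AUDIO_TYPES):
    """Hypothetical assignment rule: participants cycle through the rows of the square."""
    square = cyclic_latin_square(conditions)
    return square[participant_index % len(conditions)]

TRIALS_PER_BLOCK = 15
INVALID_PER_DIRECTIONAL_BLOCK = 3   # Go and Danger blocks only
valid_fraction = (TRIALS_PER_BLOCK - INVALID_PER_DIRECTIONAL_BLOCK) / TRIALS_PER_BLOCK

for p in range(3):
    print(p, block_order(p))
print(f"Valid warnings per directional block: {valid_fraction:.0%}")
```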

2.6 Procedure

First, participants read and signed a consent & information form. The form mentioned the study purpose (“to investigate the verbal cues Go Left/Right and Danger Left/Right as take-over requests”) and explained the procedure and instructions. It mentioned that during the experiment, participants had to focus on a marker at the bottom of the screen and that at a random interval between 2 and 8 s, the scenario would start. Furthermore, the form indicated that participants had to perform a safe take-over reaction as quickly as possible by pressing the left or right key on the keyboard. It was further stated that they had to complete three blocks (Non-verbal beeps, Verbal Go left/right, Verbal Danger left/right) of 15 trials each and that participants had to keep in mind that the auditory cues were not always correct.

Before the experiment, participants completed a questionnaire about their age and driving experience. The participants were told that they could adjust the height of the chair so that their head rested comfortably on the head support.

The participants were first shown a 6-s demo video depicting a near-collision. This video resembled the videos of the subsequent experiment but did not feature an overtaking car, and participants did not have to respond.

Before each block, the eye-tracker was calibrated using the standard nine-point EyeLink procedure, after which an instructions screen was shown. The instructions screen mentioned that participants would be presented with videos of a potential forward collision and that their task was to avoid the car by pressing the left or right arrow key. At this point, it was not mentioned that participants had to respond as quickly as possible. The instructions screen asked participants to place their right hand on the arrow keys and their left hand on the spacebar, eliminating the need to move the arms.

Before each video, participants had to look at a marker at the bottom of the screen and press the spacebar. This was followed by a black screen with a circle at the bottom. The duration of this screen was 2, 4, 6, or 8 s, randomized per trial so that participants could not predict when the video would start. Participants were instructed to focus on the marker until the video started. The trial ended when the participant hit an arrow key or 1 s after the video was over.

After each block, participants completed a questionnaire for assessing the acceptance of new driving technology [34] and a NASA-TLX questionnaire for measuring workload [35].

2.7 Dependent variables

Eye data were sampled at 1000 Hz. Periods during which vertical gaze data on the screen were unavailable, such as caused by eye blinks, were marked as data gaps. A margin of 100 ms was added before and after each data gap to account for the closing time and reopening time of the eyelid [36]. The data gaps in the gaze x and y coordinates were linearly interpolated and subsequently median-filtered using a window length of 100 ms. Fixations were defined using a Savitzky-Golay filter, with a threshold of 2000 pixels per second [37]. A minimum fixation duration of 40 ms was adopted [38].
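The first preprocessing steps (gap marking with a margin, linear interpolation, and median filtering) can be sketched as follows. This is a simplified illustration in plain Python, not the original analysis code: the function names are hypothetical, gaps at the start or end of a recording are filled with the nearest valid sample, and the median window is shortened at the edges. The Savitzky-Golay speed estimate and the fixation thresholds are not reproduced here. At 1000 Hz, a 100-ms margin or window corresponds to 100 samples.

```python
from statistics import median

def expand_gaps(missing, margin):
    """Widen every run of missing samples by `margin` samples on each side."""
    n = len(missing)
    expanded = list(missing)
    for i, is_missing in enumerate(missing):
        if is_missing:
            for j in range(max(0, i - margin), min(n, i + margin + 1)):
                expanded[j] = True
    return expanded

def interpolate_gaps(values, missing):
    """Linearly interpolate across missing samples (edge gaps: nearest valid value)."""
    valid_idx = [i for i, m in enumerate(missing) if not m]
    if not valid_idx:
        raise ValueError("no valid samples to interpolate from")
    out = list(values)
    for i in range(valid_idx[0]):                       # leading gap
        out[i] = values[valid_idx[0]]
    for i in range(valid_idx[-1] + 1, len(values)):     # trailing gap
        out[i] = values[valid_idx[-1]]
    for left, right in zip(valid_idx, valid_idx[1:]):   # interior gaps
        for i in range(left + 1, right):
            weight = (i - left) / (right - left)
            out[i] = values[left] + weight * (values[right] - values[left])
    return out

def median_filter(values, window):
    """Running median; the window is truncated at the start and end of the signal."""
    half = window // 2
    return [median(values[max(0, i - half): i + half + 1]) for i in range(len(values))]

# Toy example with a margin of 1 sample instead of 100
gaze_x = [100, 101, None, None, 104, 105]
missing = expand_gaps([v is None for v in gaze_x], margin=1)
filled = interpolate_gaps(gaze_x, missing)
smoothed = median_filter(filled, window=3)
```

In the toy example, the samples adjacent to the gap are discarded together with the gap itself, and the whole stretch is then bridged by a straight line between the remaining valid samples.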

The following measures were computed per participant per condition. Accordingly, for the following dependent variables, 34 × 18 matrices were obtained (34 participants × 18 conditions: 3 audio types × 2 escape directions × 3 time budgets).

- Response accuracy (% of trials). The percentage of correct responses was determined by relating the escape direction of the video with the participants’ response direction. If the response direction corresponded with the video escape direction, the response was labeled as correct; otherwise, the response was incorrect. Responses that were given more than 300 ms after the video ended were also labeled as incorrect. This time margin, reflecting a typical human reaction time, was implemented to retain trials in which the participant responded just after the video ended, yet still before the estimated collision (the time-to-collision at the end of the video was 0.5–0.6 s).

- Mean response time (ms). The response time was defined as the time elapsed between the start of the trial and pressing the left or right arrow key. When no button was pressed before 300 ms after the end of the video, no response time was recorded for that trial.

- First, second, and third fixation location (% of trials). The location of the first, second, and third fixations (excluding fixations on the dashboard) was determined from the mean of the coordinates during the fixation. Areas of interest, as shown in Fig. 2, were computed based on the fixation location. The percentage of each area of interest relative to the number of trials with eye-tracking data was determined.

- Mean first fixation time (ms). This is defined as the start time of the first fixation on any area of interest, excluding the dashboard.
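The scoring rule for response accuracy and response time described above can be sketched per trial as follows. The function and argument names are hypothetical, times are assumed to be measured from video onset, and the treatment of a response at exactly 300 ms after the video end is an assumption.

```python
LATE_MARGIN_MS = 300  # responses later than this after the video end do not count

def score_trial(escape_direction, response_direction, response_time_ms, video_duration_ms):
    """Return (is_correct, recorded_response_time_ms) for one trial.

    response_direction and response_time_ms are None when no key was pressed.
    """
    deadline_ms = video_duration_ms + LATE_MARGIN_MS
    in_time = (
        response_direction is not None
        and response_time_ms is not None
        and response_time_ms <= deadline_ms
    )
    is_correct = in_time and response_direction == escape_direction
    recorded_rt = response_time_ms if in_time else None
    return is_correct, recorded_rt

# A timely but wrong response is incorrect, yet still yields a response time
print(score_trial("left", "right", 800, 1000))
# A late response is incorrect and yields no response time
print(score_trial("left", "left", 1400, 1000))
```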

In addition, the following was determined per audio type (Go, Danger, Baseline) based on the post-block questionnaires.

- Acceptance. A 9-item paper questionnaire was used to determine the usefulness and satisfaction of the auditory warnings. The mean usefulness score was determined across the following items: 1. useful–useless; 3. bad–good; 5. effective–superfluous; 7. assisting–worthless. The mean satisfaction score was determined from the following items: 2. pleasant–unpleasant; 4. nice–annoying; 6. irritating–likable; and 8. undesirable–desirable. All items were rated on a five-point semantic-differential scale. Sign reversals were conducted for Items 1, 2, 4, 5, 7, and 9. Item 9 ‘raising alertness–sleep-inducing’ was analyzed separately. The original questionnaire [34] regarded systems that raise alertness as useful, but for the present study, which involved a near-collision scenario, a high ‘raising alertness’ score was regarded as low in usefulness. That is, a system that would fail to alert the driver would be expected to raise alertness because of the upcoming crash.

- Workload. The NASA-TLX paper questionnaire was used for measuring six facets of workload: mental demand, physical demand, temporal demand, performance, effort, and frustration. All scores were linearly transformed to a scale from 0% (lowest score possible, i.e., very low/perfect) to 100% (highest score possible, i.e., very high/failure). The overall workload score was computed by averaging the responses of the six items.
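The questionnaire scoring can be sketched as follows. The sketch assumes that each acceptance item is coded from -2 (left anchor) to +2 (right anchor) before sign reversal, and that the raw NASA-TLX scale endpoints are passed in by the caller (0 and 20 in the example); neither coding is specified in the text, and all names are illustrative.

```python
REVERSED_ITEMS = {1, 2, 4, 5, 7, 9}
USEFULNESS_ITEMS = (1, 3, 5, 7)
SATISFACTION_ITEMS = (2, 4, 6, 8)

def acceptance_scores(raw):
    """raw maps item number (1-9) to a rating from -2 (left anchor) to +2 (right anchor)."""
    signed = {item: (-value if item in REVERSED_ITEMS else value)
              for item, value in raw.items()}
    usefulness = sum(signed[i] for i in USEFULNESS_ITEMS) / len(USEFULNESS_ITEMS)
    satisfaction = sum(signed[i] for i in SATISFACTION_ITEMS) / len(SATISFACTION_ITEMS)
    return usefulness, satisfaction, signed[9]   # Item 9 is kept separate

def tlx_percent(raw_score, lowest, highest):
    """Linear rescaling of one NASA-TLX item to 0-100%."""
    return 100 * (raw_score - lowest) / (highest - lowest)

def overall_workload(item_percentages):
    """Unweighted mean of the six rescaled items."""
    return sum(item_percentages) / len(item_percentages)

ratings = {1: -2, 2: -1, 3: 2, 4: -1, 5: -2, 6: 1, 7: -2, 8: 1, 9: 0}
usefulness, satisfaction, alertness = acceptance_scores(ratings)
workload = overall_workload([tlx_percent(s, 0, 20) for s in (10, 4, 12, 6, 10, 6)])
```

With this coding, higher usefulness and satisfaction scores are more favorable after sign reversal.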

2.8 Analyses

The scores on the dependent variables per experimental condition were visualized in bar graphs together with 95% confidence intervals for within-subjects designs (computed according to [39]).

The within-subject confidence intervals were computed for the 1-s, 3-s, and 6-s time budgets separately (i.e., for 6 conditions: 3 audio types × 2 escape directions) because of the large differences in variances in response times between the three time budgets. Although the percentage of correct responses could take on only a distinct number of values per participant per experimental condition (e.g., in case of 2 trials per experimental condition, it could be 0%, 50%, and 100%; in case of 3 trials per experimental condition, it could be 0%, 33.3%, 66.7%, and 100%), confidence intervals were computed based on a normal distribution. This approach was deemed robust enough for our purposes (see [40] for a similar argument about linear vs. logistic regression analysis).
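One common way to obtain within-subject confidence intervals is to remove between-participant offsets before computing the standard error, and then apply a correction for the number of conditions. The sketch below implements that normalization approach as an illustration; whether it matches the exact procedure of [39] is an assumption, and the default critical value of 1.96 is a normal approximation (a t-based value would be slightly larger for 34 participants).

```python
import math
from statistics import mean, stdev

def within_subject_ci_halfwidths(data, critical_value=1.96):
    """data: one row per participant, one score per condition (no missing values).

    Returns the confidence-interval half-width for each condition.
    """
    n_conditions = len(data[0])
    grand_mean = mean(mean(row) for row in data)
    # Remove each participant's overall offset, keeping the grand mean
    normalized = [[x - mean(row) + grand_mean for x in row] for row in data]
    # Correction for the variance lost through normalization
    correction = math.sqrt(n_conditions / (n_conditions - 1))
    half_widths = []
    for c in range(n_conditions):
        column = [row[c] for row in normalized]
        sem = stdev(column) / math.sqrt(len(column))
        half_widths.append(critical_value * correction * sem)
    return half_widths

# Participants who differ only by a constant offset yield zero-width intervals
offsets_only = [[1, 2, 3], [11, 12, 13], [21, 22, 23]]
print(within_subject_ci_halfwidths(offsets_only))
```

In the present design, such a function would be applied separately to the six conditions (3 audio types × 2 escape directions) within each time budget.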

Significant differences between conditions were judged by whether the confidence intervals overlapped. According to Cumming and Finch [41], nonoverlapping confidence intervals correspond to a p-value smaller than 0.006. For the questionnaires, differences between conditions were assessed using paired-samples t-tests at the level of participants with a significance level of 0.05.

3 Results

3.1 Performance measures

Figure 5 shows the percentage of correct responses for each of the 18 conditions. There were no significant differences between the Danger, Go, and Baseline audio types, except for the Escape Right situation with 1-s time budget, where Go and Danger outperformed the Baseline condition. In the Baseline condition with 1-s time budget, participants often did not respond at all (9% of Escape Left trials, 30% of Escape Right trials, see Fig. S1).

Figure 6 shows the corresponding mean response times. Longer time budgets yielded longer mean response times, a finding in agreement with much previous research (see [42] for a meta-analysis on the effect of time budget or ‘urgency’ on take-over times, and see [43], for a review on brake response times in manual driving). For the 1-s time budgets, no significant differences in response times between conditions were observed. Furthermore, for the Escape Left situations with 3-s and 6-s time budgets, responses were significantly faster (i.e., confidence intervals were nonoverlapping) for Danger and Go compared to Baseline. The effects for Escape Right situations were in the same direction.

Of the 1530 trials completed (34 participants × 45 trials per participant), eye-tracking data were available for 1364 trials. More specifically, for one participant, no eye-tracking data were available, and for six participants, 10, 19, 19, 27, 30, and 44 of 45 trials had eye-tracking data. The other 27 participants had complete eye-tracking data in all trials. Accordingly, the subsequent eye-tracking analyses are based on 33 of 34 participants, 6 of whom had partially missing data.
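The trial counts above are internally consistent, as the following arithmetic check shows; the variable names are illustrative.

```python
N_PARTICIPANTS = 34
TRIALS_PER_PARTICIPANT = 45

trials_partial = [10, 19, 19, 27, 30, 44]   # participants with incomplete eye data
n_without_data = 1                          # participant with no eye data at all
n_complete = N_PARTICIPANTS - n_without_data - len(trials_partial)

total_trials = N_PARTICIPANTS * TRIALS_PER_PARTICIPANT
trials_with_eye_data = n_complete * TRIALS_PER_PARTICIPANT + sum(trials_partial)

print(n_complete, total_trials, trials_with_eye_data)
```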

Figure 7 shows the distribution of where the participants’ first fixation landed. It can be seen that the traffic situation (i.e., time budget and escape direction) affected the distribution of fixations, whereas the audio type (i.e., Danger, Go, Baseline) did not have a distinct influence. More specifically, in the 1-s videos, participants generally first looked at the road center (red bars) for all audio types; in the center, there was a stranded car, which was presumably the most salient stimulus for the critical 1-s time budget. Additionally, participants were more likely to place their first fixation on the hazard side (i.e., green bars relative to gray bars) than on the escape side for all audio types. This effect was especially strong with the 3-s time budgets in which the overtaking car was relatively close at the start of the video.

Figure 7 further shows the area-of-interest distribution of the second and third fixations. It can be seen that compared to the first fixation, attention was attracted away from the center road towards other areas, including the center mirror. For the 1-s videos, a second and third fixation were available in only about 35% and 15% of the trials, respectively, i.e., most participants had responded before placing their second fixation. No consistent differences between the three audio types are apparent.

Finally, Fig. 8 shows the moment of the first fixation; it can be seen that participants placed their first fixation about 200–250 ms after the trial started. In other words, the first fixation occurred well before the typical response time of 800 ms in the shortest time-budget condition (see Fig. 6).

3.2 Questionnaires

The results of the acceptance questionnaire, as shown in Table 2, revealed no significant differences in usefulness between the three audio types. Regarding satisfaction, ipsilateral warnings (Go) received significantly higher ratings than contralateral warnings (Danger). Furthermore, the Baseline condition raised alertness more than the Go and Danger conditions. Finally, there were no significant differences in overall workload between the three audio types. The workload was slightly lower for ipsilateral warnings than contralateral warnings and the Baseline condition; the differences were largest for the Temporal Demand and Effort items of the NASA-TLX.

4 Discussion

This study investigated drivers’ evasive responses and visual behaviors as they receive directional auditory warnings in critical situations. Participants were shown videos with time budgets of 1, 3, and 6 s, and were provided with ipsilateral (Go Left, Go Right) and contralateral (Danger Left, Danger Right) warnings coming from the spatially corresponding left and right directions, as well as nondirectional beeps (Baseline conditions). Participants’ performance was assessed based on response times and response accuracy. Eye-tracking was used to evaluate attention distribution for the first, second, and third fixations.

It was hypothesized that the ipsilateral warnings would lead to the fastest responses when the time budget was short. Additionally, it was hypothesized that contralateral warnings would help drivers to visually locate the left/right hazard.

4.1 Ipsilateral vs. contralateral warnings

The first hypothesis was not confirmed, as our results showed no significant differences in response times and response accuracy between ipsilateral and contralateral warnings. Nonetheless, ipsilateral warnings led to higher self-reported satisfaction than contralateral warnings. Previous research has found faster response times for ipsilateral warnings [23] (see [24] for vibrotactile warnings) than for contralateral warnings, or vice versa [18, 26], or has suggested that time budget is a crucial moderator variable [25]. Our null result can be interpreted in the context of previous papers that have indicated that the phenomenon of spatial multisensory synergy is highly task-dependent [9]. In the present study, there were five surrounding cars, creating high visual demands. In comparison, in Straughn et al. [25], visual information was degraded by fog, as a result of which a strong effect of auditory warnings may be expected. We recommend that future research on the topic of ipsilateral versus contralateral warnings examine the moderating role of the timing of events (e.g., time budget, obstacle appearance, obstacle visibility).

The second hypothesis was that the contralateral warnings would support drivers’ detection of the hazard, i.e., the overtaking car in the left or right lane. However, no significant differences in left/right attention distributions were observed among the three audio types. Participants placed their first fixation 220 ms after the trial started, which was well before the words Left or Right could be heard, but after the direction from which the sound was emitted could have been noticed.

The first fixations were typically placed on the area containing the most salient or relevant object (i.e., the car in front in 1-s videos and the overtaking car in 3-s videos). The second and third fixations were also goal-directed, such as fixations in the center mirror to assess the situation behind. Furthermore, in the majority of 1-s videos, participants had pressed the response key before placing their second fixation, i.e., participants often performed only one fixation before making their left/right decision. Thus, the eye-tracking data showed that participants’ fixation distribution was determined by the traffic situation and the temporal sequence (i.e., first look ahead; then in the rear-view mirror) and not by the auditory cues.

In summary, the findings reveal no significant differences in response times and accuracy between ipsilateral and contralateral warnings. Our findings suggest that visual information is a stronger determinant of the response than the type of directional warning. Still, when participants were asked after the experiment which type of warning they preferred, ipsilateral warnings came out best. This latter finding suggests that participants prefer stimulus–response compatibility, where the warning message is consistent with the required steering action (i.e., the message “Left” implies steering to the left, and the message “Right” implies steering to the right).

4.2 Directional vs. nondirectional warnings

Although there was no clear performance difference between ipsilateral and contralateral warnings, directional warnings (i.e., both ipsilateral and contralateral ones) helped improve performance compared to the Baseline condition. More specifically, in the 1-s Escape Right scenario, ipsilateral (Go) and contralateral (Danger) warnings yielded response accuracies of 87% and 84%, respectively—a substantial improvement compared to the response accuracy of 58% for the Baseline condition. These benefits were obtained despite the fact that participants could not perfectly trust the warnings since 20% of the trials featured invalid warnings.

The low accuracy of 58% in the Baseline condition was largely attributable to participants not providing a response within the available time. These non-responses may have been caused by the poor view on the left side due to the A-pillar, as a result of which participants may have been unaware of the correct escape direction. It is also possible that participants were hesitant to steer to the right as doing so would violate common experience and traffic rules. This latter suggestion is in line with a driving simulator study that used a scenario similar to ours but without a blocking car in the left or right lane [22]. In that study, it was found that participants predominantly decided to steer to the left instead of the right.

A positive effect of the directional warnings was also found in 3-s and 6-s situations, where they caused participants to respond faster (though not necessarily more accurately) than in the Baseline condition. A faster response implies a longer remaining time-to-collision with the stationary object, which may explain why the directional warnings reduced scores on the ‘raising alertness’ item of the acceptance questionnaire. A likely explanation for the fast responses with directional warnings is that the overtaking car was still far away at the start of the video, so the hazard was not yet manifest. In other words, the directional warnings provided the drivers with early knowledge of what was about to happen. In the Baseline condition, on the other hand, directional information was not provided, and so participants were unable to make an informed left/right decision early in the video.
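The relation between response time and remaining time-to-collision can be illustrated with a minimal sketch. It assumes, for illustration only, a constant closing speed, so that the remaining time-to-collision at the moment of the key press equals the time budget minus the response time. The function name and the response times below are hypothetical and are not data from this study.

```python
def remaining_ttc(time_budget_s: float, response_time_s: float) -> float:
    """Remaining time-to-collision (s) at the moment of the key press.

    Assumption: constant closing speed, so the remaining time-to-collision
    is the time budget minus the response time. A response later than the
    time budget is clipped to 0 (no time left).
    """
    return max(time_budget_s - response_time_s, 0.0)


# Hypothetical response times (s) in a 3-s video, for illustration only.
baseline_rt = 2.0
directional_rt = 1.5

print(remaining_ttc(3.0, baseline_rt))     # 1.0
print(remaining_ttc(3.0, directional_rt))  # 1.5
```

Under this assumption, any reduction in response time translates one-to-one into additional time before the collision point would be reached.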

In summary, directional auditory warnings encouraged participants to make a quick decision when visual information was unavailable or scarce. This observation is consistent with the finding in psychophysics that the weaker the unimodal stimuli, the stronger the multimodal benefit [44]. The eye movements in our lab-based study may not be comparable to those in actual driving, since driving involves a larger field of view. We expect that directional warnings would be (even) more effective when hazardous events occur outside the human field of view, a hypothesis that deserves further investigation.

4.3 Limitations and recommendations for future research

Literature suggests that results obtained in basic stimulus–response tasks may not transfer to driving environments. In basic studies, stimulus–response compatibility has been found to improve response times compared to an incompatible stimulus–response layout [45, 46]. In driving, however, drivers are inclined to steer away from a hazard rather than towards it [47]. A strength of our study is that it conveyed a natural driving scene. Still, our study is not an entirely realistic representation of actual driving.

In real driving, there may be competing auditory cues such as the sound of rumble strips (which inherently provide contralateral feedback) or sounds from other vehicles in the vicinity. In the current study, the contralateral warnings informed the drivers about the hazard in the left or right lane (Danger Left, Danger Right) but did not inform them about the hazard posed by the stationary car in front. In other words, the contralateral feedback may have been suboptimal since it may have caused some confusion as to whether the danger message referred to the vehicle in the left/right lane or to the car in front. Future research will have to examine the effects of directional warnings in combination with other sounds, such as forward-collision warnings. At the same time, ipsilateral feedback cannot be relied upon completely either, since drivers still need to verify that the indicated lane is indeed free. In fact, in the context of external human–machine interfaces (eHMIs) for automated vehicles, human factors experts advise against instructive messages (such as GO, WALK, CROSS) because such messages may induce overreliance [48]. More generally, drivers should not blindly rely on decision aids (e.g., [49], p. 149).

Each participant performed 45 trials, i.e., 2–3 trials per experimental condition. The optimal number of trials to use is a dilemma in this type of research. On the one hand, it may be argued that our number of trials is small relative to typical psychophysics research [50] (see also [26], for a study with 120 trials per participant for evaluating directional warnings in a driving scenario). On the other hand, it may be argued that our number of trials is too large because near-collisions in real vehicles are rare events. In a scenario similar to that of the current experiment, seminal research by Gold et al. [51] used only one trial per participant, which can be seen as realistic if the aim is to surprise participants. Indeed, in real traffic and more immersive driving simulators, drivers may exhibit a startle response if confronted with a near-collision (e.g., [52]). Future research should investigate drivers’ eye movements in immersive driving simulators or field studies. Future research could also examine other measures of driver behavior and acceptance, such as whether the driver keeps the vehicle stable or enters a skid, as well as system disuse (cf. [53]).

In our study, there was a 70 ms latency of the speech samples (see Fig. 4). On top of this, an inherent disadvantage of speech-based warnings is that it takes time to pronounce the message, or as noted by Simpson and Marchionda-Frost [54], “we must generally wait until very close to the end of the message before we comprehend the entire message” (p. 511).

In the simulator-based study by Wang et al. [18], spatial warnings were provided 0.5 s before a threatening vehicle appeared. Ho and Spence [15] pointed to the benefits of early warnings: “the available evidence now supports the view that performance of a variety of visual tasks can be facilitated by the presentation of an auditory cue from the same location shortly beforehand.” (p. 158; Italics added). In simulated environments such as ours, warnings can be provided at any arbitrary moment. In reality, however, collision warning systems that provide early warnings may cause false positives due to sensor noise and the inherent uncertainty about how a given traffic situation will evolve [55,56,57,58]. A possible solution is to use artificial intelligence that predicts early in time whether a traffic situation will become hazardous [59]. Yet another option is to provide early warnings with the help of vehicle-to-vehicle communication [60, 61]. That is, surrounding cars may communicate their upcoming braking and lane changing maneuvers before initiating these maneuvers. It is noted that if drivers are drowsy and have their eyes closed, auditory warnings, by definition, precede visual information [19]. Future research could evaluate the effectiveness of directional warnings in driving for different degrees of asynchrony between visual information and auditory cues.

5 Conclusion

It is concluded that ipsilateral warnings are perceived as more satisfactory than contralateral warnings, while response accuracy and response times do not differ significantly between the two. Compared to beeps, directional warnings (both ipsilateral and contralateral) improve performance in situations where visual information is scarce or unavailable. Furthermore, the current study highlighted the powerful role of vision, as demonstrated by the fact that drivers placed their first fixation on situation-specific stimuli about 220 ms after the start of the trial.

Data Availability

A demo video and the MATLAB code used for the analyses are available at https://doi.org/10.4121/19524448.

References

Bengler K, Dietmayer K, Farber B, Maurer M, Stiller C, Winner H (2014) Three decades of driver assistance systems: Review and future perspectives. IEEE Intell Transp Syst Mag 6:6–22. https://doi.org/10.1109/MITS.2014.2336271

Narote SP, Bhujbal PN, Narote AS, Dhane DM (2018) A review of recent advances in lane detection and departure warning system. Pattern Recogn 73:216–234. https://doi.org/10.1016/j.patcog.2017.08.014

Stanton NA, Edworthy J (1999) Human factors in auditory warnings. Ashgate, Aldershot, UK

Stokes A, Wickens C, Kite K (1990) Display technology. Human factors concepts. Society of Automotive Engineers, Warrendale, PA

Makous JC, Middlebrooks JC (1990) Two-dimensional sound localization by human listeners. J Acoust Soc Am 87:2188–2200. https://doi.org/10.1121/1.399186

Stevens SS, Newman EB (1936) The localization of actual sources of sound. Am J Psychol 48:297–306. https://doi.org/10.2307/1415748

Gang N, Sibi S, Michon R, Mok B, Chafe C, Ju W (2018) Don’t be alarmed: Sonifying autonomous vehicle perception to increase situation awareness. In: Proceedings of the 10th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (pp. 237–246). Toronto, Canada. https://doi.org/10.1145/3239060.3265636

Wang M, Lyckvi SL, Chen C, Dahlstedt P, Chen F (2017) Using advisory 3D sound cues to improve drivers' performance and situation awareness. In: Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (pp. 2814–2825). Denver, CO, USA. https://doi.org/10.1145/3025453.3025634

Spence C (2013) Just how important is spatial coincidence to multisensory integration? Evaluating the spatial rule. Ann N Y Acad Sci 1296:31–49. https://doi.org/10.1111/nyas.12121

Verbist K, Boer ER, Mulder M, Van Paassen MM (2009) Car lane-keeping using auditory feedback. Delft University of Technology, Delft, The Netherlands

Bazilinskyy P, Van der Geest L, Van Leeuwen S, Numan B, Pijnacker J, De Winter J (2016) Blind driving by means of auditory feedback. IFAC-PapersOnLine 49:525–530. https://doi.org/10.1016/j.ifacol.2016.10.612

Begault DR (1993) Head-up auditory displays for traffic collision avoidance system advisories: A preliminary investigation. Hum Factors 35:707–717. https://doi.org/10.1177/001872089303500409

Berman BA, Kochan JA, Burian BK, Pruchnicki S, Christopher B, Silverman E (2017) Alerts and cues on the flight deck: Analysis and application in training (Technical Memorandum NASA/TM—2017-219720). Ames Research Center, National Aeronautics and Space Administration, Moffett Field, CA, USA.

Veitengruber JE, Boucek GP Jr, Smith WD (1977) Aircraft alerting systems criteria study volume 1: Collation and analysis of aircraft alerting systems data (FAA-RD-76-222-1). Boeing Commercial Airplane Company, Seattle.

Ho C, Spence C (2005) Assessing the effectiveness of various auditory cues in capturing a driver’s visual attention. J Exp Psychol Appl 11:157–174. https://doi.org/10.1037/1076-898X.11.3.157

Liu YC, Jhuang JW (2012) Effects of in-vehicle warning information displays with or without spatial compatibility on driving behaviors and response performance. Appl Ergon 43:679–686. https://doi.org/10.1016/j.apergo.2011.10.005

Sanghavi H, Jeon M, Nadri C, Ko S, Sodnik J, Stojmenova K (2021) Multimodal takeover request displays for semi-automated vehicles: Focused on spatiality and lead time. In: Krömker H (ed), International Conference on Human-Computer Interaction in Mobility, Transport, and Automotive Systems (pp. 315–334). Springer, Cham. https://doi.org/10.1007/978-3-030-78358-7_22

Wang DYD, Pick RF, Proctor RW, Ye Y (2007) Effect of a side collision-avoidance signal on simulated driving with a navigation system. In: Proceedings of the Fourth International Driving Symposium on Human Factors in Driver Assessment, Training and Vehicle Design (pp. 206–211). University of Iowa, Iowa City. https://doi.org/10.17077/drivingassessment.1238

Roßmeier M, Grabsch H-P, Rimini-Doering M (2005) Blind flight: Do auditory lane departure warnings attract attention or actually guide action? In: Proceedings of ICAD 05-Eleventh Meeting of the International Conference on Auditory Display. Limerick, Ireland.

Suzuki K, Jansson H (2003) An analysis of driver’s steering behaviour during auditory or haptic warnings for the designing of lane departure warning system. Rev Automot Eng 24:65–70. https://doi.org/10.1016/S0389-4304(02)00247-3

Zhang Y, Yan X, Yang Z (2015) Discrimination of effects between directional and nondirectional information of auditory warning on driving behavior. Discret Dyn Nat Soc 2015:980281. https://doi.org/10.1155/2015/980281

Petermeijer S, Bazilinskyy P, Bengler K, De Winter J (2017) Take-over again: Investigating multimodal and directional TORs to get the driver back into the loop. Appl Ergon 62:204–215. https://doi.org/10.1016/j.apergo.2017.02.023

Wang DY, Proctor RW, Pick DF (2003) Stimulus-response compatibility effects for warning signal and steering response. In: Proceedings of the Second International Driving Symposium on Human Factors in Driver Assessment (pp. 226–230). The University of Iowa, Iowa City. https://doi.org/10.17077/drivingassessment.1128

Cohen-Lazry G, Katzman N, Borowsky A, Oron-Gilad T (2019) Directional tactile alerts for take-over requests in highly-automated driving. Transport Res F: Traffic Psychol Behav 65:217–226. https://doi.org/10.1016/j.trf.2019.07.025

Straughn SM, Gray R, Tan HZ (2009) To go or not to go: Stimulus-response compatibility for tactile and auditory pedestrian collision warnings. IEEE Trans Haptics 2:111–117. https://doi.org/10.1109/TOH.2009.15

Chen J, Šabić E, Mishler S, Parker C, Yamaguchi M (2022) Effectiveness of lateral auditory collision warnings: should warnings be toward danger or toward safety? Hum Factors 64:418–435. https://doi.org/10.1177/0018720820941618

Gold C, Berisha I, Bengler K (2015) Utilization of drivetime–Performing non-driving related tasks while driving highly automated. Proc Human Factors Ergon Soc Ann Meeting 59:1666–1670. https://doi.org/10.1177/1541931215591360

Kalb L, Streit L, Bengler K (2018) Multimodal priming of drivers for a cooperative take-over. In: Proceedings of the 21st International Conference on Intelligent Transportation Systems (ITSC) (pp. 1029–1034). Maui, HI. https://doi.org/10.1109/ITSC.2018.8569619

Politis I, Brewster S, Pollick F (2014) Speech tactons improve speech warnings for drivers. In: Proceedings of the 6th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, New York. https://doi.org/10.1145/2667317.2667318

Tass International (2021) PreScan overview [Computer software]. https://tass.plm.automation.siemens.com/prescan-overview

Lu Z, Happee R, De Winter JCF (2020) Take over! A video-clip study measuring attention, situation awareness, and decision-making in the face of an impending hazard. Transport Res F Traffic Psychol Behav 72:211–225. https://doi.org/10.1016/j.trf.2020.05.013

Naturalsoft (2018) NaturalReader. https://www.naturalreaders.com

Apple (2018) GarageBand for Mac. https://www.apple.com/mac/garageband

Van der Laan JD, Heino A, De Waard D (1997) A simple procedure for the assessment of acceptance of advanced transport telematics. Transport Res C Emerg Technol 5:1–10. https://doi.org/10.1016/S0968-090X(96)00025-3

Hart SG, Staveland LE (1988) Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. Adv Psychol 52:139–183. https://doi.org/10.1016/S0166-4115(08)62386-9

Caffier PP, Erdmann U, Ullsperger P (2003) Experimental evaluation of eye-blink parameters as a drowsiness measure. Eur J Appl Physiol 89:319–325. https://doi.org/10.1007/s00421-003-0807-5

Eisma YB, Cabrall CDD, De Winter JCF (2018) Visual sampling processes revisited: Replicating and extending Senders (1983) using modern eye-tracking equipment. IEEE Trans Human Mach Syst 48:526–540. https://doi.org/10.1109/thms.2018.2806200

Nyström M, Holmqvist K (2010) An adaptive algorithm for fixation, saccade, and glissade detection in eyetracking data. Behav Res Meth 42:188–204. https://doi.org/10.3758/BRM.42.1.188

Morey RD (2008) Confidence intervals from normalized data: A correction to Cousineau (2005). Tutorial Quant Methods Psychol 4:61–64. https://doi.org/10.20982/tqmp.04.2.p061

Hellevik O (2009) Linear versus logistic regression when the dependent variable is a dichotomy. Qual Quant 43:59–74. https://doi.org/10.1007/s11135-007-9077-3

Cumming G, Finch S (2005) Inference by eye: confidence intervals and how to read pictures of data. Am Psychol 60:170–180. https://doi.org/10.1037/0003-066X.60.2.170

Zhang B, De Winter J, Varotto S, Happee R, Martens M (2019) Determinants of take-over time from automated driving: A meta-analysis of 129 studies. Transport Res F: Traffic Psychol Behav 64:285–307. https://doi.org/10.1016/j.trf.2019.04.020

Green M (2000) "How long does it take to stop?" Methodological analysis of driver perception-brake times. Transport Hum Factors 2:195–216. https://doi.org/10.1207/STHF0203_1

Van de Rijt LP, Roye A, Mylanus EA, Van Opstal AJ, Van Wanrooij MM (2019) The principle of inverse effectiveness in audiovisual speech perception. Front Hum Neurosci 13:335. https://doi.org/10.3389/fnhum.2019.00335

Fitts PM, Seeger CM (1953) S-R compatibility: Spatial characteristics of stimulus and response codes. J Exp Psychol 46:199–210. https://doi.org/10.1037/h0062827

Simon JR (1967) Choice reaction time as a function of auditory SR correspondence, age and sex. Ergonomics 10:659–664. https://doi.org/10.1080/00140136708930919

Müsseler J, Aschersleben G, Arning K, Proctor RW (2009) Reversed effects of spatial compatibility in natural scenes. Am J Psychol 122:325–336. https://www.jstor.org/stable/27784406

Tabone W, De Winter JCF, Ackermann C, Bärgman J, Baumann M, Deb S, Emmenegger C, Habibovic A, Hagenzieker M, Hancock PA, Happee R, Krems J, Lee JD, Martens M, Merat N, Norman DA, Sheridan TB, Stanton NA (2021) Vulnerable road users and the coming wave of automated vehicles: Expert perspectives. Transp Res Interdiscip Perspec 9:100293. https://doi.org/10.1016/j.trip.2020.100293

Sheridan TB (2002) Humans and automation: Systems design and research issues. Santa Monica/New York: Human Factors and Ergonomics Society/Wiley

Smith PL, Little DR (2018) Small is beautiful: In defense of the small-N design. Psychonomic Bull Rev 25:2083–2101. https://doi.org/10.3758/s13423-018-1451-8

Gold C, Damböck D, Lorenz L, Bengler K (2013) “Take over!” How long does it take to get the driver back into the loop? In: Proceedings of the Human Factors and Ergonomics Society Annual Meeting vol 57, pp 1938–1942. https://doi.org/10.1177/1541931213571433

Hault-Dubrulle A, Robache F, Pacaux MP, Morvan H (2011) Determination of pre-impact occupant postures and analysis of consequences on injury outcome. Part I: A driving simulator study. Accid Anal Prev 43:66–74. https://doi.org/10.1016/j.aap.2010.07.012

Kidd DG, Cicchino JB, Reagan IJ, Kerfoot LB (2017) Driver trust in five driver assistance technologies following real-world use in four production vehicles. Traffic Inj Prev 18:S44–S50. https://doi.org/10.1080/15389588.2017.1297532

Simpson CA, Marchionda-Frost K (1984) Synthesized speech rate and pitch effects on intelligibility of warning messages for pilots. Hum Factors 26:509–517. https://doi.org/10.1177/001872088402600503

Brown TL, Lee JD, McGehee DV (2001) Human performance models and rear-end collision avoidance algorithms. Hum Factors 43:462–482. https://doi.org/10.1518/001872001775898250

Fecher N, Hoffmann J (2016) Driver warning elements. In: Winner H, Hakuli S, Lotz F, Singer C (eds) Handbook of driver assistance systems (pp. 857–870). Springer, Cham. https://doi.org/10.1007/978-3-319-12352-3_37

Navarro J, Yousfi E, Deniel J, Jallais C, Bueno M, Fort A (2016) The impact of false warnings on partial and full lane departure warnings effectiveness and acceptance in car driving. Ergonomics 59:1553–1564. https://doi.org/10.1080/00140139.2016.1158323

Winkler S, Werneke J, Vollrath M (2016) Timing of early warning stages in a multi stage collision warning system: Drivers’ evaluation depending on situational influences. Transport Res F Traffic Psychol Behav 36:57–68. https://doi.org/10.1016/j.trf.2015.11.001

Li Y, Karim MM, Qin R (2021) Who is better at anticipating traffic crashes, human or artificial intelligence? A gaze data-based exploratory study. https://arxiv.org/abs/2108.01599

Tan HS, Huang J (2006) DGPS-based vehicle-to-vehicle cooperative collision warning: engineering feasibility viewpoints. IEEE Trans Intell Transp Syst 7:415–428. https://doi.org/10.1109/TITS.2006.883938

Yang W, Wan B, Qu X (2020) A forward collision warning system using driving intention recognition of the front vehicle and V2V communication. IEEE Access 8:11268–11278. https://doi.org/10.1109/ACCESS.2020.2963854

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

de Winter, J., Hu, J. & Petermeijer, B. Ipsilateral and contralateral warnings: effects on decision-making and eye movements in near-collision scenarios. J Multimodal User Interfaces 16, 303–317 (2022). https://doi.org/10.1007/s12193-022-00390-6

DOI: https://doi.org/10.1007/s12193-022-00390-6