Abstract

In this work, we studied a two-component mixture model with stochastic dominance constraint, a model arising naturally from many genetic studies. To model the stochastic dominance, we proposed a semiparametric modelling of the log of density ratio. More specifically, when the log of the ratio of two component densities is in a linear regression form, the stochastic dominance is immediately satisfied. For the resulting semiparametric mixture model, we proposed two estimators, maximum empirical likelihood estimator (MELE) and minimum Hellinger distance estimator (MHDE), and investigated their asymptotic properties such as consistency and normality. In addition, to test the validity of the proposed semiparametric model, we developed Kolmogorov–Smirnov type tests based on the two estimators. The finite-sample performance, in terms of both efficiency and robustness, of the two estimators and the tests were examined and compared via both thorough Monte Carlo simulation studies and real data analysis.

Similar content being viewed by others

1 Introduction

We consider the two-sample model introduced by Abedin (2018). Specifically, suppose there is a sample from a two-component mixture population \(H=(1-\lambda )F+\lambda G\), where the mixing proportion \(\lambda \) is strictly between 0 and 1, and F, G and H are cumulative distribution functions (c.d.f.) such that \(F\ne G\) and F and G satisfy the stochastic dominance constraint \(F\ge G\). In addition, we have a separate sample identified as from the first component F. As a result, the data and model are formularized as

where f, g and h are the corresponding probability density functions (p.d.f.) of F, G and H, respectively. Here f, g and \(\lambda \) are all unknown. The problem of our interest is to make inferences for \(\lambda \), treating f and g as nuisance parameters, and to estimate the likelihood of a new observation coming from a particular component.

A motivating example that made model (1) arise is the problem to identify differentially expressed genes in case-control studies (e.g., contract vs. not contract COVID-19) of genetic data, such as in Chen and Wu (2013), Kharchenko et al. (2014), Lu et al. (2015) and Ficklin et al. (2017), among many others. In order to solve the problem, frequently a proposed test is applied repeatedly to each single gene. The distribution of those thousands of test statistic values is thus a two-component mixture \((1-\lambda )f+\lambda g\), where f is the p.d.f. of the statistic under the null hypothesis that a particular gene is not differentially expressed while g is that under the alternative hypothesis that a particular gene is differentially expressed, and \(\lambda \) is the proportion of differentially expressed genes. Usually f is much easier to derive theoretically than g, otherwise if f is unknown in practice, in many studies pathologists or experts can confidently identify some genes that are not differentially expressed so that we have a sample (test statistic values of those genes) from f. The latter case is exactly model (1). The dominance constraint \(F\ge G\) is very intuitive in many cases where the statistic values of marker genes are likely larger (or smaller) than those of non-marker genes (e.g., Student’s t, ANOVA test statistic). In this motivating example one may argue that the genes, and thus the values of a particular statistic, are not i.i.d. as assumed in model (1). Nevertheless, the distribution of the statistic over all genes is assumed nonparametrically unknown which will provide enough flexibility to weaken, if not remove, the effect of dependence. By Bayes’ rule the probability of a gene with test statistic value y being a marker gene is given by

Once \(\lambda \), f and g are estimated, one can estimate according to (2) the probability that a particular gene is differentially expressed.

Besides the motivating example, model (1) could also be used to model many other real data structure. For more examples readers are referred to Abedin (2018) and Wu and Abedin (2021) which, to our best knowledge, are the only works in literature on model (1). In these two works, the authors proposed and studied two estimators. The first one is based on c.d.f. estimations with use of the dominance inequality, while the second is a maximum likelihood estimator (MLE) based on multinomial approximation. Another work on a model closely related to model (1) is given in Smith and Vounatsou (1997). However their model doesn’t assume the stochastic dominance constraint but instead makes a generally stronger assumptions on the p.d.f.s f and g.

This paper is organized in the following way. In Sect. 2, we introduce a semiparametric mixture model in order to accommodate the stochastic dominance constraint. A maximum empirical likelihood estimator (MELE) and a minimum Hellinger distance estimator (MHDE) of the unknown parameters are proposed in Sects. 3 and 4, respectively. Their asymptotic properties such as consistency and asymptotic normality are also presented in these two sections. Section 5 is devoted to testing the validity of the proposed semiparametric model with use of the MELE and MHDE. The finite-sample performance of the two proposed estimators and the goodness-of-fit tests are assessed in Sect. 6 via thorough Monte Carlo simulation studies, while the implementation of the proposed methods are demonstrated in Sect. 7 through two real data examples. Finally the concluding remarks are presented in Sect. 8. Some conditions for the theoretical results are deferred to Appendix, while the derivations and proofs of the theorems and lemmas are given in a separate supplementary document to save space.

2 A semiparametric modelling

Abedin (2018) and Wu and Abedin (2021) proposed and studied two consistent estimators for model (1). However, due to the non-identifiability of the model in general, it is difficult to obtain an estimator with good asymptotic properties such as asymptotic normality. Therefore, we introduce in what follows a semiparametric model which will be proved identifiable and will ensure the stochastic dominance.

Let Z denote a binary response variable and Y the associated covariate. Then the logistic regression model is given by

where \(r(y)=(r_{1}(y),\ldots ,r_{p}(y))^\top \) is a given \(p\times 1\) vector of functions of y, \(\alpha ^{*}\) is the intercept parameter and \(\beta =(\beta _{1},\ldots ,\beta _{p})^\top \) is the \(p \times 1\) coefficient parameter vector. In case-control studies data are collected retrospectively. For example, a random sample of subjects with disease \(Z=1\) (‘case’) and a separate random sample of subjects without disease \(Z=0\) (‘control’) are selected with Y observed for each subject. Let \(\pi =P(Z=1)=1-P(Z=0)\). Let f(y) and g(y) denote the conditional p.d.f.s of Y given \(Z=0\) and \(Z=1\) respectively, then it follows from the Bayes’ rule that

where \(\alpha =\alpha ^{*}+\log [(1-\pi )/\pi ]\). Now model (1) is reduced to the semiparametric mixture model

with \(f\in {\mathcal {F}}\) and the parameter vector of interest \(\theta =\left(\lambda ,\alpha ,\beta ^\top \right)^\top \in \varTheta \subseteq {\mathbb {R}}^{p+2}\) such that \(\int e^{\alpha +\beta ^\top r(x)} f(x){\text {d}}x=1\), where

The relationship (3) between two p.d.f.s was first proposed by Anderson (1972). It essentially assumes that the log-likelihood ratio of the two p.d.f.s is linear in the observations. With \(r(x)=x\) or \(r(x)=(x,x^{2})^\top \), it has wide applications in logistic discriminant analysis (Anderson, 1972, 1979) and case-control studies (Prentice and Pyke, 1979; Breslow and Day, 1980). For \(r(x)=x\), (3) encompasses many common distributions, including two exponential distributions with different means and two normal distributions with common variance but different means. Model (3) with \(r(x)=(x,x^2)^\top \) also coincides with the exponential family of densities considered in Efron and Tibshirani (1996). Moreover, model (3) can be viewed as a biased sampling model with the ‘tilt’ weight function \(\exp \left[\alpha +\beta ^\top r(x)\right]\) depending on the unknown parameters \(\alpha \) and \(\beta \). Note that the test of equality of f and g can be regarded as a special case of model (4) with \(\beta =0\).

Qin and Zhang (1997) discussed a goodness-of-fit test for logistic regression based on case-control data where the first sample comes from the control group f and independently the second sample comes from the case group g. They proposed a Kolmogorov–Smirnov type statistic to test the validity of (3) with \(r(y)=y\). When data from both the mixture and the two individual components satisfying (3) are available, Qin (1999) developed an empirical likelihood ratio-based statistic for constructing confidence intervals of the mixing proportion. For the same model and data structure, Zhang (2002) proposed an EM algorithm to calculate the MELE while (Zhang, 2006) proposed a score statistic to test the mixing proportion. Chen and Wu (2013) employed (3) to model differentially expressed genes of acute lymphoblastic leukemia patients and acute myeloid leukemia patients.

For model (4) with \(r(y)=y\), if \(\beta >0\) then we can easily check that p(y) in (2), the probability of y being from g, is a monotonic increasing function. Further we can prove in the next theorem that if \(\beta >0\), then the stochastic dominance constraint \(F\ge G\) is implied by (3). We call \((1,r(y))^\top \) linearly independent on the support, say \(\varOmega \), of f if \((1,r(y))^\top \), as a vector of functions of y, is linearly independent over \(\varOmega \).

Theorem 1

If \((1,r(y))^\top \) is linearly independent on the support of f, then model (4) with parameter space (5) is identifiable. Specially, (4) is identifiable when \(r(y)=y\); if further \(\beta >0\), then \(F\ge G\).

Even though Theorem 1 tells us that the condition (3) is stronger than the original stochastic dominance constraint, the resulting semiparametric mixture model (4) is identifiable and has better interpretation than the nonparametric mixture model (1). In addition, the estimation of (4) may possess better asymptotic properties than those of (1). One may also consider higher order polynomial for r(y) and find sufficient conditions for \(F\ge G\). For model simplicity, we focus on model (4) with \(r(x)=x\) and \(\beta >0\) (to ensure the dominance \(F\ge G\)) throughout this paper. We first propose two estimators for this model.

3 MELE of the parameters

Let \((T_1,\ldots ,T_{m+n})^\top = ( X_1,\ldots ,X_m,Y_1,\ldots ,Y_n)^\top \) be the pooled data. In MELE, F is assumed a step function with \(p_i=dF(T_i)\) (jump at \(T_i\)) such that \(\sum _{i=1}^{m+n}p_i=1\). As a result, \(dH(Y_j)=p_{m+j}\left[ (1-\lambda )+\lambda e^{\alpha +\beta Y_j}\right] \). Consequently, the empirical likelihood function of (4) with \(r(y)=y\) and \(\beta >0\) is

subject to constraints \(\beta > 0\), \(0<\lambda <1\), \(p_i\ge 0\), \(\sum \nolimits _{i=1}^{m+n}p_i=1\), and \(\sum \nolimits _{i=1}^{m+n}p_{i} e^{\alpha +\beta T_i}=1\). The constraint \(\beta >0\) won’t limit the use of model (4) and proposed estimation methods, as we can always switch F with G if \(\beta <0\) in model (4). Though in this work we assume both F and G are two continuous populations, we can see that the above likelihood is also valid for discrete populations. To find the MELE, we use the Lagrange multipliers and maximize

By taking partial derivatives we obtain (details in the supplementary document)

where \(\rho _N=n/(m+n)\) with \(N=m+n\). Therefore ignoring a constant, the empirical log-likelihood function is

Let \({\hat{\theta }}_{\text {MELE}}=({\hat{\lambda }}_{\text {MELE}},{\hat{\alpha }}_{\text {MELE}},\hat{\beta }_{\text {MELE}})^\top \) denote the MELE of \(\theta \), i.e., the maximizer of the log-likelihood function l in (7). In our numerical studies, we used Optim in the R-package to find \(\hat{\theta }_{\text {MELE}}\). Then the MELE of p(y) in (2) is

We now examine the asymptotic properties of the MELE \({\hat{\theta }}_{\text {MELE}}\). Define

with l given in (7), and let

where

with

Since \(f\in {\mathcal {F}}\) and \(r(x)=x\), implying \(\int e^{\beta y}f(y){\text {d}}y<\infty \), it is easy to show that all the \(S_{ij}\)’s defined above are finite.

Lemma 1

Assume \(\rho _{N}\rightarrow \rho \) as \(N\rightarrow \infty \), with \(0<\rho <1\). Then \(S_{N} \overset{P}{\longrightarrow }\rho (1-\rho ) S\) as \(N\rightarrow \infty \), where \(S_N\) and S are defined in (9) and (10) respectively and \( \overset{P}{\rightarrow }\) denotes convergence in probability.

The condition \(\rho _{N}\rightarrow \rho \) with \(0<\rho <1\), as \(N\rightarrow \infty \), in Lemma 1 requires that the sample sizes m and n converge to infinity at the same order. With the results in Lemma 1, the following theorem gives the asymptotic normality of the MELE \({\hat{\theta }}_{\text {MELE}}\). Let

where

with \(w_1\) and \(w_2\) defined in (11).

Theorem 2

Assume \(\rho _{N}\rightarrow \rho \) as \(N\rightarrow \infty \) with \(0<\rho <1\) and S defined in (10) is invertible. Then under model (4) with parameter space (5) and some regularity conditions (for MELE in general; see Qin and Lawless, 1994),

where \(\varSigma =\frac{1}{\rho (1-\rho )}S^{-1}VS^{-1}\) with S and V defined in (10) and (12) respectively, and \( \overset{D}{\rightarrow }\) denotes convergence in distribution. In addition, V and further \(\varSigma \) are positive definite.

In Theorem 2, the assumption of S being invertible is generally true except for some special cases such as \(\lambda =0\), or \(\alpha =\beta =0\), or f is a singleton. When \(0<\lambda <1\), \(\beta \ne 0\) and f is continuous (or an even weaker condition that the support of f contains at least two points), we would expect S being invertible. With the results in Theorem 2, one can easily make MELE-based inferences, such as Wald test, about the parameter when the unknown \(\theta \) in \(\varSigma \) is replaced with some consistent estimators such as the MELE.

4 MHDE of the parameters

Though MELE is efficient, it is generally non-robust against outliers and model misspecifications. As a robust alternative, we propose in this section an MHDE for the unknown parameters in model (4).

The Hellinger distance between two functions \(f_1\) and \(f_2\) is defined as \(\Vert f_1^{1/2}-f_2^{1/2}\Vert \), the \(L^2\)-norm of root functions. For a fully parametric model \(\{h_\theta :\theta \in \varTheta \}\) with \(\varTheta \) the parameter space, the MHDE of \(\theta \) is defined as

where \({\hat{h}}\) is an appropriate nonparametric estimator of \(h_\theta \). MHDE was first introduced by Beran (1977) for fully parametric models. Beran (1977) showed that the MHDE for parametric model has both full efficiency and good robustness properties. Lindsay (1994) outlined the comparison between MHDE and MLE in terms of robustness and efficiency and showed that MHDE and MLE are members of a larger class of efficient estimators with various second-order efficiency properties. However, the literature on MHDE for mixture models is not redundant. Lu et al. (2003) considered the MHDE for mixture of Poisson regression models. MHDE of mixture complexity for finite mixture models was investigated by Woo and Sriram (2006, 2007). Recently, MHDE has been extended from parametric models to semiparametric models. Wu et al. (2010) proposed an MHDE for two-sample case-control data under model (3) and investigated the asymptotic properties and robustness of the proposed estimator. Xiang et al. (2014) proposed a minimum profile Hellinger distance estimator (MPHDE) for the two-component semiparametric mixture model studied by Bordes et al. (2006) where one component is known and the other is an unknown symmetric function with unknown location parameter. Wu et al. (2017) and Wu and Zhou (2018) proposed algorithms for calculating the MPHDE and the MHDE, respectively, for the two-component semiparametric location-shifted mixture model. Inspired by these works, we propose to use the MHDE to estimate the parameters in (4).

In model (4), even though \(\alpha \) and \(\beta \) can possibly take any value on the real line, we can essentially use intervals that are large enough to cover their true values. So for practical purpose, we can assume that \(\theta \in \varTheta \) with \(\varTheta \) a compact subset of \(\mathbb {R}^{3}\). To give the MHDE for model (4), note that the MHDE defined in (13) is not available in practice since the f in \(h_t (x)=(1-t_1+t_1 e^{t_2+t_3 x})f(x)\), with \(t=(t_1,t_2,t_3)^\top \), is unknown. Intuitively, we can use an appropriate nonparametric density estimator, say \(f_{m}\), based on \(X_1,\dots ,X_m\) to replace f and apply the plug-in rule to estimate \(h_t\) by

With another appropriate nonparametric density estimator, say \(h_n\), of \(h_\theta \) based on \(Y_1,\dots ,Y_n\), we define the MHDE of \(\theta =(\lambda ,\alpha ,\beta )^\top \) as

That is, \(\hat{\theta }_{\text {MHDE}}\) is the minimizer t of the Hellinger distance between the estimated parametric model \(\hat{h}_t\) and the nonparametric density estimator \(h_{n}\). In this work, we use kernel density estimators

where \(K_{0}\) and \(K_{1}\) are kernel p.d.f.s and bandwidths \(b_{n}\) and \(b_{m}\) are positive sequences such that \(b_{m}\rightarrow 0\) as \(m \rightarrow \infty \) and \(b_{n} \rightarrow 0\) as \(n \rightarrow \infty \). The MHDE of p(y) in (2) is given by

Note that in (15) we do not impose any restriction on \(\hat{h}_t\) to make it a density function. The reason behind this is that a \(t\in \varTheta \) that makes \(\hat{h}_t\) not a density can still make \(h_t\) a density. The true parameter value \(\theta \) may not make \(\hat{h}_{\theta }\) a density, but it is not reasonable to exclude \(\theta \) as the estimate \({\hat{\theta }}_{\text {MHDE}}\) of itself. As the explicit expression of \({\hat{\theta }}_{\text {MHDE}}\) does not exist, one needs to use iterative methods such as Newton-Raphson to numerically calculate it. Karunamuni and Wu (2011) has shown for parametric models that with an appropriate initial value, even one-step iteration works well and gives a quite accurate approximation of MHDE.

We now examine the asymptotic properties of the MHDE \(\hat{\theta }_{\text {MHDE}}\) given in (15). Let \({\mathcal {H}}\) be the set of all p.d.f.s with respect to Lebesgue measure on the real line. The conditions (D1)–(D3) and (C0)–(C11) are listed in Appendix. The following theorem gives the existence, Hellinger-metric consistency, and uniqueness of the proposed MHDE.

Theorem 3

Suppose \(\varTheta \) is a compact parameter space and (D1) holds for all \(t\in \varTheta \). Then

-

(i)

For every \(\varphi \in {\mathcal {H}}\) and \(f_m\) defined in (16) with \(K_0\) compactly supported, there exist \(T(f,\varphi )\) and \(T(f_m, \varphi )\) in \(\varTheta \) satisfying (15).

-

(ii)

Suppose that \(m,n\rightarrow \infty \) as \(N\rightarrow \infty \) and \(\theta =T(f, \varphi )\) is unique for \(\varphi \in {\mathcal {H}}\). Then \(\theta _N = T(f_m,\varphi _n)\rightarrow \theta \) as \(N\rightarrow \infty \) for any density sequences \(f_m\) and \(\varphi _n\) such that \(\Vert \varphi _n^{1/2}-\varphi ^{1/2}\Vert \rightarrow 0\) and \(\sup _{t\in \varTheta }\Vert {\hat{h}}_t^{1/2}-h_t^{1/2}\Vert \rightarrow 0\) as \(N\rightarrow \infty \) with \({\hat{h}}_t\) given in (14).

-

(iii)

\(T(f,h_\theta )=\theta \) uniquely for any \(\theta \in \varTheta \).

With the results given in Theorem 3, the consistency of our proposed MHDE can be proved and is presented in the following theorem.

Theorem 4

Let \(m,n\rightarrow \infty \) as \(N\rightarrow \infty \). Suppose that (D1) holds for any \(\theta \in \varTheta \) and the bandwidths \(b_{m}\) and \(b_{n}\) in (16) and (17) respectively satisfy \(b_{m},b_{n}\rightarrow 0\) and \(m b_{m},n b_{n}\rightarrow \infty \) as \(N\rightarrow \infty \). Further suppose that either (D2) or (D3) holds. Then \(\Vert f_m^{1/2}-f^{1/2}\Vert {\mathop {\rightarrow }\limits ^{P}} 0\), \(\Vert h_{n}^{1/2}-h_{\theta }^{1/2}\Vert {\mathop {\rightarrow }\limits ^{P}} 0\) and \(\sup _{t\in \varTheta }\Vert \hat{h}_t^{1/2}-h_t^{1/2}\Vert {\mathop {\rightarrow }\limits ^{P}}0\) as \(N\rightarrow \infty \). Furthermore, \(\hat{\theta }_{\text {MHDE}}{\mathop {\rightarrow }\limits ^{P}}\theta \) as \(N\rightarrow \infty \), where \(\hat{\theta }_{\text {MHDE}}\) is defined in (15) with \(f_{m}\), \(h_{n}\) and \(\hat{h}_t\) given in (16), (17) and (14), respectively.

We derive in the next theorem an expression of the bias term \(\hat{\theta }_{\text {MHDE}}-\theta \). Define symmetric matrix

where

Let

where

Theorem 5

Suppose \(\theta \in int(\varTheta )\), \(\varDelta (\theta )\) defined in (19) is invertible, (C2) and assumptions in Theorem 4 hold. Then it follows that

where \(\hat{\theta }_{\text {MHDE}}\) is defined by (15) and \(R_{N}\) is a \(3\times 3\) matrix with elements tending to zero in probability as \(N\rightarrow \infty \).

From this result, we obtain immediately the asymptotic distribution of the MHDE.

Theorem 6

Suppose that \({\hat{\theta }}_{\text {MHDE}}\) defined in (15) satisfies (21). Further suppose that conditions \((C0)--(C9)\) hold. Then the asymptotic distribution of \(\sqrt{N} (\hat{\theta }_{\text {MHDE}}-\theta )\) is \(N(0,\varSigma )\), where \(\varSigma \) is defined by

with

In Theorems 5 and 6, the assumption of \(\varDelta (\theta )\) being invertible is generally true except for some special cases such as \(\lambda =0\), or \(\alpha =\beta =0\), or f is a singleton.

Since MELE is a likelihood-based method, we expect MELE asymptotically more efficient than MHDE. Looking at the asymptotic covariance matrices given in Theorems 2 and 6 for MELE and MHDE respectively, we note that roughly speaking \(|S_{ij}|\) is smaller than \(|\varDelta _{ij}|\) while \(|{\bar{\varDelta }}_{ij}|\) is much bigger than both, due to the fact that the denominator \(w_1\) or \(w_2\) can control the exponential terms in the integrands. As a result, the asymptotic variances of the MELEs of \(\lambda \), \(\alpha \) and \(\beta \) are smaller than those of the corresponding MHDEs.

To see the asymptotic relative efficiency of the MHDE with respect to the MELE, we take the normal mixture \((1-\lambda )N(0,1)+\lambda N(\mu ,1)\), a model examined also in our later simulation studies, as an example and Table 1 below presents the asymptotic covariance matrices of the MELE and MHDE for \(\rho =0.5\) and various parameter settings \(\mu =.5, 1, 2\) (i.e., \((\alpha ,\beta )=(-.125, .5), (-.5,1), (-2,2)\) respectively) and \(\lambda =.05, .20, .50, .80, .95\). From Table 1 we see that, as expected, the elements in the covariance matrices of the MELE are almost always smaller than those of the MHDE, in absolute values. The difference in the asymptotic variances of the MELE and MHDE of \(\lambda \) or \(\beta \) is very small for smaller \(\mu \) (\(=.5\)) and smaller \(\lambda \) values. The relative efficiency of the MHDE with respect to the MELE deteriorates when \(\lambda \) increases or, especially when \(\mu \) increases. We also observe from Table 1 that the asymptotic variances of both the MELE and MHDE of \(\alpha \) and \(\beta \) decrease when \(\lambda \) increases, which is expected by the fact that the information of \(\alpha \) and \(\beta \) is contained in the second component only and the amount of such information increases when the mixing proportion \(\lambda \) of the second component increases. Comparatively, when \(\lambda \) increases, the asymptotic variances of both the MELE and MHDE of \(\lambda \) increase first, reach their maxima around \(\lambda =0.5\) and then decrease. This phenomenon can be explained by the fact that the estimation of \(\lambda \) is associated with a Bernoulli random variable with parameter \(\lambda \) and the variance of this random variable, \(\lambda (1-\lambda )\), as a function of \(\lambda \) has the same increasing-decreasing trend.

5 Test of the semiparametric model

In this section we discuss the validity of the semiparametric mixture model (4) or equivalently the relationship (3) between f and g, with \(r(x)=x\). Several goodness-of-fit test statistics for testing the relationship (3) in case-control studies are available in literature; see, for example, Qin and Zhang (1997), Zhang (1999, 2001, 2006), and Deng et al. (2009). For our semiparametric mixture model (4), we will construct Kolmogorov–Smirnov (K–S) type statistics based on the proposed MELE and MHDE.

The idea of K–S test statistic is to use the discrepancy between two c.d.f. estimates, one with the model assumption and the other without, to assess the validity of a model. For model (4) with \(r(x)=x\), we can use the empirical c.d.f. based on the first sample \(X_i\)’s as the first estimate and the MELE or MHDE based on both samples \(X_i\)’s and \(Y_i\)’s exploiting (4) as the second. We first look at the special case of testing \(\beta =0\) in model (4). When \(\beta =0\), we also have \(\alpha =0\), which implies the equality of the two components F and G and further the equality of F and H. The two-sample K–S statistic for testing the equality of F and H is

where \(N=m+n\), \({\hat{F}}\), \({\hat{H}}\) and \({\tilde{F}}_0\) denote the empirical c.d.f.s based on \(X_i\)’s, \(Y_i\)’s and \(T_i\)’s respectively, and \((T_1,\dots , T_N)^\top =(X_1,\dots ,X_m,Y_1,\dots , Y_n)^\top \) is the pooled sample. Note that \(\hat{F}\) and \(\hat{H}\) are the nonparametric MLEs of F and H respectively without the assumption of \(F=H\), whereas \(\tilde{F_{0}}\) is the nonparametric MLE of F with the assumption of \(F=H\). Now consider the general case of testing (4) with any \(\beta \). Motivated by the construction of the K–S statistic for testing \(\beta =0\), to test the validity of model (4) with \(r(x)=x\), we propose to use the test statistic

where \(\tilde{F}\) is an estimator of F based on either the MELE or the MHDE of \(\theta =(\lambda , \alpha ,\beta )^\top \) with model assumption (4). Recall in Sect. 3, with \(p_i=dF(T_i)\) and \(\rho _N=n/N\), the MELE \({\hat{p}}_i\) of \(p_i\) is given by (6). Then an estimator \({\tilde{F}}\) of F under model (4) is given by

If the \({\hat{\theta }}=({\hat{\lambda }}, {\hat{\alpha }},{\hat{\beta }})^\top \) in (6) and (23) is the MELE \({\hat{\theta }}_{MELE}\) that we constructed in Sect. 3, then the resulting \({\tilde{F}}_{MELE}\) is the actual MELE of F under (4) and we denote the corresponding test statistic in (22) as \({\text {KS}}_{MELE}\). This test statistic is similar to that in Qin and Zhang (1997), but they used it for case-control data instead of our more complicated mixture model (4). Intuitively, we can also use the MHDE \({\hat{\theta }}_{\text {MHDE}}\) given in (15) for \({\hat{\theta }}\), and then we denote the resulting \({\tilde{F}}\) in (23) and KS in (22) as \({\tilde{F}}_{\text {MHDE}}\) and \({\text {KS}}_{\text {MHDE}}\), respectively.

In our later numerical studies, we use bootstrap procedure to find the approximated distributions and critical values for \({\text {KS}}_{MELE}\) and \({\text {KS}}_{\text {MHDE}}\). To generate bootstrapping data, we randomly select independent samples \(X_i^*\)’s from \(d\tilde{F}(x)\) and \(Y_i^*\)’s from \((1-{\hat{\lambda }}+{\hat{\lambda }} e^{{\hat{\alpha }}+{\hat{\beta }} x})d\tilde{F}(x)\), where \({\hat{\theta }}\) and \({\tilde{F}}\) are either the MELEs \({\hat{\theta }}_{\text {MELE}}\) and \({\tilde{F}}_{\text {MELE}}\) or the MHDEs \({\hat{\theta }}_{\text {MHDE}}\) and \({\tilde{F}}_{\text {MHDE}}\), respectively. Note that both \(X_i^*\)’s and \(Y_i^*\)’s are selected from the pooled data \((T_1,\dots ,T_N)\) but with different probability distribution function. This means that some of the selected \(X_{i}^{*}\)’s could be values in the original second sample \(Y_i\)’s and some of the selected \(Y_{i}^{*}\)’s could be values in the original first sample \(X_i\)’s. Let \((T_{1}^{*},\ldots ,T_N^{*})\) denote the combined bootstrapping sample and \({\hat{\theta }}^*=({\hat{\lambda }}^{*},{\hat{\alpha }}^{*},{\hat{\beta }}^{*})^\top \) be either the MELE or the MHDE based on the bootstrapping samples \(X_i^*\)’s and \(Y_i^*\)’s. Then we can calculate the empirical function \({\hat{F}}\) based on \(X_i^*\)’s, the quantities in (6) and the function in (23) based on \(T_i^*\)’s and \({\hat{\theta }}^*\), with results denoted by \({\hat{F}}^*\), \({\hat{p}}^*_i\) and \({\tilde{F}}^*\), respectively. Then finally the bootstrapping KS test statistic is

We can generate 1000 bootstrapping samples to produce 1000 bootstrapping KS statistic values for both \({\text {KS}}_{\text {MELE}}\) and \({\text {KS}}_{\text {MHDE}}\) at the same time. Then the distributions, and further the critical values, of \({\text {KS}}_{\text {MELE}}\) and \({\text {KS}}_{\text {MHDE}}\) can be estimated by these respective 1000 statistic values.

6 Simulation studies

In this section we examine through Monte Carlo simulation studies the finite-sample performance of the proposed MELE and MHDE of the parameters in model (4) and the proposed K–S tests of this model. In our simulation study, we consider mixture of normals, Poissons or uniforms given in Table 2. We can easily check that all the five models satisfy the stochastic dominance condition, however only models M1-M4 satisfy relationship (4). Model M5 does not satisfy (4) since the two components have different support. Even though the focus of this paper is on continuous mixture models, we also want to check the performance of the proposed methods for discrete mixture models such as M3 and M4. For each model, the true values of \(\alpha \) and \(\beta \) are calculated and listed in Table 2.

6.1 Efficiency of the estimators

For each of the mixture models in Table 2, we consider different \(\lambda \) values varying from .05 to .95. Under each model, we take \(N=1000\) repeated random samplings and for each sampling we use two different sample sizes \((m,n)=(30,30)\) and (100, 100). In the kernel density estimators \(f_m\) and \(h_n\) given in (16) and (17) respectively, standard normal p.d.f. is used as both kernels \(K_0\) and \(K_1\), and the bandwidths \(b_m\) and \(b_n\) are chosen the same as in Silverman (1986) and Wu and Abedin (2021). The effect of different choice of kernel on kernel estimator is trivial, while the bandwidth has more influence on the finite-sample performance and the convergence rate of the nonparametric kernel estimator. Nevertheless, Theorems 4–6 indicate that the MHDE has the consistency and asymptotic normality if the bandwidths are of the form \(b_m,b_n=O(N^{-r})\) with r from a subinterval of (0, 1). This subinterval may depend on the population of the two components. With the component population unknown, we choose \(r=1/5\) in our simulation as in Silverman (1986) and Wu and Abedin (2021) which achieves the optimal mean square error of the kernel estimator. In addition, the bandwidths \(b_m\) and \(b_n\) of our choice are adaptive in the sense that they involve a factor of robust scale estimate to incorporate the different variation of f and h.

To compute the estimates numerically, we use \(\lambda _+\), an estimator based on odds ratio, given by Smith and Vounatsou (1997) as the initial of \(\lambda \). Initial values of \(\alpha \) and \(\beta \) are calculated by exploiting the relationship (3), or equivalently

Thus for each \(T_i\) in the pooled sample, we generate the pair \((T_i, R_i)\), where \(R_i=\log \frac{h_n(T_i)/f_m(T_i)-(1-\lambda _+)}{\lambda _+}\). Then we use \((T_i,R_i)\), \(i=1,\dots ,N\), to fit a least-squares regression line and the fitted coefficients are used as the initials of \(\alpha \) and \(\beta \). To evaluate the finite-sample performance of an estimator \({\hat{\theta }}\) of \(\theta \), we calculate the bias and mean squared error (MSE) over \(N=1000\) replications. The results are presented in Tables 3, 4 and 5.

Table 3 gives the biases and MSEs of \({\hat{\lambda }}_{\text {MELE}}\) and \({\hat{\lambda }}_{\text {MHDE}}\) for all the five models with varying \(\lambda \) values and sample size \(m=n=30\) whereas Table 4 is for \(m=n=100\). For the purpose of comparison, we also report the results of the two estimators \({\hat{\lambda }}\) and \({\hat{\lambda }}_L\) proposed in Wu and Abedin (2021) for model (1) directly, without assuming the relationship (3). The \({\hat{\lambda }}\) is based on cumulative distribution estimation while \({\hat{\lambda }}_L\) is the MLE of the multinomial approximation of model (1). From Tables 3 and 4 we can see that all the four methods are very competitive when \(\lambda \) is concerned. The nonparametric estimators \({\hat{\lambda }}\) and \({\hat{\lambda }}_L\) perform slightly better than the semiparametric estimators \({\hat{\lambda }}_{\text {MELE}}\) and \({\hat{\lambda }}_{\text {MHDE}}\) when the two components are close to each other (such as M1 and M3) or the relationship (3) does not holds (M5), while \({\hat{\lambda }}_{\text {MELE}}\) and \({\hat{\lambda }}_{\text {MHDE}}\) perform better than \({\hat{\lambda }}\) and \({\hat{\lambda }}_L\) when the two components are relatively far away (such as M2). Both the MELE and the MHDE perform surprisingly well for model M5, even though the assumed relationship (3) is not valid for M5.

The simulation results for estimating \(\beta \) are given in Table 5. Since (3) is not assumed in Wu and Abedin (2021), their methods are for estimating \(\lambda \) only and thus in Table 5 we compare \({\hat{\beta }}_{\text {MELE}}\) and \({\hat{\beta }}_{\text {MHDE}}\) only. In addition, we omit the results for \(\alpha \) and model M5 as \(\alpha \) depends on \(\beta \) through normalization and M5 doesn’t satisfy (3). From Table 5 we observe that the MHDE performs better than the MELE in terms of both smaller bias and MSE. However, the MHDE tends to give very large bias and MSE when \(\lambda \) is small, such as M2 with \(\lambda =.05\). We also observe from Table 5 that the MSEs of both MHDE and MELE of \(\beta \) decrease as the true \(\lambda \) value increases, which is very consistent with the observations from Table 1 on their asymptotic variances. This phenomenon is justified by the fact that when \(\lambda \) increases the expected number of observations from the second component g gets larger, and as a result the data contains more and more information about \(\beta \). This is also the reason why the MHDE and the MELE give large biases and inflated MSEs for small \(\lambda \), especially when sample size is small. For example, for model M2 with \(\lambda =0.05\) and \(m=n=30\) (or 100), we would expect only \(n\lambda =1.5\) (or 5) observations on average from g that contain information about \(\beta \), which thus produces estimates of \(\beta \) with large bias and MSE.

In order to examine the effect of unbalanced sample sizes on the performance of MELE and MHDE, in Table 6 we present the simulation results for sample sizes \((m,n)=(50, 150)\) and (150, 50). Comparing Table 6 with Table 4, we observe that when \(\lambda \) is concerned, the MELE and MHDE under unbalanced sample sizes \((m,n)=(50, 150)\) and (150, 50) perform slightly worse, though still comparable, than under balanced sample sizes \((m,n)=(100, 100)\), especially in terms of MSE. When \(\beta \) is concerned and Table 6 is compared with Table 5, we observe that the performance of the MELE and MHDE under unbalanced sample sizes is significantly worse than that under balanced sample sizes, especially in terms of MSE. These phenomenon can be possibly explained by the fact that both the asymptotic covariance matrices of MELE and MHDE given in Theorems 2 and 6 respectively have a factor \(\frac{1}{\rho (1-\rho )}\) in front. Other places in the covariance matrix expressions also involve \(\rho \), though not as influencing as this factor, which makes the level of deterioration different for \(\lambda \) and \(\beta \) when \(m/(m+n)\) deviates away from 1/2 (i.e., balanced samples).

To examine the performance of \({\hat{p}}_{\text {MELE}}\) and \({\hat{p}}_{\text {MHDE}}\) given in (8) and (18) respectively, we calculate misclassification rates (MR) based on these estimates and a simple classification rule with use of .5 as the hard threshold, i.e., an individual with observation y is classified as from G if \({\hat{p}}(y)>.5\) and as from F if otherwise, where \({\hat{p}}\) is either \({\hat{p}}_{\text {MELE}}\) or \({\hat{p}}_{\text {MHDE}}\). Considering the fact that MR is higher for some models, such as M1 when the two components significantly overlap, than some other models such as M2, we use the optimal misclassification rate (OMR) in Wu and Abedin (2021) as the baseline to compare with. The OMR is the misclassification rate calculated assuming p(y) is completely known (ideal scenario) and the same value .5 is used as the classification threshold. The simulation results are presented in Table 7. For the purpose of comparison, we also report the results of this classification rule but based on the nonparametric estimators \({\hat{\lambda }}\) and \({\hat{\lambda }}_L\) in Wu and Abedin (2021).

From Table 7 we observe that \({\hat{\lambda }}_L\) performs worst, while other three methods are quite competitive. Particularly, \({\hat{\lambda }}_{\text {MELE}}\) and \({\hat{\lambda }}_{\text {MHDE}}\) performs much better than \({\hat{\lambda }}\) in model M2, while \({\hat{\lambda }}\) has a little better performance in M1, M3 and M5. Even though the assumed relationship (3) is not valid for M5, the classification results based on MELE and MHDE for M5 are surprisingly reasonable and the MRs don’t deviate from OMR too much for larger sample sizes.

6.2 Robustness of the estimators

In this subsection, we examine the robustness properties of the proposed MELE and MHDE. In order to compare with the two nonparametric estimators in Wu and Abedin (2021), we check the performance of estimators of \(\lambda \) only but not \(\alpha \) and \(\beta \) that Wu and Abedin (2021) didn’t introduce.

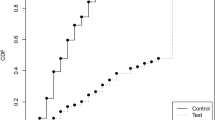

Specifically, we examine the behavior of the four estimators, \({\hat{\lambda }}_{\text {MELE}}\), \({\hat{\lambda }}_{\text {MHDE}}\), \({\hat{\lambda }}\) and \({\hat{\lambda }}_L\) when data are contaminated by a single outlying observation. Presence of several outliers will be similar and thus omitted here. Note that the outlying observation can be in either the first sample from f(x) or the second sample from the mixture h(x). We report results for the latter case and similar results are observed for the former case. We look at the change in estimate before and after data contamination. For this purpose, the \(\alpha \)-influence function (\(\alpha \)-IF) given in Beran (1977) is an appropriate measure of the change in estimate. However its application in mixture context is very difficult, as discussed in Karlis and Xekalaki (1998). Therefore, we employ an adaptive version of the \(\alpha \)-IF as in Lu et al. (2003) which uses the change in estimate, before and after outlying observations are included, divided by contamination rate. Taking model M1 as an example, after drawing two independent samples with one from N(0, 1) and the other from \((1-\lambda ) N(0,1)+\lambda N(1,1)\), we replace the last observation generated from the mixture with a single outlier, an integer from the interval \([-30,20]\). Then the \(\alpha \)-IF is given by

averaged over 100 replications, where the total sample size \(N=60\) or 200 and W is an estimator of \(\lambda \). In our study, W is either \({\hat{\lambda }}_{\text {MELE}}\), \({\hat{\lambda }}_{\text {MHDE}}\), \(\hat{\lambda }\) or \(\hat{\lambda }_{L}\). The results are presented in Figs. 1, 2 and 3. Figure 1 is for M1 with \(\lambda =.15\) and .55, Figure 2 for M2 with \(\lambda =.25\) and .75, and Fig. 3 for M3 with \(\lambda =.25\) and .75. The results for other models and \(\lambda \) values are very similar and thus omitted to save space.

The \(\alpha \)-IFs of \(\hat{\lambda }_{\text {MELE}}\) (dashed), \(\hat{\lambda }_{\text {MHDE}}\) (solid), \(\hat{\lambda }\) (dotted) and \(\hat{\lambda }_{L}\) (dot-dashed) for model M1: a \(\lambda =.15\) and \(m=n=30\); b \(\lambda =.15\) and \(m=n=100\); c \(\lambda =.55\) and \(m=n=30\); d \(\lambda =.55\) and \(m=n=100\)

The \(\alpha \)-IFs of \(\hat{\lambda }_{\text {MELE}}\) (dashed), \(\hat{\lambda }_{\text {MHDE}}\) (solid), \(\hat{\lambda }\) (dotted) and \(\hat{\lambda }_{L}\) (dot-dashed) for model M2: a \(\lambda =.25\) and \(m=n=30\); b \(\lambda =.25\) and \(m=n=100\); c \(\lambda =.75\) and \(m=n=30\); d \(\lambda =.75\) and \(m=n=100\)

The \(\alpha \)-IFs of \(\hat{\lambda }_{\text {MELE}}\) (dashed), \(\hat{\lambda }_{\text {MHDE}}\) (solid), \(\hat{\lambda }\) (dotted) and \(\hat{\lambda }_{L}\) (dot-dashed) for model M3: a \(\lambda =.25\) and \(m=n=30\); b \(\lambda =.25\) and \(m=n=100\); c \(\lambda =.75\) and \(m=n=30\); d \(\lambda =.75\) and \(m=n=100\)

From Figs. 1, 2 and 3 we observe that no matter for which model and what sample size, \({\hat{\lambda }}_{\text {MHDE}}\) always performs the best and \({\hat{\lambda }}_{\text {MELE}}\) performs the worst. The \(\alpha \)-IF of \({\hat{\lambda }}_{\text {MELE}}\) is generally unbounded while that of \({\hat{\lambda }}_{\text {MHDE}}\), \({\hat{\lambda }}\) and \({\hat{\lambda }}_L\) seems bounded when the outlying observation increases in both directions for mixture of normals and in the right direction for mixture of Poissons. This indicates that \({\hat{\lambda }}_{\text {MELE}}\) is generally not resistant to outliers whereas \({\hat{\lambda }}_{\text {MHDE}}\), \({\hat{\lambda }}\) and \({\hat{\lambda }}_L\) are. The bad performance of \({\hat{\lambda }}_{\text {MELE}}\) is mostly for when the outlying observation is bigger than 10. When the outlying observation is less than 10, the performance of \({\hat{\lambda }}_{\text {MELE}}\) is generally reasonable and is similar to that of other three estimators. The \(\alpha \)-IFs of \({\hat{\lambda }}_{\text {MHDE}}\), \({\hat{\lambda }}\) and \({\hat{\lambda }}_L\) are almost constants for outliers beyond the range \([-10,5]\) for mixture of normals and [0, 5] for mixture of Poissons, though the constants are of different magnitude, and they fluctuate within the respective ranges. When \({\hat{\lambda }}_{\text {MHDE}}\), \({\hat{\lambda }}\) and \({\hat{\lambda }}_L\) are compared, \({\hat{\lambda }}_L\) behaves the worst in terms of having largest \(\alpha \)-IF for mixture of normals and \({\hat{\lambda }}\) behaves the worst for mixture of Poisson distributions.

6.3 Test of model

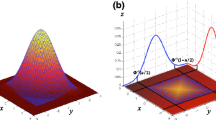

In this part, we examine the performance of the K–S tests we proposed in Sect. 5 for testing the validity of model (3). We consider model (3) with \(r(x)=(x,x^2)^\top \) as the collection of all possible models under consideration. Then we test whether the reduced model (3) with \(r(x)=x\) is the actual true model or not. For demonstration purpose, we consider mixture of normals \(H(x)=(1-\lambda )F(x)+\lambda G(x)\) with \(F\sim N(0,1)\) and \(G\sim N(\mu ,\sigma ^{2})\). Then f(x) and h(x) are related by

where \(\alpha =-\frac{1}{2}\left( \log \sigma ^{2}+\frac{\mu ^{2}}{\sigma ^{2}}\right) \), \(\beta =\frac{\mu }{\sigma ^{2}}\) and \(\gamma =\frac{1}{2}\left( 1-\frac{1}{\sigma ^{2}}\right) \). Note that (24) is a special case of (3) when \(r(x)=(x,x^2)^\top \). If \(\sigma =1\), then \(\gamma =0\) and thus model (3) holds with \(r(x)=x\). So testing the validity of model (3) with \(r(x)=x\) is equivalent to testing the null hypothesis \(H_{0}:\gamma =0\) under model (24). We consider \(\gamma =0\), \(-.9\) and \(-1.5\), \(\lambda =.35\) and .65, and sample sizes \(m=n=30\) and \(m=n=100\). For simplicity, we just fix \(\mu =1\) and as a result \(\sigma =1\), .6 and .5 for \(\gamma =0\), \(-.9\) and \(-1.5\), respectively. For each \(\lambda \), \(\gamma \) and sample size considered, we use 500 total number of replications for our calculation. Within each replication, we use totally 1000 bootstrapping samples to estimate the distribution and critical values of the test statistics \({\text {KS}}_{\text {MELE}}\) and \({\text {KS}}_{\text {MHDE}}\). We choose different levels of significance \(a=.10\), .05 and .01. The simulation results are presented in Table 8. Note that \(\gamma =0\) means model (3) with \(r(x)=x\) is correct and thus the correspondingly calculated values in Table 8 are the estimated significance levels. When \(\gamma \ne 0\), model (3) with \(r(x)=x\) is not correct and thus the correspondingly calculated values in Table 8 are the estimated powers at that value of \(\gamma \).

From Table 8 we can see that the two test statistics \({\text {KS}}_{\text {MELE}}\) and \({\text {KS}}_{\text {MHDE}}\) are quite competitive in terms of achieved significance level and power. The achieved levels of significance are quite close to the true levels for most of the cases except for the case of \({\text {KS}}_{\text {MHDE}}\) with \(\lambda =0.65\) and \(m=n=30\). The power of \({\text {KS}}_{\text {MHDE}}\) becomes larger when \(\gamma \) is away from 0 except for the case of \(\lambda =0.65\) and \(m=n=30\). Surprisingly, the power of \({\text {KS}}_{\text {MELE}}\) becomes smaller when \(\gamma \) is away from 0 except for the case of \(\lambda =0.65\) and \(m=n=100\). Note that \({\text {KS}}_{\text {MELE}}\) and \({\text {KS}}_{\text {MHDE}}\) use respectively the MELE and MHDE of \(\beta \), which have been shown in Sect. 6.1 to have large bias and MSE when sample sizes are small. Consequently, the results for \(m=n=30\) have some abnormals while the results for \(m=n=100\) are much better and more reliable. As expected, when the significance level a decreases, both the observed significance level and power decrease. For both \({\text {KS}}_{\text {MELE}}\) and \({\text {KS}}_{\text {MHDE}}\), the powers are generally high for significance levels \(a=0.10\) and 0.05.

7 Real data examples

In this section we consider two real-life data examples and demonstrate the applications of our proposed methods.

Example 1: Grain data.

Smith and Vounatsou (1997) analyzed a data to determine the proportion \(\lambda \) of cells in the test sample with size \(n=94\) that were exposed to radioactive materials. The cells in control group with size \(m=94\) were not exposed to radioactivity. The number of grains shown in autoradiograph of cells can measure the amount of radioactive material, however grains can appear in autoradiograph due to radioactive material or due to background fogging. This dataset and more details of the data are available in Smith and Vounatsou (1997), and Wu and Abedin (2021) showed that the dominance constraint \(F\ge G\) is valid for this dataset. We apply the two proposed estimators to this data and compare them with the estimators in Wu and Abedin (2021), Smith and Vounatsou (1997) and Smith et al. (1986). The results are given in Table 9, in which bootstrap method with 1000 repetitions are used to calculate the confidence intervals of \(\lambda \).

From Table 9 we can see that our two proposed methods produce similar point estimates of \(\lambda \) to most other methods available in literature except for the two-by-two table and Logistic power methods. When interval estimation is concerned, the two proposed estimators produce confidence intervals with width similar to those of \({\hat{\lambda }}\), \({\hat{\lambda }}_L\) and the latent class method but much smaller than those of Poisson mixture, two-by-two table and monotone logistic methods. In Table 9, the confidence intervals in the parentheses for \({\hat{\lambda }}_{\text {MELE}}\) and \({\hat{\lambda }}_{\text {MHDE}}\) are calculated using the asymptotic covariance matrices given in Theorems 2 and 6, respectively. From the results we see that bootstrap approximation is quite accurate. So when making inferences about \(\lambda \), computationally intensive bootstrap is not necessary for \({\hat{\lambda }}_{\text {MELE}}\) and \({\hat{\lambda }}_{\text {MHDE}}\) while it is necessary for all other methods due to the unavailability of results on their asymptotic distribution. This is another great benefit of using \({\hat{\lambda }}_{\text {MELE}}\) and \({\hat{\lambda }}_{\text {MHDE}}\) over other methods.

Example 2: Malaria data.

We also examine a clinical malaria dataset first described by Kitua et al. (1996) and further studied by Vounatsou et al. (1998). Parasite densities in children with fever can be modelled as a two-component mixture, where one component represents the parasite densities in children without clinical malaria (F) while the other with clinical malaria (G), and \(\lambda \) is the proportion of children whose fever is attributable to malaria. In this dataset, level of parasitaemia were observed on children between 6 and 9 months in a village in Tanzania for both the wet season (\(m=94\), \(n=251\)) and the dry season (\(m=122\), \(n=245\)). Wu and Abedin (2021) showed that the dominance constraint \(F\ge G\) is valid for both wet and dry seasons.

We apply the proposed \({\hat{\lambda }}_{\text {MELE}}\) to both seasons and compare the results with \({\hat{\lambda }}_L\) in Wu and Abedin (2021) and the Bayesian approach in Vounatsou et al. (1998). Note that this is a discretized data (parasitaemia levels were grouped into 10 categories), so kernel smoothing and hence \({\hat{\lambda }}_{\text {MHDE}}\) and \({\hat{\lambda }}\) are not applicable for this data. Table 10 presents the estimation results where the numbers in parentheses are estimated standard errors based on 500 bootstrapping samples. From this table we observe that \({\hat{\lambda }}_{\text {MELE}}\), \({\hat{\lambda }}_L\) and Bayesian approach produce similar estimates.

8 Concluding remarks

In this work, we proposed a two-component semiparametric mixture model (4) to accommodate the stochastic dominance constraint. We not only proposed and studied two estimators, the MELE and the MHDE, but also proposed K–S statistics to test the validity of the model (4). For both estimators, we proved theoretically their consistency and asymptotic normality. The finite-sample performance, in terms of both efficiency and robustness, of the proposed estimators and tests of the semiparametric model were examined through simulation studies and real data analysis.

The introduction of the two-component semiparametric mixture model (4) has several advantages over the fully nonparametric mixture model (1). First, the semiparametric model automatically solves the unidentifiability issue that the fully nonparametric model generally has. Second, the semiparametric model easily accommodates the stochastic dominance constraint with an equivalent and natural positivity constraint on the parameter \(\beta \), while the dominance constraint in the original nonparametric model is imposed on two functions which is much harder to handle and assess directly. Third, the semiparametric model has much better interpretation than the nonparametric model. The \(\beta \) value quantifies the difference between the two components, and the higher the \(\beta \) value the larger the difference. Fourth, for the semiparametric model we proposed two estimators with proved asymptotic normality and derived asymptotic covariance matrices, and these asymptotic results are in lack in literature for the nonparametric model. As a result, we can easily conduct statistical inferences for the mixing proportion \(\lambda \) (and \(\beta \)) based on these derived asymptotics for the semiparametric model, while for the nonparametric model some computationally intensive method, such as bootstrap, is necessary in order to make inferences about \(\lambda \), due to the unavailability of asymptotic properties of available estimators. Of course, using the semiparametric model instead of the fully nonparametric model will lose some flexibility which we think will not be significant given the fact that many commonly used population families satisfy the exponential tilt relationship (3). This loss is worth, considering the aforementioned advantages of introducing the semiparametric model.

When the two proposed estimators for the semiparametric mixture model (4) are compared, we observe the following preference from our numerical studies. When the estimation of the mixing proportion \(\lambda \) or classification is our interest, MELE and MHDE perform equivalently well, in terms of bias and MSE or misclassification rate, and no one is dominantly better than the other. When the estimation accuracy of \(\beta \) is our focus, MHDE is highly preferred over MELE when \(\lambda \) is moderate or large while MELE is preferred when \(\lambda \) is small. When the presence of outliers is our concern, MHDE is much more robust and thus is highly preferred over MELE.

Since the proposed model and methods were motivated by the example in case-control genetic studies based on test statistic values (one dimension), this paper only focused on univariate case. Nevertheless, we can see that the proposed MELE and MHDE methods can be easily extended to multidimensional case. On the other side, the kernel estimations used in MHDE method may not be able to handle high dimensionality well.

For future work, we may consider to use minimum profile Hellinger distance estimation (MPHDE) for the semiparametric mixture model (4). Wu and Karunamuni (2015) first introduced the profile Hellinger distance particularly for semiparametric models and investigated the MPHDE for semiparametric model of general form. Wu and Karunamuni (2015) proved that the MPHDE is as robust as MHDE and achieves full efficiency at the true model. Xiang et al. (2014) used the MPHDE for a two-component semiparametric mixture model where one component is known up to some unknown parameters while the other component is unspecified. Wu et al. (2017) and Wu and Zhou (2018) applied the MPHDE for semiparametric location-shifted mixture models. Another direction to approach the estimation problem of model (4) could be Bayesian method. Vounatsou et al. (1998) applied Gibbs sampling approach to estimate the unknown mixing proportion in a two-component mixture model with discretized data.

References

Abedin, T. (2018). Inferences for two-component mixture models with stochastic dominance. Ph.D. thesis, Department of Mathematics and Statistics, University of Calgary.

Anderson, J. A. (1972). Separate sample logistic discrimination. Biometrika, 59, 19–35.

Anderson, J. A. (1979). Multivariate logistic compounds. Biometrika, 66, 17–26.

Beran, R. (1977). Minimum Hellinger distance estimators for parametric models. The Annals of Statistics, 5, 445–463.

Bordes, L., Delmas, C., Vandekerkhove, P. (2006). Semiparametric estimation of a two-component mixture model where one component is known. Scandinavian Journal of Statistics, 33, 733–752.

Breslow, N. E., Day, N. E. (1980). Statistical Methods in Cancer Research: the Analysis of Case-Control Studies. Lyon: IARC Scientific Publications.

Chen, G., Wu, J. (2013). Molecular classification of acute leukemia. Some recent advances in mathematics and statistics—Proceedings of statistics 2011 Canada/IMST 2011-FIM XX, 60–74.

Deng, X., Wan, S., Zhang, B. (2009). An improved Goodness-of-Fit test for logistic regression models based on case-control data by random partition. Communications in Statistics: Simulation and Computation, 38, 233–243.

Efron, B., Tibshirani, R. (1996). Using specially designed exponential families for density estimation. The Annals of Statistics, 24, 2431–2461.

Ficklin, S. P., Dunwoodie, L. J., Poehlman, W. L., Watson, C., Roche, K. E., Feltus, F. A. (2017). Discovering condition-specific gene co-expression patterns using gaussian mixture models: A cancer case study. Scientific Reports, 7, 8617.

Karlis, D., Xekalaki, E. (1998). Minimum Hellinger distance estimation for Poisson mixtures. Computational Statistics & Data Analysis, 29, 81–103.

Karunamuni, R. J., Wu, J. (2011). One-step minimum Hellinger distance estimation. Computational Statistics & Data Analysis, 55, 3148–3164.

Kharchenko, P. V., Silberstein, L., Scadden, D. T. (2014). Bayesian approach to single-cell differential expression analysis. Nature Methods, 11, 740–742.

Kitua, A. Y., Smith, T., Alonso, P. L., Masanja, H., Urassa, H., Menendez, C., Kimario, J., Tanner, M. (1996). Plasmodium falciparum malaria in the first year of life in an area of intense and perennial transmission. Tropical Medicine & International Health, 1, 475–484.

Lindsay, B. G. (1994). Efficiency versus robustness: The case for minimum Hellinger distance and related methods. The Annals of Statistics, 22, 1081–1114.

Lu, R., Smith, R. M., Seweryn, M., Wang, D., Hartmann, K., Webb, A., Sadee, W., Rempala, G. A. (2015). Analyzing allele specific RNA expression using mixture models. BMC Genomics, 16, 566.

Lu, Z., Hui, Y. V., Lee, A. H. (2003). Minimum Hellinger distance estimation for finite mixtures of Poisson regression models and its applications. Biometrics, 59, 1016–1026.

Prentice, R. L., Pyke, R. (1979). Logistic disease incidence models and case-control studies. Biometrika, 66, 403–411.

Qin, J. (1999). Empirical likelihood ratio based confidence intervals for mixture proportions. The Annals of Statistics, 27, 1368–1384.

Qin, J., Lawless, J. (1994). Empirical likelihood and general estimating equations. The Annals of Statistics, 22, 300–325.

Qin, J., Zhang, B. (1997). A goodness of fit test for logistic regression models based on case-control data. Biometrika, 84, 609–618.

Silverman, B. W. (1986). Density Estimation for Statistics and Data Analysis. London: Chapman and Hall.

Smith, T., Vounatsou, P. (1997). Logistic regression and latent class models for estimating positives in diagnostic assays with poor resolution. Communications in Statistics: Theory and Methods, 26, 1677–1700.

Smith, T. A., Smith, A. G., Hooper, M. L. (1986). Selection of a mouse embryonal carcinoma clone resistant to the inhibition of metabolic cooperation by retinoic acid. Experimental Cell Research, 165, 417–430.

Vounatsou, P., Smith, T., Smith, A. F. M. (1998). Bayesian analysis of two-component mixture distributions applied to estimating malaria attributable fractions. Journal of the Royal Statistical Society: Series C (Applied Statistics), 47, 575–587.

Woo, M.-J., Sriram, T. N. (2006). Robust estimation of mixture complexity. Journal of the American Statistical Association, 101, 1475–1486.

Woo, M.-J., Sriram, T. N. (2007). Robust estimation of mixture complexity for count data. Computational Statistics & Data Analysis, 51, 4379–4392.

Wu, J., Abedin, T. (2021). A two-component nonparametric mixture model with stochastic dominance. Journal of the Korean Statistical Society, 50, 1029–1057.

Wu, J., Karunamuni, R. J. (2015). Profile Hellinger distance estimation. Statistics, 49, 711–740.

Wu, J., Zhou, X. (2018). Minimum Hellinger distance estimation for a semiparametric location-shifted mixture model. Journal of Statistical Computation and Simulation, 88, 2507–2527.

Wu, J., Karunamuni, R. J., Zhang, B. (2010). Minimum Hellinger distance estimation in a two-sample semiparametric model. Journal of Multivariate Analysis, 101, 1102–1122.

Wu, J., Yao, W., Xiang, S. (2017). Computation of an efficient and robust estimator in a semiparametric mixture model. Journal of Statistical Computation and Simulation, 87, 2128–2137.

Xiang, S., Yao, W., Wu, J. (2014). Minimum profile Hellinger distance estimation for a semiparametric mixture model. Canadian Journal of Statistics, 42, 246–267.

Zhang, B. (1999). A chi-squared goodness-of-fit test for logistic regression models based on case-control data. Biometrika, 86, 531–539.

Zhang, B. (2001). An information matrix test for logistic regression models based on case-control data. Biometrika, 88, 921–932.

Zhang, B. (2002). An EM algorithm for a semiparametric finite mixture model. Journal of Statistical Computation and Simulation, 72, 791–802.

Zhang, B. (2006). A score test under a semiparametric finite mixture model. Journal of Statistical Computation and Simulation, 76, 691–703.

Acknowledgements

The authors thank the Chief Editor, the Associate Editor and the referees for their valuable comments and suggestions that have led to significant improvements in the manuscript. The authors acknowledge with gratitude the support for this research via Discovery Grants project RGPIN-2018-04328 from Natural Sciences and Engineering Research Council (NSERC) of Canada and NSF project ZR2021MA048 of Shandong Province of China.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Appendix: Conditions in Theorems 3–6

Appendix: Conditions in Theorems 3–6

We decompose the parameter vector \(\theta \) into two parts \( \theta = \left(\lambda ,\theta _r^\top \right)^\top \), where \(\theta _r=(\alpha ,\beta )^\top \) represents the regression coefficient parameters in (4). Note that \(g_{\theta _r}(x)=e^{\alpha +\beta x}f(x)\) is essentially the g in (3). Parallelly for each \(t\in \varTheta \) we write \(t=\left(t_1,t_r^\top \right)^\top \) with \(t_r=(t_2,t_3)^\top \) and \(g_{t_r}(x)=e^{t_2+t_3 x}f(x)\).

-

(D1)

There exists an \(\epsilon \)-neighbourhood \(B(\theta _r,\epsilon )\) of \(\theta _r\) for some \(\epsilon \ge 0\) such that \(g_{t_r}-g_{\theta _r}\) is bounded by an integrable function for any \(t_r\in B(\theta _r,\epsilon )\).

-

(D2)

f and \(K_{0}\) in (4) and (16) respectively have compact supports.

-

(D3)

f in (4) has infinite support, \(K_{0}\) in (16) is a bounded symmetric density with support \([-a_{0},a_{0}]\) for some \(0< a_{0} < \infty \), the second derivative of f exists and there exists a sequence \(\alpha _m\) of positive numbers such that as \(m\rightarrow \infty \), \(\alpha _{m}\rightarrow \infty \) and

$$\begin{aligned}&\displaystyle \sup _{\theta \in \varTheta }\int I_{\left\{ |x|>\alpha _{m} \right\} }h_{\theta }(x){\text {d}}x \ \longrightarrow \ 0, \end{aligned}$$(25)$$\begin{aligned}&b_{m}^{2}\displaystyle \sup _{\theta \in \varTheta }\int I_{\left\{ |x|>\alpha _{m}\right\} } h_{\theta }(x)\sup _{|t|\le a_{0}}\frac{|f^{(2)}(x+tb_{m})|}{f(x)}{\text {d}}x \ \longrightarrow \ 0, \end{aligned}$$(26)$$\begin{aligned}&m^{-1}b_{m}^{-1}\displaystyle \sup _{\theta \in \varTheta }\int I_{\left\{ |x|\le \alpha _{m}\right\} } h_{\theta }(x) \sup _{|t|\le a_{0}}\frac{f(x+tb_{m})}{f^2(x)}{\text {d}}x \ \longrightarrow \ 0, \end{aligned}$$(27)$$\begin{aligned}&b_{m}^{4}\displaystyle \sup _{\theta \in \varTheta }\int I_{\left\{ |x|\le \alpha _{m}\right\} }h_{\theta }(x) \sup _{|t|\le a_{0}}\left[ \frac{f^{(2)}(x+tb_{m})}{f(x)}\right] ^{2}{\text {d}}x \ \longrightarrow \ 0, \end{aligned}$$(28)where \(f^{(k)}\) denotes that \(k^{th}\) derivative of f.

Condition (D3) is satisfied by probability families such as normal and exponential. When f is a normal or an exponential population, (25)–(28) hold with any sequence \(\alpha _m\) such that \(\alpha _m=o(\log [mb_m])\).

Let \(\left\{ \alpha _{N}\right\} \) be a sequence of positive numbers such that \(\alpha _{N}\rightarrow \infty \) as \(N\rightarrow \infty \).

-

(C0)

f has infinite support \((-\infty ,\infty )\).

-

(C1)

The second derivative of f exists.

-

(C2)

\(\frac{n}{N}\rightarrow \rho \in (0,1)\) as \(N\rightarrow \infty \).

-

(C3)

\(K_{0}\) and \(K_{1}\) in (16) and (17) respectively are bounded symmetric densities with support \([-a_{0},a_{0}]\) and \([-a_{1},a_{1}]\) respectively, where \(0<a_{0},a_{1}<\infty \).

-

(C4)

All the elements in both \(\varDelta (\theta )\) and \({\bar{\varDelta }}(\theta )\) are finite, where \(\varDelta (\theta )\) is defined in (19) and \({\bar{\varDelta }}(\theta )\ = \ \int \left(\frac{\partial w_1(x)}{\partial \theta }\right)\left(\frac{\partial w_1(x)}{\partial \theta }\right)^\top f(x){\text {d}}x\).

-

(C5)

The second derivative of f exists and satisfies for \(i=1,2,3\) that as \(N\rightarrow \infty \),

$$\begin{aligned} b_{m}^{2}\int \epsilon _{Ni}^{2}(x) \frac{f}{w_1}(x) \sup _{|t|\le a_{0}} \frac{f^{(2)}(x+tb_{m})}{f(x)} {\text {d}}x \ = \ O(1), \end{aligned}$$where \(\epsilon _N(x)=\frac{\partial w_1(x)}{\partial \theta } I_{\{|x|>\alpha _{N}\}}\).

-

(C6)

As \(N\rightarrow \infty \),

$$\begin{aligned} \begin{array}{c} N\cdot P(|X_{1}|>\alpha _{N}-a_{0}b_{m}) \ \longrightarrow \ 0, \\ N\cdot P(|Y_{1}|>\alpha _{N}-a_{1}b_{n}) \ \longrightarrow \ 0. \end{array} \end{aligned}$$ -

(C7)

The second derivatives of f exists and satisfies, as \(N\rightarrow \infty \),

$$\begin{aligned} \begin{array}{c} N^{-1/2}b_{n}^{-1}\int |\delta _N(x)|w_1^{-1}(x) \sup _{|t|\le a_{1}}\frac{h_\theta (x+tb_{n})}{h_{\theta }(x)}{\text {d}}x \ \longrightarrow \ 0, \\ N^{1/2}b_{n}^{4}\int |\delta _N(x)|f(x)\sup _{|t|\le a_{1}}\left[ \frac{h_{\theta }^{(2)}(x+tb_{n})}{h_{\theta }(x)} \right] ^{2} {\text {d}}x \ \longrightarrow \ 0, \\ N^{-1/2}b_{m}^{-1}\int |\delta _N(x)|\sup _{|t|\le a_{0}}\frac{f(x+tb_{m})}{f(x)}{\text {d}}x \ \longrightarrow \ 0, \\ N^{1/2}b_{m}^{4}\int |\delta _N(x)|f(x)\sup _{|t|\le a_{0}}\left[ \frac{f^{(2)}(x+tb_{m})}{f(x)}\right] ^{2} {\text {d}}x \ \longrightarrow \ 0, \end{array} \end{aligned}$$where \(\delta _N(x)=\frac{\partial w_1(x)}{\partial \theta }I_{\{|x|\le \alpha _{N}\} }\).

-

(C8)

The second derivatives of f exists and satisfies, as \(N\rightarrow \infty \),

$$\begin{aligned} \begin{array}{c} N^{1/2}b_{n}^{2}\int |\delta _N(x)|f(x)\sup _{|t|\le a_{1}}\frac{|h_{\theta }^{(2)}(x+tb_{n})|}{h_{\theta }(x)} {\text {d}}x \ \longrightarrow \ 0, \\ N^{1/2}b_{m}^{2}\int |\delta _N(x)|f(x)\sup _{|t|\le a_{0}}\frac{|f^{(2)}(x+tb_{m})|}{f(x)} {\text {d}}x \ \longrightarrow \ 0. \end{array} \end{aligned}$$ -

(C9)

As \(N\rightarrow \infty \),

$$\begin{aligned} \begin{array}{c} \sup _{|x|\le \alpha _{N}}\sup _{|t|\le a_{1}}\frac{h_{\theta }(x+tb_{n})}{h_{\theta }(x)} \ = \ O(1), \\ \sup _{|x|\le \alpha _{N}}\sup _{|t|\le a_{0}}\frac{f(x+tb_{m})}{f(x)} \ = \ O(1). \end{array} \end{aligned}$$ -

(C10)

As \(N\rightarrow \infty \),

$$\begin{aligned} \begin{array}{c} b_{n}^{2}\displaystyle \int I_{\{|x|\le \alpha _{N}\}}h_\theta (x)\sup _{|t|\le a_{1}}\left[ \frac{\partial ^2 \log w_1(y)}{\partial \theta \partial y}|_{y=x+tb_n}\right] ^2{\text {d}}x \ \longrightarrow \ 0, \\ \displaystyle b_{m}^{2}\int I_{\left\{ |X|\le \alpha _{N}\right\} }f(x)\sup _{|t|\le a_{0}}\left[ \frac{\partial ^2 w_1(y)}{\partial \theta \partial y}|_{y=x+tb_{m}}\right] ^{2}{\text {d}}x \ \longrightarrow \ 0. \end{array} \end{aligned}$$ -

(C11)

The second derivative of f exists and satisfies, as \(N\rightarrow \infty \),

$$\begin{aligned} N^{1/2}b_{m}^{2}\int |\epsilon _{N}(x)| f(x) \sup _{|t|\le a_{0}} \frac{|f^{(2)}(x+tb_{m})|}{f(x)} {\text {d}}x \ \longrightarrow \ 0. \end{aligned}$$

Conditions (C4)–(C11) hold for probability families such as normal and exponential. When f is a normal population, (C4)–(C11) hold with \(b_m,b_n\asymp N^{-r}\), \(1/4<r<1/2\), and any sequence \(\alpha _N\) such that \(\alpha _N=o(N^r\log N)\) and \(\log N=o(\alpha _N^2)\). When f is an exponential distribution \(f(x)=ce^{-cx}\) with some \(c>0\) and \(2\beta -c<0\), (C4)–(C11) hold with \(b_m,b_n\asymp N^{-r}\), \(1/4<r<1/2\), and any sequence \(\alpha _N\) such that \(\alpha _N=o(N^r\log N)\) and \(\log N=o(\alpha _N)\).

About this article

Cite this article

Wu, J., Abedin, T. & Zhao, Q. Semiparametric modelling of two-component mixtures with stochastic dominance. Ann Inst Stat Math 75, 39–70 (2023). https://doi.org/10.1007/s10463-022-00835-5

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10463-022-00835-5