Abstract

Gaussian boson sampling (GBS) allows for a way to demonstrate quantum supremacy with the relatively modest experimental resources of squeezed light sources, linear optics, and photon detection. In a realistic experimental setting, numerous effects can modify the complexity of the sampling, in particular loss, partial distinguishability of the photons, and the use of threshold detectors rather than photon counting detectors. In this paper, we investigate GBS with partial distinguishability using an approach based on virtual modes and indistinguishability efficiency. We develop a model using these concepts and derive the probabilities of measuring a specific output pattern from partially distinguishable and lossy GBS for both types of detectors. In the case of threshold detectors, the probability as calculated by the Torontonian is a special case under our framework. By analyzing the expressions of these probabilities we propose an efficient classical simulation algorithm which can be used to calculate the probabilities. Our model and algorithm provide foundations for an approximate method for calculating probabilities. It also allows for a way to design sampling algorithms that are not only compatible with existing algorithms for ideal GBS, but can also reduce their complexity exponentially, depending on the indistinguishability efficiency. Using this we show how the boundary of quantum supremacy in GBS can be affected by partial distinguishability.

Similar content being viewed by others

Introduction

It is commonly believed that quantum computers have the ability to outperform classical computers and falsify the Extended Church-Turing Thesis1. In order to demonstrate this quantum speedup, one requires not only a large number of qubits but also sufficiently suppressed logical errors. Decoherence typically removes the quantum aspect of the computation, such that it becomes possible to classically simulate the computation efficiently. Specifically, quantum supremacy is claimed when the quantum device achieves a superpolynomial quantum speedup. This was the case in the comparison between the quantum processor created by Martinis and co-workers2 and the classical simulation algorithms of ref. 3 and recently from ref. 4.

Another heated battlefield in the context of quantum supremacy is the Boson sampling (BS) problem where a passive linear optics interferometer, Fock states at the input, and photon-detectors are placed at the output. It was proved by Aaronson & Arkhipov1 that the output distribution of such device cannot be efficiently simulated by a classical computer in polynomial time5,6 because it corresponds to the permanent of a given transformation matrix. However, challenges arise in the experimental implementation Aaronson & Arkhipov’s Boson sampling (AABS). One of them is the low generation efficiency of single photons. To truly outperform the current state-of-art classical simulation algorithm for AABS7, at least 50 photons are required at the input. But due to the low single photon efficiency of solid state sources8,9,10 which is typically between 0.2 and 0.3, the largest experimentally demonstrated number of single photon inputs in AABS has only been 20 to date11. It was then proposed that this challenge can be tackled by changing the input light from Fock states to Gaussian states which was first conceived in the form of Scattershot Boson Sampling (SBS)12,13,14 and later developed to GBS15,16. The probability of measuring a specific output pattern in GBS corresponds to the Hafnian of a matrix17 which has been also proven as being computationally hard5. While generally the significance of BS is in relation to quantum supremacy, GBS has also potential applications in finding subgraphs18 and molecular vibronic spectra19.

GBS was first realized in small-scale experiments20,21. In 2020, quantum supremacy was claimed by Pan, Lu, and co-workers in a GBS device named Jiuzhang22, which was able to generate up to 76 photon detection events at the output of a 100-mode interferometer. It should be noted the average output photon detection events is in the vicinity of 40 which means that it was not in the collision-free regime which most of the current GBS theories fall into. Recently, the same group released an improved version with lower losses23, which generates 113-photon detection events in a 144-mode circuit, going further away from the collision-free regime. Another major aspect of the experiment is the type of detection device used. In the experiment, threshold detectors, rather than photon number resolving (PNR) detectors were used, which only indicate the presence or lack of a photon. The use of threshold detectors changes the underlying theory because the output probability distribution is no longer determined by a Hafnian, but the Torontonian of the matrix representing the linear optical network24,25.

The Hafnian and the Torontonian have the same computational complexity for the ideal case, which is O(N32N) for a 2N × 2N matrix. Despite having the same complexity, their origins are in fact rather different. In the case of the Hafnian, the complexity arises due to the large number of permutations of matrix elements and the lack an efficient algorithm (i.e., which is available for other matrix functions such as the determinant). Meanwhile, the complexity of computing the Torontonian comes from the computation of 2N determinants. Recently, it was shown that a quadratic speedup for simulating GBS can be achieved26, based on the observation that the probability of a N-photon detection for a pure Gaussian state only scales as \({2}^{\frac{N}{2}}\). This allows for the reduction in complexity of simulating GBS with PNR detectors27 and with threshold detectors28 even for the case of lossy GBS with the help of the Williamson decomposition and loop Hafnian. It however is not as effective in the case of mixed Gaussian states since it requires calculating a large number of loop Hafnians associated with the pure state decomposition of the mixed state.

Another major challenge is to understand the impact of experimental imperfections such as losses29,30, network noise31 and partial photon distinguishability32,33,34,35,36. Similarly to AABS, the original theory of GBS only handles the ideal case and is not easily extendable to include these imperfections. So far, only losses and detector dark counts in GBS have been analyzed37. Ref. 37 is based on the framework of ref. 29 where the generalized Wigner function is used to demarcate the region of efficient classical simulation as a function of losses and detector dark counts. In contrast, no analysis has been carried out for partial photon distinguishability in GBS, except for a recent paper38 claiming to extend their results for partial distinguishability in AABS to that in GBS. The results of ref. 38 are somewhat unsatisfactory in the sense that their results for GBS must be applied in a context similar to AABS. For example, their investigation is strictly limited to the collision-free regime with weak squeezing, and they presume that the number of photons generated by the source is fixed and known at the beginning of the derivation. This is a rather strong assumption in relation to the indeterminate-photon number nature of Gaussian states, especially in the presence of loss. In addition, ref. 38 only discusses GBS with PNR detectors, such that the theory is not applicable to the current GBS experiments using threshold detectors.

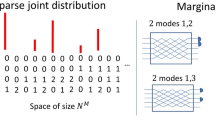

In this paper, we provide an investigation of the partial distinguishability problem in GBS, not limited by the collision-free or determinate photon number assumptions. We develop a model for partial distinguishability and apply it to GBS with both PNR and threshold detectors. In this model we introduce a new parameter called the indistinguishability efficiency. Along with other existing parameters such as the squeezing parameter of the input light and transmission rate introduced from the lossy GBS model, it forms a composite parameter that affects the probability distribution and its underlying structure, similar to how transmission rate and dark counts work together to determine the classical simulatability of imperfect AABS29. We define virtual modes to incorporate the distinguishable photons that do not interfere with other photons but contribute to the photon detection at the end. We note that a similar approach was also used in ref. 39 where it was used for investigating the trade-off between Hong-Ou-Mandel interference visibility and photon generation efficiency for heralded single photon source. For GBS with PNR detectors, the resulting probability is calculated as a sum of all possible combinations of different outputs of these states. Despite starting from completely different models, we find our characterization of the distinguishable photons in GBS matches perfectly with that derived for AABS40, which adds evidence towards the validity of our model. For GBS with threshold detectors, we include both partial distinguishability and losses in deriving the expression for the probability. In order to include the distinguishable photons from the virtual modes, we abandon the commonly employed Torontonian method and propose a method based on the marginal probability and prove that the probability defined by Torontonian is a special case of our result.

We finally discuss how partial distinguishability affects quantum supremacy in light of our results. For partially distinguishable GBS with PNR detectors, since every term in the output probability corresponds to a particular number of indistinguishable photons, this determines the computational cost of calculating that term. By showing that the cost increases exponentially with the number of indistinguishable photons, we obtain an efficient approximation scheme by considering only a fraction of all terms which involves a smaller number of indistinguishable photons. In other words, GBS becomes more “classical” with reduced indistinguishability. We check the fidelity and complexity of the approximation which depends mainly on the indistinguishability efficiency. The fidelity of our approximation increases exponentially with decreasing indistinguishability. We also propose two efficient classical simulation algorithms, one for PNR detectors and one for threshold detectors. With these algorithms, the complexities of simulation algorithms such as those in refs. 27,28 can be reduced exponentially in certain cases, depending on the indistinguishability efficiency. In this way, we show that partial distinguishability affects quantum supremacy.

Results

The model of partial distinguishability

A typical GBS scheme consists of three parts: an interferometer with K spatial ports for inputting photons and K ports for outputs; M of these input ports are fed with squeezed vacuum states and each output port has a detector. We note that what we refer to as “ports” are commonly referred to as “modes” in most of the literature on BS since all photons are typically assumed to be indistinguishable. In this paper, we use the word “port” since each port may consist of multiple modes. The interferometer is characterized by a K × K Haar-random matrix T where its columns correspond to the input ports and its rows correspond to the output ports.

It is convenient to represent a Gaussian state by a quasiprobability distribution (QPD) because the first two statistical moments of the QPD—the displacement vector and covariance matrix—are sufficient to fully characterize the density matrix41,42. Since the Gaussian states we are dealing with always have zero displacement vector, we write ρ = ρ(V) and only use the covariance matrix V to represent the density matrix. Its definition is given by

where \({{\Delta }}\hat{{{{\bf{x}}}}}=\hat{{{{\bf{x}}}}}-\langle \hat{{{{\bf{x}}}}}\rangle\) and the quadrature field operators are defined as \(\hat{{{{\bf{x}}}}}=[{\hat{q}}_{1},{\hat{p}}_{1},...,{\hat{q}}_{K},{\hat{p}}_{K}],{\hat{q}}_{k}={\hat{a}}_{k}+{\hat{a}}_{k}^{{\dagger} },{\hat{p}}_{k}={{{\rm{i}}}}({\hat{a}}_{k}^{{\dagger} }-{\hat{a}}_{k})\), and \({\hat{a}}_{k}\) are the annihilation operators for the kth port. We let k ∈ [1, K] throughout this paper.

By directly using the existing model for lossy GBS as in ref. 37, the covariance matrix of squeezed vacuum inputs with losses is

where ηt is the overall transmission rate:

Here, ηs is the transmission of the inputs (sources) before entering the interferometer, ηu is the transmission for the uniform loss inside the interferometer, and ηd is the detection efficiency. In principle, we should only include ηs in Eq. (2) because it describes the Gaussian state before entering the interferometer, but since our model is compatible with the result in ref. 7,37, we combine ηs, ηu and ηd at this stage. Here, In is the n × n identity matrix which is the covariance matrix of the vacuum state. A standard assumption that is typically used in experiments such as ref. 22 is that all inputs have identical squeezing parameter rm = r.

For GBS, partial distinguishability originates from imperfect input light where minor shifts in time or frequency is the origin of the distinguishability35,36. To characterize it, in our model all input light is initially indistinguishable. Before entering the interferometer, the photons go through a process where they have the probability of becoming a distinguishable photon. These photons do not interfere with other photons during their propagation in the interferometer, but will be registered by the detectors at the output. In order to satisfy this assumption, we create an additional virtual mode for every port which has input light. The Gaussian state in each virtual mode is characterized as ρ(m)(V(m)). Throughout this paper, the superscript (m) is used to denote the quantities and parameters related to the distinguishable photons in the mth virtual mode and we let m ∈ [1, M]. The superscript (0) will be reserved for the indistinguishable mode. We will also use superscript \((m^{\prime} )\) with \(m^{\prime} \in [0,M]\) when including both indistinguishable and distinguishable cases. These virtual modes are initially in vacuum states, V(m) = I2K, until they are fed with photons through the corresponding port from the indistinguishable mode. The transformation of photons from the indistinguishable mode to the virtual mode is characterized by the unitary operator:

where \({\hat{a}}_{m}^{{\dagger} }\) and \({\hat{a}}_{m}\) are the creation and annihilation operators of the mth port of the indistinguishable mode, \({\hat{b}}_{m}^{(m){\dagger} }\) and \({\hat{b}}_{m}^{(m)}\) are the creation and annihilation operators of the mth port of the mth virtual mode. Here, the subscript m is to indicate which port. We define the indistinguishability efficiency as the probability of a photon not exchanged from the indistinguishable mode to the virtual modes, denoted as ηind. Under the effect of Eq. (4), it satisfies the relation:

There is some similarity between this model and that for lossy GBS29,37, but the major difference is that the photons in the virtual modes are not lost, instead, they go on to propagate in the interferometer until they are detected at the output.

Now we obtain the new density matrices for all M + 1 modes after applying the distinguishable-indistinguishable transformation by taking the partial trace:

Since we use the covariance matrix to represent the density matrix, we give the covariance matrices of all M + 1 modes:

where we have

In our model, the output pattern measured at detection does not only come from the indistinguishable mode. It is the combined output pattern of all M + 1 modes. Consider the case of ideal PNR detection. If the output pattern of the \(m^{\prime}\)th mode is \({{{{\bf{s}}}}}^{(m^{\prime} )}=[{s}_{1}^{(m^{\prime} )},{s}_{2}^{(m^{\prime} )},...,{s}_{K}^{(m^{\prime} )}]\) where \({s}_{k}^{(m^{\prime} )}\) denotes the number of output photons at kth port of \(m^{\prime}\)th mode, then the overall output pattern is

and for each port we have

Partially distinguishable GBS with PNR detectors

We now proceed to calculate the probability distribution of the output for PNR detectors. For the original GBS with perfect indistinguishability, the probability of observing an output pattern s = [s1, s2, ..., sK] where sk is the number of detected photons at the output of the kth port is given by the equation:

where Haf(⋅) stands for the matrix function Hafnian and As is the selected kernel matrix which is obtained by repeating rows and columns of the kernel matrix according to s. The kernel matrix is constructed out of the covariance matrix. Q is the covariance matrix of Q-function43.

For partially distinguishable GBS under our model, we need to first decompose the overall output pattern into different combinations of M + 1 patterns, namely s(0), s(1)... s(M), according to Eq. (14) and Eq. (15). We define \({P}^{(m^{\prime} )}({{{{\bf{s}}}}}^{(m^{\prime} )})\) as the probability to obtain output pattern \({{{{\bf{s}}}}}^{(m^{\prime} )}\) for the \(m^{\prime}\)th mode. The probabilities of all possible combinations are then combined to obtain the overall probability:

In Eq. (17) there are a total of \(\mathop{\prod }\nolimits_{k = 1}^{K}({s}_{k}^{(0)}+1)\) possible configurations of s(0), ranging from [0, ..., 0] to [s1, ..., sK]. Now we regroup them by the total number of photons in a configuration, denoted as N(0):

and rewrite Eq. (17) as

where

where N is the total number of photons of the overall output pattern:

Here, we consider the photons from all the virtual modes as a whole because later we propose an classical sampling algorithm for their combined probability in Eq. (20).

In the Methods section, we obtain the covariance matrix Q and kernel matrix A of all M + 1 modes. Applying them to Eq. (16) we obtain the specific probability distribution for each state. The expression for the probability with respect to the indistinguishable mode takes the same form as Eq. (16):

For the distinguishable modes, on the other hand, the change in the form of kernel matrix leads to the reduction of the Hafnian matrix function to simple multiplication:

where \(q\in \{0,2,...,2\lfloor \frac{{N}^{(m)}}{2}\rfloor \}\) and N(m) is the total number of photons in the mth virtual mode

Here, Tk,m is an element of the interferometer matrix T. It represents the amplitudes of the transformation of a photon from the mth port to the kth port. Expressions for parameters \({\beta }_{{{{\rm{d}}}}}^{\prime}\) and \({\alpha }_{{{{\rm{d}}}}}^{\prime}\) are

A detailed derivation of Eq. (23) can be found in the Methods section . Hence the computational complexity of calculating one specific probability from mth virtual mode is only a 1st degree polynomial respect to total number of output photons.

It should be noted that this result does not indicate that the exact calculation of Pdis can be done in polynomial time, because it contains \(\mathop{\prod }\nolimits_{k = 1}^{K}\frac{(M-1+{s}_{k})!}{(M-1)!{s}_{k}!}\) terms. Each term corresponds to a possible combination of s(1), ..., s(M). For the extreme case of the collision-free regime, there would still be MN terms. Even though the computational complexity of calculating each term is only polynomial according to Eq. (23), adding them costs at least exponential time. Nevertheless, unlike Eq. (22), Eq. (23) provides a back door for classical simulation. In the next section we propose an classical simulation method for generating Pdis.

Efficient classical simulation for distinguishable GBS

Looking at Eq. (23) closely, we find that by multiplying an additional factorial, the product on the right side forms a multinomial distribution:

which means that the output port of each photon is randomly chosen among all K ports following the probability distribution (∣T1,m∣2, ∣T2,m∣2... ∣TK,m∣2). \({P}_{{{{\rm{prod}}}}}^{(m)}\)(s) is also related to the probability distribution of obtaining output pattern s in distinguishable AABS40:

where \({{{{\bf{T}}}}}_{{{{\bf{s}}}}}^{\#}\) denotes a matrix with entries ∣Tij∣2 where Tij is an entry of the original complex AABS transformation matrix Ts. Under the condition that there is only one input port, Eq. (29) reduces to Eq. (28). With this in mind, the remaining coefficients in Eq. (23) can be interpreted as the probability of obtaining N(m) photons from the state described by Eq. (9):

Now we can write Eq. (23) as a multiplication of Eq. (28) and Eq. (30).

Such an analysis enables us to provide a classical sampling method for distinguishable GBS, with the help of the two probabilities distributions \({P}_{{{{\rm{prod}}}}}^{(m)}\)(s) and \({P}_{{{{\rm{num}}}}}^{(m)}(N)\). Before doing that, we need to set the range for the number of photons. Theoretically, this range is from zero to infinity, but since the probability decreases exponentially with N, and \(G(N)\propto {\alpha }_{{{{\rm{d}}}}}^{^{\prime} N}\), it is convenient to truncate the sampling range at a number, \({N}_{{{{\rm{t}}}}}={\overline{N}}_{{{{\rm{d}}}}}t\) where \({\overline{N}}_{{{{\rm{d}}}}}\) is the average number of photons:

and t is a truncation factor. Due to the truncation we renormalize \({P}_{{{{\rm{num}}}}}^{(m)}(N)\) according to:

Algorithm 1

Efficient sampling of distinguishable GBS.

Algorithm 1 then allows us to sample the output pattern from all virtual modes. The computational complexity of the worst-case scenario is \(O(MK\lceil t{\overline{N}}_{d}\rceil )\) which scales only polynomially. We can create a probability distribution of all output patterns from distinguishable GBS with ε accuracy requiring a computational cost \(O(MK\lceil t{\overline{N}}_{d}\rceil /\varepsilon )\). n binary digit accuracy can be achieved for each probability if we let ε = 1/2n. We denote this probability distribution as \({P}_{{{{\rm{sim}}}}}({{{\bf{s}}}},\varepsilon )\).

While Pdis(s) is only calculated for one s at a time, \({P}_{{{{\rm{sim}}}}}({{{\bf{s}}}},\varepsilon )\) updates the probabilities for all output patterns simultaneously with each sampling. This is extremely useful in Eq. (19) where Pdis(s) of a considerable number of different patterns s needs to be calculated to obtain the result. Naturally we obtain an approximation to P(s) with accuracy ε by replacing Pdis(s) with \({P}_{{{{\rm{sim}}}}}({{{\bf{s}}}},\varepsilon )\).

Partially distinguishable GBS with threshold detectors

In the original proposal of GBS, each output port has a PNR detector. For a quantum supremacy demonstration, the number of ports—and hence the number of PNR detectors—is rather large, which may be prohibitive experimentally. As such, in works such as ref. 22 they were replaced with threshold detectors where the detection result only shows the presence of the photons regardless of its exact number. If any number of photons greater or equal to one are detected, it is refered to as a “click”. Of course, the probability of a click can be calculated by adding the probabilities of all possible output patterns from PNR detectors over an infinite number of terms. A better way than this direct approach is to use the P-functions of the POVM elements \(\left|0\right\rangle \left\langle 0\right|\) and \(\hat{{{{\bf{I}}}}}-\left|0\right\rangle \left\langle 0\right|\), where one can directly calculate the probability of the output pattern s as in ref. 24

with a matrix function defined as Torontonian:

Here, \({{{\mathcal{U}}}}\) is a set where the elements are the ports that have clicks in pattern s, \({{{\mathcal{P}}}}({{{\mathcal{U}}}})\) is the power set which contains all the subsets of \({{{\mathcal{U}}}}\), and \({{{{\bf{Q}}}}}_{{{{\mathcal{U}}}}}^{(m)}\) is a matrix formed by keeping in Q(m) only the rows and columns corresponding to the ports in set \({{{\mathcal{U}}}}\).

In ref. 24, Eq. (33) is obtained by considering only the Gaussian state-of ideal GBS. Furthermore, how to include the effects of partial distinguishablility is not obvious from their formalism. Therefore, we need a new expression for P(s) to include the effects of all M + 1 modes and make Eq. (33) a special case. We propose the probability as a weighted sum of the marginal probabilities of no-click events:

where \(\widetilde{P}({{{\mathcal{R}}}})\) is the marginal probability of a no-click event for the ports in the given set \({{{\mathcal{R}}}}\) with the expression:

Here, \({{{\mathcal{R}}}}\) is the set difference of [1, M] and \({{{\mathcal{V}}}}\) i.e. \({{{\mathcal{R}}}}=[1,M]-{{{\mathcal{V}}}}\). \({\widetilde{P}}^{({m}^{\prime})}({{{\mathcal{R}}}})\) is the marginal probability of a no-click event in the \({m}^{\prime}\)th mode.

For Gaussian states the marginal probability distribution of certain ports can be directly calculated by only considering the columns and rows corresponding to these ports in the covariance matrix. Additionally, the marginal probability of a no-click event is a function of determinant, therefore we have

When ηind = 1, all photons are indistinguishable hence there is no click in any mth virtual mode such that \({\widetilde{P}}^{(m)}({{{\mathcal{R}}}})=1\). By proving that

Eq. (33) becomes a special case of Eq. (35). The proof is given in the Methods section. Apparently, \(\det ({{{{\bf{Q}}}}}^{(0)})\) is irreducible so that the complexity of calculating \({\widetilde{P}}^{(0)}({{{\mathcal{R}}}})\) remains \(O(| {{{\mathcal{R}}}}{| }^{3})\) as in the case of Torontonian.

Interestingly, the marginal probability of a no-click events in all virtual modes can be reduced to

where \({{{{\mathcal{T}}}}}_{m}({{{\mathcal{R}}}})={\sum }_{j\in {{{\mathcal{R}}}}}| {T}_{j,m}{| }^{2}\). A detailed derivation of Eq. (39) can be found in the Methods section. \({{{{\mathcal{T}}}}}_{m}({{{\mathcal{R}}}})\) can be interpreted as the total transmission rate of ports in \({{{\mathcal{R}}}}\). When ηind = 1, αd = βd = 0 we have \({\widetilde{P}}^{(m)}({{{\mathcal{R}}}})=1\). This gives an exact calculation of the probability distribution of threshold detector GBS with partial distinguishability.

Quantum supremacy with partially distinguishable GBS

We have obtained the probability distribution of partially distinguishable GBS that does not require any assumptions of being collision-free, or having a determinate photon number and explicitly include losses. We proceed to discuss how partial distinguishability affects quantum supremacy.

Let us define an approximate version of the probability P(s) by replacing Pdis(s) with \({P}_{{{{\rm{sim}}}}}({{{\bf{s}}}},\varepsilon )\) and truncating Eq. (19) at a certain value Ncut

Here we can write the accuracy for Papprox and Pn respectively as

We note that the accuracy here refers to the probability for a particular output pattern rather than the whole distribution. Obviously \(\mathop{\sum }\nolimits_{n = 0}^{{N}_{{{{\rm{cut}}}}}}{\sum }_{{{{{\bf{s}}}}}_{n}^{(0)}}{P}^{(0)}({{{{\bf{s}}}}}_{n}^{(0)})\) occupies a tiny portion of the whole distribution. Therefore we have εn ≪ ε and \(\widetilde{\varepsilon }\ll \varepsilon\). Hence the absolute accuracy of Papprox is larger compared to that of \({P}_{{{{\rm{sim}}}}}\) although the relative accuracy most likely stays unchanged.

Here, Pn(s, εn) includes the contributions of all configurations that have n indistinguishable photons. The magnitude of this term depends on the indistinguishability efficiency ηind. For the extreme condition that ηind = 1 which corresponds to the ideal case, Pn(s, εn) from n < N are all zero. Since the dependence of Pn(s, εn) on n for ηind < 1 is approximately exponentially decreasing, we may safely truncate Papprox with Ncut smaller than N. The fidelity of the approximation is defined as

This approximation is powerful because the computational complexity increases superexponentially with Ncut. This is because the computational complexity of calculating \({P}^{(0)}({{{{\bf{s}}}}}_{n}^{(0)})\) is O(n32n) which is exponential and the number of elements in \({{{{\bf{s}}}}}_{n}^{(0)}\) is maximally \(\left(\genfrac{}{}{0.0pt}{}{N}{n}\right)\) when the output pattern is collision-free. The complexity of \({P}_{{{{\rm{approx}}}}}({{{\bf{s}}}},\widetilde{\varepsilon },{N}_{{{{\rm{cut}}}}})\) is then at most \(O({N}^{{N}_{{{{\rm{cut}}}}}}{2}^{{N}_{{{{\rm{cut}}}}}})\) which is polynomial to N. By using the Ncut parameter, this reduces the computational cost of the approximation, reducing it from the ideal GBS case which takes O(N32N) steps. From Fig. 3 we see that with Ncut = 2 and N = 9, which has only a modest computational overhead, the fidelity of our approximation can be maintained to exceed 98.2%. This demonstrates how effective our approximation method is.

We have not been able Fig. 1 to obtain an analytical relationship between F and ηind. However, numerically we observe that the fidelity obeys an approximate relation

as shown in Fig. 2, where c is a fitting parameter. According to this relation, the approximation can achieve high fidelity for Ncut much smaller than N unless ηind is close to 1. In Fig. 2 we show the approximation for various Ncut. We see that the fidelity is above 0.9 even for a modest Ncut = 3 approximation with ηind as large as 0.9.

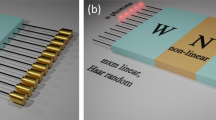

For the simplicity of illustration, we show a 3 × 3 interferometer (K = 3) characterized by T with input light at first two ports (M = 2). When partially distinguishable input light (represented by the mixed color ball for the input ports) enters the interferometer, they decompose into non-interfering modes and propagate inside the interferometer independently until they leave the interferometer and collectively meet the detectors at the output ports. The thin solid-lined rectangle represents the interferometer for the indistinguishable photons. The dashed rectangles represent the interferometer for the distinguishable virtual modes.

Numerical evaluations of fidelity, Eq. (42), for Ncut = 3, Ncut = 4 and Ncut = 5 when K = 35, M = 6, N = 6, r = 0.9, ηt = 0.9. The output pattern is s1 = ... = sN = 1, sN+1 = ... = sK = 0 the other choices of output pattern give similar results. This result is obtained over 94 Haar-random unitary matrices. The error bar represents the standard deviation.

Another observation is that for a given GBS setup, when the total number of photons of an output pattern increases while the order of an approximation Ncut is fixed, the fidelity of the Papprox only decreases linearly or even more slowly. For the example shown in Fig. 3, we use Papprox with Ncut = 2 to approximate the output patterns for a total number of photons N, from 3 to 9. While the exact calculation of these output patterns requires an exponential increase in computational time, the fidelity of the approximation Papprox decreases linearly, instead of exponentially. The rate of decrease in fact appears to ease as N increases. Performing a linear extrapolation of the data for N < 8 to N = 50, we expect a fidelity of ~90%, which is likely to be an underestimate as the data has some evidence of reducing in gradient with N. This relation between fidelity and computational time again shows the effectiveness of this approximation method.

Numerical evaluations of fidelity, Eq. (42) for Papprox with number of detected photons N for (a) Ncut = 2, K = 30, M = 6, r = 0.9, ηind=0.5, ηt=0.9; (b) Ncut = 3, K = 30, M = 6, r = 0.9, ηind = 0.9, ηt = 0.99. The output pattern for each N is s1 = ... = sN = 1, sN+1 = ... = sK = 0, the other choices of output pattern give similar results. These results are obtained over 94 Haar-random unitary matrices.

For GBS with threshold detectors, partial distinguishability does not affect quantum supremacy directly because the main exponential contribution to the complexity comes from the number of elements to be calculated, which is 2N. Partial distinguishability only reduces the complexity of calculating one element from O(N3) to O(N), by converting calculation of the determinant to multiplication as shown in Eq. (39). We note that if we let ηind ≈ 0 such that all photons are almost distinguishable, the computational complexity is still exponential because the number of elements is still 2N which is established as the proof for exponential complexity of calculating probability for a particular output in GBS with threshold detectors. But as we have seen above, the sampling method for \({P}_{{{{\rm{sim}}}}}({{{\bf{s}}}},\varepsilon )\) can be used to efficiently sample the distribution in this limit.

We note that while we have focused primarily on the probability of an output pattern in this paper, our model can also be used for generating samples for partially distinguishable GBS. Our model is compatible with existing simulation algorithms such as refs. 27,28, which allows one to take advantage of these algorithms and the imperfect indistinguishability at the same time. For GBS with PNR detectors, we first create two samples. One is for photons from all M distinguishable modes, directly created by Algorithm 1. The other is for the photons from the indistinguishable mode, created by feeding the covariance matrix representing the mode

into the sampling method provided in ref. 27. Then, we combine these two samples by adding the results of each port to create one final sample.

For GBS with threshold detectors, we could directly take the sample created for PNR detectors. We can however improve the sampling algorithm by taking advantage of the following two observations. The first is that for threshold detectors, one photon is enough to register a click in a port, and whether this photon comes from the indistinguishable mode or distinguishable modes is irrelevant. The second point is that the complexity of sampling an indistinguishable photon is much larger than that of sampling a distinguishable one. Therefore, we can first sample the M distinguishable modes. According to that sampling result, we can neglect the output ports where clicks are already registered. Hence we only need to sample the output pattern for the remaining ports. This is possible for a Gaussian state because the marginal probabilities can be calculated by selecting the corresponding rows and columns of the covariance matrix. The details of the algorithm can be found in Algorithm 2.

Algorithm 2

Sampling algorithm of partially distinguishable GBS with threshold detectors.

The complexity of generating a sample with N photons (clicks) for ideal GBS are O(N32N/2) for the two algorithms of refs. 27,28. For the partially distinguishable case, since only part of the sampled photons actually come from the indistinguishable mode, the complexity of will be reduced. For every photon counted as a photon with the PNR detectors or registered as a click with threshold detectors, approximately ηind of the probability comes from the indistinguishable mode, which makes the complexity \(O({(N{\eta }_{{{{\rm{ind}}}}})}^{3}{2}^{N{\eta }_{{{{\rm{ind}}}}}/2})\). This expression covers quantitatively all levels of indistinguishability, from the totally distinguishable case ηind = 0, where the exponential term becomes unity, to the perfectly indistinguishable case where ηind = 1, where the complexity remains the same as the ideal case. For partially distinguishable cases, there is an exponential reduction of \({2}^{N(1-{\eta }_{{{{\rm{ind}}}}})/2}\). This reduction of complexity can be substantial with a large number of sampled photons (clicks) or small indistinguishable efficiency. It indicates that 1/ηind as many photons (clicks) are needed in a partially distinguishable GBS experiment to reach the regime where classical simulations become intractable.

Discussion

In this paper, we have developed a model which allows us to model GBS with partially distinguishable photons and obtain the expressions for the probabilities of a given output pattern for both PNR and threshold detectors. The model is based on the construction of virtual modes which incorporates the distinguishable photons and forms a new Gaussian state that propagates inside the interferometer independently until it reaches the detectors. We have proved that the expression for the probability of the photons from these distinguishable Gaussian states contains the previous result obtained by Aaronson and Arkhipov for the distinguishable AABS as a special case. This is because we included the indeterminate-photon-number nature of Gaussian states in contrast to AABS where the photon number is fixed. Based on that we proposed an algorithm for efficient simulation of the output patterns from these distinguishable Gaussian states which enables us to exponentially reduce the computational time of calculating the probabilities.

Our method provides a framework to calculate the probabilities for imperfect GBS, especially for GBS with threshold detectors which has only been theoretically investigated for the ideal case. We proved that the Torontonian—the result obtained in the ideal case—is a special case within our framework. We note that for low indistinguishability, the proof that supports the complexity of computing the Torontonian still holds. Our aforementioned simulation algorithm can reduce the computationally hard exact calculation with a highly accurate approximation particularly for low indistinguishability.

For GBS with PNR detectors, which to date is more theoretically developed, we proposed an approximation based on the structure of the expression of the probability with respect to indistinguishability efficiency to show how partial distinguishability affects quantum supremacy. We have taken advantage of the physical nature of the indistinguishability, i.e. interference of photons which is the cause of the computationally hard complexity. Therefore for low indistinguishability, we only include contributions from a smaller number of interfering photons which takes exponentially less time. We note that for GBS with extremely high indistinguishability a direct calculation of the Hafnian is more efficient than the approximation method due to the additional overhead of incorporating the distinguishable photons. Numerically we showed how the computational time of our approximation for a given fidelity decreases exponentially with reduced indistinguishablity which indicates the relationship between partial distinguishablity and quantum supremacy.

While we have obtained the expression Eq. (35) for the exact calculation of the probability for GBS with threshold detectors, using this to find an approximation method is less easily constructed, unlike the case for PNR detectors. For the PNR detector case, the approximation Eq. (40) comes naturally from the expression Eq. (17) for exact sampling. In this sense, GBS with threshould detectors is advantageous than GBS with PNR detectors in the context of probability calculation due to the lack of an efficient approximation algorithm. Though For PNR detectors, our approximation method may be used to obtain the bound for indistinguishability efficiency for efficient simulation at a required fidelity and computational time. In our numerical studies we have found that the indistinguishability efficiency should be larger than 0.9, such that the computational requirements of the approximation method become costly. This is satisfied for the recent GBS experiments to date20,21,22,23.

Our model also provides a foundation for taking advantage of the partial distinguishability to reduce the computational time in classical simulation of GBS. We proposed two simulation algorithms for partially distinguishable GBS with both types of detectors based on Algorithm 1 and the algorithms of refs. 27,28. With imperfect indistinguishability, the computational time is exponentially reduced, depending on the number of photons (clicks) and the level of indistinguishability. Our result shows that roughly 1/ηind as many as photons (clicks) are needed in a experiment in comparison to a classical simulation. Therefore, in both a probability calculation and a classical simulation algorithm, our model shows that partial distinguishability can affect quantum supremacy in GBS.

Methods

Q-function covariance matrices and kernel matrices of all M + 1 modes

As shown in Eq. (16), the probability of obtaining a certain output pattern s from a Gaussian state requires the covariance matrix of the Q-function of the state, denoted as Q. It is needed for the value of its determinant and for constructing the kernel matrix A:

where Q is converted from the real covariance matrix V. From the relation between the covariance matrix of the light before entering the interferometer, namely Qin, and the light after the interferometer, namely Qout:

we could obtain similar relation for the kernel matrices:

Eq. (47) is very useful because it allows us to calculate the kernel matrices successively from one interferometer to the other without the need of calculating the inverse matrix for every covariance matrix being transformed. The only kernel matrix we need to directly calculate from the definition Eq. (45) is for the light emerging from the source, which is what are we will calculate in the next step for our partially distinguishable light.

First we obtain Qin for all M + 1 modes from Eq. (8) and Eq. (9):

where

Then we calculate Ain. By observing that

it can be found that

where the values of \({\alpha }_{i}^{\prime},{\alpha }_{d}^{\prime},{\beta }_{i}^{\prime},{\beta }_{d}^{\prime}\) can be calculated through Eqs. (57):

In the last step, we used Eq. (47) to obtain the kernel matrices after the propagation inside the interferometer:

where

We note that the subscripts “in” and “out” are only used in this section because in the main text we are only interested in the kernel matrices after the interferometer.

Derivation of Eq. 23

To obtain Eq. (23), firstly we need to calculate Haf(A(m)) where the form of A(m) is given by Eq. (65). Direct calculation is very difficult so we observe that A(m) can be decomposed into two matrices with no overlap:

Combined with the definition of Hafnian function we obtain

where we have

and H is a 2n × 2n matrix such that

With the definitions Eq. (66) and Eq. (67), it is easy to prove that

which can be used to reduce the calculation of Haf(A(m)) to the calculation of Haf(H):

The calculation of Haf(H) is non-trivial and we must resort to graph theory. As is well-known, the Hafnian is closely linked to weighted perfect matchings of a graph by the following definition for a 2n × 2n matrix:

which means we have to perfectly match 2n vertices to form one permutation in PMP(2n).

Regarding the definition of Hi,j, we can divide these 2n vertices into two sets: \({{{{\mathcal{N}}}}}_{1}=\) [1, n] and \({{{{\mathcal{N}}}}}_{1}=[n+1,2n]\). A match inside \({{{{\mathcal{N}}}}}_{1}\) or \({{{{\mathcal{N}}}}}_{2}\) corresponds to the weight \({\beta }_{{{{\rm{d}}}}}^{\prime}\) and the match between \({{{{\mathcal{N}}}}}_{1}\) and \({{{{\mathcal{N}}}}}_{2}\) corresponds to the weight \({\alpha }_{{{{\rm{d}}}}}^{\prime}\). For a given permutation, if we denote the number of matches inside \({{{{\mathcal{N}}}}}_{1}\) or \({{{{\mathcal{N}}}}}_{2}\) as q, the number of matches between \({{{{\mathcal{N}}}}}_{1}\) and \({{{{\mathcal{N}}}}}_{2}\) is consequently n − q. Note the value of q is always even because a match in \({{{{\mathcal{N}}}}}_{1}\) inevitably leads to a match in \({{{{\mathcal{N}}}}}_{2}\). Now we can rewrite Haf(H) as a summation respect to q:

where \(q\in \{0,2,...,2\lfloor \frac{n}{2}\rfloor \}\).

The final step is to obtain the expression of fq. Firstly, we regroup the vertices in \({{{{\mathcal{N}}}}}_{1}\) into two sets: \({{{{\mathcal{N}}}}}_{1,{{{\rm{in}}}}}\) contains the vertices matching vertices inside \({{{{\mathcal{N}}}}}_{1}\); \({{{{\mathcal{N}}}}}_{1,{{{\rm{out}}}}}\) contains the vertices matching vertices in \({{{{\mathcal{N}}}}}_{2}\). We do the same thing for vertices in \({{{{\mathcal{N}}}}}_{2}\) such that in total there are \({\left(\frac{n!}{q!(n-q)!}\right)}^{2}\) configurations for this process.

Secondly, we count the number of perfect matches for vertices in \({{{{\mathcal{N}}}}}_{1,{{{\rm{in}}}}}\) and \({{{{\mathcal{N}}}}}_{2,{{{\rm{in}}}}}\). Since each one contributes ∣ PMP(q) ∣ = (q−1)!! permutations, in total there are ((q−1)!!)2 configurations in this process.

Thirdly, we count the number of matches between vertices in \({{{{\mathcal{N}}}}}_{1,{{{\rm{out}}}}}\) and \({{{{\mathcal{N}}}}}_{2,{{{\rm{out}}}}}\). Since there are n − q vertices in each set, we can straightforwardly obtain the number of configurations to be (n − q)!.

Combining them we obtain the closed-form expression of fq:

It is easy to prove that ∑qfq = (2n − 1)!!, which verifies the correctness of the expression.

Thus we obtain the analytical result for Haf(A(m)) and hence Eq. (23) where we let G(N) = Haf(H).

Proof of Eq. 38

This section proves that for a covariance matrix Σ of size 2K × 2K, \({{{\mathcal{U}}}}=[1,K]\), \({{{\mathcal{R}}}}\) is an arbitrary subset of \({{{\mathcal{U}}}}\), \({{{{\mathcal{R}}}}}^{c}\) is the relative complement set of \({{{\mathcal{R}}}}\) respect to \({{{\mathcal{U}}}}\), we will always have:

Proof: First, we regroup the matrix Σ as \(\left[\begin{array}{cc}{{{\bf{A}}}}&{{{\bf{B}}}}\\ {{{\bf{C}}}}&{{{\bf{D}}}}\end{array}\right]\) by moving rows and columns so that A includes the indices from \({{{{\mathcal{R}}}}}^{c}\) and B includes the indices from \({{{\mathcal{R}}}}\). Note the sign of the determinant will not be changed because the interchange of rows (columns) are always carried out an even number of times therefore we have

Next, we use the Schur complement

to obtain

Then we calculate the inverse matrix of \(\left[\begin{array}{cc}{{{\bf{A}}}}&{{{\bf{B}}}}\\ {{{\bf{C}}}}&{{{\bf{D}}}}\end{array}\right]\)to be \(\left[\begin{array}{cc}{({{{\bf{A}}}}-{{{\bf{B}}}}{{{{\bf{D}}}}}^{-1}{{{\bf{C}}}})}^{-1}&-{{{{\bf{A}}}}}^{-1}{{{\bf{B}}}}{({{{\bf{A}}}}-{{{\bf{B}}}}{{{{\bf{D}}}}}^{-1}{{{\bf{C}}}})}^{-1}\\ -{{{{\bf{D}}}}}^{-1}{{{\bf{C}}}}{({{{\bf{D}}}}-{{{\bf{C}}}}{{{{\bf{A}}}}}^{-1}{{{\bf{B}}}})}^{-1}&{({{{\bf{D}}}}-{{{\bf{C}}}}{{{{\bf{A}}}}}^{-1}{{{\bf{B}}}})}^{-1}\end{array}\right].\) Therefore

such that we obtain another equation of \(\det ({{{\bf{A}}}}-{{{\bf{B}}}}{{{{\bf{D}}}}}^{-1}{{{\bf{C}}}})\)

Combining Eq. (80) and Eq. (82) we obtain Eq. (77).

Calculation of marginal probability of distinguishable photons

This section calculates the marginal probability of given ports according to Eq. (37). Basically, that amounts to calculating the determinant of a selected covariance matrix \({{{{\bf{Q}}}}}_{{{{\mathcal{R}}}}}^{(m)}\) whose rows and columns are selected from Q(m) according to the ports listed in set \({{{\mathcal{R}}}}\). Without loss of generality, we presume there are n elements in \({{{\mathcal{R}}}}\) and we denote its ith element as Ri.

First we need to calculate Q(m) which is \({{{{\bf{Q}}}}}_{{{{\rm{out}}}}}^{(m)}\) obtained by putting Eq. (49) into Eq. (46):

Therefore, \({{{{\bf{Q}}}}}_{{{{\mathcal{R}}}}}^{(m)}\) can be written as a single matrix:

where \({\gamma }_{i}=1/| {T}_{{R}_{i},m}{| }^{2}\). Now we take all the coefficients and form another matrix C:

Then we calculate its determinant by resorting to the definition of determinant

where \({{{\mathcal{C}}}}=\mathop{\prod }\nolimits_{j = 1}^{n}{\gamma }_{j}\). Then we find that

where \({{{\mathcal{T}}}}=\mathop{\sum }\nolimits_{j = 1}^{n}1/{\gamma }_{j}\). Consequently we have

Data availability

All data supporting the findings of this study are available upon request.

Code availability

The code used to generate the numerical results presented in this paper can be made available upon reasonable request.

References

Aaronson, S. & Arkhipov, A. The computational complexity of linear optics. Theory Comput. 9, 143–252 (2013).

Arute, F. et al. Quantum supremacy using a programmable superconducting processor. Nature 574, 505–510 (2019).

Pednault, E., Gunnels, J. A., Nannicini, G., Horesh, L. & Wisnieff, R. Leveraging secondary storage to simulate deep 54-qubit sycamore circuits. Reprint at: https://arXiv.org/abs/1910.09534 (2019)

Pan, F. & Zhang, P. Simulating the Sycamore quantum supremacy circuits. Reprint at: https://arXiv.org/abs/2103.03074 (2021).

Valiant, L. The complexity of computing the permanent. Theor. Comput. Sci. 8, 189–201 (1979).

Scheel, S. Permanents in linear optical networks. Reprint at: https://arXiv.org/abs/quant-ph/0406127 (2004).

Neville, A. et al. Classical boson sampling algorithms with superior performance to near-term experiments. Nat. Phys. 13, 1153–1157 (2017).

Wang, H. et al. High-efficiency multiphoton boson sampling. Nat. Photon. 11, 361–365 (2017).

He, Y. et al. Time-bin-encoded boson sampling with a single-photon device. Phys. Rev. Lett. 118, 190501 (2017).

Loredo, J. et al. Boson sampling with single-photon fock states from a bright solid-state source. Phys. Rev. Lett. 118, 130503 (2017).

Wang, H. et al. Boson sampling with 20 input photons and a 60-mode interferometer in a 1014-dimensional Hilbert space. Phys. Rev. Lett. 123, 250503 (2019).

Lund, A. P. et al. Boson sampling from a Gaussian state. Phys. Rev. Lett. 113, 100502 (2014).

Barkhofen, S. et al. Driven Boson sampling. Phys. Rev. Lett. 118, 020502 (2017).

You, C. et al. Multiparameter estimation with single photons–linearly-optically generated quantum entanglement beats the shotnoise limit. J. Opt. 19, 124002 (2017).

Hamilton, C. S. et al. Gaussian Boson sampling. Phys. Rev. Lett. 119, 170501 (2017).

Kruse, R. et al. Detailed study of Gaussian boson sampling. Phys. Rev. A 100, 032326 (2019).

Caianiello, E. R.Combinatorics and renormalization in quantum field theory (W. A. Benjamin, Inc, 1973).

Arrazola, J. M. & Bromley, T. R. Using Gaussian boson sampling to find dense subgraphs. Phys. Rev. Lett. 121, 030503 (2018).

Huh, J., Guerreschi, G. G., Peropadre, B., McClean, J. R. & Aspuru-Guzik, A. Boson sampling for molecular vibronic spectra. Nat. Photon. 9, 615–620 (2015).

Zhong, H.-S. et al. Experimental Gaussian Boson sampling. Sci. Bull. 64, 511–515 (2019).

Paesani, S. et al. Generation and sampling of quantum states of light in a silicon chip. Nat. Phys. 15, 925–929 (2019).

Zhong, H.-S. et al. Quantum computational advantage using photons. Science 370, 1460–1463 (2020).

Zhong, H.-S. et al. Phase-programmable Gaussian Boson sampling using stimulated squeezed light. Phys. Rev. Lett. 127, 180502 (2021).

Quesada, N., Arrazola, J. M. & Killoran, N. Gaussian boson sampling using threshold detectors. Phys. Rev. A 98, 062322 (2018).

Li, Y. et al. Benchmarking 50-photon Gaussian Boson sampling on the sunway TaihuLight. IEEE Trans. Parallel Distrib. Syst. 33, 1357–1372 (2021).

Björklund, A., Gupt, B. & Quesada, N. A faster Hafnian formula for complex matrices and its benchmarking on a supercomputer. J. Exp. Algorithmics. 24, 1–17 (2019).

Quesada, N. et al. Quadratic speed-up for simulating gaussian boson sampling. PRX Quantum 3, 010306 (2022).

Bulmer, J. F. F. et al. The boundary for quantum advantage in gaussian boson sampling. Science Advances 8, eabl9236 (2022).

Rahimi-Keshari, S., Ralph, T. C. & Caves, C. M. Sufficient conditions for efficient classical simulation of quantum optics. Phys. Rev. X 6, 021039 (2016).

Oszmaniec, M. & Brod, D. J. Classical simulation of photonic linear optics with lost particles. New J. Phys. 20, 092002 (2018).

Shchesnovich, V. S. Noise in boson sampling and the threshold of efficient classical simulatability. Phys. Rev. A 100, 012340 (2019).

Rohde, P. P. Optical quantum computing with photons of arbitrarily low fidelity and purity. Phys. Rev. A 86, 052321 (2012).

Tichy, M. C., Mayer, K., Buchleitner, A. & Mølmer, K. Stringent and efficient assessment of boson-sampling devices. Phys. Rev. Lett. 113, 020502 (2014).

Shchesnovich, V. S. Partial indistinguishability theory for multiphoton experiments in multiport devices. Phys. Rev. A 91, 013844 (2015).

Tichy, M. C. Sampling of partially distinguishable bosons and the relation to the multidimensional permanent. Phys. Rev. A 91, 022316 (2015).

Renema, J. J. et al. Efficient classical algorithm for Boson sampling with partially distinguishable photons. Phys. Rev. Lett. 120, 220502 (2018).

Qi, H., Brod, D. J., Quesada, N. & García-Patrón, R. Regimes of classical simulability for noisy Gaussian Boson sampling. Phys. Rev. Lett. 124, 100502 (2020).

Renema, J. J. Simulability of partially distinguishable superposition and Gaussian boson sampling. Phys. Rev. A 101, 063840 (2020).

Thomas, O. F., McCutcheon, W. & McCutcheon, D. P. S. A general framework for multimode Gaussian quantum optics and photo-detection: application to Hong-Ou-Mandel interference with filtered heralded single photon sources. APL Photonics 6, 040801 (2021).

Aaronson, S. & Arkhipov, A. Boson sampling is far from uniform. Quantum Inf. Comput. 14, 1383–1423 (2014).

Ferraro, A., Olivares, S. & Paris, M. G. A. Gaussian states in continuous variable quantum information. Reprint at: https://arXiv.org/abs/quant-ph/0503237 (2005).

Weedbrook, C. et al. Gaussian quantum information. Rev. Mod. Phys. 84, 621–669 (2012).

Barnett, S. & Radmore, P. Methods in theoretical quantum optics, vol. 15 (Oxford University Press, 2002).

Gupt, B., Izaac, J. & Quesada, N. The walrus: a library for the calculation of hafnians, hermite polynomials and gaussian boson sampling. J. Open Source Softw. 4, 1705 (2019).

Acknowledgements

We acknowledge The Walrus Python library as a helpful reference on hafnian algorithms44. This work is supported by the National Natural Science Foundation of China (62071301); NYU-ECNU Institute of Physics at NYU Shanghai; the Joint Physics Research Institute Challenge Grant; the Science and Technology Commission of Shanghai Municipality (19XD1423000, 22ZR1444600); the China Foreign Experts Program (G2021013002L); the NYU Shanghai Boost Fund; NYU Shanghai Major-Grants Seed Fund.

Author information

Authors and Affiliations

Contributions

J.S. performed the calculations and developed the theory. T.B. conceived the work and supervised the project. Both authors wrote the paper.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Shi, J., Byrnes, T. Effect of partial distinguishability on quantum supremacy in Gaussian Boson sampling. npj Quantum Inf 8, 54 (2022). https://doi.org/10.1038/s41534-022-00557-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41534-022-00557-9