Abstract

Numerous lines of research suggest that communicative dyadic actions elicit preferential processing and more accurate detection compared to similar but individual actions. However, it is unclear whether the presence of the second agent provides additional cues that allow for more accurate discriminability between communicative and individual intentions or whether it lowers the threshold for perceiving third-party encounters as interactive. We performed a series of studies comparing the recognition of communicative actions from single and dyadic displays in healthy individuals. A decreased response threshold for communicative actions was observed for dyadic vs. single-agent animations across all three studies, providing evidence for the dyadic communicative bias. Furthermore, consistent with the facilitated recognition hypothesis, congruent response to a communicative gesture increased the ability to accurately interpret the actions. In line with dual-process theory, we propose that both mechanisms may be perceived as complementary rather than competitive and affect different stages of stimuli processing.

Introduction

The ability to build a representative model of the surrounding environment is a key function of the human brain (Fletcher & Frith, 2009). Given the importance and complexity of social interactions and the increased salience of social signals, it has been proposed that social information modifies both bottom-up processing of sensory evidence and top-down expectancies (Brown & Brüne, 2012). While many lines of social cognition research have focused on the ability to read others’ intentions from their faces (Hugenberg & Wilson, 2013), it has been suggested that the capacity to accurately infer others’ intentions from their body movements lies at the core of human communication (Yovel & O’Toole, 2016)—misreading intentions when the other agent is not close enough to observe their face could have dire consequences on survival. Thus, it is believed that communicative actions (COM) of other people are the primary source of interpersonal information when the face is obscured and are crucial for adequately interpreting and engaging in social encounters (Centelles et al., 2013; Puglia & Morris, 2017). COM can be defined as body motions intended to convey specific information to the observer, e.g., greeting someone by hand-waving or drawing attention to something by pointing one’s finger at it. It has been proposed that these types of actions are processed differently than individual actions (IND), i.e., body motions without communicative intentions, such as using tools or exercising (Ding et al., 2017; Redcay & Carlson, 2015).

In line with this notion, communicative gestures are easily recognized from motion kinematics by healthy individuals (Becchio et al., 2012), even after dramatically obscuring the availability of visual information using point-light motion displays (Zaini et al., 2013). High proficiency at detecting communicative interactions in third-party encounters (Manera et al., 2016) is associated with widespread activity of the main social brain networks (Centelles et al., 2013). Neuroimaging studies have also documented that detection of communicative intentions elicits activation of both mentalizing and action observation networks, as well as increased coupling between them (Ciaramidaro et al., 2014; Trujillo et al., 2020), and may be observed as rapidly as 100 ms after the presentation of an agent (Redcay & Carlson, 2015).

These robustly replicated effects have been linked to semantic coupling within a dyad, i.e., the communicative gestures of one agent carry significant information about the expected response of the other (Manera, Becchio, et al., 2011). In line with this suggestion, which can be framed as the facilitated recognition hypothesis, the communicative gesture of one agent has been shown to increase the accuracy of detecting a masked agent responding to the gesture in a stereotypical and time-congruent way (Manera et al., 2013; Manera, Becchio, et al., 2011). Manera, Becchio, et al. (2011) showed that, for example, observing a hand gesture of an agent who invites someone to sit facilitates spotting an agent who sits down and is masked with additional noise-dots. Furthermore, dyadic social interactions were found to be stored in the working memory as a single chunk of information (Ding et al., 2017). It has been proposed that dyadic social interactions are bounded in unified representations providing an “initial perceptual framework” (Fedorov et al., 2018; Vestner et al., 2019), and thus the additional cues derived from temporal and behavioral congruence between observed individuals allow for increased sensitivity to COM presented in a dyadic context. This phenomenon, termed interpersonal predictive coding, is believed to stem from coupling between social perception and action observation networks and top-down expectations of specific responses to communicative gestures that shape bottom-up perceptual processes (Manera, Becchio, et al., 2011).

It is also possible that, as in the case of other salient signals, the mere presence of two agents alters perceptual thresholds due to the importance of communicative signals. The very presence of a potential interaction partner may elicit communication bias, i.e., a tendency to infer communicative intentions from observed dyadic displays. In line with this hypothesis, it has been previously shown that observing the communicative gesture of one agent may prompt the perception of a second agent, even when the second agent is not present (Manera, Becchio, et al., 2011). Dyadic displays suggesting typical interaction patterns (i.e., two faces or silhouettes facing each other) were found to elicit more spontaneous attention in both adults (Roché et al., 2013) and preverbal children (Augusti et al., 2010). Abassi and Papeo (2020) demonstrated that body-selective visual cortex (extrastriate body area; EBA) responds more strongly to face-to-face bodies than identical bodies presented back-to-back and that the basic function of the EBA is enhanced when a single body is presented in the context of another facing body. These findings provide initial evidence that observing two agents (whose spatial proximity and mutual accessibility enable communicative interaction) may automatically alter expectations about potential interactions.

These two hypotheses, namely facilitated recognition and dyadic communicative bias, offer different perspectives on the crucial mechanisms underlying preferential processing and the accuracy of detecting COM; the former highlights the importance of additional information provided by congruent responses, while the latter focuses on the initial expectations elicited by the context itself. However, whether the presence of a second agent enables more accurate discriminability between communicative and individual intentions or if it biases viewers to perceive third-party encounters as interactive has not been directly investigated. While the available literature supports the facilitated recognition hypothesis (e.g., only time-locked responses of the other agent facilitate recognition of communicative interactions; Manera et al., 2013), to our knowledge, no previous study has examined whether the second agent changes the response criterion by increasing the likelihood of reporting COM. As discussed by Stanislaw and Todorov (1999), separating the effects of the response criterion and sensitivity on hit rates and false alarms is crucial for accurately grasping the processes underlying classification and decision-making. Thus, the current study uses a signal detection theory (SDT) framework to investigate the implicit processes associated with either facilitated recognition or dyadic communicative bias based on the pattern of explicit responses during a COM detection task.

Pilot Study

Method

To explore the hypothesized distinct effects of response bias and sensitivity on detecting COM, we retrospectively pooled data from previous projects performed in our lab. Each project utilized two tasks examining detection of communicative intentions from single (Gestures Task; Jaywant et al., 2016) or dyadic (Communicative Interaction Database – 5 Alternative Forced Choice; Manera et al., 2016) point-light walkers, which are described below. The point-light displays (PLD) technique was used to limit the availability of visual information about the presented person. Initially proposed by Johansson (1973), PLD provides an effective way to convey salient social information, such as the communicative or individual meaning of an action, in the absence of peripheral visual cues (Manera et al., 2010). The tasks were designed to measure two levels of action recognition: simple action classification (communicative vs. individual) and action interpretation (interpretation of the specific content of the presented actions). However, due to the large discrepancy between the format of action interpretation (5-alternative choice vs. verbal description), only action classification scores are included in the current analysis as they were collected in a similar manner.

The sample consisted of 284 participants self-reporting no neurological or psychiatric diagnoses. All participants were below the age of 50, as the processing of PLDs may be impaired in older adults (Billino et al., 2008). Each participant provided informed consent prior to participation and was reimbursed upon completion of the study. All study protocols were approved by the Ethics Committee of the Institute of Psychology, Polish Academy of Sciences.

The study took place at the Institute of Psychology in Warsaw. The tasks were presented in a counterbalanced order: 147 participants completed the CID-5 task first, while 126 participants completed the Gestures task first.

Eleven participants were excluded from further analysis due to outlying scores (defined as COM or IND classification accuracy scores more than three standard deviations (SD) from the mean). Thus, the final sample consisted of 273 participants (126 males; age: M = 26.6, SD = 6.9). Requests for the data or materials used in all the studies can be sent via email to the corresponding author. None of the studies reported in this article was preregistered.

Communicative Interaction Database – 5AFC Format (CID-5)

The task was originally created by Manera et al. (2016). It consists of 14 vignettes depicting two point-light agents interacting with each other and seven control vignettes presenting two point-light agents performing activities independently. Each point-light agent had 13 markers indicating head, shoulders, elbows, wrists, hips, knees, and feet.

Every animation was presented twice, with a separation cross between both displays. The duration of each animation varied between 2.5 and 8 s, depending on the action presented. The second presentation was followed by two questions. First, participants were asked to indicate whether the vignette had depicted an interaction (COM) or independent actions (IND). After the first question, five response alternatives were presented, which included the correct action description and four incorrect response alternatives (two COM and two IND). Participants were asked to choose the correct description of the animation.

More detailed information about the stimuli and the procedure can be found in Table 1 and in Manera et al. (2016). The task was validated in a Polish sample and effectively utilized in previous studies carried out on clinical populations (Bala et al., 2018; Okruszek et al., 2018).

Gestures from BioMotion

The task consisted of 26 animations derived from a set provided by Zaini et al. (2013). Each animation depicted a single point-light agent made of 13 markers indicating head, shoulders, elbows, wrists, hips, knees, feet, and, additionally, 10 markers for finger joints of both hands. Thirteen animations presented an agent performing a communicative gesture and 13 presented an agent performing an object-oriented gesture. A similar task was previously utilized in Parkinson's disease research (Jaywant et al., 2016). A list of the stimuli for each category is presented in Table 1.

The stimuli ranged in duration from 2 to 5.5 s. Each display was presented twice and followed by a question, regarding whether the agent was communicating something or privately using an object. Participants were instructed to press the assigned key depending on their answer and were awarded one point for each stimulus they correctly identified as communicative or non-communicative.

After choosing a response, participants were asked to verbally describe the content of the presented animation, which was recorded by the examiner. Subsequently, the responses were rated by two independent judges to see how well they matched the correct responses given in Table 2. There was 95% scoring agreement for communicative actions and 96% for individual actions.

Statistical Analysis

To examine response bias and discriminability between the signal (COM) and the noise (IND), two SDT parameters were extracted for the pilot study and the subsequent studies: criterion and sensitivity scores. The criterion parameter is a measure of the bias—a higher value suggests a more conservative response threshold, or a tendency to classify stimuli as noise, whereas lower values indicate a more liberal threshold, or a tendency to classify stimuli as the signal. Criterion equal to zero indicates no bias in any direction. The sensitivity parameter measures the ability to differentiate between the signal and the noise, and higher values reflect better discriminability.

Both parameters were calculated with IBM SPSS Statistics 26 using a syntax proposed by Stanislaw and Todorov (1999), i.e., criterion = −(PROBIT(H) + PROBIT(F))/2 and sensitivity = PROBIT(H) − PROBIT(F), where H and F stand for hit rate and false-alarm rate, respectively. Accuracy for COM animations during the detection task was used as the hit rate, while the false-alarm rate was calculated as 1 − accuracy for IND. Moreover, following the recommendations of Macmillan and Creelman (2004), proportions of 0 and 1 were replaced with 1/(2N) and 1 − 1/(2N), respectively, where N is the number of trials on which the proportion is based.
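The calculation described above can be sketched in Python as follows. This is a minimal illustration, not the SPSS syntax used in the study: the function name `sdt_parameters`, its arguments, and the example counts are hypothetical, and the standard-library `NormalDist().inv_cdf` stands in for PROBIT.

```python
from statistics import NormalDist


def sdt_parameters(hits, n_signal, false_alarms, n_noise):
    """Return (criterion, sensitivity) from raw response counts.

    hits:          COM trials classified as COM
    false_alarms:  IND trials classified as COM
    Rates of exactly 0 or 1 are replaced with 1/(2N) and 1 - 1/(2N).
    """
    def corrected_rate(count, n):
        rate = count / n
        if rate == 0:
            return 1 / (2 * n)
        if rate == 1:
            return 1 - 1 / (2 * n)
        return rate

    probit = NormalDist().inv_cdf  # inverse of the standard normal CDF
    z_h = probit(corrected_rate(hits, n_signal))
    z_f = probit(corrected_rate(false_alarms, n_noise))
    criterion = -(z_h + z_f) / 2
    sensitivity = z_h - z_f
    return criterion, sensitivity


# Hypothetical participant: 13 of 14 COM vignettes and 1 of 7 IND vignettes
# classified as communicative.
c, d = sdt_parameters(13, 14, 1, 7)
print(f"criterion = {c:.2f}, sensitivity = {d:.2f}")
```

A negative criterion in this sketch corresponds to a liberal threshold (a tendency to respond COM), and a criterion of zero arises when the hit rate and the false-alarm rate are symmetric around 0.5.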

In order to investigate whether facilitated recognition and dyadic communicative bias were observed during the processing of COM from dyadic displays, we compared SDT parameters associated with sensitivity for COM vs. non-COM actions (facilitated recognition) and response criterion (dyadic communicative bias) observed for the detection of COM actions from single and dyadic PLDs. Facilitated recognition should be linked to increased sensitivity when detecting COM from dyadic vs. single-agent displays, whereas dyadic communicative bias should be linked to a lower response criterion during dyadic vs. single-agent tasks, as it signals a bias towards classifying ambiguous stimuli as the signal.

In order to examine differences in SDT parameters between the tasks, two paired t-tests were used to compare the criterion and sensitivity scores. One-sample t-tests were used to examine response bias (i.e., criterion significantly different from zero).

Additionally, two mixed ANOVAs with criterion or sensitivity parameters as a within-subject factor and order of tasks as a between-subject factor were used in order to assess whether the order of displays (single-agent first vs dyadic first) could have an impact on participants’ scores.

Results

Mean hit and false-alarm rates for dyadic displays were 92% (SD = 7%) and 16% (SD = 13%) respectively, and 89% (SD = 9%) and 10% (SD = 13%) for single-agent displays (the values were based on the accuracy scores corrected in accordance with Macmillan & Creelman, 2004 recommendations).

Criterion differed from zero significantly in both single-agent (M = 0.05; SD = 0.33) and dyadic displays (M = −0.19; SD = 0.32), suggesting bias in participants’ responses, single-agent: t(272) = 2.78, p < 0.01; dyadic: t(272) = −9.77, p < 0.001. Criterion was found to be significantly lower for dyadic displays than for single-agent displays: t(272) = 8.49, p < 0.001, d = 0.514, 95% CI [0.19, 0.30].

Sensitivity was significantly lower for dyadic displays (M = 2.58; SD = 0.55) than for single-agent displays (M = 2.77; SD = 0.66): t(272) = 3.63, p < 0.001, d = 0.220, 95% CI [0.08, 0.28].

Additional analyses revealed no effects of order of the task for criterion, F(1, 271) < 0.001, p = 0.991, η2 < 0.001, or sensitivity, F(1, 271) < 0.001, p = 0.957, η2 < 0.001. Interaction effects were also found not significant, criterion: F(1, 271) = 0.87, p = 0.350, η2 = 0.003; sensitivity: F(1, 271) = 1.27, p = 0.261, η2 = 0.005. The results are presented in Fig. 1.
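As a quick arithmetic check, the effect sizes reported for the paired comparisons are consistent with the convention d = |t| / √n (often written d_z). The article does not state which formula was used, so this is an assumption, and the helper name `cohens_dz_from_t` is hypothetical.

```python
from math import sqrt


def cohens_dz_from_t(t_value, n_pairs):
    """Paired-design effect size, assuming d_z = |t| / sqrt(n)."""
    return abs(t_value) / sqrt(n_pairs)


# t values reported above for the pilot study (n = 273 participants).
print(round(cohens_dz_from_t(8.49, 273), 3))  # criterion comparison
print(round(cohens_dz_from_t(3.63, 273), 3))  # sensitivity comparison
```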

Discussion

The results of the pilot study support the dyadic communicative bias hypothesis, given the finding of a significantly lower response criterion for two-agent vs. single-agent displays. Interestingly, sensitivity to COM was lower for dyadic vs. single-agent presentations, which is contrary to the facilitated recognition hypothesis.

These results are limited by several factors. The PLDs used in this study came from two available datasets (Jaywant et al., 2016; Manera et al., 2016) that differ in their basic visual characteristics, such as the number of markers (additional markers for hands in single-agent displays), perspective (second-person perspective in single-agent displays and third-person perspective in dyadic displays), and proportion of IND and COM displays (2:1 ratio for COM and IND in the CID-5 task). Thus, the increased sensitivity in single-agent displays might be associated with the additional information provided by the extra markers, while the decreased response criterion in dyadic displays could arise from the higher probability of encountering COM than IND.

To address these methodological limitations, a follow-up experiment using modified dyadic displays was designed to investigate the replicability of the main findings.

Study 1

Method

To address the limitations of the pilot study, we modified the CID-5 task to create CID-Removed (CID-R). In CID-R, the side of the original stimuli that entailed the second agent response was masked to limit the visibility of the cues solely to the first agent actions. Participants were asked to assess whether the agent performed an individual gesture or communicated something to another person, invisible on the display. One animation was excluded due to visual overlap of the presented agents, resulting in 13 COM and 7 IND displays. Thus, the same set of first agent actions was used in the single (CID-R) and dyadic (CID-5) versions of the task. In each trial, the PLDs were presented twice. Upon the second presentation, participants were asked to distinguish whether the presented action was COM or IND. Participants were then asked to choose which of five descriptions best matched the presented animation. For CID-R, the original descriptions from CID-5 were modified by omitting the description of the second agent response (an example can be found in Table 3). The response format was the same for both tasks, which allowed for an unbiased comparison of the scores from each version of the task. A between-subject design was chosen due to the fact that the single- and two-agent displays consisted of the same actions, and thus showing both sets to participants would strongly influence their recognition of the repeated stimuli and not allow for counterbalancing.

A sample of 80 participants (19–46 years old, with no self-reported history of neurological or psychiatric diagnoses) was recruited for Study 1. One participant was excluded due to highly outlying scores (sensitivity < − 1, 0% accuracy in single-agent IND detection), thus the final sample consisted of 79 participants of which 39 completed CID-5 (14 males, age: M = 24.97, SD = 5) and 40 completed CID-R (18 males, age: M = 24.3, SD = 5.66). There was no difference in gender, Χ2(1) = 0.68, p = 0.41, or age, t(77) = 0.56, p = 0.576, between the two groups.

We originally planned to gather a sample of 96 participants, as calculated using G*Power software (Faul et al., 2007) for a one-tailed comparison of two independent means, given effect size d = 0.514 (based on Cohen’s d for criterion scores difference in the pilot study) and power = 0.80. However, due to the COVID-19 pandemic, we had to suspend examination of participants. For a one-tailed comparison of two independent means with power = 0.80, the sample size of 79 should be sufficient to detect an effect size d = 0.564, which falls a little above the effect size observed in the pilot study. As Study 1 was not an exact replication of the pilot study and thus the effect size for a between-subject design could differ, we decided to analyze the gathered data.
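The sample-size reasoning above can be approximated with a short sketch. It uses the normal approximation rather than the exact noncentral-t computation performed by G*Power, so its results should land slightly below the reported figures (96 participants and d = 0.564). The significance level of 0.05 is an assumption (it is not stated in the text), and the function names are hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist

Z = NormalDist().inv_cdf  # standard normal quantile function


def n_per_group(d, alpha=0.05, power=0.80):
    """Approximate per-group n for a one-tailed two-sample comparison.

    Normal approximation: n = 2 * ((z_alpha + z_power) / d) ** 2.
    """
    return ceil(2 * ((Z(1 - alpha) + Z(power)) / d) ** 2)


def detectable_d(n1, n2, alpha=0.05, power=0.80):
    """Approximate smallest effect size detectable with group sizes n1 and n2."""
    return (Z(1 - alpha) + Z(power)) * sqrt(1 / n1 + 1 / n2)


# Planned total sample for the pilot-study effect size.
print(2 * n_per_group(0.514))
# Sensitivity of the achieved sample (39 and 40 participants per group).
print(round(detectable_d(39, 40), 3))
```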

Statistical Analysis

Two independent-sample t-tests were used to compare the criterion and sensitivity scores between CID-R and CID-5 tasks. Moreover, mixed ANOVA was used to measure the accuracy of interpretation of given actions. The type of action (COM vs. IND) was used as a within-subject factor, and the task (CID-5 vs. CID-R) as a between-subject factor.

Results

SDT Parameters

Mean hit and false-alarm rates for CID-5 were 89% (SD = 13%) and 34% (SD = 29%) respectively, and 62% (SD = 14%) and 12% (SD = 8%) for CID-R (values were corrected as in the pilot study).

Similarly to the pilot study, criterion differed from zero both for CID-R (M = 0.45; SD = 0.27) and CID-5 (M = − 0.48; SD = 0.58) conditions: CID-R: t(39) = 10.416, p < 0.001; CID-5: t(38) = − 5.08, p < 0.001. Criterion was found to be significantly lower in CID-5 condition than in CID-R condition: t(77) = − 9, p < 0.001, d = 2.034, 95% CI [− 1.14, − 0.72].

Sensitivity did not differ significantly between the CID-5 (M = 1.9; SD = 1) and CID-R (M = 1.57; SD = 0.54): t(77) = 1.79, p = 0.079, d = 0.403, 95% CI [0.18, − 0.04]. The results are illustrated in Fig. 2.
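For the between-subject comparisons, the reported effect sizes appear consistent with the standard conversion d = |t| · √(1/n1 + 1/n2). This is an inference from the reported numbers rather than something the article states, and the helper name `cohens_d_from_t` is hypothetical.

```python
from math import sqrt


def cohens_d_from_t(t_value, n1, n2):
    """Independent-samples effect size, assuming d = |t| * sqrt(1/n1 + 1/n2)."""
    return abs(t_value) * sqrt(1 / n1 + 1 / n2)


# Sensitivity comparison: t(77) = 1.79 with 39 (CID-5) and 40 (CID-R) participants.
print(round(cohens_d_from_t(1.79, 39, 40), 3))
```

Applying the same conversion to the rounded criterion statistic (t = −9) gives a value close to, but not exactly, the reported d = 2.034, which is what one would expect if the published t value was rounded.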

Action Interpretation

There was a significant main effect of action type, F(1, 77) = 164.03, p < 0.001, η2 = 0.681, with IND (M = 0.76; SD = 0.2) being interpreted more accurately than COM (M = 0.52; SD = 0.24). The main effect of task was also significant, F(1, 77) = 8.77, p < 0.010, η2 = 0.102, such that participants in the CID-5 group (M = 0.69; SD = 0.17) were more successful at identifying specific actions than participants in the CID-R group (M = 0.52; SD = 0.13).

The interaction effect between type of action and task was significant, F(1, 77) = 166.54, p < 0.001, η2 = 0.684. Bonferroni-corrected post-hoc comparisons showed that COM actions were more successfully recognized in the CID-5 group (M = 0.69; SD = 0.17) than the CID-R group (M = 0.35; SD = 0.15; 95% CI [0.27, 0.42], p < 0.001), while the reverse pattern was present for IND actions, with more successful recognition in the CID-R group (M = 0.83; SD = 0.14) than the CID-5 group (M = 0.69; SD = 0.22; 95% CI [0.06, 0.22], p = 0.001). The results are illustrated in Fig. 3.

Discussion

Again, in line with the dyadic communicative bias hypothesis, participants showed more liberal decision thresholds for classifying actions as COM in the CID-5 task than in the CID-R task. Although the SDT analyses do not support the facilitated recognition hypothesis, the presentation of dyadic displays increased recognition of specific COM actions but decreased recognition of IND actions.

Several limitations of this study should be pointed out: the unequal number of IND and COM trials, significant variance in animation length (2.5–8 s), and the between-subject design preclude inferences about whether the observed differences can be attributed solely to dyadic vs. single-agent presentation or to between-subject individual differences. To address these issues, we conducted a third study employing a novel set of PLDs with a counterbalanced, within-subject design.

Study 2

Method

Thirty-seven participants (20–49 years old, with no self-reported history of neurological or psychiatric diagnoses) participated in Study 2. The minimum sample size of 32 was determined using G*Power software (Faul et al., 2007) for a comparison of two dependent means, given effect size d = 0.514 (based on the pilot study) and power = 0.80.

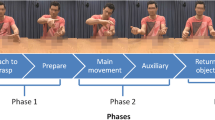

The experimental stimuli were prepared using the Social Perception and Interaction Database (Okruszek & Chrustowicz, 2020). The database consists of a set of PLDs created to facilitate examination of COM and IND from single-agent and dyadic animations. All of the actions recorded for the database can be freely combined, enabling the generation of well-matched vignettes. The database has been validated in two studies involving samples of healthy participants (Okruszek & Chrustowicz, 2020).

The stimuli prepared for this study comprised 20 vignettes divided into 4 categories (single-agent COM, dyadic COM, single-agent IND, dyadic IND). The length of the stimuli ranged from 3 to 4.5 s. We chose 10 COM and 10 IND vignettes and split them into 2 counterbalanced versions of the task that were presented to 2 groups of participants (version A: N = 19, 10 males, age: M = 27.89, SD = 7.26; version B: N = 18, 5 males, age: M = 29.28, SD = 7.59). No differences in age, t(36) = − 0.57, p = 0.575, or gender, Χ2(1) = 2.37, p = 0.124, were found between the two groups. Single-agent COM animations from version A (e.g., an agent gives a signal to come closer) were presented as dyadic COM animations in version B by being matched with a gesture-congruent action of a second agent (e.g., the second agent comes closer). Similarly, single-agent IND animations from version A (e.g., an agent chops wood) were presented as dyadic in version B, matched with an incongruent action (e.g., the second agent comes closer). Example animations are presented in Fig. 4, and the exact scheme of the study can be found in Table 4.

Due to the COVID-19 pandemic, the experiment took place on an online platform. The task was divided into two blocks (single- and dyadic displays), each preceded by brief instructions (based on the instructions for the CID-5 task). Participants were asked to classify the animations in each block as COM or IND and choose from one of four potential descriptions, i.e., two COM and two IND alternatives for each vignette. The order of presentation was fixed: the block consisting of single-agent displays always preceded the dyadic displays. Starting with the more ambiguous (single-agent) stimuli prevented possible bias based on the cues given in the dyadic displays.

Statistical Analysis

In order to examine differences in SDT parameters between the tasks, two paired-sample t-tests were used to compare the criterion and sensitivity scores. Criterion scores were again compared to zero in order to examine response bias. Mixed ANOVA was used to measure the accuracy of interpretation of given actions with the type of action (COM vs. IND) and type of display (single vs dyadic) as within-subject factors and version of the task (A vs. B) as a between-subject factor.

Results

SDT Parameters

Mean hit and false-alarm rates for dyadic displays were 86% (SD = 8%) and 21% (SD = 14%) respectively, and 60% (SD = 17%) and 11% (SD = 5%) for single-agent displays (values were corrected as in previous studies).

Again, criterion differed from zero both for single-agent (M = 0.49; SD = 0.25) and dyadic displays (M = − 0.12; SD = 0.27): single-agent: t(36) = 11.99, p < 0.001; dyadic: t(36) = − 2.7, p < 0.05. Criterion was significantly lower for dyadic displays than for single-agent displays: t(36) = 11.09, p < 0.001, d = 1.82, 95% CI [0.50, 0.72].

In contrast, sensitivity was found to be significantly higher for dyadic displays (M = 2; SD = 0.53) as compared with single-agent displays (M = 1.51; SD = 0.52): t(36) = − 3.6, p < 0.001, d = 0.59, 95% CI [− 0.76, − 0.21]. The results are illustrated in Fig. 5.

Action Interpretation

Both main effects of the within-subject factors were significant. Again, dyadic animations (M = 0.86; SD = 0.11) were interpreted more accurately than single-agent animations (M = 0.66; SD = 0.11), F(1, 35) = 87.60, p < 0.001, η2 = 0.715, and so were IND (M = 0.89; SD = 0.11) compared with COM (M = 0.63; SD = 0.12), F(1, 35) = 123, p < 0.001, η2 = 0.778. The interaction effect between the type of display and type of action was also significant, F(1, 35) = 122.26, p < 0.001, η2 = 0.777. Bonferroni-corrected post-hoc comparisons showed that COM was interpreted more accurately in dyadic displays (M = 0.86; SD = 0.16) than in single-agent displays (M = 0.4; SD = 0.18; 95% CI [0.39, 0.54], p < 0.001), while IND was interpreted more accurately in single-agent displays (M = 0.93; SD = 0.11) than in dyadic displays (M = 0.86; SD = 0.16; 95% CI [0.01, 0.13], p = 0.015).

We additionally investigated the impact of the task version. While the analysis revealed a significant interaction between the type of action and version, F(1, 35) = 4.47, p = 0.042, η2 = 0.113, and a significant 3-way interaction, F(1, 35) = 5.22, p = 0.029, η2 = 0.130, the difference between scores in the two versions was found to be caused by one item. After its exclusion, the main effects and the interaction effect remained significant, while the effects of version became nonsignificant. The results are illustrated in Fig. 6.

Discussion

Study 2 provides further evidence for dyadic communicative bias, in line with the pilot study and Study 1, as participants had a more liberal response threshold when detecting COM actions for dyadic displays than single-agent displays. Furthermore, this study supports facilitated recognition, as participants showed a higher sensitivity to COM vs. IND displays when presented with dyadic vs. single-agent animations and, in line with Study 1, were more accurate when interpreting COM in dyadic (vs. single-agent) displays and IND in single-agent (vs. dyadic) displays.

General Discussion

The aim of the current study was to investigate the mechanisms associated with processing COM from single and dyadic displays. Although there is a large body of research focusing on the facilitated recognition hypothesis, which emphasizes the role of congruency between dyadic actions observed during dyadic communicative interactions for stimuli perception, we propose that the presentation of two agents itself is sufficient to elicit dyadic communicative bias, i.e., an increased likelihood of reporting COM when observing dyadic actions.

Analysis of the SDT parameters linked to dyadic communicative bias and facilitated recognition provides robust evidence for the former. A decreased response threshold for COM was observed for dyadic vs. single-agent animations across all three studies, regardless of their methodological differences. This consistency supports the notion that third-party encounters are salient social signals that may alter the observer’s perceptual thresholds. The opposite effect (i.e., an increased likelihood of perceiving the masked second agent after perceiving the communicative gesture of the first agent) has been previously shown using psychophysics methodology in both the general population (Manera, Del Giudice, et al., 2011) and clinical samples (Okruszek et al., 2019). Observations from the current study are complemented by mounting behavioral (Papeo et al., 2017) and neuroimaging (Abassi & Papeo, 2020) evidence of strong visual preference for dyadic cues in interaction-enabling configurations.

Analysis of the sensitivity parameters throughout the studies provides mixed support for the facilitated recognition hypothesis. Higher sensitivity for COM in dyadic displays was only observed in the well-matched, within-subject design (Study 2). It is plausible that, in Study 1, the sensitivity scores may have been biased by individual differences between the participants of the two groups. Moreover, the pilot study utilized high-resolution PLDs with additional markers representing hands and fingers, which may have facilitated the recognition of COM actions and resulted in higher sensitivity to single-agent vs. dyadic actions. Although the SDT analyses do not provide consistent support for the facilitated recognition hypothesis, the presentation of dyadic displays increased recognition of specific COM actions, but decreased recognition of IND actions, in Studies 1 and 2. This pattern of findings suggests that, in line with the facilitated recognition hypothesis, the coupling of meaningful actions between two agents increases the ability to accurately interpret them. Notably, the reverse pattern of findings was found for IND actions: in the case of uncoupled actions, the presence of the second agent actually increases the processing effort and decreases the ability to correctly grasp both actions, which is congruent with the chunk-storage hypothesis (Ding et al., 2017).

Taken together, our findings extend the literature by providing evidence that both communicative bias and facilitated recognition affect the processing of COM from dyadic vs. single-agent displays. Based on our results, these two mechanisms may be seen as complementary rather than competitive. In line with two-systems theory (Satpute & Lieberman, 2006), dyadic communicative bias and facilitated recognition may be linked with different stages of stimulus processing. Dyadic communicative bias may be more related to early orienting toward salient stimuli and preattentive processes (Papeo & Abassi, 2019), while more detailed analysis of specific information carried by the stimuli is needed to elicit facilitated recognition. In line with these proposals, a recent functional neuroimaging study reported increased coupling between the amygdala, which supports bottom-up orienting toward salient stimuli, and the medial prefrontal cortex, which supports top-down mentalizing abilities, during communicative vs. individual action processing from PLDs (Zillekens et al., 2019). Given the previously observed double dissociation in overt and covert mechanisms of communicative interaction processing in patients with schizophrenia (Okruszek et al., 2018) and high-functioning autism spectrum conditions (von der Lühe et al., 2016), investigation of these processes may provide vital clinical insights and extend previous conceptualizations of the processes underlying one of the most basic and crucial human capacities.

While the current article presents robust multi-study evidence for the communicative bias, some limitations of the current findings should be pointed out. First, there were significant discrepancies between the methodologies of the three presented studies. Even though different designs and paradigms were utilized, communicative bias was consistently observed across studies. However, similar consistency was lacking for sensitivity scores; thus, no similar conclusions can be drawn about the impact of the second agent’s response on participants’ ability to process communicative gestures. Second, the unequal number of IND and COM stimuli and differences in the timing of particular animations might have impacted the results in the pilot study and Study 1. However, Study 2 addressed those issues while still replicating the effect of dyadic presentation on response bias. Finally, the number of trials across paradigms is at the lower limit of what is suitable for SDT analysis. At the same time, a similar approach has previously been implemented successfully in CID studies (Manera et al., 2016). As the main finding of the study was repeatedly revealed across paradigms, participant groups, and study designs, the effect of communicative bias in dyadic displays can be considered a consistent and significant insight into the mechanism of action perception and interpretation.

References

Abassi, E., & Papeo, L. (2020). The representation of two-body shapes in the human visual cortex. The Journal of Neuroscience, 40(4), 852–863. https://doi.org/10.1523/JNEUROSCI.1378-19.2019

Augusti, E.-M., Melinder, A., & Gredebäck, G. (2010). Look who’s talking: Pre-verbal infants’ perception of face-to-face and back-to-back social interactions. Frontiers in Psychology, 1, 161. https://doi.org/10.3389/fpsyg.2010.00161

Bala, A., Okruszek, Ł., Piejka, A., Głębicka, A., Szewczyk, E., Bosak, K., … Marchel, A. (2018). Social perception in mesial temporal lobe epilepsy: Interpreting social information from moving shapes and biological motion. The Journal of Neuropsychiatry and Clinical Neurosciences, 30(3), 228–235. https://doi.org/10.1176/appi.neuropsych.17080153

Becchio, C., Manera, V., Sartori, L., Cavallo, A., & Castiello, U. (2012). Grasping intentions: From thought experiments to empirical evidence. Frontiers in Human Neuroscience, 6, 117. https://doi.org/10.3389/fnhum.2012.00117

Billino, J., Bremmer, F., & Gegenfurtner, K. R. (2008). Differential aging of motion processing mechanisms: Evidence against general perceptual decline. Vision Research, 48(10), 1254–1261. https://doi.org/10.1016/j.visres.2008.02.014

Brown, E. C., & Brüne, M. (2012). Evolution of social predictive brains? Frontiers in Psychology, 3, 414. https://doi.org/10.3389/fpsyg.2012.00414

Centelles, L., Assaiante, C., Etchegoyhen, K., Bouvard, M., & Schmitz, C. (2013). From action to interaction: Exploring the contribution of body motion cues to social understanding in typical development and in autism spectrum disorders. Journal of Autism and Developmental Disorders, 43(5), 1140–1150. https://doi.org/10.1007/s10803-012-1655-0

Ciaramidaro, A., Becchio, C., Colle, L., Bara, B. G., & Walter, H. (2014). Do you mean me? Communicative intentions recruit the mirror and the mentalizing system. Social Cognitive and Affective Neuroscience, 9(7), 909–916. https://doi.org/10.1093/scan/nst062

Ding, X., Gao, Z., & Shen, M. (2017). Two equals one: Two human actions during social interaction are grouped as one unit in working memory. Psychological Science, 28(9), 1311–1320. https://doi.org/10.1177/0956797617707318

Faul, F., Erdfelder, E., Lang, A.-G., & Buchner, A. (2007). G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behavior Research Methods, 39(2), 175–191. https://doi.org/10.3758/BF03193146

Fedorov, L. A., Chang, D.-S., Giese, M. A., Bülthoff, H. H., & de la Rosa, S. (2018). Adaptation aftereffects reveal representations for encoding of contingent social actions. Proceedings of the National Academy of Sciences of the United States of America, 115(29), 7515–7520. https://doi.org/10.1073/pnas.1801364115

Fletcher, P. C., & Frith, C. D. (2009). Perceiving is believing: A Bayesian approach to explaining the positive symptoms of schizophrenia. Nature Reviews Neuroscience, 10(1), 48–58. https://doi.org/10.1038/nrn2536

Hugenberg, K., & Wilson, J. P. (2013). Faces are central to social cognition. Oxford University Press. https://doi.org/10.1093/oxfordhb/9780199730018.013.0009

Jaywant, A., Wasserman, V., Kemppainen, M., Neargarder, S., & Cronin-Golomb, A. (2016). Perception of communicative and non-communicative motion-defined gestures in Parkinson’s disease. Journal of the International Neuropsychological Society, 22(5), 540–550. https://doi.org/10.1017/S1355617716000114

Johansson, G. (1973). Visual perception of biological motion and a model for its analysis. Perception & Psychophysics, 14(2), 201–211. https://doi.org/10.3758/BF03212378

Macmillan, N. A., & Creelman, C. D. (2004). Detection theory: A user’s guide (2nd ed.). Psychology Press. https://doi.org/10.4324/9781410611147

Manera, V., Becchio, C., Schouten, B., Bara, B. G., & Verfaillie, K. (2011a). Communicative interactions improve visual detection of biological motion. PLoS ONE, 6(1), e14594. https://doi.org/10.1371/journal.pone.0014594

Manera, V., Del Giudice, M., Bara, B. G., Verfaillie, K., & Becchio, C. (2011b). The second-agent effect: Communicative gestures increase the likelihood of perceiving a second agent. PLoS ONE, 6(7), e22650. https://doi.org/10.1371/journal.pone.0022650

Manera, V., Schouten, B., Becchio, C., Bara, B. G., & Verfaillie, K. (2010). Inferring intentions from biological motion: A stimulus set of point-light communicative interactions. Behavior Research Methods, 42(1), 168–178. https://doi.org/10.3758/BRM.42.1.168

Manera, V., Schouten, B., Verfaillie, K., & Becchio, C. (2013). Time will show: Real time predictions during interpersonal action perception. PLoS ONE, 8(1), e54949. https://doi.org/10.1371/journal.pone.0054949

Manera, V., von der Lühe, T., Schilbach, L., Verfaillie, K., & Becchio, C. (2016). Communicative interactions in point-light displays: Choosing among multiple response alternatives. Behavior Research Methods, 48(4), 1580–1590. https://doi.org/10.3758/s13428-015-0669-x

Okruszek, Ł., & Chrustowicz, M. (2020). Social perception and interaction database—A novel tool to study social cognitive processes with point-light displays. Frontiers in Psychiatry, 11, 123. https://doi.org/10.3389/fpsyt.2020.00123

Okruszek, Ł., Piejka, A., Wysokiński, A., Szczepocka, E., & Manera, V. (2018). Biological motion sensitivity, but not interpersonal predictive coding is impaired in schizophrenia. Journal of Abnormal Psychology, 127(3), 305. https://doi.org/10.1037/abn0000335

Okruszek, Ł., Piejka, A., Wysokiński, A., Szczepocka, E., & Manera, V. (2019). The second agent effect: Interpersonal predictive coding in people with schizophrenia. Social Neuroscience, 14(2), 208–213. https://doi.org/10.1080/17470919.2017.1415969

Papeo, L., & Abassi, E. (2019). Seeing social events: The visual specialization for dyadic human-human interactions. Journal of Experimental Psychology: Human Perception and Performance, 45(7), 877–888. https://doi.org/10.1037/xhp0000646

Papeo, L., Stein, T., & Soto-Faraco, S. (2017). The two-body inversion effect. Psychological Science, 28(3), 369–379. https://doi.org/10.1177/0956797616685769

Puglia, M. H., & Morris, J. P. (2017). Neural response to biological motion in healthy adults varies as a function of autistic-like traits. Frontiers in Neuroscience, 11, 404. https://doi.org/10.3389/fnins.2017.00404

Redcay, E., & Carlson, T. A. (2015). Rapid neural discrimination of communicative gestures. Social Cognitive and Affective Neuroscience, 10(4), 545–551. https://doi.org/10.1093/scan/nsu089

Roché, L., Hernandez, N., Blanc, R., Bonnet-Brilhault, F., Centelles, L., Schmitz, C., & Martineau, J. (2013). Discrimination between biological motion with and without social intention: A pilot study using visual scanning in healthy adults. International Journal of Psychophysiology, 88(1), 47–54. https://doi.org/10.1016/j.ijpsycho.2013.01.009

Satpute, A. B., & Lieberman, M. D. (2006). Integrating automatic and controlled processes into neurocognitive models of social cognition. Brain Research, 1079(1), 86–97. https://doi.org/10.1016/j.brainres.2006.01.005

Stanislaw, H., & Todorov, N. (1999). Calculation of signal detection theory measures. Behavior Research Methods, Instruments, & Computers, 31(1), 137–149. https://doi.org/10.3758/BF03207704

Trujillo, J. P., Simanova, I., Özyürek, A., & Bekkering, H. (2020). Seeing the unexpected: How brains read communicative intent through kinematics. Cerebral Cortex, 30(3), 1056–1067. https://doi.org/10.1093/cercor/bhz148

Vestner, T., Tipper, S. P., Hartley, T., Over, H., & Rueschemeyer, S.-A. (2019). Bound together: Social binding leads to faster processing, spatial distortion, and enhanced memory of interacting partners. Journal of Experimental Psychology: General, 148(7), 1251–1268.

von der Lühe, T., Manera, V., Barisic, I., Becchio, C., Vogeley, K., & Schilbach, L. (2016). Interpersonal predictive coding, not action perception, is impaired in autism. Philosophical Transactions of the Royal Society B: Biological Sciences, 371(1693), 20150373. https://doi.org/10.1098/rstb.2015.0373

Yovel, G., & O’Toole, A. J. (2016). Recognizing people in motion. Trends in Cognitive Sciences, 20(5), 383–395. https://doi.org/10.1016/j.tics.2016.02.005

Zaini, H., Fawcett, J. M., White, N. C., & Newman, A. J. (2013). Communicative and noncommunicative point-light actions featuring high-resolution representation of the hands and fingers. Behavior Research Methods, 45(2), 319–328. https://doi.org/10.3758/s13428-012-0273-2

Zillekens, I. C., Brandi, M.-L., Lahnakoski, J. M., Koul, A., Manera, V., Becchio, C., & Schilbach, L. (2019). Increased functional coupling of the left amygdala and medial prefrontal cortex during the perception of communicative point-light stimuli. Social Cognitive and Affective Neuroscience, 14(1), 97–107. https://doi.org/10.1093/scan/nsy105

Funding

This work was supported by the National Science Centre, Poland (Grant No: 2016/23/D/HS6/02947).

Ethics declarations

Conflict of interest

The authors declare no conflicts of interest.

Cite this article

Piejka, A., Piaskowska, L. & Okruszek, Ł. Two Means Together? Effects of Response Bias and Sensitivity on Communicative Action Detection. J Nonverbal Behav 46, 281–298 (2022). https://doi.org/10.1007/s10919-022-00398-2

DOI: https://doi.org/10.1007/s10919-022-00398-2