Abstract

Public authorities in many jurisdictions are concerned about the proliferation of illegal content and products on online platforms. One often discussed solution is to make the platform liable for third parties’ misconduct. In this paper, we first identify platform incentives to stop online misconduct in the absence of liability. Then, we provide an economic appraisal of platform liability that highlights the intended and unintended effects of a more stringent liability rule on several key variables such as prices, terms and conditions, business models, and investments. Specifically, we discuss the impact of the liability regime applying to online platforms on competition between them and the incentives of third parties relying on them. Finally, we analyze the potential costs and benefits of measures that have received much attention in recent policy discussions.

1 Introduction

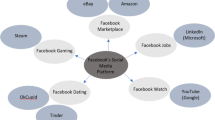

Digitization has played a significant role in our economies over the last decades. In 2019, among the ten biggest companies worldwide, seven were operating in digital markets (Apple, Microsoft, Amazon.com, Alphabet, Facebook, Alibaba, and Tencent Holdings), mostly as online intermediaries. In Europe, recent statistics indicate that 7 out of 10 consumers ordered goods online in 2019, sustaining a steady increase in the number of online transactions.Footnote 1 Such numbers are likely to increase in the coming years, following the COVID-19 outbreak, which further spurred the use of online services for basic needs. Similarly, the share of individuals using hosting platforms such as social networks reached more than 56% in the European Union in 2018.Footnote 2

As some of the firms’ and consumers’ fundamental activities have moved online (such as shopping, socialization, and content consumption), online misconduct has proliferated too, taking different forms. For example, on e-commerce platforms, some merchants may sell counterfeit goods that violate intellectual property (IP) rights and harm right holders. Given the market reach of some big platforms, it is not surprising that OECD (2018) referred to e-commerce platforms as “ideal storefronts for counterfeits”. On hosting, messaging, and video-sharing platforms, online misconduct occurs via the presence of illegal material, such as terrorist propaganda or illegally distributed copyrighted content. The presence of this material on social media platforms is further exacerbated by the large-scale production and diffusion of user-generated content that features hate speech and questionable material and might create societal externalities. The tragic events at Capitol Hill in January 2021, for instance, were mostly organized on the social media sites Gab and Parler, with no moderation of content.Footnote 3

Yet, preventing online misconduct can be challenging in practice and requires substantial resources, including a combination of state-of-the-art technology for machine detection and human moderators. Moreover, striking the right balance with fundamental freedoms such as the freedom of speech is a difficult task (see e.g., the First Amendment in the US). This study aims to investigate the incentives of online platforms to mitigate or stop online misconduct in a laissez-faire regime, and how changes in liability rules might alter these incentives and affect platforms’ key strategic variables. This is relevant as the current liability regimes, for example Sect. 230 of the Communications Decency Act in the US and the e-Commerce Directive (2000/31/EC) in the European Union, have been considered outdated, and several reform proposals have recently been put forward. In December 2020, the European Commission unveiled its proposal for the Digital Services Act, which continues to ensure conditional liability exemption to online intermediaries but introduces a differentiated system with some additional procedural obligations for “very large” platforms.Footnote 4 In the UK, the Government proposed the Online Safety Bill, which imposes a duty of care on digital services providers.Footnote 5 More stringent rules were proposed in the California Assembly in February 2021 for intermediaries selling defective products.Footnote 6

The current liability regimes, which were designed in 1996 in the U.S. and 2000 in the EU, were established to help “information society services” to grow and protect them from endless litigation that might discourage investment. However, in the last twenty years, the platform economy has changed substantially. Due to the global reach of many intermediation services, it has become difficult, if not impossible, for a victim to claim damages for harm that occurred online, which increases the risk that a large number of victims remain uncompensated for the damage they suffer. Similarly, harmful content can easily go viral thanks to groups, algorithms, and recommender systems. On top of these aspects, some platforms have become very large, have deep pockets, and are in the technological position to withhold support from users (or sellers) involved in online misconduct. Returning to the events at Capitol Hill in 2021, Google, Apple, and Amazon, in a coordinated action, withheld support from Parler in their app stores and hosting environment, respectively.

Against this background, this paper provides a novel analysis of the incentives of online platforms to engage in self-regulatory conduct and the economic effects of introducing more stringent platform liability. More specifically, we discuss some of the intended and unintended effects that changes in liability rules might generate and the social desirability of such changes. This is critical as most platforms follow a multi-sided business model, employ different pricing and non-pricing strategies, and generate value facilitating (or exploiting) interactions between different groups of agents. We restrict attention to the following types of online misconduct: (i) the presence of counterfeits on e-commerce websites and the related violation of intellectual property rights, (ii) copyright infringement on hosting platforms, (iii) hate speech and other unlawful materials (which may depend on specific national legislations) hosted on online intermediaries. To this end, we focus on e-commerce platforms, social networks, and hosting platforms and on the peculiarities of the business models that these platforms adopt.

Our analysis raises awareness of the importance of considering that platforms might react to a change in the liability regime by re-optimizing their strategies. Specifically, we suggest that a change in the liability system is likely to affect inter alia the pricing strategies of the platforms, the level of participation in their activities, their business models, their terms & conditions, and their investments. Therefore, understanding these effects is necessary to design appropriate liability rules and possible exemptions as well as to neutralize some unintended negative effects that may potentially arise.

Our paper is related to the law and economics literature on liability. This literature has mostly focused on the liability regime that applies to producers of a given good,Footnote 7 and has analyzed, among other things, the advantages and shortcomings of so-called “strict liability” and “negligence-based liability”.Footnote 8 Notable exceptions are recent works by Buiten et al. (2020), De Chiara et al. (2021), Hua and Spier (2021), and Jeon et al. (2021) that study the economic effects of holding platforms liable. The paper by Buiten et al. (2020) is the most related in spirit to ours. These authors examine liability rules for online hosting services from an economic and legal perspective and provide policy recommendations for a liability regime in the European Union. They identify several problems in the current liability framework for online intermediaries in the European Union. Above all, they consider that the absence of the so-called “Good Samaritan” protection in the EU e-Commerce Directive is highly problematic. The “Good Samaritan” clause grants liability exemption for any action voluntarily taken in good faith to restrict access to obscene, lewd, lascivious, filthy, excessively violent, harassing, or otherwise objectionable material. Such a clause is explicitly included in Sect. 230(c)(2) of the US Communications Decency Act. Yet, its absence in the current EU liability framework creates perverse incentives for platforms not to monitor online activity, thus undermining self-regulation. Buiten et al. (2020) also argue that responsibility should be shared among all the parties involved in the diffusion of illegal online material and that the liability rule applying to online platforms should be principles-based and supplemented by co-regulation or self-regulation. Our paper complements theirs by identifying novel channels through which the introduction of a more stringent liability might alter platform incentives in the short, medium, and long run.

The outline of the paper is as follows. In Sect. 2, we briefly discuss the relevant literature on liability in “traditional” markets, i.e., markets in which no intermediation takes place. In Sect. 3, we discuss the incentives of a platform to adopt self-regulation regardless of the imposition of a liability rule. In Sect. 4, we provide an economic analysis of liability for online platforms and investigate the impact of a more stringent liability regime on several key economic variables. In Sect. 5, we analyze the implications of specific aspects of liability rules. Among these, we discuss upgrades of the current liability system that are conditional on a series of procedural obligations, such as those that increase transparency on platform activities regarding the removal of unlawful content or the design and implementation of review schemes. Similarly, as a one-size-fits-all liability regime might consolidate the market dominance of large platforms, we discuss some additional clauses that could apply solely to “very large” platforms (e.g., those platforms with a large volume of sales or with a critical number of users). Finally, in Sect. 6, we summarize the main takeaways of our analysis.

2 Product liability in traditional markets

In this section, we present some of the key results in the law and economics literature on product liability in “traditional” markets, in which firms sell their products to consumers directly. From an economic perspective, a necessary condition for liability to be socially desirable is that firms’ private incentives to engage in care or precaution in order to reduce the potential harm caused by their product(s) are not aligned with the interests of society.

To start with, consider an idealized model, as proposed by Daughety and Reinganum (2013), where private and social incentives are fully aligned. The firm’s optimal level of care minimizes the “full marginal cost” it faces, i.e. the sum of its marginal cost of production (including the cost of care), the expected compensation the firm needs to pay to harmed consumers per unit consumed if the firm is held liable, its expected per-unit litigation costs, and the expected uncompensated loss to the consumer per unit consumed.Footnote 9 When deciding on its level of care, the firm internalizes all the costs borne by society and thus it behaves like a benevolent planner that maximizes social welfare. Imposing liability on the firm in these circumstances could reduce social welfare by generating costly litigation that would have been avoided in the absence of liability.

However, there are reasons for liability to be socially desirable, and these are associated with the presence of one or several market failures. Consider, for instance, a scenario with asymmetric information between the firm and consumers, with the latter not being able to observe the level of care chosen by the former. In that case, the demand the firm receives will be independent of the actual level of care it takes; rather, it will depend on the consumer’s conjectured care level. Absent liability, the firm is not rewarded for taking more care and, in turn, it will choose the minimal care level. A similar argument applies to the presence of a third party not participating in the transaction but who could potentially be harmed. In this case, imposing liability on the firm may be socially desirable, as the firm does not take account of the negative externality it exerts on the third party when choosing its level of care.

Another market failure that could also make it desirable to introduce some form of liability is market power. Hua and Spier (2020) illustrate it in a model wherein a monopolist sells a potentially dangerous product to a set of heterogeneous consumers who are fully informed about the level of product safety. In their model, absent liability, the private and social incentives to invest in product safety are not aligned. The reason is that the firm cares about the “marginal consumer”, while a social planner that maximizes social welfare cares about the “average consumer”. Thus, making the firm subject to some form of liability might help to mitigate the divergence between the private and social incentives for product safety by giving the firm incentives to invest more in product safety.Footnote 10

The discussion above suggests that, under a wide range of circumstances, imposing liability on firms (or making them subject to a more stringent liability regime) can be an effective way of aligning their private incentives for care with those of society. However, this benefit must be weighed against the potential costs associated with the existence and the level of liability. The most straightforward cost resulting from the imposition of liability on firms is an increase in litigation costs as harmed parties may fail to reach out-of-court settlements.

Other less straightforward costs might also be present. For example, the imposition of, or an increase in, liability leads to an increase in the firm’s full marginal cost because the firm may face litigation more often, which may increase its litigation costs. In turn, this affects not only the level of care chosen by the firm but also the firm’s level of activity. One might expect an increase in the full marginal cost to be passed on, at least partially, to consumers, which would lead to higher prices and lower output. This is a supply-driven effect. At the same time, more liability may allow a firm to credibly commit vis-à-vis its customers to attain a certain level of care. In turn, a higher level of care might lead to a demand-driven increase in activity that could mitigate, or even outweigh, the negative supply-driven effect.
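The interplay between the supply-driven and the demand-driven effect can be illustrated with a minimal numerical sketch. It rests on assumptions that are ours and do not come from the literature cited above: a single monopolist, a linear inverse demand P = a − bQ, a constant full marginal cost, and purely hypothetical parameter values. Liability is assumed to raise the full marginal cost by one unit and, through the credible commitment to care, to raise consumers’ willingness to pay (the intercept a) by two units.

```python
def monopoly_outcome(demand_intercept, full_marginal_cost, demand_slope=1.0):
    """Profit-maximizing (price, quantity) for inverse demand P = a - b*Q.

    Hypothetical textbook setting: maximizing (a - b*Q - c) * Q over Q
    gives Q = (a - c) / (2b) and P = a - b*Q.
    """
    quantity = (demand_intercept - full_marginal_cost) / (2.0 * demand_slope)
    price = demand_intercept - demand_slope * quantity
    return price, quantity


# Illustrative numbers only; none of them is taken from the paper.
baseline = monopoly_outcome(demand_intercept=10.0, full_marginal_cost=2.0)

# Supply-driven effect alone: liability raises the full marginal cost.
supply_only = monopoly_outcome(demand_intercept=10.0, full_marginal_cost=3.0)

# Supply-driven and demand-driven effects together: the cost increase is
# accompanied by a higher willingness to pay for the safer product.
with_demand_shift = monopoly_outcome(demand_intercept=12.0, full_marginal_cost=3.0)

print("baseline (price, quantity):         ", baseline)
print("cost increase only:                 ", supply_only)
print("cost increase and demand expansion: ", with_demand_shift)
```

In this parameterization, half of the cost increase is passed on to the price and output falls when only the supply-driven effect is at work, whereas output rises above its baseline level once the assumed demand expansion is added. Which effect dominates in practice depends on magnitudes that the sketch simply posits.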

The socially optimal liability rule that firms should be subject to depends on the magnitude of the costs and benefits of such liability for society. Several rules exist in practice and are discussed here. To provide a stylized representation of the different liability rules, consider a scenario in which a firm sells a product that may cause an accident to a consumer, and suppose that the victim sues the firm and asks for compensation. As already discussed, in a no-liability regime, the firm will not be held liable regardless of its care level and, hence, it will minimize its care effort, e.g., investments in product safety. “Strict liability” represents the opposite extreme. Under this rule, the firm/injurer would have to bear the full cost of compensating the consumer regardless of the level of care undertaken. As consumers know that they would be compensated in case an accident occurs, they will not have any (monetary) incentive to take precautionary actions themselves. By contrast, it is in the interest of the firm to avoid compensating the consumers and paying the related litigation costs by minimizing ex ante the risks of accidents. Naturally, this suggests that strict liability ensures a high level of care and precaution by firms, but this does not suffice to state that strict liability is socially desirable as its benefits for society should be weighed against its costs.Footnote 11

Different shades of liability exist between the two regimes we just discussed. A relevant one is the so-called “negligence-based rule”. Under this regime, a firm causing an accident is considered liable only if it failed to meet its “duty of care”. From an economic perspective, the duty of care corresponds to the legal obligations or precautionary actions (level of care) that would maximize social welfare. However, a negligence rule raises practical issues that do not arise under the two extreme rules of no liability and strict liability. First, it presumes the definition of a legal standard of care (reasonable care), which can sometimes be challenging to identify on a case-by-case basis. Second, the injured party must prove that the defendant failed to take a reasonable level of care, thus causing harm to the injured party.
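The firm’s choice of care under the three rules can be compared in a stylized numerical sketch. Everything in it is an assumption made for illustration: care is a number between 0 and 1, the cost of care is linear, the accident probability falls quadratically in care, compensation equals the full harm, the legal standard of care is set at the socially optimal level, and litigation costs and consumer precaution are ignored.

```python
def care_cost(care, unit_cost=10.0):
    """Cost of exerting a given level of care (hypothetical linear form)."""
    return unit_cost * care


def expected_harm(care, base_probability=0.2, harm=100.0):
    """Expected harm: accident probability times harm (hypothetical form)."""
    return base_probability * (1.0 - care) ** 2 * harm


def social_cost(care):
    """Cost borne by society: cost of care plus expected harm."""
    return care_cost(care) + expected_harm(care)


def firm_cost(care, rule, due_care):
    """Cost borne by the firm under a given liability rule."""
    if rule == "no_liability":
        return care_cost(care)
    if rule == "strict":
        return care_cost(care) + expected_harm(care)
    if rule == "negligence":
        # The firm compensates victims only if it falls short of due care.
        liable = care < due_care
        return care_cost(care) + (expected_harm(care) if liable else 0.0)
    raise ValueError(f"unknown rule: {rule}")


grid = [i / 100 for i in range(101)]  # candidate care levels in [0, 1]

# Assumption: the court sets due care at the socially optimal level.
due_care = min(grid, key=social_cost)

chosen_care = {
    rule: min(grid, key=lambda care, r=rule: firm_cost(care, r, due_care))
    for rule in ("no_liability", "strict", "negligence")
}

print("socially optimal care:", due_care)
for rule, care in chosen_care.items():
    print(f"care chosen under {rule}: {care}")
```

Under these assumptions the firm takes no care absent liability and takes the socially optimal level of care under both strict liability and negligence. The two rules differ in who bears the residual harm and, as noted above, in the informational burden on courts and victims, which the sketch leaves out.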

The above discussion considered liability rules that apply to direct wrongdoers. However, it might also be optimal to impose liability on indirect wrongdoers, as illustrated by Hay and Spier (2005). These authors study a perfectly competitive market supplying a product that can ultimately cause harm, in which both the manufacturer and consumers can exert costly effort to prevent harm. When consumers have deep pockets (i.e., are fully solvent), imposing liability on consumers is efficient as they can pay for any damage they generate as well as take the optimal level of care. On the contrary, when consumers do not have deep pockets, they bear less than total responsibility for any harm they cause. It is therefore efficient to maintain primary liability on consumers and complement it with residual liability on the manufacturer. The prospect of paying for consumers’ risky behavior creates incentives for the manufacturer to invest in safer products.Footnote 12

This example suggests that liability can also be introduced to induce some agents to take actions that stop or mitigate the harmful conduct of other players. The setting we have discussed is quite traditional, rooted around the bilateral relationship between buyers and sellers or manufacturers. In markets featuring the presence of online intermediaries, a prominent role can be played by the platform’s owner. This is especially true if direct enforcement of primary liability becomes too expensive (e.g., millions of buyers and sellers in a marketplace). One possible way to deal with this problem is to impose liability on the party that can help to prevent an accident or, in the case of illegal activities, misconduct. The law and economics literature has referred generally to these cases as secondary, indirect, or collateral liability. Most relevant for our analysis is the notion of “gatekeeper liability” (Kraakman, 1986). This is a special case of liability imposed on intermediaries whose characteristics and role enable them to disrupt misconduct by “withholding support” and prevent any infringement. Undoubtedly, platforms are nowadays key players that have developed tools and capabilities to monitor, identify, and to some extent, mitigate illegal activities and misconduct in their ecosystem.Footnote 13 Moreover, introducing liability for intermediaries might lead to a considerable reduction in enforcement costs. Landes and Lichtman (2003) discussed whether a manufacturer producing a decoder box should be held liable for third parties’ infringements of copyright. 
According to the authors, holding the manufacturer liable would result in substantial enforcement and administrative savings for the harmed party, which would therefore directly sue the manufacturer rather than multiple infringers.Footnote 14 Fagan (2020) showed instead that lawmakers’ decision about the introduction of a liability regime for platforms relative to a safe harbor provision depends on the relative size of different costs, such as the cost of enacting and enforcing a new law versus the cost of maintaining existing law.Footnote 15

3 Platform incentives and self-regulation

Before discussing the platform’s incentives to engage in forms of self-regulation, even in the absence of liability (“laissez-faire” regime), it is convenient to introduce some terminology for the key elements that distinguish online intermediaries. A key feature of most online intermediaries is the presence of within-group and/or cross-group network externalities. Within-group network externalities are generated from the activity of other peers belonging to the same side of the market. For example, in social media platforms, users typically benefit from other users’ participation in the platform environment (e.g., social interactions, user-generated content, data-enabled learning). Cross-group network externalities arise when agents gain or lose from the interactions with agents belonging to a different side of the market, thus rendering the market multi-sided (Armstrong, 2006; Caillaud & Jullien, 2003; Rochet & Tirole, 2003). By acting as managers of the network externalities, platforms generate value which is then captured via different monetization strategies. Belleflamme and Peitz (2021) identify two major strategies, which include charging the side(s) of the market that benefit from network externalities or offering a bundle that includes a source of negative externalities (e.g., advertising, or data collection).

When a monetary price is levied, this price can take different forms. For example, a platform can use membership fees when charging users (e.g., business or end users) for their participation in the platform ecosystem. This happens regardless of the level of the activity carried out on the platform. Alternatively, a platform can levy transaction fees, which can be fixed (i.e., fixed amount per transaction) or ad valorem (i.e., a proportion of the value of the transaction). Most marketplaces seem to use these pricing strategies for their business users or a combination of both.Footnote 16
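The fee structures just described can be made concrete with a small sketch. The class name, field names, and all numbers below are hypothetical and chosen only for illustration; they do not describe the fee schedule of any actual marketplace.

```python
from dataclasses import dataclass


@dataclass
class FeeSchedule:
    """Hypothetical fee schedule a platform applies to one business user."""
    membership_fee: float = 0.0             # paid regardless of activity
    fixed_fee_per_transaction: float = 0.0  # fixed amount per transaction
    ad_valorem_rate: float = 0.0            # share of each transaction's value


def platform_revenue_from_seller(fees, transaction_values):
    """Revenue the platform collects from one seller over a period."""
    number_of_transactions = len(transaction_values)
    return (
        fees.membership_fee
        + fees.fixed_fee_per_transaction * number_of_transactions
        + fees.ad_valorem_rate * sum(transaction_values)
    )


# Illustrative sales of one seller (values of three transactions).
sales = [20.0, 50.0, 30.0]

membership_only = FeeSchedule(membership_fee=40.0)
fixed_per_sale = FeeSchedule(fixed_fee_per_transaction=1.5)
ad_valorem = FeeSchedule(ad_valorem_rate=0.25)
combined = FeeSchedule(membership_fee=10.0, ad_valorem_rate=0.25)

for name, fees in [
    ("membership only", membership_only),
    ("fixed per transaction", fixed_per_sale),
    ("ad valorem", ad_valorem),
    ("membership plus ad valorem", combined),
]:
    print(name, platform_revenue_from_seller(fees, sales))
```

Only the membership fee is independent of the seller’s activity. Revenue from the fixed fee scales with the number of transactions, and revenue from the ad valorem fee scales with their value.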

Social networks and hosting platforms instead offer zero-priced services to end users and monetize user attention via advertising. The latter can be perceived as a source of negative externality for the users, as advertising can entail a nuisance or a privacy cost. There are, however, cases in which platforms offer subscription services that are ad-free and less privacy-invasive. In these cases, the business model of the platform follows a “freemium” strategy (e.g., YouTube Premium), letting users decide whether to pay a monetary price for an ad-free service or use the service for free in exchange for data and attention.

3.1 Platform incentives

Platforms can take some proactive measures to stop online misconduct even in the absence of liability, for example by screening sellers’ or users’ activity. The literature has considered screening as a platform governance tool that may influence seller competition (Casner, 2020), affect quality provision (Teh, 2021), or protect advertisers from brand safety issues (Madio & Quinn, 2021). In what follows, we restrict attention to the platform’s private incentives, abstracting from any reputational damage that the platform may bear because of online misconduct. Indeed, if the platform does not take into account any reputational loss, its incentives will fundamentally depend on whether any participant in the platform environment is harmed by the online misconduct. As the type of harm differs significantly across platforms, we discuss various examples.

Consider an e-commerce platform allowing interactions between sellers and buyers. Suppose the platform adopts an ‘open business model’ for which any seller can join the platform and offer its product(s) as long as it complies with the terms & conditions. Suppose further that in each product category there are original products and counterfeits, the latter being unsafe or manifestly different from the prior of the consumers. In these circumstances, if the platform expects to have repeated interactions with the consumers, it might have ex-ante incentives to police its environment and therefore engage in stricter screening policies. However, these incentives might not arise in the presence of one-off purchases.

Suppose now that the types of counterfeits available on the platform are benign, that is, they are safe for the consumers but still infringe IP rights. An example can be the (illicit) imitation of a branded pair of shoes. Suppose that consumers can distinguish between high-quality branded products and low-quality imitations and that some consumers have a taste for the latter, which can be attractive because of their low price. In this case, such transactions do not lower, but rather increase, buyer surplus, and the only harmed party is the brand owner, who may suffer from missed sales. If the brand owner participates in the marketplace and ensures a large volume of sales, the platform might have an incentive to protect these sales and remove the illicit versions, regardless of the liability regime. On the contrary, if the platform can expand its market reach by admitting some low-quality products, even though they infringe IP rights, then it might not have incentives to delist the infringers (Jeon et al., 2021). Note that the above discussion abstracts from the presence of screening and filtering costs, which can be quite substantial and limit self-regulatory conduct.

Consider now an ad-funded hosting platform on which viewers can enjoy user-uploaded content. Even absent any liability, a platform might prefer to engage in moderation of harmful content if both viewers and advertisers prefer content to be moderated. This is the case, for example, of hosting platforms that are tailored to family audiences, which attract a rather sensitive customer base and a group of advertisers that demand strict terms and conditions to place their ads. Indeed, a major concern for advertisers is their “brand safety”, which can be defined as the harm caused to brands when their ads are displayed in unsuitable or harmful environments.Footnote 17

Finally, consider a social media platform. One of the most prominent forms of illicit conduct online is hate speech. It concerns user-generated content produced by members of the hosting community that can harm another person or group based on race, religion, sex, or sexual orientation.Footnote 18 On social media platforms, hate speech can be quite common. As a tiny minority of users produces hate speech, the platform might have an incentive to monitor and exclude these users if moderation costs are not too high and hate speech has a negative effect on user participation.

Another reason why a platform might decide to police its ecosystem is to control seller competition and align sellers’ interests with its own. It is often the case that platforms set quality standards and safety requirements to control or limit the entry of third parties and manipulate on-platform competition.Footnote 19 For example, Apple uses its Safety Review to screen out dubious mobile apps. In this sense, screening and platform curation represent another tool for the platform to design and regulate its ecosystem alongside commission rates. Yet, as discussed by Teh (2021) in his work on platform governance, these quality controls might be too restrictive or too permissive compared to those that would be socially desirable.

3.2 Asymmetric information, reputation, and rating systems

In Sect. 2, we discussed how product liability in “traditional” markets can be desirable to address asymmetric information. In a digitized world, platforms have powerful incentives and tools to mitigate asymmetric information, which might make liability less useful. Platform business models succeed when they stimulate the participation of different agents, and this participation concerns not only the number of sellers and buyers but also their quality. This allows platforms to build a somewhat collective reputation. If many sellers joining a platform start offering low-quality or fake products and buyers do not enjoy these products, the reputation of the platform might be adversely impacted. As a result, the strength of the network externalities would be reduced (Belleflamme & Peitz, 2018), and buyers would prefer to purchase on different platforms, offline, or directly from the retailers’ websites. Given this threat, platforms have incentives to act as regulators of their own space to build and maintain their reputation. For example, favorable return policies can help to verify whether buyers’ prior expectations are consistent with the post-purchase realization and mitigate problems of asymmetric information.Footnote 20

Online intermediation can help to mitigate asymmetric information by providing review and rating schemes. Several studies have demonstrated the welfare gains associated with online reviews (e.g., Reimers & Waldfogel, 2021), and their economic impact has been extensively discussed in the economic literature (for a survey, see e.g., Belleflamme & Peitz, 2018, 2020). Reviews and ratings are user-generated information that is available pre-purchase to potential new buyers and helps to shape their expectations regarding quality, safety, and other characteristics. The presence of reviews is also an important proxy of a seller’s activity in the marketplace, and this can represent relevant information for the buyer.Footnote 21 If reviews are effective in mitigating the problem of unobservability of product characteristics and let consumers identify unsafe products, then the introduction of liability might not be necessary to mitigate asymmetric information.Footnote 22 Yet, not all buyers might pay attention to reviews. In addition, reviews might suffer from several issues related to asymmetric herding behavior, idiosyncratic tastes, strategically distorted ratings (Belleflamme & Peitz, 2018), or simply being fake.Footnote 23 In these cases, the mere presence of a review system might not be enough to address the asymmetric information problem.

3.3 Private vs. social incentives

As discussed previously, a platform might have incentives to intervene in its ecosystem to preserve its reputation, to anticipate regulatory changes, or to preserve the strength and the quality of the network externalities it creates. However, its incentives are likely to be different from those of the social planner, as a platform cares only about the (potential) users it can reach, while the social planner also cares about the externalities exerted on players not active on the platform. In other words, the negative externalities for parties that are extraneous to the platform are not internalized by the platform when deciding its terms and conditions or screening policy, which implies that the platform’s incentives to attain a certain level of care might be lower than what would be socially desirable. Examples can be right holders that do not participate in the platform environment and observe their products being imitated illegally online, or individuals that do not use the platform but are harmed by hate speech produced by its users. Whether most of the social harm from illegal conduct on a platform is borne by its users or by economic agents outside its ecosystem depends on several factors, including the type of conduct and the size of the platform. For instance, the impact of hate speech on non-users of a niche social media platform populated by users with extremist political views would account for most of the social harm associated with this misconduct. In contrast, the infringement of brand owners’ trademarks on a very large e-commerce platform hosting the vast majority of brand owners and serving a large share of potential consumers would primarily affect the platform’s users.
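The wedge between private and social incentives can be illustrated with a stylized sketch. It relies on assumptions of ours that are not part of the analysis above: screening effort is a number between 0 and 1, its cost is linear, the probability of misconduct falls quadratically in effort, and the platform fully internalizes the harm borne by its own users (for instance through lower participation) while ignoring the harm borne by outsiders. All parameter values are hypothetical.

```python
def screening_cost(effort, unit_cost=10.0):
    """Cost of screening effort (hypothetical linear form)."""
    return unit_cost * effort


def misconduct_probability(effort, base_probability=0.2):
    """Probability that misconduct occurs (hypothetical quadratic form)."""
    return base_probability * (1.0 - effort) ** 2


def optimal_effort(internalized_harm, grid_size=100):
    """Effort minimizing screening cost plus internalized expected harm."""
    grid = [i / grid_size for i in range(grid_size + 1)]
    return min(
        grid,
        key=lambda e: screening_cost(e) + misconduct_probability(e) * internalized_harm,
    )


def private_and_social_effort(harm_to_users, harm_to_outsiders):
    """Return (platform effort, planner effort) for a given split of harm."""
    platform = optimal_effort(harm_to_users)                      # ignores outsiders
    planner = optimal_effort(harm_to_users + harm_to_outsiders)   # counts everyone
    return platform, planner


# Harm split evenly between users and outsiders (illustrative numbers).
mixed = private_and_social_effort(harm_to_users=50.0, harm_to_outsiders=50.0)

# Harm falls entirely on the platform's own users.
users_only = private_and_social_effort(harm_to_users=100.0, harm_to_outsiders=0.0)

print("harm split evenly (platform, planner):", mixed)
print("harm on users only (platform, planner):", users_only)
```

When part of the harm falls on outsiders, the platform in this sketch chooses less effort than the planner. When all harm falls on its own users, the two coincide, but only because full internalization of user harm is assumed, which need not hold in the presence of market power or lock-in.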

Let us return to the example of an e-commerce website in which low- and high-quality sellers co-exist. Suppose that low-quality products can infringe IP rights but do not cause harm to final buyers, who are willing to buy the low-quality version of a branded product. Suppose also that the platform earns most of its revenues from the sales of low-quality products, as these generate substantial market expansion. In these circumstances, removing these listings and expelling dubious sellers and IP-infringers might be too costly for the platform’s owner and would reduce the platform’s profit. Here, liability rules can make a difference: a more stringent liability rule may be required to align the platform owner’s private incentives with what is socially desirable.

Furthermore, as discussed in Buiten et al. (2020), sellers might not have the incentive to exploit buyers in a platform environment if the risk of being expelled is high. Indeed, an e-commerce platform may have incentives to police the sellers even in the absence of liability because of the fear that buyers leave it and join competing marketplaces. However, buyers’ threat to move to competing platforms may not be credible in the presence of market power or lock-in effects. In this case, if network externalities are strong, an e-commerce platform acts as a unique gatekeeper vis-à-vis sellers. On the one hand, this puts the platform in a much stronger position vis-à-vis sellers willing to exploit consumers, given the sellers’ lack of alternatives. On the other hand, having achieved a critical size, a platform might not have a strong incentive to police its marketplace or to invest further resources in monitoring technologies and ex-ante verification of sellers.

Consider now a social media platform, wherein identifying victims can be challenging. As discussed already, the platform’s owner might have incentives to protect its users. Yet, such an incentive can be much lower if the victims (and their peers) find it hard to leave the platform. This can be the case of dominant platforms that attract most of the users. The threat to move to a (smaller) rival platform might be particularly low in this case. Even in the presence of competing platforms of similar size, this threat might not be credible if users single-home—i.e., patronize a single platform—and there are high switching costs.Footnote 24 This is because abandoning the platform for another one would require coordination of their network, which is sometimes difficult in practice. Indeed, users might be locked in, and the incentive of the platforms would be lowered.

4 Economic effects of platform liability

We can now discuss the economic effects of a change in the liability regime that makes it less favorable to platforms. One way to think about a more stringent liability regime is that it becomes more costly for the platform to benefit from liability exemption, e.g., because this requires abiding by additional screening obligations. A more stringent liability regime is likely to have two types of effects: first, an intended effect capturing the impact on the platform’s screening efforts; second, unintended effects capturing the impact on other decision variables that will be adjusted by platforms as a response to the change in the liability regime applying to them. Both types of effects matter when assessing the impact of a more stringent liability regime on social welfare.

In this section, we assume the following sequence of actions: lawmakers move first, followed by platforms, and then users (e.g., end-users and business users).

4.1 Pricing strategies and level of activity

First, we examine the impact of an increase in the liability applying to platforms on their pricing strategies and the level of activity in the different sides of the market. Our discussion of liability in traditional markets suggests that more stringent liability may lead to higher prices and a lower level of activity. This insight carries over to platform markets in certain circumstances. To illustrate this, we divide our discussion in two parts: the first part focuses on marketplace platforms, while the second part focuses on ad-funded platforms such as social media and hosting platforms.

Consider first a marketplace platform. In this case, buyers benefit from finding more sellers on the platform (which increases variety) and sellers benefit from reaching more buyers on the platform as this increases the probability of finalized transactions. Suppose that the platform maximizes profits by charging a membership fee to sellers. If an increase in liability entails an increase in the marginal cost of scrutinizing sellers (e.g., acquiring documentation, verifying misuse of platform features such as sales rank, ratings, reviews, or general precautionary activities), the platform will react by partly passing this cost onto sellers, thereby charging a higher membership fee.Footnote 25 As a result, some sellers would find participation in the marketplace unprofitable and, hence, fewer sellers will join the platform. Given the multi-sided nature of the market, fewer buyers will join the marketplace as well, which amplifies the loss for the platform and for welfare.Footnote 26,Footnote 27
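The pass-through mechanism described above can be illustrated with a minimal numerical sketch. The linear participation equations, the parameter names (`a_s`, `a_b`, `theta_s`, `theta_b`), and the default values are hypothetical assumptions introduced here for illustration only; they are not taken from the paper or from the literature it cites.

```python
def marketplace_outcome(screening_cost, a_s=1.0, a_b=1.0, theta_s=0.5, theta_b=0.5):
    """Profit-maximizing seller fee and participation in a stylized linear model.

    Illustrative assumptions (hypothetical, not from the paper):
      sellers = a_s + theta_s * buyers - fee   (sellers pay a membership fee)
      buyers  = a_b + theta_b * sellers        (buyers join for free)
      profit  = (fee - screening_cost) * sellers
    """
    # Cross-side feedback must be weak enough for participation to be well defined.
    feedback = 1.0 - theta_s * theta_b
    if feedback <= 0:
        raise ValueError("theta_s * theta_b must be below 1")

    # Substituting the buyer equation into the seller equation gives
    # sellers = (base - fee) / feedback.
    base = a_s + theta_s * a_b

    # First-order condition of (fee - cost) * (base - fee) / feedback.
    fee = (base + screening_cost) / 2.0
    sellers = (base - fee) / feedback
    buyers = a_b + theta_b * sellers
    return fee, sellers, buyers


if __name__ == "__main__":
    for cost in (0.2, 0.4):
        fee, sellers, buyers = marketplace_outcome(cost)
        print(f"cost={cost:.1f} fee={fee:.3f} sellers={sellers:.3f} buyers={buyers:.3f}")
```

Under these assumptions, half of any increase in the per-seller screening cost is passed on through the fee, and participation falls on both sides because fewer sellers make the platform less attractive to buyers. Other demand specifications would yield different pass-through rates.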

However, more stringent liability does not necessarily lead to a reduction in the level of activity. To see why, consider now a scenario in which a platform sets a membership fee to both buyers and sellers. An increase in liability that makes it more costly for the platform to verify sellers’ activity can again lead to an increase in the membership fee charged on this side of the market. However, the platform can now grant a discount to consumers to boost their participation and limit the exit of sellers from the market. In turn, depending on the relative magnitude of the network externalities in the two sides of the market, the participation level of buyers might even increase.

This stylized discussion shows that the impact on the level of activity depends on the pricing instruments platforms use. A change in the liability regime may also affect the activity on a given platform through a change in the composition of platform users. To illustrate this, consider once again an e-commerce platform and assume that, for a given good, there are two types of sellers: those that offer genuine items and those that offer counterfeit items. Suppose that there is a more stringent platform liability. Ceteris paribus, this should increase its incentives to verify the nature of items, which should reduce the number of sellers offering counterfeit items on the platform. This direct increase in the platform’s incentive can be amplified or mitigated by an indirect effect stemming from whether buyers on the other side of the platform tend to value positively or negatively a decrease in sellers of counterfeit goods. In the former scenario, an increase in platform liability would increase the activity on the buyer side, which may, in turn, lead to an increase in legitimate activity on the seller side. However, in the latter case, the activity on the buyer side may decrease if liability becomes more stringent for the platform.

Finally, note that whether the change in the level of activity induced by a change in the liability regime is socially desirable or not depends on the type of considered activity and the extent of the harm resulting from illegal activities. To illustrate this, consider social media platforms, wherein users typically benefit from the production of user-generated content and the presence of peers. However, in some circumstances, user-generated content can be harmful. This is the case of hate speech, illegal videos, or user-uploaded content violating intellectual property rights, which can result in negative spillovers for individuals, groups of users, and—in some cases—advertisers. In this case, the effect of a change in the level of activity (resulting from a change in the liability regime) on social welfare depends on the extent of illegal content on that platform.

Platforms that rely on advertising revenues, such as social media platforms and hosting platforms, might be exposed to different effects. An increase in liability costs related to hate speech, for instance, would decrease the net expected value of an additional user on the platform. If advertising is perceived as a nuisance by consumers, then a decrease in consumer value will give the platform incentives to increase the level of advertising to which consumers are exposed, e.g., by offering more advertising slots per consumer or decreasing per-consumer advertising charges (everything else equal). This is again an indirect channel through which consumers could be negatively affected by a more stringent liability regime for platforms. This is, however, not the only effect at work as consumers may value the decrease in illegal content positively. In the latter case, the net effect on their utility may be positive or negative depending on whether the gains from content moderation more or less than compensate for the higher disutility that the presence of more ads entails.

Consider now the impact of a more stringent liability regime for platforms on advertisers. As discussed before, this may give the platform incentives to decrease its per-consumer advertising charges because it has fewer incentives to “subsidize” consumers. Note, however, that if consumers benefit from the decrease in illegal content, their demand for the service offered by the platform may increase, which can lead to an increase in the (total) advertising price paid by each advertiser. Since this increase in the price would be driven by the rise in the number of consumers on the platform, advertisers could still be better off. This is especially the case if advertisers care about the environment in which they are placed and therefore a more stringent liability regime makes them benefit from a lower risk of being associated with unsafe material.

4.2 Business models

In the medium and long run, platforms might strategically respond to a change in the liability regime by modifying their business model. We distinguish the possible effects in the two main business models we have focused on: (i) marketplaces, and (ii) ad-funded social media and hosting platforms.

Consider first a platform running a marketplace à la Amazon, in which buyers and sellers have transactions via a platform. In this case, the presence of a hybrid business model, in which the platform’s owner also competes with the third parties it hosts in its ecosystem, matters for identifying the right trade-offs that an intermediary faces. According to Hagiu and Wright (2015),

a fundamental distinction between marketplaces and re-sellers is the allocation of control rights between independent suppliers and the intermediary over noncontractible decisions (prices, advertising, customer service, responsibility for order fulfillment, etc.) pertaining to the products being sold.

Overall, this distinction dates back to the different forms of organization and the role played by transaction costs (Williamson, 1979). Importantly for our purpose, a pivotal aspect is the allocation of liability and its costs, which may affect a platform owner’s choice of business model.

By intermediating transactions only, a platform has limited control over the product being sold, and monitoring and reporting tools can only mitigate the risk for users (in the case of unsafe products) and right-holders (in the case of IP infringements). Indeed, holding other things equal, an increase in liability leads to an increase in liability costs for the platform either in the form of precaution costs (ex-ante verification, ex-post monitoring, takedown) or in the form of litigation costs. Differently, by acting as a re-seller, a platform’s owner can directly inspect and select the goods, verify their authenticity, acquire related certifications, and evaluate risks. It follows that, in response to a change in the liability regime, a platform’s owner might shift its optimal intermediation mode towards a reselling activity in order to lower its liability costs.

In a more nuanced view, one way to shift a marketplace towards a vertically integrated mode is to increase the commission fees paid by independent sellers (who would then bear part of the increase in liability costs), which would lead to fewer sellers on the platform and would adversely affect both the variety and prices of goods. Indeed, all sellers—including the platform’s reseller arm—will have an incentive to increase their prices because of the reduction in the number of competitors. This is further amplified by the fact that independent sellers will pass on at least part of the increase in their commissions to final consumers. Alternatively, the platform may engage in “self-preferencing” by making prominent, or generally favoring, its own products to the detriment of other third-party sellers. Whether such a practice might hurt consumers as well depends on the type and degree of competition occurring in the market and the cost of improving quality (De Cornière and Taylor 2019). Recent studies have already provided empirical evidence of the incentives of platform owners to enter the product space of their partners (for a review, see Zhu, 2019) and these strategies have been regarded with concern and suspicion by policymakers and third parties (e.g., vendors). For example, Amazon already offers branded products on its marketplace and, in recent years, has consolidated its activity entering the product space of high-demand and popular sellers (Jiang et al., 2011; Zhu & Liu, 2018). Increased liability might further strengthen this pattern and generate an entry into the product space of high-demand products for which the platform’s owner is not able to acquire enough documentation and information. Recent theoretical papers offer contrasting insights about the social desirability of platform entry (Anderson & Bedre-Defolie, 2021; Etro, 2021; Hagiu et al., 2021; Tremblay, 2020). 
In a very extreme case of strict liability, intermediation can pose too much risk and be extremely costly for the platform’s owner, thereby leading small platforms to disappear and some others to consolidate their presence in a vertically integrated fashion.

The above discussion illustrates well the more general idea that an increase in platform liability may give marketplace platforms incentives to increase their level of vertical integration. This is not only true regarding the owner of an e-commerce platform’s decision to act as a reseller (or expand the activity of its reseller arm) but also holds for platforms’ incentives to offer complementary products (which can be interpreted as another dimension of “vertical” integration).

To shed some further light, let us consider the example of Amazon and focus on one of the complementary products that Amazon offers to its sellers, namely the Seller-Fulfilled Prime program, which allows sellers to fulfill orders with the same benefits as Amazon Prime. In this sense, the platform can be considered as an active service provider as compared to a passive marketplace that ensures mere intermediation.Footnote 28 Consider a liability regime in which a passive e-commerce platform that does not offer any complementary services is exempted from liability while an active platform that provides complementary services is liable. Consider now a change from this liability regime to one that eliminates this distinction, i.e., a platform can be held liable even if it is passive. Such a policy change would clearly provide the platform incentives to offer complementary services, which may or may not be beneficial to consumers. On the positive side, the fact that the platform offers complementary services mitigates the well-known double marginalization problem: unlike an independent provider of the complementary service, the platform internalizes the positive externality exerted by the complementary service on its primary activity and, therefore, tends to offer a lower price (and/or a higher quality) for that service. On the negative side, there might be an effect on the intensity of competition for complementary services. If the platform is dominant in its primary market, it might have the ability and incentives to foreclose its rivals in the market for the complementary service by engaging, for instance, in tying. If such a foreclosure strategy is implemented and successful, the price of the complementary service will tend to increase and/or its quality will tend to decrease. 
Still on the negative side, a platform might also respond to increased liability with a shift towards a business model in which a marketplace co-exists with the physical inspection of products and delivery. Hence, there will be more room for an ecosystem in which different activities co-exist and the market power of the platform vis-à-vis sellers increases.

One can note that such a shift in the types of activities carried out by the platform may lead to both the creation of a bottleneck and an increase in the costs faced by the sellers (which may then be passed onto consumers). Currently, sellers are free to decide whether to adhere to the fulfillment program run by the platform or to use their own logistics. Products are not inspected and verified, but sellers are required to maintain documentation and authorization. With more stringent liability, an e-commerce platform might have an incentive to require sellers to join its fulfillment program to increase its control over the products they sell and reduce its liability costs. Moreover, the additional screening carried out by the platform might increase the fee sellers pay to be active on that platform.

Consider now an ad-based platform, such as a social media platform or an ad-funded hosting platform. If such a platform is subject to more stringent liability, it may decide to take more control over the content of ads it shows to its users to decrease its liability costs. One (arguably extreme) way of achieving this is to stop outsourcing ad inventories to third-party ad networks and become vertically integrated into the online advertising business.Footnote 29 While this may lead to technical efficiencies, it can also give rise to a conflict of interest, market power, and anti-competitive foreclosure (CMA, 2019). Liability might further alter a social media platform’s choice of revenue model, inducing it to choose a subscription-based model rather than an advertising-based one. For example, Liu et al. (2021) present a model in which social media platforms are immune from liability and obtain two results: if the platform finds it optimal not to moderate content, an ad-funded platform will have less extreme content than a subscription-based platform; on the contrary, if the platform finds it optimal to moderate content, an advertising-based platform will lead to more extreme content. Although beyond the scope of their paper, one might conjecture that the introduction of a liability regime will induce a platform to moderate content and a strict liability regime might even induce the platform to select a subscription-based revenue model (as better at maximizing profits, other things equal).

To see another potential effect of a change in the liability regime on the business model choice, consider the case of a hosting platform (e.g., YouTube) that offers both a basic ad-supported service that is offered for free to users and a premium service with a subscription charge but no advertising. If the platform considers that ads are potentially a major source of liability, it may rely more on the premium service and less on advertising to generate revenues. As a result, this may lead to a reduction in consumer welfare as users with a low willingness-to-pay for such a service may have no alternatives.

To sum up, a change in the liability regime applying to platforms may give them incentives to change their business model (either in an incremental or radical way) to reduce their liability costs. This change can be either beneficial or detrimental to consumers and social welfare depending on the precise circumstances.

4.3 Terms and conditions

A change in the liability regime applying to platforms is likely to affect not only their monetization strategies but also their terms and conditions. There are at least two ways through which this could happen.

Consider first the example of a marketplace platform allowing interactions between buyers and sellers. One way in which a policy change regarding platform liability can affect platforms’ terms and conditions is through contractual liability. More precisely, a platform could design its terms and conditions in a way that “neutralizes”, at least partially, its own liability vis-à-vis users. For instance, an e-commerce platform may ask buyers to waive their right to sue the platform if they buy a counterfeit product on the platform (assuming this is forbidden by neither the laws governing platform liability nor consumer protection law).Footnote 30 Note, however, that contractual liability terms cannot protect a platform against harmed parties that are not users of the platform, as is the case for intellectual property holders in the case of counterfeit products and copyright, and individuals harmed by hate speech. Moreover, even if the platform has the ability to impose a contractual clause that makes the seller the sole liable party, it may not have the incentive to do so if it expects such a clause to reduce the attractiveness of the platform relative to other distribution channels (including competing platforms) in a significant way.

The above-described unintended effects are less likely to be present in social media and hosting platforms. Yet, terms and conditions may change in response to a change in the liability regime in the way the platform collects and monetizes user data. Consider for example hosting and social media platforms that rely primarily on the collection and use of personal data for the monetization of the services that are offered. In other words, customers pay for the service with their personal data (which could be either used internally by the platform or shared with third parties). A more stringent liability regime and the corresponding increase in liability-related costs could lead to an increase in data collection and use. This can be explained by the decrease in the net value attached to attracting an extra user and can be interpreted as an alternative way of passing on the increase in screening costs onto users.Footnote 31 Similarly, a platform may want to make its users liable for actions committed on the platform such as the uploading or production of harmful or illegal content. A more stringent liability regime may induce platforms to remove anonymity by asking users to register with their ID cards or provide additional information. This might adversely affect consumer privacy as platforms may be tempted to monetize this information by sharing it with third parties.

4.4 Platforms’ investments

A policy change regarding platform liability is likely to have an impact on platforms’ investments in several areas. The discussion that follows applies to all types of platforms we have focused on so far.

The first paramount effect of introducing liability is on investments in ex-ante verification and ex-post monitoring, reporting, and removal tools that allow a given platform to take precautions and possibly comply with the conditions for a liability exemption (if such an exemption regime is available). A more stringent liability regime gives the platform stronger incentives to prevent harm and, therefore, may spur its investments in the adoption of technologies that aim at deterring platform users from engaging in illegal activities for which the platform is liable.Footnote 32 Moreover, more stringent liability may also increase the platform’s incentives to invest in tools that help it and/or harmed users to identify primary wrongdoers as this may reduce the cost of compensating harmed parties (in case the joint-and-several liability rule applies to the platform and the primary wrongdoers).

Another area in which the liability regime is likely to have a significant impact on investments is innovation. A more stringent liability rule can affect both the rate and direction of innovation. First, the increase in the total cost of providing the service induced by the increase in liability costs will have an adverse effect on future profits from radical innovations that create new services, which could decrease platforms’ incentives to carry out such innovations. This argument holds not only for an incumbent platform but also for a potential entrant, thus highlighting another potential entry barrier induced by a more stringent regime. Note, however, that this argument may not apply to incremental innovations improving the quality of services that are already offered by an incumbent platform. The reason is that incentives to innovate are driven by the difference between post-innovation and pre-innovation profits, and, in this case, both terms may be affected by the increase in liability costs.

Second, a change in the liability rule applying to platforms may also affect the level of investments in innovation indirectly through its effect on competition between platforms. As emphasized before, a more stringent liability regime may lead to a decrease in competition because of an increase in entry barriers. Less intense competition will, in turn, affect the platform’s incentives to innovate. The long-standing literature on the effect of competition on innovation suggests that the level of innovation is either increasing in the degree of competition or is an inverse U-shaped function of competition intensity (see e.g., Aghion et al., 2005 and Vives, 2008). In both cases, a decrease in competition in a market that is highly concentrated (e.g., a market with a dominant platform) will tend to lower the incentives to innovate.

Third, the degree of stringency of the liability regime that platforms are subject to may also affect the “direction” of innovation, i.e., platforms’ choice between different types of innovations. More specifically, a (more) stringent liability rule is likely to make platforms favor (more) innovations leading to services and functionalities that do not entail high liability costs for them and innovations that reduce liability costs associated with the services offered by the platform. Whether such a distortion in the direction of innovation is socially desirable is an open question.

4.5 Third parties’ investments and their interplay with platform’s investments

The liability regime applying to platforms affects not only their investments but also the investments, and more generally the actions, of third parties. In what follows, we present a discussion that applies to a marketplace platform in which buyers and sellers have a transaction.

Consider first the case in which a marketplace is populated both by IP-infringing sellers (e.g., counterfeiting sellers) and brand owners, who invest in new (innovative) products. In this case, imposing or inducing a more stringent screening policy because of a more stringent liability regime might impact the ex-ante incentives of brand owners to develop new products (see Jeon et al., 2021 for a formal analysis). To understand how, suppose that brand owners make their investment decisions to develop a new product upon observing the platform’s screening policy. If brand owners expect to face competition from IP-infringers, their strategies might change accordingly. The most obvious effect is that brand owners will reduce their ex-ante investment effort given the ex-post costs and harm they would face when competing with IP-infringers or flagging these products. In this case, imposing a more stringent liability rule might sustain brand owners’ investment incentives. However, things might be different if, for example, the presence of IP-infringers that do not cause harm to consumers turns out to increase the value of the platform and attract more buyers. In this case, it is not a priori clear that brand owners would prefer a more stringent liability policy as a demand expansion effect should be weighed against the direct harm suffered by the brand owners.

Consider, for instance, the case of a right holder that can make investments to monitor (and possibly sue) IP-infringers on a given e-commerce platform. Assume that the liability regime applying to the platform becomes more stringent. We (and the right holder) would then expect the platform to invest more in detecting and banning sellers of counterfeit goods, thus exerting a positive externality on the right holder. This externality may make the right holder’s benefit from investing in monitoring and filtering lower, which would lead to a decrease in her investment. This is a typical free-rider problem and this example highlights a potential crowding-out effect that could offset the positive impact of more stringent platform liability on investments in actions reducing the presence of illegal goods on the platform.Footnote 33

There may, however, exist forces that work in the opposite direction, i.e., that amplify the direct effect of more stringent platform liability on such investments. To illustrate this, consider an e-commerce platform again but focus now on the incentives of a manufacturer (whose product can be sold on the platform) to invest in product safety, for instance, to comply with a certain level of safety below which the good is deemed illegal. If the manufacturer anticipates or observes an increase in the platform’s investments in the detection of unsafe products, it will have higher incentives to improve the safety of its product. To understand why, note that the manufacturer’s investment incentives are driven by the difference between its expected profit if it invests and its expected profit if it does not. The increase in the platform’s detection efforts lowers the latter by reducing the probability that an unsafe product would go undetected and either leaves the former unchanged or increases it (e.g., because the lower risk of buying an unsafe product increases the number of buyers on the platform). In both cases, the manufacturer’s incentives to invest in product safety increase. We can therefore conclude that a more stringent liability regime for platforms may lead to a decrease in some third parties’ investments in harm-reducing actions but may also induce an increase in such investments on the part of other third parties.
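The manufacturer’s trade-off can be made concrete with a short sketch. It assumes, purely for illustration, that a detected unsafe product earns zero profit and that the profit from a safe product does not depend on detection effort; the function name, parameter names, and numerical values are hypothetical and not drawn from the paper.

```python
def investment_gain(p_detect, profit_safe=10.0, profit_unsafe=12.0, safety_cost=4.0):
    """Manufacturer's gain from investing in safety (hypothetical parameters).

    p_detect is the probability that the platform detects and removes an
    unsafe product. A detected unsafe product is assumed to earn nothing.
    """
    if not 0.0 <= p_detect <= 1.0:
        raise ValueError("p_detect must be a probability")

    # Expected profit if the manufacturer invests: safe product, net of the cost.
    expected_if_invest = profit_safe - safety_cost

    # Expected profit if it does not invest: unsafe product survives
    # only with probability (1 - p_detect).
    expected_if_not = (1.0 - p_detect) * profit_unsafe

    return expected_if_invest - expected_if_not


if __name__ == "__main__":
    for p in (0.2, 0.6):
        print(f"detection probability {p:.1f}: gain from investing = {investment_gain(p):+.2f}")
```

With these illustrative numbers the gain from investing is negative when detection is unlikely and positive when detection is likely, so stronger detection by the platform raises the manufacturer’s incentive to invest, as argued above.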

4.6 Over-removal and errors

Another effect of imposing more stringent liability rules relates to the errors that imperfect screening technologies might imply. This discussion applies to all platforms we have considered in this paper.

First, monitoring and acquisition of information may be subject to errors. For instance, an automatic detection tool may perform poorly in understanding context and, hence, in disentangling the difference between what should be considered lawful and what should be considered unlawful. In social media platforms, for example, imperfections can concern the distinction between (unlawful) hate speech and lawful, but potentially harmful, content.Footnote 34 On hosting and marketplace platforms, errors can be related to what actually infringes IP rights and copyrighted material and what instead is considered legitimate content and imitations. By stimulating more enforcement, a more stringent liability regime might reduce the extent to which type-II errors (i.e., false negatives, where unlawful material goes undetected) are present but increase the presence of type-I errors (i.e., false positives, where lawful material is wrongly flagged). As this influences the composition of the content and products provided on the platforms, this may, in turn, impact users’ and buyers’ participation in the platform environment and, hence, the pricing strategies of the platform as well.

Due to the risk of being held liable for not promptly removing illegal content, a platform may take a defensive stance and over-remove potentially lawful content or products that lie in a “grey area”. This is especially the case when the probability of being sued for illegal removal is very low or, alternatively, the damage requested by a right holder (in the case of protected content) is large enough.Footnote 35 Such over-removal affects the users and sellers whose lawful content or products are taken down and could therefore lead to a reduction of their welfare. Two recent papers provide some interesting insights in this respect. Liu et al. (2021) study the incentive of a social media platform to moderate content and find that imperfect targeting might lead to over-removal of moderate content relative to extremist content. De Chiara et al. (2021) study the incentives of a hosting platform to take down content upon receiving notice by a right holder. They show that the platform will challenge the notice if the gains associated with keeping the content online are large enough, whereas it will not challenge the notice and will instead remove the content otherwise. Anticipating this, in the latter case, if the screening device employed by the right holder is imperfect and type-I errors can emerge, there are circumstances under which the right holder will never exert effort to improve its screening technology, thus leading to over-removal.
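
The defensive-removal logic at the start of this paragraph can be sketched as a simple decision rule. The notation below is purely illustrative and is not taken from the paper or from the models of Liu et al. (2021) or De Chiara et al. (2021); it assumes a risk-neutral platform facing a single grey-area item, with D > v.

```latex
% Illustrative notation (not taken from the paper):
%   q : probability that a grey-area item is in fact unlawful
%   v : platform's revenue from keeping the item online
%   D : damages paid if the item is kept and turns out to be unlawful
%   s : probability of being sued for wrongly removing a lawful item
%   L : damages paid in that case
% Keeping the item yields v - qD; removing it yields -(1-q) s L.
\[
\text{remove} \iff qD \;>\; v + (1-q)\,sL
\iff q \;>\; q^{*} \equiv \frac{v + sL}{D + sL},
\]
\[
\frac{\partial q^{*}}{\partial D} \;=\; -\frac{v + sL}{(D + sL)^{2}} \;<\; 0,
\qquad
\frac{\partial q^{*}}{\partial s} \;=\; \frac{L\,(D - v)}{(D + sL)^{2}} \;>\; 0
\quad \text{for } D > v .
\]
```

Under these assumptions the removal threshold q* falls, so that more grey-area items are taken down, when the damages D claimed by a right holder rise or when the probability s of being sued for wrongful removal falls.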

4.7 Incumbency advantage and competition

Some considerations can also be made on the interplay between liability rules and competition. As already discussed, liability regimes impact both the fixed and marginal costs a platform faces to operate in the market. The lenient liability regime that online platforms have been subject to in past years may have helped existing platforms to grow and to become better equipped, technically and financially, than entrant platforms to comply with more stringent liability. Thus, a more stringent one-size-fits-all liability regime for platforms might further amplify asymmetries in the market.Footnote 36 To see why, consider a competitive environment in which a big incumbent platform faces smaller potential entrants. A stringent one-size-fits-all liability regime increases the cost of entry and may result in the potential entrants deciding to stay out of the market, which would benefit the incumbent platform. Of course, this platform will also suffer from higher liability costs, but this negative effect on its profit may well be outweighed by the positive effect resulting from the increase in entry barriers. This is to the detriment of market participants, who may face higher prices and fewer choices. An interesting analogy can be drawn with the implementation of the General Data Protection Regulation (GDPR), which may have helped big tech giants (Batikas et al., 2021; Gal & Aviv, 2020; Johnson et al., 2020).
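
The entry-deterrence argument can be made concrete with a stylized comparison. The notation is an illustrative assumption of ours, not the paper's: a single incumbent, a single potential entrant, and a move from a lenient regime ℓ₀ (under which entry occurs) to a stringent regime ℓ₁ (under which it does not).

```latex
% Illustrative notation (not taken from the paper):
%   \ell        : stringency of the liability regime, \ell_0 < \ell_1
%   c_I(\ell), c_E(\ell) : liability-related costs of incumbent and entrant, increasing in \ell
%   F           : entrant's other fixed cost of entry
%   \pi_E       : entrant's gross profit after entry
%   \pi_I^{M}, \pi_I^{D} : incumbent's gross profit without and with entry, \pi_I^{M} > \pi_I^{D}
\[
\text{entry occurs} \iff \pi_E - F - c_E(\ell) \;\ge\; 0 .
\]
% If entry occurs under \ell_0 but not under \ell_1, the incumbent's profit changes by
\[
\bigl[\pi_I^{M} - c_I(\ell_1)\bigr] - \bigl[\pi_I^{D} - c_I(\ell_0)\bigr]
\;=\; \underbrace{\bigl(\pi_I^{M} - \pi_I^{D}\bigr)}_{\text{gain from deterred entry}}
\;-\; \underbrace{\bigl[c_I(\ell_1) - c_I(\ell_0)\bigr]}_{\text{extra liability cost}} .
\]
```

In this sketch the incumbent benefits from the stricter regime whenever the gain from deterred entry exceeds its own additional liability cost, which is more likely when its cost c_I rises less steeply with ℓ than the entrant's cost c_E.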

Another reason why some platforms may derive an advantage from being an incumbent is that they have accumulated large amounts of user data which can be a valuable input for their monitoring and verification technologies. A general concern for competition policy is whether exclusive access to a vast amount of data can confer a competitive advantage in the market to provide consumers or users with better services, and in turn collect additional data (for a discussion on this feedback loop, see e.g., Biglaiser et al., 2019). A change towards a more stringent liability regime adds another dimension to the data-driven competitive advantage of incumbents as these platforms can use past (user) data to better “train the algorithms” and reduce their liability costs relative to new entrants that simply lack these data. As a result, the incumbents would not only be more likely to provide better services and capitalize on this advantage but would be more compliant than their rivals, who might struggle to expand their level of activity. This advantage is amplified if the incumbent platform is “big” as the amount of data collected is typically proportional to the platform’s activity, and/or if the new entrants are “small” as it would take them more time to collect enough data to have an effective monitoring technology.Footnote 37

5 Policy discussion

The economic analysis in Sect. 4 sheds light on some of the general trade-offs that policymakers should account for when designing a liability regime for platforms. In this section, we take our analysis one step further by studying the implications of three specific aspects of liability rules.

5.1 Incentivizing platforms to monitor

It is fundamental that service providers should not be discouraged from taking proactive measures that increase their level of knowledge on misconduct occurring on the platform. In the European Union, as discussed broadly by several scholars (e.g., Buiten et al., 2020), platforms might not have appropriate incentives to monitor online activities. This is because the current liability regime grants hosting platforms liability exemption based on the so-called “knowledge standard”. In other words, platforms can benefit from immunity when they do not know about illegal ongoing activity or information hosted on the platform and when they act expeditiously to block access to it upon notification. One often proposed solution is the adoption of a “Good Samaritan” approach, already present in the US, which exempts platforms from liability when pro-active measures to detect online misconduct are undertaken (Buiten et al., 2020). Such an approach would not only induce platforms to observe, monitor, and become informed about online misconduct but would also increase their incentives to ban and report wrongdoers in a timely fashion because of the reduced expected costs that banning and reporting would imply.Footnote 38 In this sense, a “Good Samaritan” rule may foster self-regulation. We note that the recent proposal unveiled by the European Commission for a Digital Services Act fills the void that was present in the previous regulation by introducing a liability exemption also for those intermediary services that “carry out voluntary own-initiative investigations or other activities aimed at detecting, identifying and removing, or disabling of access to, illegal content” (Art. 6).

Regarding the presence of errors, it is important that an optimal liability system should incentivize platforms to intervene in a reasonable amount of time to limit or avoid harm as well as to invest in prediction accuracy when using automatic tools. To be effective and avoid platform cherry-picking on what to remove (because risky) and what to maintain (because generating substantial revenues), a liability regime should not only promote platform intervention but also help third parties to report alleged violations and invest in screening technologies as well.Footnote 39

In the same vein, it is also important to discourage platforms from undertaking excessive actions. To tackle the issue of over-removals and limit misuse of the platform’s notice and takedown system, the European Commission’s recent proposal for a Digital Services Act requires online platforms to suspend, after a warning, the processing of notices and complaints submitted by those who “frequently submit notices or complaints that are manifestly unfounded” (Art. 20).

5.2 Procedural obligations

Let us now consider a liability regime that grants liability exemption to online intermediaries that comply with a series of procedural obligations. Such obligations might be desirable if, for example, they induce platforms to unambiguously promote user-generated reporting.

For instance, an e-commerce platform could be eligible for liability exemption if it implements a simplified and transparent system to report illicit practices and also responds in a timely manner to solicitations and feedback requests from sellers that have been excluded from the platform or whose listings have been taken down.Footnote 40 This would reduce the occurrence and impact of over-removals of lawful content and products (i.e., type-I errors). At the same time, a more transparent system in which sellers can dispute removal requests may avoid opportunistic behavior from other sellers filing notice and takedown requests with the mere objective of harming competitors. In the context of social media platforms, liability exemption could be granted to a platform conditional on (i) providing systems to effectively report hate speech and all types of misconduct and (ii) taking proactive measures to inform its users about its moderation policy. On the other hand, no liability exemption should be granted for unlawful content that the platform sponsors, renders prominent, or indirectly makes viral.

To further increase transparency on platform activities, it would be advisable to have platforms provide reports and summary statistics to the public.Footnote 41 For instance, a social media platform could be required to provide information about the number of fake accounts being blocked, the types of content typically moderated, the number of reports filed by users (e.g., flagging a post or a user), as well as the number of groups shut down for sharing illegal content. By the same token, e-commerce platforms could be required to provide information on the number of notifications received, the number of actions undertaken against sellers, the average time to reach a decision, as well as information on the effort made to tackle misconduct online. It is also crucial that transparency applies as well to complaints about over-removals and the way the platform handles them.Footnote 42 The European Commission’s proposal for a Digital Services Act imposes transparency reporting obligations on online intermediaries that are not microbusinesses, with detailed reports to be published yearly about the content moderation activity carried out by the platform’s owner. Similar transparency obligations are required for those services that, in the UK, fall within Category 2A/2B.

Moreover, since review and rating systems might be effective in disciplining sellers and informing buyers, a policymaker might be willing to grant liability exemption subject to the implementation of operational duties such as the provision of effective review and rating systems. In this case too, a review and rating system should be transparent and easily accessible, and should prevent (or ex post delist) fake reviews.

5.3 The distinction between small and large platforms

As discussed in Sect. 4, a change in the liability regime might affect competition because some platforms might be better equipped than others to deal with online misconduct. A liability regime can take these aspects into account by introducing additional clauses for platforms that are “big”.Footnote 43 For instance, liability may apply to large online intermediaries when tortfeasors are no longer prosecutable or identifiable. For example, e-commerce platforms could be held liable whenever a vendor selling counterfeits disappears from the radar of primary enforcement. This may induce the platform to take appropriate measures to identify sellers and to prevent them from engaging in hit-and-run opportunistic behavior, i.e., from joining the platform to sell illegal products and then disappearing and creating a new account.

Relatedly, one could wonder whether a similar gatekeeper liability should be applied when direct enforcement of primary liability becomes challenging because the tortfeasors and the harmed party are not located in the same country. While this would increase platforms’ incentives to facilitate the enforcement of primary liability (e.g., by investing more in monitoring), it could also have unintended adverse consequences. For instance, it could encourage e-commerce platforms to restrict cross-country transactions, which could be detrimental to consumers. A careful cost–benefit analysis is therefore needed to assess whether a policy implementing such a transfer of liability would be desirable. This cost–benefit analysis should also take into account the effect of such a policy on the actions of third parties, including potential wrongdoers. The reason is that the changes in platforms’ behavior resulting from this policy may induce changes in the behavior of third parties that can be either desirable or undesirable. For instance, as discussed in Sect. 4, an increase in the platform’s monitoring efforts could undermine the monitoring efforts of some third parties (e.g., intellectual property holders) but could also lead to an increase in the level of care of other third parties (e.g., manufacturers of potentially unsafe goods).

A liability regime that places a specific burden on big platforms could also offer the possibility for such platforms to get exempted from this burden if they take pro-welfare actions, such as sharing data and technologies with rivals for the purpose of identifying illegal content. As discussed in Sect. 4, increased liability is likely to result in a competitive advantage for those platforms that have collected data and acquired skills to deal with illegal content and online misconduct. Exemption from the additional clauses intended for “big” platforms could be granted, for instance, if platforms license their technologies or make their past (yet anonymized) data available to their (smaller) rivals.Footnote 44 For instance, an e-commerce platform may share pictures and information about the most widespread counterfeit products as well as any data that can help to “train the algorithms”. Such a policy would, at the same time, limit the potential adverse effects of imposing more stringent liability on “big” platforms and reduce the liability costs of small and entrant platforms, thereby reducing barriers to entry and expansion.

However, making big platforms subject to a more stringent liability regime than smaller ones could have the unintended effect of shifting illegal content and material from big platforms towards smaller ones.Footnote 45 This could strengthen the competitive advantage of big platforms if users observe or believe that the risk of being harmed (e.g., the risk of buying a counterfeit product) is larger on smaller platforms and, consequently, decide to favor (even more) big players for their online activities.Footnote 46 Again, one way of addressing this problem would be to encourage the transfer of technology and data from big platforms to smaller ones and, more generally, to support the development of a well-functioning market for monitoring tools. That would allow small platforms to detect and remove illegal content and material more effectively and to assure users that the likelihood of suffering harm on them is not higher than on big platforms.