Abstract

Customary confidence regions do not truly reflect in the majority of our geodetic applications the confidence one can have in one’s produced estimators. As it is common practice in our daily data analyses to combine methods of parameter estimation and hypothesis testing before the final estimator is produced, it is their combined uncertainty that has to be taken into account when constructing confidence regions. Ignoring the impact of testing on estimation will produce faulty confidence regions and therefore provide an incorrect description of estimator’s quality. In this contribution, we address the interplay between estimation and testing and show how their combined non-normal distribution can be used to construct truthful confidence regions. In doing so, our focus is on the designing phase prior to when the actual measurements are collected, where it is assumed that the working (null) hypothesis is true. We discuss two different approaches for constructing confidence regions: Approach I in which the region’s shape is user-fixed and only its size is determined by the distribution, and Approach II in which both the size and shape are simultaneously determined by the estimator’s non-normal distribution. We also prove and demonstrate that the estimation-only confidence regions have a poor coverage in the sense that they provide an optimistic picture. Next to the provided theory, we provide computational procedures, for both Approach I and Approach II, on how to compute confidence regions and confidence levels that truthfully reflect the combined uncertainty of estimation and testing.

Similar content being viewed by others

1 Introduction

To evaluate the quality of an estimator, it is not uncommon to compute the probability that a certain region, dependent on the estimator, covers the unknown true parameter (vector) or alternatively, compute the size of the region that corresponds with a certain preset value of the probability. Such region is called the confidence region/set and the corresponding probability is called its confidence level/coefficient (Koch 1999). Using the concept of confidence region and confidence level, one can evaluate the separation between the estimator and the unknown value it is aimed to estimate, and connect this separation to a probability. For a given confidence level, the smaller the size of the confidence region the higher the quality of the estimator, and for a given confidence region, the higher the confidence level the higher the quality of the estimator.

To construct the confidence region for the parameters of interest, one may take one of the following two approaches.

-

Approach I: One can define beforehand the shape of the region, e.g., spherical, ellipsoidal or rectangular, and then compute its size for a given confidence level (Hoover 1984; Šidák 1967; Hofmann-Wellenhof et al 2003); or

-

Approach II: One can have the confidence region determined by the contours of the probability density function (PDF) of the estimator for a given confidence level (Hyndman 1996; Gundlich and Koch 2002; Teunissen 2007).

Examples of Approach I are the circular error probable (CEP) or the spherical error probable (SEP) as used in the field of navigation for instance. CEP/SEP is defined as the radius of the circle/sphere around the to-be-estimated point such that it contains a certain fraction, say \(90\%\) or \(95\%\), of the estimator’s outcomes. Examples of Approach II are the error ellipses/ellipsoids as used in surveying and geodetic networks.

Approach I is the simplest and gives information of how often one can expect the solution to fall within the predefined shape region. Approach II is more complex, but also more informative since it shows through the shape of the confidence region where in space the solution is ‘weak’ and where ‘strong.’ We note that if the estimator has an elliptically contoured distribution (Cambanis et al 1981), e.g., normal distribution, then there would be no difference between Approach I and Approach II provided that the confidence region in Approach I is taken to be ellipsoidal and defined by the estimator’s variance matrix.

Whether Approach I or Approach II is taken, it is important to realize that the properties of the confidence region are determined by the probabilistic properties of the estimator. The size and, in case of Approach II, the shape of the confidence region are driven by the estimator’s PDF. It is therefore crucial, in order to have a realistic confidence region and one that properly reflects the quality of the estimator, that one works with the correct PDF of one’s estimator. It is here that we believe improvements in our geodetic and navigational practice of data analysis and quality control are in order.

In our daily data processing, it is common practice to combine methods of parameter estimation and hypothesis testing. Such examples can be found in a wide variety of different applications, like geodetic quality control (Kösters and Van der Marel 1990; Seemkooei 2001; Perfetti 2006), navigational integrity (Teunissen 1990; Gillissen and Elema 1996; Yang et al 2014), deformation analysis and structural health monitoring (Verhoef and De Heus 1995; Yavaşoğlu et al 2018; Durdag et al 2018; Lehmann and Lösler 2017; Nowel 2020), and GNSS integrity monitoring (Jonkman and De Jong 2000; Kuusniemi et al 2004; Hewitson and Wang 2006; Khodabandeh and Teunissen 2016). However, when combining parameter estimation and statistical testing, the finally produced estimator will have inherited uncertainties stemming from both the estimation and testing steps. It is therefore of importance, when constructing confidence regions, to take this combined uncertainty into account. In (Teunissen 2018) it was shown, by means of the PDF of the DIA-estimator, that this combined uncertainty cannot be captured by the usually assumed normal distribution. Hence, the customary procedure followed in practice of ignoring the impact of testing on estimation (Wieser 2004; Devoti et al 2011; Dheenathayalan et al 2016; Huang et al 2019; Niemeier and Tengen 2020) will produce faulty confidence regions and therefore provide an incorrect description of the estimator’s quality.

The aim of the present contribution is to address how the interplay between estimation and testing affects the confidence region and confidence level of the parameters of interest. We prove and demonstrate that the estimation-only confidence regions have a poor coverage in the sense that they provide a too optimistic picture and that they should thus be made larger in order to contain the required probability of one’s estimator. Next to the provided theory, we also provide computational procedures, for both Approach I and Approach II, on how to correctly compute and construct confidence regions and their confidence levels.

In doing so, our focus is on the designing phase prior to when the actual measurements are collected. When designing a measurement set up (e.g., a geodetic network), one will usually assume that the actual measurements and adjustment will also be done under the working (null) hypothesis \({\mathcal {H}}_{0}\). That is, a priori one has no reason to believe why a specific other model would be valid than \({\mathcal {H}}_{0}\). But one does know a priori what estimation and testing procedure one will apply, and it is on the basis of this knowledge that one then should construct the appropriate confidence regions, in order to be able to judge one’s measurement design properly.

This contribution is structured as follows. A brief review of the necessary background theory is provided in Sect. 2. We describe the null and alternative hypotheses, highlight the role of the misclosure space partitioning when testing these hypotheses, and provide and discuss the statistical distribution of the DIA-estimator. It is hereby emphasized that its distribution is non-normal even if one works with linear models and normally distributed observations. In Sect. 3, we consider, also as reference for later sections, the customary estimation-only case when testing is not taken into account. In Sect. 4, we discuss the method of constructing confidence region of Approach I. It is shown if one neglects the testing contribution and assumes the resulting estimator to be normally distributed, that the resulting confidence region of Approach I would actually cover a smaller probability than one presumes with the preset confidence level. Then, in Sect. 5, we take Approach II to form the confidence region of the DIA-estimator using its PDF contours. It is demonstrated that the confidence region resulting from Approach II can be a non-convex and even disconnected region. In Sect. 6, we describe the necessary steps for the numerical algorithms. This is first done for evaluating the confidence level of the DIA-estimator corresponding with any translational-invariant confidence region, which is then followed by a description of the numerical algorithms for constructing confidence regions based on both Approach I and Approach II. Finally, a summary with conclusions is provided in Sect. 7.

We use the following notation: \({\mathsf {E}}(\cdot )\) and \({\mathsf {D}}(\cdot )\) denote the expectation and dispersion operator, respectively. The n-dimensional space of real numbers is denoted as \({\mathbb {R}}^{n}\). Random vectors are indicated by use of the underlined symbol ‘\({\underline{\cdot }}\)’. Thus \({\underline{x}}\in {\mathbb {R}}^{n}\) is a random vector, while x is not. \(\hat{{\underline{x}}}\) and \(\bar{{\underline{x}}}\) denote the BLUE-estimator and the DIA-estimator of x, respectively. The squared weighted norm of a vector, with respect to a positive-definite matrix Q, is defined as \(\Vert \cdot \Vert ^{2}_{Q}=(\cdot )^{T}Q^{-1}(\cdot )\). \({\mathcal {H}}\) is reserved for statistical hypotheses, \({\mathcal {P}}\) for regions partitioning the misclosure space, \({\mathcal {N}}(x,Q)\) for the normal distribution with mean x and variance matrix Q, and \(\chi ^{2}(n,\xi )\) for the Chi-square distribution with n degrees of freedom and the non-centrality parameter of \(\xi \). \({\mathsf {P}}(\cdot )\) denotes the probability of the occurrence of the event within parentheses. The symbol \(\overset{{\mathcal {H}}}{\sim }\) should be read as ‘distributed as \(\ldots \) under \({\mathcal {H}}\).’ The superscripts \(^{T}\) and \(^{-1}\) are used to denote the transpose and the inverse of a matrix. The complement of a region is denoted by the superscript \(^{c}\). The expression ‘iff’ is used for ‘if and only if.’

2 Brief review of background theory

In this section, we provide a brief review of estimation \(+\) testing inference with corresponding DIA-estimator and describe the concept of translational-invariant confidence regions. We restrict our attention to the linear model with normally distributed observables. Departures from these assumptions, such as nonlinearity and/or distributional approximations are not taken into account, but see, e.g., (Teunissen 1989; Zaminpardaz et al. 2017; Niemeier and Tengen 2017; Lösler et al. 2021).

2.1 Integrated estimation and testing

Let \({\underline{y}}\in {\mathbb {R}}^{m}\) be the normally distributed random observable vector with an unknown mean but a known variance matrix. The following null and \(\kappa >0\) alternative hypotheses are put forward on the mean of \({\underline{y}}\),

for \(i=1, \ldots , \kappa \), with \(x\in {\mathbb {R}}^{n}\) the estimable unknown parameter vector, \(A\in {\mathbb {R}}^{m\times n}\) the known design matrix of rank\((A)=n\), and \(Q_{yy}\in {\mathbb {R}}^{m\times m}\) the positive-definite variance matrix of \({\underline{y}}\). We assume that \([A~~C_{i}]\) is a known matrix of full rank and \(b_{i}\) is an unknown vector.

To test the hypotheses in (1) against one another and select the most likely one, it is required to have redundant measurements under \({\mathcal {H}}_{0}\), i.e., \(r=m-n\ne 0\). In that case, an ancillary statistic, known as the misclosure vector \({\underline{t}}\in {\mathbb {R}}^{r}\), can be formed as (Teunissen 2006)

where \(B\in {\mathbb {R}}^{m\times r}\) is a matrix of rank\((B)=r\), such that \([A~~B]\in {\mathbb {R}}^{m\times m}\) is invertible and \(A^{T}B=0\), see, e.g., (Yang et al 2021; Teunissen 2003, p. 62) for constructing matrix B. With \({\underline{y}}\overset{{\mathcal {H}}_{0}}{\sim }{\mathcal {N}}(Ax,~Q_{yy})\) and \({\underline{y}}\overset{{\mathcal {H}}_{i}}{\sim }{\mathcal {N}}(Ax+C_{i}b_{i},~Q_{yy})\) for \(i=1,\ldots ,\kappa \), the misclosure vector is then distributed as

with \(Q_{tt}=B^{T}Q_{yy}B\) the variance matrix of \({\underline{t}}\) and \(C_{t_{i}}=B^{T}C_{i}\). As \({\underline{t}}\) has a known PDF under \({\mathcal {H}}_{0}\), which is the PDF of \({\mathcal {N}}(0,~Q_{tt})\), any statistical testing procedure is driven by the misclosure vector \({\underline{t}}\) and its known PDF under \({\mathcal {H}}_{0}\). A testing procedure can be established through unambiguously assigning the outcomes of \({\underline{t}}\) to the hypotheses \({\mathcal {H}}_{i}\)’s for \(i=0,1,\ldots ,\kappa \), which can be realized by partitioning the misclosure space \({\mathbb {R}}^{r}\) in \(\kappa +1\) subsets \({\mathcal {P}}_{i}\subset {\mathbb {R}}^{r}\) (\(i=0,1,\ldots ,\kappa \)). The testing procedure is then unambiguously defined as (Teunissen 2018)

Therefore, the testing procedure’s decisions are driven by the outcome of the misclosure vector \({\underline{t}}\). If \({\mathcal {H}}_{i}\) is true, then the decision is correct if \({\underline{t}}\in {\mathcal {P}}_{i}\), and wrong if \({\underline{t}}\in {\mathcal {P}}_{j\ne i}\). Note, in single-redundancy case \(r=1\), that \({\mathcal {P}}_{1}=\cdots ={\mathcal {P}}_{\kappa }={\mathcal {P}}_{0}^{c}\), implying that the alternative hypotheses are not distinguishable from one another.

Statistical testing is usually followed by the estimation of the parameters of interest x. These parameters then get estimated according to the model selected through the testing procedure, as

where \(\hat{{\underline{x}}}_{0}\) and \(\hat{{\underline{x}}}_{i\ne 0}\) are the BLUEs of x under \({\mathcal {H}}_{0}\)- and \({\mathcal {H}}_{i\ne 0}\)-model, \(A^{+}=Q_{{\hat{x}}_{0}{\hat{x}}_{0}}A^{T}Q_{yy}^{-1}\) the BLUE-inverse of A with \(Q_{{\hat{x}}_{0}{\hat{x}}_{0}}=(A^{T}Q_{yy}^{-1}A)^{-1}\) the variance matrix of \(\hat{{\underline{x}}}_{0}\), and \(L_{i}=A^{+}C_{i}C_{t_{i}}^{+}\) with \(C_{t_{i}}^{+}= (C_{t_{i}}^{T}Q_{tt}^{-1}C_{t_{i}})^{-1}C_{t_{i}}^{T}Q_{tt}^{-1}\). Being linear functions of the normally distributed random vector \({{\underline{y}}}\), the individual estimators \(\hat{{\underline{x}}}_{i}\)’s (\(i=0,1,\ldots ,\kappa \)) are all normally distributed with a mean of x under \({\mathcal {H}}_{0}\).

2.2 DIA-estimator

As (5) shows, the outcome of testing (4) dictates how the parameter vector x gets estimated. The probabilistic properties of such an estimation \(+\) testing combination is captured by the DIA-estimator of Teunissen (2018). The DIA-estimator of the parameter vector x is given as

where \(p_{i}({\underline{t}})\) is the indicator function of region \({\mathcal {P}}_{i}\) (cf. 4), i.e., \(p_{i}({\underline{t}})=1\) for \({\underline{t}}\in {\mathcal {P}}_{i}\) and \(p_{i}({\underline{t}})=0\) for \({\underline{t}}\notin {\mathcal {P}}_{i}\). The PDF of \(\bar{{\underline{x}}}\) is then driven by the probabilistic properties of the estimators \(\hat{{\underline{x}}}_{i}\)’s (\(i=0,1,\ldots ,\kappa \)) and \({\underline{t}}\), which under \({\mathcal {H}}_{i}\) is given by

where \(f_{\hat{{\underline{x}}}_{0}}(\xi |{\mathcal {H}}_{i},x)\) denotes the PDF of \({\hat{x}}_{0}\) under \({\mathcal {H}}_{i}\) which is characterized by the parameter vector x. The conditional PDF \(f_{\bar{{\underline{x}}}|{\underline{t}} \in {\mathcal {P}}_{j}}(\xi |{\underline{t}} \in {\mathcal {P}}_{j},{\mathcal {H}}_{i},x)\) conditioned on \({\underline{t}} \in {\mathcal {P}}_{j}\) is of the form

In the above equation, \(f_{\hat{{\underline{x}}}_{j}, {\underline{t}}}(\xi , \tau |{\mathcal {H}}_{i},x)\) is the joint PDF of \(\hat{{\underline{x}}}_{j}\) and \({\underline{t}}\) under \({\mathcal {H}}_{i}\) which is characterized by the parameter vector x and, given (5), can be obtained as

Note, although the estimators \(\hat{{\underline{x}}}_{i}\)’s (\(i=0,1,\ldots ,\kappa \)) and the misclosure \({\underline{t}}\) are normally distributed, that the DIA-estimator \(\bar{{\underline{x}}}\) is not due to its nonlinear dependency on \({\underline{t}}\). Figure 1 illustrates the non-normal PDF of \(\underline{{\bar{x}}}\) for a simple binary-hypothesis testing example, with the null hypothesis \({\mathcal {H}}_0\) and the alternative \({\mathcal {H}}_a\), as given in (Teunissen 2018), Examples 5 and 8. The results are shown for different values of false-alarm probability \({\mathsf {P}}_{\mathrm{FA}}\), standard deviation of \(\hat{{\underline{x}}}_{0}\) denoted by \(\sigma _{{\hat{x}}_{0}}\), standard deviation of \({{\underline{t}}}\) denoted by \(\sigma _{t}\), and bias values \(b_{a}\) under \({\mathcal {H}}_{a}\). The difference between the panels in Fig. 1 and those of Figures 6–8 in (ibid) is that the latter were accidentally based on other values than given in their captions (e.g., standard deviations instead of variances).

PDF of \(\underline{{\bar{x}}}\) under, from left to right, \({\mathcal {H}}_{0}\), \({\mathcal {H}}_{a}\) with \(b_{a}=1\), \({\mathcal {H}}_{a}\) with \(b_{a}=3\), and \({\mathcal {H}}_{a}\) with \(b_{a}=5\). The top panels correspond to \(\sigma _{{\hat{x}}_{0}}^{2}=0.5\) and \(\sigma _{t}^{2}=2\), while the bottom panels to \(\sigma _{{\hat{x}}_{0}}^{2}=0.25\) and \(\sigma _{t}^{2}=1\). For the results under \(\mathcal {H}_{a}\), \(L_ a\) was set to 0.5

In this contribution, we focus on confidence region and confidence level evaluation under \({\mathcal {H}}_{0}\), and thus we work with estimators’ PDF under \({\mathcal {H}}_{0}\). Using the total probability rule with correct acceptance \(\mathrm{CA}=\{{\underline{t}} \in {\mathcal {P}}_{0}, {\mathcal {H}}_{0}\}\) and false alarm \(\mathrm{FA}=\{{\underline{t}} \notin {\mathcal {P}}_{0}, {\mathcal {H}}_{0}\}\) events, the PDF of \(\bar{{\underline{x}}}\) under \({\mathcal {H}}_{0}\) can be decomposed as

with the false-alarm probability \({\mathsf {P}}_{\mathrm{FA}}\) and the correct-acceptance probability \({\mathsf {P}}_{\mathrm{CA}}\) satisfying \({\mathsf {P}}_{\mathrm{CA}}+{\mathsf {P}}_{\mathrm{FA}}=1\). The second equality of (10) is a consequence of \(\bar{{\underline{x}}}|\mathrm{CA}=\hat{{\underline{x}}}_{0}|\mathrm{CA}\) and that \(\hat{{\underline{x}}}_{0}\) and \({\underline{t}}\) are independent from each other, i.e., \(\hat{{\underline{x}}}_{0}|\mathrm{CA}=\hat{{\underline{x}}}_{0}\).

2.3 Confidence region

The general definition of a confidence region and confidence level reads as follows (Schervish 1995).

Definition (Confidence region & confidence level) Let \({\underline{x}}\in {\mathbb {R}}^{n}\) be a random vector of which the PDF is dependent on the unknown parameter vector \(x\in {\mathbb {R}}^{n}\). Let \({\mathcal {C}}_{\alpha }({\underline{x}})\subset {\mathbb {R}}^{n}\) be a random set of which the location, size and shape are determined by \(\alpha \) and \({\underline{x}}\) such that

Then, \({\mathcal {C}}_{\alpha }({\underline{x}})\) is said to be a \(100(1-\alpha )\%\) confidence region for x and \((1-\alpha )\) is called the confidence level. \(\square \)

Thus, a confidence region is random and it corresponds with a certain probability that it covers the parameter vector x. The interpretation of the confidence region is that if independent samples of \({\underline{x}}\) were to be generated many times, the realized confidence regions would then include x, \(100(1-\alpha )\%\) of the time.

A random confidence region \({\mathcal {C}}_{\alpha }({\underline{x}})\) can be constructed as follows (Casella and Berger 2001). Let \({\mathcal {S}}_{\alpha }(x)\subset {\mathbb {R}}^{n}\) be an x-dependent set with the property that

Then, the random set

is a \(100(1-\alpha )\%\) confidence region for x, satisfying (11). With this definition in mind, we refer, in the following, to \({\mathcal {S}}_{\alpha }(x)\) also as confidence region. For every realization of \({\underline{x}}\), the corresponding realization of (13) gives all values of \(\xi \in {\mathbb {R}}^{n}\) for which \({\mathcal {S}}_{\alpha }(\xi )\) covers that realization of \({\underline{x}}\). To compute the confidence region (13) for a given confidence level or the other way round, one needs to make use of (12). The probability \({\mathsf {P}}\left( {\underline{x}}\in {\mathcal {S}}_{\alpha }(x)\right) \) in this equation is, in general, dependent on the unknown x through the PDF of \({\underline{x}}\) and \({\mathcal {S}}_{\alpha }(x)\). The following result specifies the conditions under which this probability becomes independent of x.

Lemma 1

Let \({\underline{x}}\in {\mathbb {R}}^{n}\) be a random vector of which the PDF, denoted by \(f_{{\underline{x}}}(\xi |x)\), is dependent on the unknown parameter vector \(x\in {\mathbb {R}}^{n}\), and let \({\mathcal {S}}_{\alpha }(x)\subset {\mathbb {R}}^{n}\) be an x-dependent set. Then, \({\mathsf {P}}\left( {\underline{x}}\in {\mathcal {S}}_{\alpha }(x)\right) \) is independent of x if

-

(i)

\({\mathcal {S}}_{\alpha }(x)\) is translational invariant, i.e., \({\mathcal {S}}_{\alpha }(x+\gamma )={\mathcal {S}}_{\alpha }(x)+\gamma \) for any \(x,\gamma \in {\mathbb {R}}^{n}\) where \({\mathcal {S}}_{\alpha }(x)+\gamma \) is a set of vectors obtained by translating all the vectors inside \({\mathcal {S}}_{\alpha }(x)\) over \(\gamma \);

and

-

(ii)

\(f_{{\underline{x}}}(\xi |x)\) is translational invariant, i.e., \(f_{{\underline{x}}}(\xi +\gamma |x+\gamma )=f_{{\underline{x}}}(\xi |x)\) for any \(\xi ,x,\gamma \in {\mathbb {R}}^{n}\).

Proof

See ‘Appendix.’ \(\square \)

In this contribution, we will consider three different confidence regions as follows.

-

Estimation-only confidence region which is constructed based on the normal distribution of \(\hat{{\underline{x}}}_{0}\) under \({\mathcal {H}}_{0}\) neglecting the impact of testing preceding the estimation process, and is denoted by \({\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)\);

-

Estimation + testing confidence region (Approach I) which has the same shape as the estimation-only one but its size is determined by the PDF of \(\bar{{\underline{x}}}\) under \({\mathcal {H}}_{0}\), and is denoted by \({\mathcal {S}}_{\alpha }^{\mathrm{{I}}}(x)\);

-

Estimation + testing confidence region (Approach II) which is constructed based on the concept of highest density regions where its shape and size are determined by the contours of the PDF of \(\bar{{\underline{x}}}\) under \({\mathcal {H}}_{0}\), and is denoted by \({\mathcal {S}}_{\alpha }^{\mathrm{{II}}}(x)\).

Table 1 provides an overview of these three confidence regions. For a one-dimensional example \(x\in {\mathbb {R}}\), Fig. 2 shows the construction of these confidence regions for the same value of \(\alpha \). Confidence regions in the one-dimensional case are simply intervals referred to as confidence intervals. In each panel, the horizontal border of the green area indicates the corresponding confidence interval.

Construction of the three confidence intervals in Table 1 for the same value of \(\alpha \). [Left] Black graph: the normal PDF of \(\hat{{\underline{x}}}_{0}\); Green area: the \((1-\alpha )\) probability mass over \({\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)\) indicated by the green double arrow. [Middle] Black graph: the PDF of \(\bar{{\underline{x}}}\); Green area: the \((1-\alpha )\) probability mass over \({\mathcal {S}}_{\alpha }^{\mathrm{{I}}}(x)\) indicated by the green double arrow. [Right] Black graph: the PDF of \(\bar{{\underline{x}}}\); Green area: the \((1-\alpha )\) probability mass over \({\mathcal {S}}_{\alpha }^{\mathrm{{II}}}(x)\) indicated by the green double arrows

The estimation-only confidence interval in Fig. 2 [left] is an x-centered interval over which the probability mass of the normal PDF of \(\hat{{\underline{x}}}_{0}\) is \((1-\alpha )\). The estimation \(+\) testing confidence region using Approach I in Fig. 2 [middle] is an x-centered interval over which the probability mass of the PDF of \(\bar{{\underline{x}}}\) is \((1-\alpha )\). It is observed that since the PDF of \(\bar{{\underline{x}}}\) is less peaked around x compared to the PDF of \(\hat{{\underline{x}}}_{0}\), the confidence interval in the middle panel is wider than the one in the left panel. This implies that working with the estimation-only confidence interval when combining estimation with testing provides a too optimistic description of the estimator’s quality. The estimation \(+\) testing confidence interval using Approach II in Fig. 2 [right] consists of three disconnected sub-intervals over which the total probability mass of the PDF of \(\bar{{\underline{x}}}\) is \((1-\alpha )\) and the PDF of \(\bar{{\underline{x}}}\) is larger than a certain value. The reason for getting a disconnected confidence interval is that the PDF of \(\bar{{\underline{x}}}\) is multi-modal; having one major mode at x and two minor modes equally spaced to the left and right of x.

3 Estimation-only confidence region: current practice

Although parameter estimation is often carried out following a statistical testing procedure to select the most likely hypothesis, the approach that is usually followed in practice to evaluate the quality of the resulting estimator, including its confidence region, does not take into account the uncertainty of the testing decisions (Wieser 2004; Devoti et al 2011; Dheenathayalan et al 2016; Huang et al 2019; Niemeier and Tengen 2020). Therefore, in the designing phase, to construct a confidence region for the parameters of interest x under \({\mathcal {H}}_{0}\), the eventual estimator is taken as \(\hat{{\underline{x}}}_{0}\) (cf. 5) rather than \(\bar{{\underline{x}}}\) (cf. 6).

Confidence regions can in principle take many different shapes. For the linear model as in (1), the confidence region is often taken to be of ellipsoidal shape. This is a consequence of \(\hat{{\underline{x}}}_{0}\overset{{\mathcal {H}}_{0}}{\sim }{\mathcal {N}}(x,Q_{{\hat{x}}_{0}{\hat{x}}_{0}})\), and thus the quadratic form \(\Vert \hat{{\underline{x}}}_{0}-x\Vert _{Q_{{\hat{x}}_{0}{\hat{x}}_{0}}}^{2}\) being distributed as a central Chi-square distribution with n degrees of freedom,

The \(100(1-\alpha )\%\) ellipsoidal confidence region for x is then given as

where \(\chi _{1-\alpha }^{2}(n,0)\) is the \((1-\alpha )\) quantile of the central Chi-square distribution with n degrees of freedom. The smaller the \(\alpha \), the larger the confidence region. It will be clear that both the set (15) and the normal distribution \(f_{\underline{{\hat{x}}}_{0}}(\xi |{\mathcal {H}}_{0},x)\) satisfy the conditions of Lemma 1 and that therefore the probability \(\underline{{\hat{x}}}_{0}\) lying in \({\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)\) under \({\mathcal {H}}_{0}\) can be computed without knowing x.

Example 1

(Three-point network with angle measurements) Consider a two-dimensional (2-D) terrestrial survey network of three points forming an isosceles triangle as shown in Fig. 3. At each point, the internal angle is measured, yielding three measurements in total (\(m = 3\)). In the following, we use \(\mathrm{E}_{i}\) and \(\mathrm{N}_{i}\) to denote the East and North coordinates of point i, respectively, \(d_{ij}\) for the observed-minus-computed direction measurement made from point i to point j, \(l_{ij}\) for the computed distance between point i and point j, \(\eta _{i}\in {\mathbb {R}}^{2}\) for the coordinate increment vector of point i, and \(v_{ij}=\frac{1}{l_{ij}}[\mathrm{N}_{j}-\mathrm{N}_{i}~,~\mathrm{E}_{i}-\mathrm{E}_{j}]^{T}\in {\mathbb {R}}^{2}\) for the unit vector orthogonal to the unit direction vector from point i to point j. The general linearized observation equation of the angle measured from point i to points j and k for the 2-D network under \({\mathcal {H}}_{0}\) reads

with \(a_{jik}=d_{ik}-d_{ij}\). For the 2-D network in Fig. 3, we take points 1 and 2 as S-basis (Baarda 1973; Teunissen 1985), and thus the total number of unknowns is \(n = 2\), namely two unknown coordinate increments of point 3 (\(x=[x_{\mathrm{E}},~x_{\mathrm{N}}]^{T}=\eta _{3}\in {\mathbb {R}}^{2}\)). With \(m=3\) and \(n=2\), the redundancy is \(r = 1\). The angle measurements are assumed to be uncorrelated and normally distributed. Figure 4 shows the confidence ellipse \({\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)\) in (15) for \(\alpha =\) 0.1 assuming that the standard deviations of direction measurements are the same and equal to 5 seconds of arc.

Illustration of the \(90\%\) confidence ellipse \({\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)\) in (15) for the model specified in Example 1

To understand the shape and orientation of the ellipse in Fig. 4, driven by \(Q_{{\hat{x}}_{0}{\hat{x}}_{0}}\), let us first assume that the network points form an equilateral triangle. In this case, due to the symmetry of the network configuration and measuring all the three internal angles with the same precision, \({\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)\) would be of circular shape. Now, if one moves point 3 further away from the other two points in the North direction, the network configuration changes to an isosceles triangle like that in Fig. 3. In this case, the observational model becomes more sensitive to the movement of point 3 in the East direction than in the North direction. Thus, the previous circular confidence region would become an ellipse with its minor axis parallel to the East direction, as shown in Fig. 4. \(\square \)

4 Estimation \(+\) testing confidence region: Approach I

When estimation is combined with testing, using the probabilistic properties of \(\hat{{\underline{x}}}_{0}\) (cf. 5) to compute confidence region/level is statistically incorrect as it does not do justice to the statistical testing that preceded the estimation of the parameters x. Thus for a proper computation of confidence region/level, one needs to work with \(\bar{{\underline{x}}}\) (cf. 6). In this section, we consider the construction of confidence region using Approach I with the geodetic-common choice of ellipsoidal region, but based on the PDF of \(\bar{{\underline{x}}}\), as

where \(s_{\alpha }^{\mathrm{{I}}}\) is chosen so as to satisfy

The smaller the \(\alpha \), the larger the \(s_{\alpha }^{\mathrm{{I}}}\). The confidence region in (17) is an ellipsoidal region of the same shape and orientation as that of (15), be it that the size of (17) is determined by the PDF of \(\bar{{\underline{x}}}\) through (18). For the PDF \(f_{\bar{{\underline{x}}}}(\xi |{\mathcal {H}}_{0},x)\), we have the following result.

Lemma 2

The PDF of the DIA-estimator \(\underline{{\bar{x}}}\) under \({\mathcal {H}}_{0}\) (cf. 7) is translational invariant, i.e.,

Proof

See ‘Appendix.’ \(\square \)

With the above result and that (17) is a translational-invariant set, the probability in (18) can be computed without knowing x according to Lemma 1. This computation is, however, not trivial due to the complexity of \(f_{\bar{{\underline{x}}}}(\xi |{\mathcal {H}}_{0},x)\). We will come back to this in Sect. 6 where we present the required numerical algorithm.

Here we now discuss the consequences if one, because of convenience, would stick to using the estimation-only ellipsoidal confidence region as in (15). Then, since the probability is now driven by the PDF of \(\bar{{\underline{x}}}\), we have the following result.

Theorem 1

(Confidence levels of estimation-only versus estimation \(+\) testing under \({\mathcal {H}}_{0}\)) Let \({\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)\) be the ellipsoidal confidence region as in (15). Then, we have

Proof

See ‘Appendix.’ \(\square \)

The above theorem shows that \({\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)\) has poorer coverage for the DIA-estimator \(\bar{{\underline{x}}}\) than \((1-\alpha )\), and thus needs to be scaled up in order to contain the required probability of \((1-\alpha )\), which implies that \(s_{\alpha }^{\mathrm{{I}}}\ge \chi ^{2}_{1-\alpha }(n,0)\). Therefore, neglecting the link between estimation and testing and taking \({\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)\) as if it would be the \(100(1-\alpha )\%\) confidence region of \(\bar{{\underline{x}}}\) under \({\mathcal {H}}_{0}\), will provide a too optimistic description of the DIA-estimator’s quality, i.e., the region is then shown too small. With (7), the difference between the actual confidence level \({\mathsf {P}}(\bar{{\underline{x}}}\in {\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)|{\mathcal {H}}_{0})\) and the desired confidence level \((1-\alpha )\) reads

which would approach zero under any of the conditions below

-

\(f_{\hat{{\underline{x}}}_{0}}(\xi |{\mathcal {H}}_{0},x)-f_{\hat{{\underline{x}}}_{i}|{\underline{t}}\in {\mathcal {P}}_{i}}(\xi |{\underline{t}}\in {\mathcal {P}}_{i},{\mathcal {H}}_{0},x)\rightarrow 0\)

-

\({\mathsf {P}}({\underline{t}}\in {{\mathcal {P}}_{i\ne 0}}|{\mathcal {H}}_{0})\rightarrow 0\)

-

\(\alpha \rightarrow 0\)

The difference between \(f_{\hat{{\underline{x}}}_{0}}(\xi | {\mathcal {H}}_{0},x)\) and \(f_{\hat{{\underline{x}}}_{i}|{\underline{t}}\in {\mathcal {P}}_{i}}(\xi |{\underline{t}}\in {\mathcal {P}}_{i},{\mathcal {H}}_{0},x)\) would vanish, i.e., \(f_{\hat{{\underline{x}}}_{0}}(\xi | {\mathcal {H}}_{0},x)=f_{\hat{{\underline{x}}}_{i}|{\underline{t}}\in {\mathcal {P}}_{i}}(\xi |{\underline{t}}\in {\mathcal {P}}_{i},{\mathcal {H}}_{0},x)\), if the correlation between \(\hat{{\underline{x}}}_{i}\) and \({\underline{t}}\) would be zero. One can get zero correlation between \(\hat{{\underline{x}}}_{i}\) and \({\underline{t}}\) if \(L_{i}=0\) (cf. 5), which can happen if \(A^{T}Q_{yy}^{-1}C_{i}=0\) (\(C_{i}\) being \(Q_{yy}\)-orthogonal to the columns of A, zero influential bias (Teunissen 2017)). In addition, the correlation between \(\hat{{\underline{x}}}_{i}\) and \({\underline{t}}\) would get close to zero if \(L_{i}\,{\underline{t}}\) becomes far more precise than \(\hat{{\underline{x}}}_{0}\), i.e., \(Q_{{\hat{x}}_{0}{\hat{x}}_{0}}\gg L_{i}Q_{tt}L_{i}^{T}\), and/or \(L_{i}\rightarrow 0\) and/or \(Q_{tt}\rightarrow 0\). Given that \({\underline{t}}\overset{{\mathcal {H}}_{0}}{\sim }{\mathcal {N}}(0,Q_{tt})\), the probability \({\mathsf {P}}({\underline{t}}\in {{\mathcal {P}}_{i}}|{\mathcal {H}}_{0})\), for \(i=1,\ldots , \kappa \), would approach zero if \(f_{{\underline{t}}}(\tau |{\mathcal {H}}_{0})\) becomes highly peaked at zero, i.e., \(Q_{tt}\rightarrow 0\), and/or if regions \({\mathcal {P}}_{i\ne 0}\) shrink, i.e., \({\mathcal {P}}_{0}\rightarrow {\mathbb {R}}^{r}\) (\({\mathsf {P}}_{\mathrm{FA}}\rightarrow 0\)). Table 2 summarizes conditions under which \({\mathsf {P}}(\bar{{\underline{x}}}\in {\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)|{\mathcal {H}}_{0})\) gets closer to \((1-\alpha )\).

Despite Theorem 1, one might still be willing to work with \({\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)\), due to its lower computational burden compared to \({\mathcal {S}}_{\alpha }^{\mathrm{{I}}}(x)\), if the difference between \({\mathsf {P}}(\bar{{\underline{x}}}\in {\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)|{\mathcal {H}}_{0})\) and \((1-\alpha )\) is not deemed significant based on the requirements of the application at hand. The computation of the exact confidence level \({\mathsf {P}}(\bar{{\underline{x}}}\in {\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)|{\mathcal {H}}_{0})\) requires integrating \(f_{\bar{{\underline{x}}}}(\xi |{\mathcal {H}}_{0},x)\) over \({\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)\), which is not trivial due to the complexity of the integrand. The following result however presents an easy-to-compute upper bound for the difference between the actual and desired confidence levels.

Theorem 2

(Upper bound for the actual and desired confidence levels difference) The difference between the desired confidence level \((1-\alpha )\) and the actual confidence level \({\mathsf {P}}(\bar{{\underline{x}}}\in {\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)|{\mathcal {H}}_{0})\) can be bounded from above as

Proof

See ‘Appendix.’ \(\square \)

With the above easy-to-compute upper bound, one can decide whether or not to use the region \({\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)\) as the \(100(1-\alpha )\%\) confidence region.

Example 2

Here we graphically show, with the PDFs of a simple 1-dimensional example having one alternative hypothesis (\(\kappa =1\)), the effect of each of the factors in Table 2 on the difference between \({\mathsf {P}}(\bar{{\underline{x}}}\in {\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)|{\mathcal {H}}_{0})\) and \((1-{\alpha })\). Suppose that the \({\mathcal {H}}_{0}\)-model in (1) contains only one unknown parameter (\(n=1\)) and that its redundancy is one (\(r=1\)), i.e., \(x\in {\mathbb {R}}\) and \(t\in {\mathbb {R}}\). The canonical form of such a model, applying the Tienstra-transformation \({\mathcal {T}}\) to the normally distributed vector of observables \({\underline{y}}\), reads (Teunissen 2018)

where \(\sigma _{{\hat{x}}_{0}}\) and \(\sigma _{t}\) are the standard deviations of \(\hat{{\underline{x}}}_{0}\) and \({\underline{t}}\), respectively. As \(r=1\), we can only consider one single alternative hypothesis, say \({\mathcal {H}}_{a}\), and thus \(i\in \{0,a\}\). If more than one alternative is considered, they will not be separable from each other. Under the null and alternative hypotheses, we have

for some \(b_{a}\in {\mathbb {R}}{\setminus }\{0\}\) and \(L_{a}\in {\mathbb {R}}\) (cf. 5). To test \({\mathcal {H}}_{0}\) against \({\mathcal {H}}_{a}\), we use the following misclosure space partitioning

[Left] Illustration of the DIA-estimator PDF under \({\mathcal {H}}_{0}\) and its components (cf. 10) for the models and conditions specified in (23) to (25). [Right] Illustration of the confidence level (CL) difference \((1-\alpha )-{\mathsf {P}}(\bar{{\underline{x}}}\in {\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)|{\mathcal {H}}_{0})\) in gray and its upper bound (22) in red as a function of CI\(=\sqrt{\chi ^{2}_{1-\alpha }(1,0)}\,\sigma _{{\hat{x}}_{0}}\) (26)

In the simple model as given in (23), there is a scalar parameter of interest \(x\in {\mathbb {R}}\), and thus \({\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)\) in (15) reduces to the following interval

Figure 5 [left] shows the graphs of \(f_{\bar{{\underline{x}}}}(\xi |{\mathcal {H}}_{0},x)\) and its components (10), i.e., \(f_{\hat{{\underline{x}}}_{0}}(\xi |{\mathcal {H}}_{0},x)\) and \(f_{\hat{{\underline{x}}}_{a}|\mathrm{FA}}(\xi |\mathrm{FA},x)\), for different values of \(\sigma _{\hat{x}_{0}}\), \(\sigma _{t}\), \(L_{a}\) and \({\mathsf {P}}_{\mathrm{FA}}\). Figure 5 [right] shows the corresponding graphs of the confidence level difference \((1-\alpha )-{\mathsf {P}}(\bar{{\underline{x}}}\in {\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)|{\mathcal {H}}_{0})\) and its upper bound (22) as a function of CI\(=\sqrt{\chi ^{2}_{1-\alpha }(1,0)}\,\sigma _{{\hat{x}}_{0}}\) for \(0.001\le \mathrm{CI}\le 10\). In accordance with Table 2, it is observed that the difference between \({\mathsf {P}}(\bar{{\underline{x}}}\in {\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)|{\mathcal {H}}_{0})\) and \((1-\alpha )\) gets smaller for the following situations: \(\sigma _{{\hat{x}}_{0}}\uparrow \) (first vs. second row), \(\sigma _{t}\downarrow \) (first vs. third row), \(L_{a}\downarrow \) (first vs. fourth row) and \({\mathsf {P}}_{\mathrm{FA}}\downarrow \) (first vs. fifth row).

We note that increasing CI for a given \(\sigma _{{\hat{x}}_{0}}\) is equivalent to decreasing \(\alpha \) (cf. 26). As the left panels in Fig. 5 show, \(f_{\hat{{\underline{x}}}_{0}}(\xi |{\mathcal {H}}_{0},x)\), in blue, is more peaked than \(f_{\bar{{\underline{x}}}}(\xi |{\mathcal {H}}_{0},x)\), in magenta, around x, implying that \(1-\alpha ={\mathsf {P}}(\hat{{\underline{x}}}_{0}\in {\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)|{\mathcal {H}}_{0})\) increases more rapidly than \({\mathsf {P}}(\bar{{\underline{x}}}\in {\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)|{\mathcal {H}}_{0})\) as CI increases from 0.001. In addition, we have

Therefore, as the actual confidence level \({\mathsf {P}}(\bar{{\underline{x}}}\in {\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)|{\mathcal {H}}_{0})\) and the desired confidence level \((1-\alpha )\) are continuous functions of CI, the difference \((1-\alpha )-{\mathsf {P}}(\bar{{\underline{x}}}\in {\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)|{\mathcal {H}}_{0})\) will be an increasing and then decreasing function of CI. This behavior can clearly be recognized in all the panels. \(\square \)

5 Estimation \(+\) testing confidence region: Approach II

In this section, we take Approach II to construct confidence region by using the contours of the PDF \(f_{\bar{{\underline{x}}}}(\xi |{\mathcal {H}}_{0},x)\). For a given \({\mathcal {H}}_{0}\)-model and a misclosure space partitioning, the value of this PDF depends on \(\xi \) and x. We make use of the concept of highest density regions and define the \(100(1-\alpha )\%\) confidence region as the one which has the smallest volume among all the regions which cover the probability \((1-\alpha )\) for the DIA-estimator. Such region can be shown to take the following form (Hyndman 1996; Teunissen 2007)

where \(s_{\alpha }^{\mathrm{{II}}}\) is chosen such that

The smaller the \(\alpha \), the smaller the \(s_{\alpha }^{\mathrm{{II}}}\). The confidence region in (28) is driven by the contours of the DIA-estimator’s PDF. Therefore, Approach II is more informative than Approach I since it shows through the shape of the confidence region where in space the solution is ‘weak’ and where ‘strong’. In case \({\bar{{\underline{x}}}}\) has an elliptically contoured distribution, e.g., normal distribution, then the confidence region \({\mathcal {S}}_{\alpha }^{\mathrm{{II}}}(x)\) based on Approach II (28) would be identical to the confidence region \({\mathcal {S}}_{\alpha }^{\mathrm{{I}}}(x)\) based on Approach I (cf. 17).

Special case (detection only): Let the integrated estimation \(+\) testing procedure provide \(\hat{{\underline{x}}}_{0}\) as the estimate of x if \({\mathcal {H}}_{0}\) is accepted, while declare the solution for x to be unavailable if \({\mathcal {H}}_{0}\) is rejected. Hence, in this case the testing is confined to detection only and no identification is attempted. The DIA-estimator for this case is given by

To determine the confidence in \(\bar{{\underline{x}}}\), one should note that \(\bar{{\underline{x}}}\) solution is only available when \({\underline{t}}\in {\mathcal {P}}_{0}\). Thus we compute the confidence region conditioned on \({\underline{t}}\in {\mathcal {P}}_{0}\). As \(\bar{{\underline{x}}}|({\underline{t}}\in {\mathcal {P}}_{0})=\hat{{\underline{x}}}_{0}|({\underline{t}}\in {\mathcal {P}}_{0})\) and \(\hat{{\underline{x}}}_{0}\) is normally distributed and independent from \({\underline{t}}\), i.e., \(\hat{{\underline{x}}}_{0}|({\underline{t}}\in {\mathcal {P}}_{0})=\hat{{\underline{x}}}_{0}\), the confidence regions (17) and (28) should be formed based on the normal PDF of \(\hat{{\underline{x}}}_{0}\). Thus, these two confidence regions become identical and the same as \({\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)\) in (15). Therefore, if the testing procedure is confined to validating the null hypothesis only, there would be no difference between the estimation-only and estimation \(+\) testing confidence regions under \({\mathcal {H}}_{0}\), for a given \(\alpha \).

Determining the confidence region (28) requires the computation of \(s_{\alpha }^{\mathrm{{II}}}\) which can be done using (29). It was already shown in Lemma 2 that the PDF \(f_{\underline{{\bar{x}}}}(\xi |{\mathcal {H}}_{0},x)\) is translational invariant. Therefore, the probability in (29) will become independent of the unknown x if \({\mathcal {S}}_{\alpha }^{\mathrm{{II}}}(x)\) satisfies the condition (i) in Lemma 1, i.e., \({\mathcal {S}}_{\alpha }^{\mathrm{{II}}}(x)\) should be translational invariant. We have the following result.

Theorem 3

The confidence region \({\mathcal {S}}_{\alpha }^{\mathrm{{II}}}(x)\) in (28) is translational invariant iff \(f_{\underline{{\bar{x}}}}(\xi |{\mathcal {H}}_{0},x)\) is translational invariant.

Proof

See ‘Appendix.’ \(\square \)

As the above theorem shows, the confidence region (28) inherits the translational-invariance property from that of the PDF \(f_{\underline{{\bar{x}}}}(\xi |{\mathcal {H}}_{0},x)\). Therefore, the probability in (29) is independent of the unknown x.

Example 3

(Continuation of Example 1) We now add in parallel to the three angle measurements, one length ratio measurement (Baarda 1967) taken at point 1, thus increasing the total number of measurements by one to \(m = 4\). In the following, we use \(\rho _{ij}\) to denote the observed-minus-computed pseudo-distance measurement made from point i to point j, and \(u_{ij}=\frac{1}{l_{ij}}[\mathrm{E}_{j}-\mathrm{E}_{i}~,~\mathrm{N}_{j}-\mathrm{N}_{i}]^{T}\in {\mathbb {R}}^{2}\) for the unit direction vector from point i to point j. The general linearized observation equation of the length ratio measurement taken from point i to points j and k for the 2-D network under \({\mathcal {H}}_{0}\) reads

with \(r_{jik}=\frac{1}{l_{ik}}\,\rho _{ik}-\frac{1}{l_{ij}}\,\rho _{ij}\). As the total number of unknowns is \(n = 2\), the redundancy is \(r = 2\). The length ratio measurement is assumed to be normally distributed and uncorrelated with the angle measurements.

Illustration of \(100(1-\alpha )\%\) confidence regions of estimation-only and estimation \(+\) testing for the model specified in Example 3 and for different values of \(\alpha \). In each panel, green area indicates the region (28), red ellipse indicates the boundary of (17), and blue ellipse indicates the boundary of (15)

As alternative hypotheses, we consider those describing outliers in individual observations. Here we restrict ourselves to the case of one outlier at a time. In that case, there are as many alternative hypotheses as there are observations, i.e., \(\kappa =m=4\), with \(C_{i}=c_{i}\in {\mathbb {R}}^{4}\) (1), for \(i=1,\ldots ,4\), where the \(c_{i}\)-vector takes the form of a canonical unit vector having 1 as \(i^{th}\) entry and zeros elsewhere. To test the hypotheses at hand, we first use overall model test to check the validity of \({\mathcal {H}}_{0}\) and then employ datasnooping to screen each individual observation for the presence of an outlier (Baarda 1968; Kok 1984). The corresponding misclosure space partitioning is given by

where \(w_{i}\) is Baarda’s w-test statistic computed as (Baarda 1967; Teunissen 2006)

Figure 6 shows the three confidence regions in (15), (17) and (28), see Table 1. The green area shows the region \({\mathcal {S}}_{\alpha }^{\mathrm{{II}}}(x)\), while the blue and red ellipses show the boundary of the regions \({\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)\) and \({\mathcal {S}}_{\alpha }^{\mathrm{{I}}}(x)\), respectively. The results are illustrated for \(\alpha =\) 0.1, 0.05, 0.02 and 0.01 assuming that the standard deviations of direction and pseudo-distance measurements are 5 seconds of arc and 3 mm, respectively, and \({\mathsf {P}}_{\mathrm{FA}}=10^{-1}\). According to (20), the blue ellipse does not cover the probability \((1-\alpha )\) for the DIA-estimator. Figure 7 elaborates more on this by showing positive values for the difference \((1-\alpha )-{\mathsf {P}}(\bar{{\underline{x}}}\in {\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)|{\mathcal {H}}_{0})\) as a function of \(\alpha \). Thus the blue ellipse should be made larger in order to contain the required probability of \((1-\alpha )\). This explains why the red ellipse always encompasses the blue one.

Adding the length ratio measurement from point 1 to the previous angular measurements increases the sensitivity of the model to the movement of point 3 along the triangle side connecting point 1 to point 3. Therefore, the ellipse shown in Fig. 4 would shrink and its orientation would change depending on the length ratio precision with respect to the angular measurements. In the current example, the length ratio measurement is around two times more precise than the angle measurements. In the extreme case, if the length ratio is known with zero standard deviation, the confidence ellipse will have its minor axis parallel to the triangle side connecting point 1 to point 3. As this length ratio measurement gets less precise, the confidence ellipse’s minor axis moves further toward the East direction. This explains the shape and orientation of the ellipses in Fig. 6 as compared to those of the ellipse shown in Fig. 4.

It is observed in Fig. 6 that the green region \({\mathcal {S}}_{\alpha }^{\mathrm{{II}}}(x)\) can significantly differ in shape from the ellipsoidal confidence region which is conventionally used. Depending on the \(\alpha \) value, \({\mathcal {S}}_{\alpha }^{\mathrm{{II}}}(x)\) can be a non-convex and even disconnected region, which can be explained by the shape of the PDF of \(\bar{{\underline{x}}}\). Figure 8 (top-left) shows the contour plot of this PDF, i.e., \(f_{\bar{{\underline{x}}}}(\xi |{\mathcal {H}}_{0},x)\), which is a multi-modal distribution with three modes (peaks); one major mode at \(x=[x_{\mathrm{E}},~x_{\mathrm{N}}]^{T}\) with a PDF value larger than 2000, and two minor modes approximately North and South of \(x=[x_{\mathrm{E}},~x_{\mathrm{N}}]^{T}\) with the same PDF values between 20 and 50. According to the contour plot of \(f_{\bar{{\underline{x}}}}(\xi |{\mathcal {H}}_{0},x)\), for \(\alpha \) values corresponding with \(s_{\alpha }^{\mathrm{{II}}}>50\), the confidence region (28) is a single contiguous area. As \(s_{\alpha }^{\mathrm{{II}}}\) gets smaller than 50, the two minor modes of \(f_{\bar{{\underline{x}}}}(\xi |{\mathcal {H}}_{0},x)\) start contributing to the construction of the confidence region. As a result, (28) becomes a non-convex region which, for some values of \(s_{\alpha }^{\mathrm{{II}}}\) within the range \(10<s_{\alpha }^{\mathrm{{II}}}<50\), can even consist of three disconnected sub-regions.

The multi-modality of \(f_{\bar{{\underline{x}}}}(\xi |{\mathcal {H}}_{0},x)\) can also explain why the red ellipse is significantly larger than the blue one, i.e., \(s_{\alpha }^{\mathrm{{I}}}\) is significantly larger than \(\chi ^{2}_{1-\alpha }(2,0)\), for small values of \(\alpha \). Although the majority of the probability mass for the DIA-estimator under \({\mathcal {H}}_{0}\) is located around x, some probability mass can still be located far from x around the two minor modes of \(f_{\bar{{\underline{x}}}}(\xi |{\mathcal {H}}_{0},x)\). Therefore, in order for the red ellipse to cover large probabilities, equivalent to having small \(\alpha \) values, one needs to set large values for \(s_{\alpha }^{\mathrm{{I}}}\) such that the probability mass around the minor modes is also captured by the red ellipse.

The shape of \(f_{\bar{{\underline{x}}}}(\xi |{\mathcal {H}}_{0},x)\) is driven by its constituent components (7), i.e., \(f_{\hat{{\underline{x}}}_{0}}(\xi |{\mathcal {H}}_{0},x)\) and \(f_{\bar{{\underline{x}}}|{\underline{t}}\in {\mathcal {P}}_{i}}(\xi |{\underline{t}}\in {\mathcal {P}}_{i},{\mathcal {H}}_{0},x)\) for \(i=1,\ldots ,4\), which are all illustrated in Fig. 8. The contribution of \(f_{\hat{{\underline{x}}}_{0}}(\xi |{\mathcal {H}}_{0},x)\) and \(f_{\bar{{\underline{x}}}|{\underline{t}}\in {\mathcal {P}}_{i}}(\xi |{\underline{t}}\in {\mathcal {P}}_{i},{\mathcal {H}}_{0},x)\), for \(i=1,\ldots ,4\), to the PDF of \(\bar{{\underline{x}}}\) under \({\mathcal {H}}_{0}\) is driven by the respective probabilities \({\mathsf {P}}({\underline{t}}\in {\mathcal {P}}_{0}|{\mathcal {H}}_{0})=1-{\mathsf {P}}_{\mathrm{FA}}\) and \({\mathsf {P}}({\underline{t}}\in {\mathcal {P}}_{i}|{\mathcal {H}}_{0})\). As \({\mathsf {P}}_{\mathrm{FA}}=10^{-1}\) and \(\sum _{i=1}^{4}{\mathsf {P}}({\underline{t}}\in {\mathcal {P}}_{i}|{\mathcal {H}}_{0})={\mathsf {P}}_{\mathrm{FA}}\), then \(f_{\hat{{\underline{x}}}_{0}}(\xi |{\mathcal {H}}_{0},x)\) has the largest contribution. This explains the similarity of the shapes of the three contour lines 200, 1000 and 2000 (the three closest lines to \(x=[x_{\mathrm{E}},~x_{\mathrm{N}}]^{T}\)) between the top-left and top-middle panels. The multi-modality of \(f_{\bar{{\underline{x}}}}(\xi |{\mathcal {H}}_{0},x)\) results from the same property of the conditional distribution \(f_{\bar{{\underline{x}}}|{\underline{t}}\in {\mathcal {P}}_{4}}(\xi |{\underline{t}}\in {\mathcal {P}}_{4},{\mathcal {H}}_{0},x)\) shown in the bottom-right panel. \(\square \)

6 On the computation of DIA-estimator’s confidence level and confidence region under \({\mathcal {H}}_{0}\)

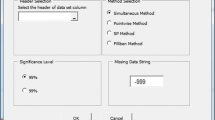

In this section, we present a numerical algorithm for computing the DIA-estimator’s confidence level under \({\mathcal {H}}_{0}\) corresponding with any arbitrary translational-invariant confidence region. In addition, numerical algorithms are also presented for computing the DIA-estimator’s confidence region under \({\mathcal {H}}_{0}\) based on Approach I and Approach II. An overview of these algorithms is given in Fig. 9.

6.1 Confidence level

Let \({\mathcal {S}}_{\alpha }(x)\subset {\mathbb {R}}^{n}\) be a translational-invariant confidence region satisfying the condition (i) in Lemma 1. The computation of the confidence level \({\mathsf {P}}(\underline{{\bar{x}}}\in {\mathcal {S}}_{\alpha }(x)|{\mathcal {H}}_{0})\) requires integrating the non-normal PDF \(f_{\underline{{\bar{x}}}}(\xi |{\mathcal {H}}_{0},x)\) over the region \({\mathcal {S}}_{\alpha }(x)\). This is not an easy task due to the complexity of the PDF of \(\underline{{\bar{x}}}\), see, e.g., Fig. 8. We therefore show how the confidence level can be computed by means of Monte Carlo simulation. In doing so, we make use of the fact that a probability can always be written as an expectation, and an expectation can be approximated by taking the average of a sufficient number of samples from the distribution. The probability \({\mathsf {P}}(\underline{{\bar{x}}}\in {\mathcal {S}}_{\alpha }(x)|{\mathcal {H}}_{0})\) can be written as

with the indicator function

Note, the above probability is independent of the unknown x as \({\mathcal {S}}_{\alpha }(x)\) and \(f_{\underline{{\bar{x}}}}(\xi |{\mathcal {H}}_{0},x)\) satisfy the conditions (i) and (ii) in Lemma 1, respectively. Therefore, one may choose for the unknown x any n-vector for the computation of (34). Let \({\bar{x}}^{(s)} \in {\mathbb {R}}^{n}\), \(s=1, \ldots , N\), be sample vectors independently drawn from the distribution \(f_{\underline{{\bar{x}}}}(\xi |{\mathcal {H}}_{0},x)\). Then, for sufficiently large N, determined by the requirements of the application at hand, the confidence level \({\mathsf {P}}(\underline{{\bar{x}}}\in {\mathcal {S}}_{\alpha }(x)|{\mathcal {H}}_{0})\) can be well approximated by

The simulation of \({\bar{x}}^{(s)}\) under \({\mathcal {H}}_{0}\) can be carried out through the following steps.

-

1.

Simulation of \(y^{(s)}\) under \({\mathcal {H}}_{0}\): To simulate a sample vector \(y^{(s)} \in {\mathbb {R}}^{m}\) from \({\mathcal {N}}(Ax, Q_{yy})\), we first use a random number generator to simulate a sample \(u^{(s)} \in {\mathbb {R}}^{m}\) from the multivariate standard normal distribution \({\mathcal {N}}(0, I_{m})\). Then, we use the Cholesky-factor \(C^{T}\) of the Cholesky-factorization \(Q_{yy}=C^{T}C\), to transform \(u^{(s)}\) to \(C^{T}u^{(s)}\), which now can be considered to be a sample from \({\mathcal {N}}(0, Q_{yy})\). Then, we shift \(C^{T}u^{(s)}\) to \(y^{(s)}=C^{T}u^{(s)}+Ax\), to get the asked for sample from \({\mathcal {N}}(Ax, Q_{yy})\). Note that A, \(Q_{yy}\) and x are needed to generate \(y^{(s)}\). The matrices A and \(Q_{yy}\) are known, as they define the assumed model. Although vector \(x \in {\mathbb {R}}^{n}\) is not known, one can, fortunately, take any n-vector as choice for x.

-

2.

Computation of \({\hat{x}}_{0}^{(s)}\) and \(t^{(s)}\): Application of the Tienstra-transformation \({\mathcal {T}} = [A^{+ T}, B]^{T}\in {\mathbb {R}}^{m \times m}\) to \(y^{(s)}\) gives

$$\begin{aligned} \left[ \begin{array}{c} {\hat{x}}_{0}^{(s)} \\ t^{(s)} \end{array} \right] = {\mathcal {T}} y^{(s)} \end{aligned}$$(37)with \({\hat{x}}_{0}^{(s)}\) and \(t^{(s)}\) being the samples of the BLUE of x and the misclosure under \({\mathcal {H}}_{0}\)-model, respectively.

-

3.

Computation of \({\bar{x}}^{(s)}\): At this stage it must be known which testing procedure is chosen to select the most likely hypothesis. With the testing procedure known and the misclosure sample \(t^{(s)}\), one of the hypotheses is selected. If \({\mathcal {H}}_{0}\) is selected, then \({\hat{x}}_{0}^{(s)}\) is the asked for sample of \({\bar{x}}\), i.e., \({\bar{x}}^{(s)}={\hat{x}}_{0}^{(s)}\). If \({\mathcal {H}}_{i\ne 0}\) is selected, we first compute the sample of the bias-estimator as \({\hat{b}}_{i}^{(s)}=C_{t_{i}}^{+}t^{(s)}\) and then \({\hat{x}}_{i}^{(s)}={\hat{x}}_{0}^{(s)}-A^{+}C_{i}{\hat{b}}_{i}^{(s)}\) which is the asked for sample of \({\bar{x}}\), i.e., \({\bar{x}}^{(s)}={\hat{x}}_{i}^{(s)}\).

With the above three steps repeated N times, one obtains the sample set \({\bar{x}}^{(s)}\), \(s=1, \ldots , N\), as needed for the computation of (36).

6.2 Confidence region: Approach I

In order to determine the confidence region \({\mathcal {S}}_{\alpha }^{\mathrm{{I}}}(x)\) in (17) for a chosen value of \(\alpha \), one needs to find the threshold value \(s_{\alpha }^{\mathrm{{I}}}\). With (18), this threshold value can be computed by inverting

which is not trivial due to the complexity of the PDF of \(\bar{{\underline{x}}}\). We therefore show how \(s_{\alpha }^{\mathrm{{I}}}\) can be computed for a chosen value of \(\alpha \) by means of Monte Carlo simulation and density quantile approach (Hyndman 1996). We first define the following random variable

Therefore \(s_{\alpha }^{\mathrm{{I}}}\) should satisfy

implying that \(-s_{\alpha }^{\mathrm{{I}}}\) is the \(\alpha \) quantile of \({\underline{\theta }}\). Therefore, \(-s_{\alpha }^{\mathrm{{I}}}\) can be approximated as \(\alpha \) sample quantile from a set of independent samples from the distribution of \({\underline{\theta }}\). Let \({\bar{x}}^{(s)} \in {\mathbb {R}}^{n}\), \(s=1, \ldots , N\), be sample vectors independently drawn from the distribution \(f_{\underline{{\bar{x}}}}(\xi |{\mathcal {H}}_{0},x)\). Then, \(\theta ^{(s)}= -\Vert \bar{{x}}^{(s)}-x\Vert _{Q_{{{\hat{x}}_{0}}{{\hat{x}}_{0}}}}^{2}\in {\mathbb {R}}\), \(s=1, \ldots , N\) are independent samples from the distribution of \({\underline{\theta }}\) under \({\mathcal {H}}_{0}\). After sorting the samples in an ascending order, the first sample with an index larger than \(\alpha N\) gives an approximation of the \(\alpha \) quantile of \({\underline{\theta }}\) (Hyndman 1996). An approximation of \(s_{\alpha }^{\mathrm{{I}}}\) is then obtained by changing the sign of the mentioned sample.

With the above procedure, one needs to take the following steps to find an approximation of \(s_{\alpha }^{\mathrm{{I}}}\) for a given \(\alpha \).

-

1.

Generate N independent samples \({\bar{x}}^{(1)},{\bar{x}}^{(2)},\ldots ,{\bar{x}}^{(N)}\) from the distribution \(f_{\underline{{\bar{x}}}}(\xi |{\mathcal {H}}_{0},x)\) by repeating the three simulation steps explained in Sect. 6.1, N times, taking any n-vector as choice for x.

-

2.

For each sample \({\bar{x}}^{(s)}\), for \(s=1, \ldots , N\), compute the corresponding sample \(\theta ^{(s)}\) as

$$\begin{aligned} {\theta }^{(s)}=-\Vert \bar{{x}}^{(s)}-x\Vert _{Q_{{{\hat{x}}_{0}}{{\hat{x}}_{0}}}}^{2} \end{aligned}$$(41) -

3.

Sort the samples \(\theta ^{(1)},\theta ^{(2)},\ldots ,\theta ^{(N)}\) in an ascending order. Let \(\theta _{1}\le \theta _{2}\le ,\ldots ,\le \theta _{N}\) be the sorted values of the samples \(\theta ^{(1)},\theta ^{(2)},\ldots ,\theta ^{(N)}\).

-

4.

An approximation of \(s_{\alpha }^{\mathrm{{I}}}\) is then given by

$$\begin{aligned} {\hat{s}}_{\alpha }^{\mathrm{I}}=-\theta _{\lceil N\alpha \rceil } \end{aligned}$$(42)where \(\lceil \cdot \rceil \) is the ceiling function which rounds numbers up.

6.3 Confidence region: Approach II

In order to determine the confidence region \({\mathcal {S}}_{\alpha }^{\mathrm{{II}}}(x)\) in (28) for a chosen value of \(\alpha \), one first needs to find the threshold value \(s_{\alpha }^{\mathrm{{II}}}\). With (29), this threshold value can be computed by inverting

which is again not trivial due to the complexity of the PDF of \(\underline{{\bar{x}}}\). We therefore show how \(s_{\alpha }^{\mathrm{{II}}}\) can be computed for a chosen value of \(\alpha \) by means of Monte Carlo simulation and density quantile approach. We first define the following random variable

Therefore \(s_{\alpha }^{\mathrm{{II}}}\) should satisfy

implying that \(s_{\alpha }^{\mathrm{{II}}}\) is the \(\alpha \) quantile of \({\underline{\nu }}\). Therefore, \(s_{\alpha }^{\mathrm{{II}}}\) can be approximated as \(\alpha \) sample quantile from a set of independent samples from the distribution of \({\underline{\nu }}\). Let \({\bar{x}}^{(s)} \in {\mathbb {R}}^{n}\), \(s=1,\ldots , N\), be sample vectors independently drawn from the distribution \(f_{\underline{{\bar{x}}}}(\xi |{\mathcal {H}}_{0},x)\). Then, \(\nu ^{(s)}= f_{\underline{{\bar{x}}}}({{\bar{x}}}^{(s)}|{\mathcal {H}}_{0},x)\), \(s=1, \ldots , N\), are independent samples from the distribution of \({\underline{\nu }}\) under \({\mathcal {H}}_{0}\). After sorting the samples in an ascending order, the first sample with an index larger than \(\alpha N\) gives an approximation of the \(\alpha \) quantile of \({\underline{\nu }}\), i.e., \(s_{\alpha }^{\mathrm{{II}}}\).

To compute the samples of \({\underline{\nu }}\) in (44), one needs to compute the PDF of \(\underline{{\bar{x}}}\), which, using (7) to (9), can be written as

where \(L_{0}=0\) and the expectation in the last equality is with respect to \(f_{{\underline{t}}}(\tau |{\mathcal {H}}_{0})\). Given the last equality, we compute \(f_{\bar{{\underline{x}}}}(\xi |{\mathcal {H}}_{0},x)\) using a Monte Carlo simulation. Let \(t^{(l)}\in {\mathbb {R}}^{r}\), for \(l= 1,\ldots ,M\), be sample vectors independently drawn from the distribution \(f_{{\underline{t}}}(\tau |{\mathcal {H}}_{0})\). Then, for sufficiently large M, determined by the requirements of the application at hand, the value of \(f_{\bar{{\underline{x}}}}(\xi |{\mathcal {H}}_{0},x)\) can be well approximated by

The procedure of finding an approximation of \(s_{\alpha }^{\mathrm{{II}}}\) for a given \(\alpha \), explained above, can be summarized in the following steps.

-

1.

Generate N independent samples \({\bar{x}}^{(1)},{\bar{x}}^{(2)},\ldots ,{\bar{x}}^{(N)}\) from the distribution \(f_{\underline{{\bar{x}}}}(\xi |{\mathcal {H}}_{0},x)\) by repeating the three simulation steps explained in Sect. 6.1, N times, taking any n-vector as choice for x.

-

2.

Generate M independent samples \(t^{(1)},t^{(2)},\ldots ,t^{(M)}\) from the distribution \(f_{{\underline{t}}}(\tau |{\mathcal {H}}_{0})\) by repeating the first two simulation steps explained in Sect. 6.1, M times.

-

3.

For each sample \({\bar{x}}^{(s)}\), for \(s=1, \ldots , N\), compute the corresponding sample \(\nu ^{(s)}\) as

$$\begin{aligned} \nu ^{(s)}=\dfrac{\sum \nolimits _{l=1}^{M} \left( \sum \nolimits _{j=0}^{\kappa }f_{\hat{{\underline{x}}}_{0}}\left( {\bar{x}}^{(s)}+L_{j}t^{(l)}\big |{\mathcal {H}}_{0},x\right) \,p_{j}\left( t^{(l)}\right) \right) }{M}\nonumber \\ \end{aligned}$$(48) -

4.

Sort the samples \(\nu ^{(1)},\nu ^{(2)},\ldots ,\nu ^{(N)}\) in an ascending order. Let \(\nu _{1}\le \nu _{2}\le ,\ldots ,\le \nu _{N}\) be the sorted values of the samples \(\nu ^{(1)},\nu ^{(2)},\ldots ,\nu ^{(N)}\).

-

5.

An approximation of \(s_{\alpha }^{\mathrm{{II}}}\) is then given by

$$\begin{aligned} {\hat{s}}_{\alpha }^{\mathrm{II}}=\nu _{\lceil N\alpha \rceil } \end{aligned}$$(49)

7 Summary and conclusions

In this contribution, a critical appraisal was provided on the computation and evaluation of confidence regions and standard ellipses. We made the case that in the majority of our geodetic applications the customary confidence regions do not truly reflect the confidence one can have in one’s produced estimators.

We have shown that the common practice of combining parameter estimation with hypothesis testing, necessitates that the uncertainties of both estimation and testing are taken into account when constructing and evaluating confidence regions. We provided theory and computational procedures on how to compute and construct such confidence regions and associated confidence levels. In doing so, we made use of the concept of DIA-estimator and focused on the designing phase prior to when the actual measurements are collected, thereby naturally assuming that the null hypothesis \({\mathcal {H}}_{0}\) is valid.

To construct the confidence region for the parameters of interest, two different approaches were discussed: Approach I in which the region’s shape is user-fixed and only its size is determined by the estimator’s distribution; and Approach II in which both the size and shape are simultaneously determined by the estimator’s distribution. It was hereby emphasized irrespective of which approach is taken, that the properties of the confidence region are determined by the probabilistic properties of the estimator.

In using Approach I, we considered the geodetic-common choice of ellipsoidal region and then determined its size by the non-normal PDF of the DIA-estimator. We proved and demonstrated that if one neglects the testing contribution and assumes the estimator resulting from combined estimation \(+\) testing to be normally distributed, that the resulting confidence region of Approach I would actually cover a smaller probability than one presumes with the preset confidence level. We also provided an easy-to-compute upper bound for the difference between the actual and preset confidence level.

To form the confidence region of Approach II, we used the concept of highest density regions and defined the confidence region as the one which has the smallest volume among all the regions which cover the same probability. The shape and size of such confidence region are then driven by the contours of the DIA-estimator’s PDF. It was demonstrated that the confidence region of Approach II can significantly differ in shape from the ellipsoidal confidence region which is conventionally used. It can be a non-convex and even disconnected region depending on the preset confidence level. However, in the very special case when one opts for not including identification, but have the testing procedure be confined to detection only, there would be no difference between the customary confidence region and those of Approach I and Approach II.

We presented the numerical algorithms for computing the confidence level of the DIA-estimator corresponding with any translational-invariant confidence region, as well as the numerical algorithms for constructing confidence regions based on both Approach I and Approach II. These are summarized in Fig. 9. Although Approach II confidence regions are more informative than those of Approach I, they demand higher computational complexities. The trade-offs, based on informativity and complexity, in selecting these approaches are determined by the application at hand.

We remark that although our analyses were presented assuming a normal distribution for the measurements, our confidence region/level computation algorithms in Sect. 6 and Fig. 9 can be applied for any arbitrary distribution of the measurements. In that case, for Approach II, use needs to be made of a more generalized form of (47) as

Finally, in this study, attention was focused on the computation of truthful confidence regions/levels under the null hypothesis \({\mathcal {H}}_{0}\). A similar study under alternative hypotheses, is the topic of future works.

Data availability

No data are used for this study.

References

Baarda W (1967) Statistical concepts in geodesy. In: Netherlands Geodetic Commission. Publications on geodesy. New series, vol 2, no 4

Baarda W (1968) A testing procedure for use in geodetic networks. In: Netherlands Geodetic Commission. Publications on geodesy. New series, vol 2, no 5

Baarda W (1973) S-transformations and criterion matrices. In: Netherlands Geodetic Commission, vol 5, no 1

Cambanis S, Huang S, Simons G (1981) On the theory of elliptically contoured distributions. J Multivar Anal 11(3):368–385

Casella G, Berger RL (2001) Statistical inference, 2nd edn. Cengage Learning

Devoti R, Esposito A, Pietrantonio G, Pisani AR, Riguzzi F (2011) Evidence of large-scale deformation patterns from GPS data in the Italian subduction boundary. Earth Planet Sci Lett 311:230–241

Dheenathayalan P, Small D, Schubert A, Hanssen RF (2016) High-precision positioning of radar scatterers. J Geodesy 90(5):403–422

Durdag UM, Hekimoglu S, Erdogan B (2018) Reliability of models in kinematic deformation analysis. J Surv Eng 144(3):04018,004

Gillissen I, Elema I (1996) Test results of DIA: a real-time adaptive integrity monitoring procedure, used in an integrated naviation system. Int Hydrogr Rev 73(1):75–100

Gundlich B, Koch KR (2002) Confidence regions for GPS baselines by Bayesian statistics. J Geodesy 76(1):55–62

Hewitson S, Wang J (2006) GNSS receiver autonomous integrity monitoring (RAIM) performance analysis. GPS Solut 10(3):155–170

Hofmann-Wellenhof B, Legat K, Wieser M (2003) Navigation. Principles of positioning and guidance. Springer, New York

Hoover W (1984) Algorithms for confidence circles and ellipses (NOAA Technical Report NOS 107 C&GS 3). National Oceanic and Atmospheric Administration, Rockville, MD

Huang S, Li G, Wang X, Zhang W (2019) Geodetic network design and data processing for Hong Kong–Zhuhai–Macau link immersed tunnel. Surv Rev 51(365):114–122

Hyndman RJ (1996) Computing and graphing highest density regions. Am Stat 50(2):120–126

Jonkman N, De Jong K (2000) Integrity monitoring of IGEX-98 data, part II: cycle slip and outlier detection. GPS Solut 3(4):24–34

Khodabandeh A, Teunissen PJG (2016) Single-epoch GNSS array integrity: An analytical study. In: Sneeuw N, Novák P, Crespi M, Sansò F (eds) VIII Hotine-Marussi symposium on mathematical geodesy: proceedings of the symposium in Rome, 17–21 June, 2013. Springer, pp 263–272

Koch KR (1999) Parameter estimation and hypothesis testing in linear models. Springer, New York

Kok J (1984) Statistical analysis of deformation problems using Baarda’s testing procedure. In: “Forty Years of Thought” Anniversary Volume on the Occasion of Prof Baarda’s 65th Birthday, Delft, vol 2, pp 470–488

Kösters A, Van der Marel H (1990) Statistical testing and quality analysis of 3-D networks. In: Global positioning system: an overview. Springer, pp 282–289

Kuusniemi H, Lachapelle G, Takala JH (2004) Position and velocity reliability testing in degraded GPS signal environments. GPS Solut 8(4):226–237

Lehmann R, Lösler M (2017) Congruence analysis of geodetic networks-hypothesis tests versus model selection by information criteria. J Appl Geod 11(4):271–283

Lösler M, Lehmann R, Neitzel F, Eschelbach C (2021) Bias in least-squares adjustment of implicit functional models. Surv Rev 53(378):223–234

Niemeier W, Tengen D (2017) Uncertainty assessment in geodetic network adjustment by combining gum and Monte-Carlo-simulations. J Appl Geod 11(2):67–76

Niemeier W, Tengen D (2020) Stochastic properties of confidence ellipsoids after least squares adjustment, derived from GUM analysis and Monte Carlo simulations. Mathematics 8(8):1318

Nowel K (2020) Specification of deformation congruence models using combinatorial iterative DIA testing procedure. J Geod 94(12):1–23

Perfetti N (2006) Detection of station coordinate discontinuities within the Italian GPS fiducial network. J Geod 80(7):381–396

Schervish MJ (1995) Theory of statistics, 1st edn. Springer, New York

Seemkooei A (2001) Comparison of reliability and geometrical strength criteria in geodetic networks. J Geod 75(4):227–233

Šidák Z (1967) Rectangular confidence regions for the means of multivariate normal distributions. J Am Stat Assoc 62(318):626–633

Teunissen P (1985) Quality control in geodetic networks. In: Grafarend EW, Sansó F (eds) Optimization and design of geodetic networks. Springer, New York, pp 526–547

Teunissen P (1990) Quality control in integrated navigation systems. IEEE Aerosp Electron Syst Mag 5(7):35–41

Teunissen PJG (1989) Estimation in nonlinear models, II Hotine-Marussi symposium on mathematical geodesy, Pisa, Italy, June 5–8

Teunissen PJG (2003) adjustment theory: an introduction. Delft University Press, Series on Mathematical Geodesy and Positioning

Teunissen PJG (2006) Testing theory: an introduction, 2nd edn. Delft University Press, Series on Mathematical Geodesy and Positioning

Teunissen PJG (2007) Least-squares prediction in linear models with integer unknowns. J Geod 81:565–579

Teunissen PJG (2017) Batch and recursive model validation. In: Teunissen PJG, Montenbruck O (eds) Springer handbook of global navigation satellite systems, chap 24, pp 727–757

Teunissen PJG (2018) Distributional theory for the DIA method. J Geod 92(1):59–80. https://doi.org/10.1007/s00190-017-1045-7

Verhoef HME, De Heus HM (1995) On the estimation of polynomial breakpoints in the subsidence of the Groningen gasfield. Surv Rev 33(255):17–30

Wieser A (2004) Reliability checking for GNSS baseline and network processing. GPS Solut 8(3):55–66

Yang L, Li Y, Wu Y, Rizos C (2014) An enhanced MEMS-INS/GNSS integrated system with fault detection and exclusion capability for land vehicle navigation in urban areas. GPS Solut 18(4):593–603

Yang L, Shen Y, Li B, Rizos C (2021) Simplified algebraic estimation for the quality control of DIA estimator. J Geod 95(1):1–15

Yavaşoğlu HH, Kalkan Y, Tiryakioğlu I, Yigit C, Özbey V, Alkan MN, Bilgi S, Alkan RM (2018) Monitoring the deformation and strain analysis on the Ataturk Dam, Turkey. Geomat Nat Haz Risk 9(1):94–107

Zaminpardaz S, Teunissen PJ, Nadarajah N (2017) Single-frequency L5 attitude determination from IRNSS/NavIC and GPS: a single-and dual-system analysis. J Geod 91(12):1415–1433

Acknowledgements

We thank Sebastian Ciuban for his contribution to Fig. 1

Open Access

This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Author information

Authors and Affiliations

Contributions

S.Z. and P.J.G.T. contributed to the design, implementation of the research, analysis of the results and the writing of the manuscript.

Corresponding author

Appendix

Appendix

Proof of Lemma 1

Given that

we show that the above integral remains invariant for any change in x. If we replace x by \(x'=x+\gamma \) for an arbitrary \(\gamma \in {\mathbb {R}}^{n}\), then we have

showing that the probability in (51) is independent of x, thus proving Lemma 1. In (52), in the first equality use is made of condition (i), the second equality is obtained by change of variable \(\xi \rightarrow \eta =\xi -\gamma \), and in the last equality use is made of condition (ii). \(\square \)

Proof of Lemma 2

Combining (7) to (9) and defining \(L_{0}=0\), one can write, for any arbitrary vector \(\gamma \in {\mathbb {R}}^{n}\),

which proves Lemma 2. Note, the second equality is a consequence of the PDF of \(\underline{{\hat{x}}}_{0}\) under \({\mathcal {H}}_{0}\) being translational invariant.

\(\square \)

Proof of Theorem 1

The probability \({\mathsf {P}}(\bar{{\underline{x}}}\in {\mathcal {S}}_{\alpha }^{\mathrm{{E}}}(x)|{\mathcal {H}}_{0})\) can be written, using (7) to (9), as

where \({\underline{\chi }}^{2}_{0}\sim \chi ^{2}(n,0)\) and \({\underline{\chi }}^{2}_{i}(\tau )\sim \chi ^{2}(n,\Vert L_{i}\tau \Vert ^{2}_{Q_{{\hat{x}}_{0}{\hat{x}}_{0}}})\) for \(i=1,\ldots ,\kappa \). Since \({\mathsf {P}}({\underline{\chi }}^{2}_{i}(\tau )\le \chi ^{2}_{1-\alpha }(n,0))\) is always smaller than or equal to \({\mathsf {P}}({\underline{\chi }}^{2}_{0}\le \chi ^{2}_{1-\alpha }(n,0))\), then the term between square brackets in the last equality will always be a non-positive value, from which (20) follows. \(\square \)

Proof of Theorem 2

Taking the integral of both sides of (10) over \({\mathcal {S}}_{\alpha }^{\mathrm{{E}}}({x})\) gives

Using the above lower bound and that \({\mathsf {P}}_{\mathrm{FA}}=1-{\mathsf {P}}_{\mathrm{CA}}\), we arrive at (22). \(\square \)

Proof of Theorem 3

‘if’ part: with \(f_{\underline{{\bar{x}}}}(\xi |{\mathcal {H}}_{0},x)\) being translational invariant, we have, for any \(\gamma ,x,\xi \in {\mathbb {R}}^{n}\),

from which we can conclude that \({\mathcal {S}}_{\alpha }^{\mathrm{{II}}}(x+\gamma )={\mathcal {S}}_{\alpha }^{\mathrm{{II}}}(x)+\gamma \) for any \(\gamma ,x\in {\mathbb {R}}^{n}\). ‘only if’ part: let \({\mathcal {S}}_{\alpha }^{\mathrm{{II}}}(x+\gamma )={\mathcal {S}}_{\alpha }^{\mathrm{{II}}}(x)+\gamma \) be valid for any \(\gamma ,x\in {\mathbb {R}}^{n}\) and \(\alpha \ge 0\). Then, we have

That (57) holds for all \(s_{\alpha }^{\mathrm{{II}}}\) proves that the PDF \(f_{\underline{{\bar{x}}}}(\xi |{\mathcal {H}}_{0},x)\) is translational invariant. \(\square \)

Rights and permissions